Using OpenAI to Generate Vector Embeddings

In a recent post, I demonstrated how you could use Cohere to generate Vector Embeddings. Despite my having upgraded to a Production API key (i.e. a paid one), the experience, and the time taken to process a relatively small dataset, was underwhelming. In short, Cohere wasn't up to the job of generating Vector Embeddings for the full dataset; it struggled even with a few hundred records of it.

This post explores using OpenAI to perform the same task.

The first thing to do is install the OpenAI Python library:

```bash
pip install openai
```

Next, you need to create an account with OpenAI, which gives you an API key. OpenAI gives you greater access to, and usage of, their models than Cohere does. Their response times are quicker, and you can make a lot of requests before any rate limiting kicks in.

Using the same Wine Reviews dataset, the code below processes the data in the same (or a very similar) way, this time using an OpenAI embedding model. OpenAI has three text embedding models: text-embedding-ada-002 and text-embedding-3-small create vector embeddings with 1536 dimensions, while the large model, text-embedding-3-large, creates embeddings with 3072 dimensions. However, with the newer text-embedding-3 models you can request a smaller number of dimensions, which gives you flexibility based on your dataset and the problem being addressed. It also lets you be more careful with the space allocated to the vector embeddings and to the vector indexes created on them.
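If you already have full-length embeddings, OpenAI's embeddings guide describes shortening them after the fact by dropping values from the end and then re-normalising the vector to unit length (passing `dimensions` when creating the embedding is the recommended route). The sketch below illustrates that truncation, along with a back-of-the-envelope storage estimate per vector. The helper names `shorten_embedding` and `vector_bytes` are hypothetical, the input values are made-up toy numbers rather than a real embedding, and the 4-bytes-per-dimension figure assumes the vectors are stored as FLOAT32.

```python
import numpy as np


def shorten_embedding(embedding, dims):
    """Keep the first `dims` values of an embedding and rescale it to unit length."""
    v = np.asarray(embedding, dtype=np.float64)[:dims]
    norm = np.linalg.norm(v)
    # An all-zero slice cannot be normalised, so return it unchanged
    return v if norm == 0 else v / norm


def vector_bytes(dims, bytes_per_value=4):
    """Rough storage for one vector, assuming FLOAT32 (4 bytes) per dimension."""
    return dims * bytes_per_value


toy = [3.0, 4.0, 12.0, 84.0]          # made-up values, not a real embedding
print(shorten_embedding(toy, 2))      # [0.6 0.8]
print(vector_bytes(3072), vector_bytes(100))  # 12288 400
```

On those assumptions, a 100-dimension vector needs roughly 400 bytes against about 12 KB for the full 3072 dimensions, before any index overhead.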

One other piece of setup you need for the OpenAI API is a local environment variable, OPENAI_API_KEY, holding your API key. You can set this at the terminal level, or define it in your Python code, as the script below does.
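If you would rather set the variable at the terminal level (for example with `export OPENAI_API_KEY=...` in a bash shell) than hard-code the key in the script, a small guard like the one below lets the script fail early with a clear message when the variable is missing. `require_env` is a hypothetical helper, not part of the OpenAI library; the `openai.OpenAI()` client itself reads `OPENAI_API_KEY` from the environment by default.

```python
import os


def require_env(var_name="OPENAI_API_KEY"):
    """Return the value of an environment variable, or fail early with a clear message."""
    value = os.environ.get(var_name)
    if not value:
        raise RuntimeError(
            f"{var_name} is not set; export it in your terminal before running the script"
        )
    return value
```

Calling `require_env()` just before `client = openai.OpenAI()` would then take the place of the line that assigns the key inside the script.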

```python
import os
import time

import openai
import pandas as pd

print_every = 200
num_records = 10000

# Define your OpenAI API key
os.environ["OPENAI_API_KEY"] = "... Your OpenAI API Key ..."

client = openai.OpenAI()

# Load the file into a pandas DataFrame
data_file = '.../winemag-data-130k-v2.csv'
df = pd.read_csv(data_file)
print("Input file :", data_file)

# Define the output file name (same path, with a .openai extension)
v_file = os.path.splitext(data_file)[0] + '.openai'
print(v_file)


def sql_str(value):
    # Wrap a value in single quotes, doubling any embedded quotes (e.g. "it's")
    # so that the generated INSERT statement is valid SQL
    return "'" + str(value).replace("'", "''") + "'"


def sql_num(value):
    # Missing numbers (e.g. a blank price) are NaN in pandas; write them as NULL
    return "NULL" if pd.isna(value) else str(value)


# Open the file for writing (over-writes any previous file)
with open(v_file, "w") as f:
    f.write("delete from WINE_REVIEWS_130K;\n")

    for index, row in df.head(num_records).iterrows():
        # Generate a 100-dimension embedding for the review text
        response = client.embeddings.create(
            model="text-embedding-3-large",
            input=row['description'],
            dimensions=100
        )
        v_embedding = str(response.data[0].embedding)

        tab_insert = "INSERT into WINE_REVIEWS_130K VALUES (" + str(row["Seq"]) + "," \
            + sql_str(row["country"]) + "," \
            + sql_str(row["description"]) + "," \
            + sql_str(row["designation"]) + "," \
            + sql_num(row["points"]) + "," \
            + sql_num(row["price"]) + "," \
            + sql_str(row["province"]) + "," \
            + sql_str(row["region_1"]) + "," \
            + sql_str(row["region_2"]) + "," \
            + sql_str(row["taster_name"]) + "," \
            + sql_str(row["taster_twitter_handle"]) + "," \
            + sql_str(row["title"]) + "," \
            + sql_str(row["variety"]) + "," \
            + sql_str(row["winery"]) + "," \
            + sql_str(v_embedding) + ");\n"
        # print(tab_insert)
        f.write(tab_insert)

        if index % print_every == 0:
            print(f'Processed {index} vectors ', time.strftime("%H:%M:%S", time.localtime()))

    # Commit once all the rows have been inserted
    f.write("commit;\n")

print(f"Finished writing file with Vector data [{index+1} vectors]", time.strftime("%H:%M:%S", time.localtime()))
```
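The script makes one API call per review. The embeddings endpoint also accepts a list of strings as `input`, so one way to cut the number of requests (and the pressure on rate limits) is to embed the descriptions in batches. The sketch below assumes the same `client` object as above; `batched` and `embed_batch` are hypothetical helpers, and the batch size is left to the caller.

```python
from itertools import islice


def batched(items, size):
    """Yield successive lists of at most `size` items."""
    it = iter(items)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def embed_batch(client, texts, model="text-embedding-3-large", dimensions=100):
    """Embed a list of texts in one request and return the vectors in input order."""
    response = client.embeddings.create(model=model, input=texts, dimensions=dimensions)
    # Each result carries the position of its input text, so sort on it to be safe
    return [d.embedding for d in sorted(response.data, key=lambda d: d.index)]
```

For example, `for chunk in batched(df.head(num_records)['description'].tolist(), 100): vectors = embed_batch(client, chunk)` would make one request per 100 reviews; the value 100 is an arbitrary choice, and each vector would then be paired back with its row when writing the INSERT statements.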

That's it. You can now run the generated script file (the .openai file) to populate the table in your database.

I mentioned my previous post about using Cohere for this task above. Yes, there were some issues when using Cohere, but with OpenAI everything ran very smoothly and was much quicker too. I did encounter some rate limiting with OpenAI when I tried to process more than ten thousand records, but the code did eventually complete.
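If rate limiting does kick in, a common pattern is to retry the failed call after an exponentially growing wait. The sketch below is a generic helper (`call_with_backoff` is hypothetical, and the delays and retry count are arbitrary defaults); with the OpenAI library you would pass `openai.RateLimitError` as the exception type to retry on.

```python
import random
import time


def call_with_backoff(fn, retry_on, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call fn(); on a retryable error, wait with exponential backoff and try again."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except retry_on:
            if attempt == max_retries:
                raise  # give up after the last allowed retry
            # Wait roughly 1s, 2s, 4s, ... plus a little random jitter
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
```

In the script above, the embedding call could be wrapped as `call_with_backoff(lambda: client.embeddings.create(model="text-embedding-3-large", input=row['description'], dimensions=100), retry_on=openai.RateLimitError)`. The v1 Python client also retries some failed requests on its own, which can be tuned with the `max_retries` argument to `openai.OpenAI()`.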

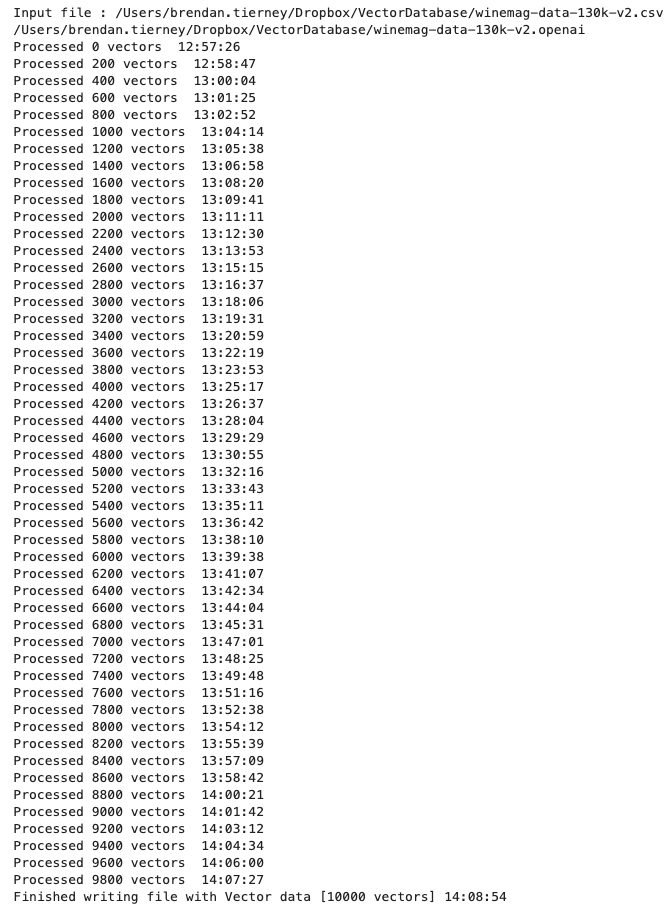

Here is an example of the output from one of my tests.

This entry was posted in Vector Database, Vector Embeddings and tagged OpenAI, Vector Embedding.