Vector Indexes

Vector Databases – Part 8 – Creating Vector Indexes

Building on the previous posts, this post explores how to create Vector Indexes and the different types available. In the next post I’ll demonstrate how to explore wine reviews using the Vector Embeddings and the Vector Indexes.

Before we start working with Vector Indexes, we need to allocate some memory within the Database where these Vector Indexes will be located. To do this we need to log into the container database and change the relevant parameter. I’m using a 23.5ai VM. On the VM I run the following:

    sqlplus system/oracle@free

This will connect you to the container DB. Now run the following to change the memory allocation. Be careful not to set this too high, particularly on the VM. Probably the maximum value would be 512M, but in my case I’ve set it to a small value.

    alter system set vector_memory_size = 200M scope=spfile;

You need to bounce the database. You can do this using SQL commands. If you’re not sure how to do that, just restart the VM.

After the restart, log into your schema and run the following to see if the parameter has been set correctly.

    show parameter vector_memory_size;

    NAME               TYPE        VALUE
    ------------------ ----------- -----
    vector_memory_size big integer 208M

We can see from the returned results that the memory has been allocated (we asked for 200M and the database reports 208M, slightly more than requested). If you get zero displayed, then something went wrong. Typically, this is because you didn’t run the command at the container database level.

You only need to set this once for the database.

There are two types of Vector Indexes:

- Inverted File Flat Vector Index (IVF). The Inverted File Flat (IVF) index is the only type of Neighbor Partition vector index supported. IVF Flat (or simply IVF) is a partition-based index which balances high search quality with reasonable speed. This index is typically disk based.

- In-Memory Neighbor Graph Vector Index. Hierarchical Navigable Small World (HNSW) is the only type of In-Memory Neighbor Graph vector index supported. HNSW graphs are very efficient indexes for vector approximate similarity search. HNSW graphs are structured using principles from small world networks along with layered hierarchical organization. As the name suggests these are located in-memory. See the step above for allocating Vector Memory space for these indexes.

Let’s have a look at creating each of these.

    CREATE VECTOR INDEX wine_desc_ivf_idx
    ON wine_reviews_130k (embedding)
    ORGANIZATION NEIGHBOR PARTITIONS
    DISTANCE COSINE
    WITH TARGET ACCURACY 90 PARAMETERS (type IVF, neighbor partitions 10);

As with your typical create index, you define the table and the column. The column must have the data type of VECTOR. We can then say what the distance measure should be, the target accuracy and any additional parameters required. The PARAMETERS clause is optional; if it is omitted, the defaults will be used instead.
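To give a feel for what the COSINE distance measure captures, here is a minimal Python sketch. It assumes the common definition of cosine distance as one minus the cosine similarity; the function name and the tiny two-dimensional vectors are made up for illustration and have nothing to do with how the database computes it internally.

```python
import math

def cosine_distance(a, b):
    """Cosine distance = 1 - cosine similarity (assumed definition)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

# Illustrative vectors only: real embeddings have hundreds of dimensions.
same_direction = cosine_distance([1.0, 2.0], [2.0, 4.0])   # close to 0
orthogonal = cosine_distance([1.0, 0.0], [0.0, 1.0])       # close to 1
opposite = cosine_distance([1.0, 0.0], [-1.0, 0.0])        # close to 2

print(same_direction, orthogonal, opposite)
```

Note that only the direction of the vectors matters here, not their length, which is one reason this measure is popular for text embeddings.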

For the in-memory (HNSW) index we have:

    CREATE VECTOR INDEX wine_desc_idx ON wine_reviews_130k (embedding)
    ORGANIZATION INMEMORY NEIGHBOR GRAPH
    DISTANCE COSINE
    WITH TARGET ACCURACY 95;

You can do some testing or evaluating to determine the accuracy of the Vector Index. You’ll need a test string which has been converted into a Vector by the same embedding model used on the original data. See my previous posts for some examples of how to do this.

    set serveroutput on

    declare
      q_v    VECTOR;
      report varchar2(128);
    begin
      -- :query_vector is a bind variable holding the test vector as text
      q_v := to_vector(:query_vector);
      report := dbms_vector.index_accuracy_query(
                  OWNER_NAME      => 'VECTORAI',
                  INDEX_NAME      => 'WINE_DESC_IDX',  -- the HNSW index created above
                  qv              => q_v,
                  top_K           => 10,
                  target_accuracy => 90 );
      dbms_output.put_line(report);
    end;
    /
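One way to think about index accuracy is as recall: of the true top-K nearest vectors found by an exact search, what percentage did the approximate index search also return? The Python sketch below illustrates that idea only. It is an assumption about how to interpret the number, not a description of what DBMS_VECTOR does internally, and the row IDs are invented.

```python
def recall_at_k(exact_ids, approx_ids):
    """Percentage of the exact top-K results that the approximate search also found."""
    exact = set(exact_ids)
    hits = sum(1 for i in approx_ids if i in exact)
    return 100.0 * hits / len(exact_ids)

# Hypothetical row IDs: the approximate search missed one of the true top 10.
exact_top10 = [3, 8, 15, 21, 34, 40, 47, 52, 60, 77]
approx_top10 = [3, 8, 15, 21, 34, 40, 47, 52, 60, 91]

accuracy = recall_at_k(exact_top10, approx_top10)
print(accuracy)  # 9 of 10 matched, so 90.0
```

Seen this way, a target accuracy of 90 for a top-10 search roughly means you expect about 9 of the true 10 nearest neighbours back.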

Vector Databases – Part 4 – Vector Indexes

In this post on Vector Databases, I’ll explore some of the commonly used indexing techniques available in Databases. I’ll also explore the Vector Indexes available in Oracle 23ai. Be sure to check that section towards the end of the post, where I’ll also include links to other articles in this series.

As with most data in a Database, indexes are used for fast access to data. They create an organised structure (typically a B+ tree) for storing the location of certain values within a table. When searching for data, if an index exists on that data, the index will be used for matching and the location of the records is used to quickly retrieve the data.

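Similarly, for Vector searches, we need some way to search through thousands or millions of vectors to find those that best match our search criteria (vector search). For vector search, there are many more calculations to perform, as every comparison is a distance calculation across all the dimensions of the vectors. We want to avoid an MxN search space, where each of M query vectors has to be compared with all N stored vectors.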
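To see why the exhaustive approach does not scale, here is a minimal Python sketch of an exact (brute-force) search that counts the distance calculations it performs. The function name, the Euclidean distance and the tiny data set are illustrative assumptions.

```python
import math

def exact_search(queries, stored, k):
    """Compare every query with every stored vector; return top-k ids and the work done."""
    comparisons = 0
    results = []
    for q in queries:
        scored = []
        for idx, v in enumerate(stored):
            scored.append((math.dist(q, v), idx))  # one distance calculation
            comparisons += 1
        scored.sort()
        results.append([idx for _, idx in scored[:k]])
    return results, comparisons

# Made-up data: N = 4 stored vectors, M = 2 query vectors.
stored = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
queries = [[1.0, 0.05], [0.1, 1.0]]

results, comparisons = exact_search(queries, stored, k=2)
print(results, comparisons)  # comparisons is M x N = 8
```

With millions of stored vectors and hundreds of dimensions per vector, that M x N count is what a vector index tries to cut down.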

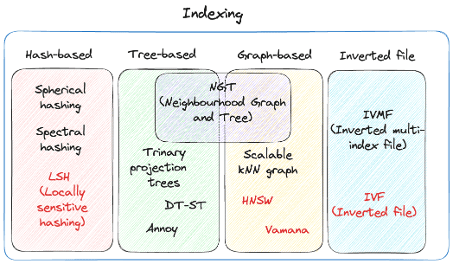

Given the nature of Vectors and the type of search performed on these, databases need to have different types of indexes. Common Vector Index types include Hash-based, Tree-based, Graph-based and Inverted-file. Let’s have a look at each of these.

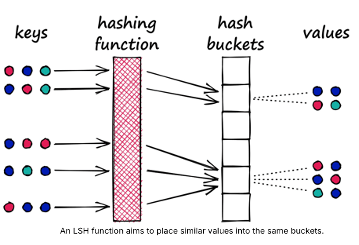

Hash-based Vector Indexes

Locality-Sensitive Hashing (LSH) uses hash functions to bucket similar vectors into a hash table. The query vector is hashed using the same hash function and is then compared only with the vectors already present in the matching bucket. This method is much faster than doing an exhaustive search across the entire dataset because there are far fewer vectors in each bucket than in the whole vector space. While this technique is quite fast, the downside is that it is not very accurate. LSH is an approximate method, so a better hash function will result in a better approximation, but the result will not be the exact answer.
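As a rough illustration, the Python sketch below uses random hyperplanes as the hash function, which is one common LSH scheme (an assumption here, as the text above does not name a specific one). Each vector gets one bit per hyperplane depending on which side it falls, and vectors with the same bit string share a bucket. All names and data are made up.

```python
import random
from collections import defaultdict

def make_hyperplanes(num_planes, dim, seed=42):
    """Random hyperplanes through the origin; each contributes one bit of the hash."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_planes)]

def lsh_hash(vector, hyperplanes):
    """Bit string recording which side of each hyperplane the vector falls on."""
    bits = []
    for plane in hyperplanes:
        dot = sum(p * v for p, v in zip(plane, vector))
        bits.append("1" if dot >= 0 else "0")
    return "".join(bits)

def build_table(vectors, hyperplanes):
    """Hash table mapping bucket key -> ids of the vectors in that bucket."""
    table = defaultdict(list)
    for idx, v in enumerate(vectors):
        table[lsh_hash(v, hyperplanes)].append(idx)
    return table

def candidates_for(table, hyperplanes, query):
    """Only vectors in the query's bucket are candidates for comparison."""
    return table.get(lsh_hash(query, hyperplanes), [])

vectors = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [-0.7, 0.6, 0.2], [0.0, -0.9, 0.4]]
planes = make_hyperplanes(num_planes=4, dim=3)
table = build_table(vectors, planes)

# A query pointing in the same direction as vector 0 lands in the same bucket.
query = [2 * x for x in vectors[0]]
print(candidates_for(table, planes, query))
```

Nearby vectors can still end up in different buckets, which is where the loss of accuracy mentioned above comes from. Real implementations usually keep several hash tables to reduce that risk.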

Tree-based Vector Indexes

Tree-based indexing allows for fast searches by using a data structure such as a binary tree. The tree gets created in a way that similar vectors are grouped in the same subtree. Annoy (Approximate Nearest Neighbors Oh Yeah) uses a forest of binary trees to perform approximate nearest neighbors search. It performs well with high-dimension data where doing an exact nearest neighbors search can be expensive. The downside of using this method is that it can take a significant amount of time to build the index. Whenever a new data point is received, the indices cannot be restructured on the fly. The entire index has to be rebuilt from scratch.
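The forest-of-binary-trees idea can be sketched in a few lines of Python. This is a simplified illustration under my own assumptions (each node splits its points with a hyperplane midway between two randomly picked points), not the actual implementation of any particular library, and all names are hypothetical.

```python
import math
import random

def side(point, normal, offset):
    """True if the point lies on the positive side of the splitting hyperplane."""
    return sum(n * p for n, p in zip(normal, point)) - offset >= 0

def build_tree(points, ids, rng, leaf_size=2):
    """Recursively split the ids with random hyperplanes until leaves are small."""
    if len(ids) <= leaf_size:
        return {"leaf": ids}
    a, b = rng.sample(ids, 2)
    normal = [x - y for x, y in zip(points[a], points[b])]
    midpoint = [(x + y) / 2 for x, y in zip(points[a], points[b])]
    offset = sum(n * m for n, m in zip(normal, midpoint))
    left = [i for i in ids if side(points[i], normal, offset)]
    right = [i for i in ids if not side(points[i], normal, offset)]
    if not left or not right:  # e.g. duplicate points: stop splitting
        return {"leaf": ids}
    return {"normal": normal, "offset": offset,
            "left": build_tree(points, left, rng, leaf_size),
            "right": build_tree(points, right, rng, leaf_size)}

def leaf_for(tree, query):
    """Walk down the tree, choosing a side at each split, until a leaf is reached."""
    while "leaf" not in tree:
        branch = "left" if side(query, tree["normal"], tree["offset"]) else "right"
        tree = tree[branch]
    return tree["leaf"]

def build_forest(points, num_trees=3, seed=7):
    rng = random.Random(seed)
    ids = list(range(len(points)))
    return [build_tree(points, ids, rng) for _ in range(num_trees)]

def search(forest, points, query, k):
    """Union the leaves reached in each tree, then rank those candidates exactly."""
    candidates = set()
    for tree in forest:
        candidates.update(leaf_for(tree, query))
    return sorted(candidates, key=lambda i: math.dist(points[i], query))[:k]

points = [[0.0, 0.0], [0.1, 0.2], [1.0, 1.1], [1.2, 0.9], [5.0, 5.0], [5.1, 4.8]]
forest = build_forest(points)
print(search(forest, points, [1.05, 1.0], k=2))
```

Notice that adding a new point would mean re-splitting the trees, which is the rebuild cost described above.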

Graph-based Vector Indexes

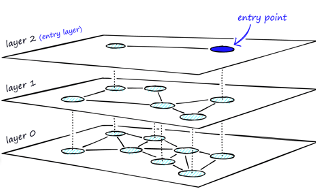

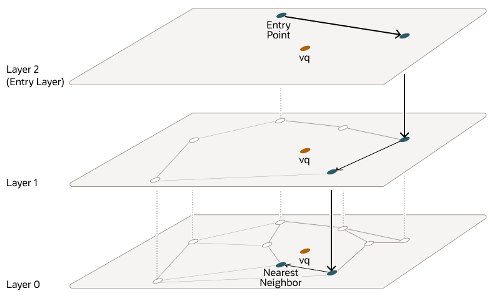

Similar to tree-based indexing, graph-based indexing groups similar data points by connecting them with an edge. Graph-based indexing is useful when trying to search for vectors in a high-dimensional space. Hierarchical Navigable Small Worlds (HNSW) creates a layered graph with the topmost layer containing the fewest points and the bottom layer containing the most points. When an input query comes in, the topmost layer is searched via ANN. The graph is traversed downward layer by layer. At each layer, the ANN algorithm is run to find the closest point to the input query. Once the bottom layer is hit, the nearest point to the input query is returned. Graph-based indexing is very efficient because it allows one to search through a high-dimensional space by narrowing down the location at each layer. However, re-indexing can be challenging because the entire graph may need to be recreated.

Inverted-File Vector Indexes

IVF (Inverted File) narrows the search space by partitioning the dataset and creating a centroid for each partition. The centroids start out as random points and are then updated via the K-Means algorithm. Once the index is populated, the ANN algorithm finds the nearest centroid to the input query and only searches through that partition. Although IVF is efficient at searching for similar points once the index is created, the process of creating the partitions and centroids can be quite slow.
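The IVF steps described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: a plain K-Means loop with a fixed number of iterations, Euclidean distance, and a search that looks in the single nearest partition only. The function names and the data are hypothetical.

```python
import math
import random

def nearest_centroid(centroids, v):
    """Index of the centroid closest to vector v."""
    return min(range(len(centroids)), key=lambda c: math.dist(centroids[c], v))

def assign(vectors, centroids):
    """Put each vector id into the partition of its nearest centroid."""
    partitions = [[] for _ in centroids]
    for idx, v in enumerate(vectors):
        partitions[nearest_centroid(centroids, v)].append(idx)
    return partitions

def build_ivf(vectors, num_partitions, iterations=10, seed=0):
    """K-Means: start from randomly chosen points, then refine the centroids."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, num_partitions)]
    dim = len(vectors[0])
    for _ in range(iterations):
        partitions = assign(vectors, centroids)
        for c, members in enumerate(partitions):
            if members:  # keep the old centroid if a partition is empty
                centroids[c] = [sum(vectors[i][d] for i in members) / len(members)
                                for d in range(dim)]
    return centroids, assign(vectors, centroids)

def ivf_search(vectors, centroids, partitions, query, k):
    """Search only the partition whose centroid is nearest to the query."""
    members = partitions[nearest_centroid(centroids, query)]
    return sorted(members, key=lambda i: math.dist(vectors[i], query))[:k]

# Made-up data: two obvious clusters.
vectors = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0],
           [10.0, 10.0], [10.0, 11.0], [11.0, 10.0]]
centroids, partitions = build_ivf(vectors, num_partitions=2)
print(ivf_search(vectors, centroids, partitions, [10.2, 10.1], k=1))
```

Only the vectors in one partition are compared with the query, which is where the speed-up comes from. A query near a partition boundary may miss a close neighbour in the adjacent partition, which is why the result is approximate.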

Oracle 23ai comes with two main types of indexes for Vectors. These are:

In-Memory – Neighbor Graph Vector Index

Hierarchical Navigable Small World (HNSW) is the only type of In-Memory Neighbor Graph vector index supported. With Navigable Small World (NSW), the idea is to build a proximity graph where each vector in the graph connects to several others based on three characteristics:

- The distance between vectors

- The maximum number of closest vector candidates considered at each step of the search during insertion (EFCONSTRUCTION)

- The maximum number of connections (NEIGHBORS) permitted per vector

Navigable Small World (NSW) graph traversal for vector search begins with a predefined entry point in the graph, accessing a cluster of closely related vectors. The search algorithm employs two key lists:

- Candidates: a dynamically updated list of vectors that we encounter while traversing the graph.

- Results: the vectors closest to the query vector found thus far.

As the search progresses, the algorithm navigates through the graph, continually refining the Candidates by exploring and evaluating vectors that might be closer than those in the Results. The process concludes once there are no vectors in the Candidates closer than the farthest in the Results.
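The search over a single graph layer, with its Candidates and Results lists, can be sketched in Python like this. It is a simplified illustration, not Oracle's implementation: the graph is a plain adjacency dictionary, the distance is Euclidean, and the `results_size` parameter (how many vectors the Results list may hold) is a hypothetical name introduced for the example.

```python
import heapq
import math

def nsw_search(graph, vectors, query, entry_point, k, results_size=None):
    """Best-first search over one graph layer using Candidates and Results lists."""
    results_size = max(results_size or k, k)

    def dist(i):
        return math.dist(vectors[i], query)

    visited = {entry_point}
    d0 = dist(entry_point)
    candidates = [(d0, entry_point)]   # min-heap: closest candidate is popped first
    results = [(-d0, entry_point)]     # max-heap (negated): farthest result on top

    while candidates:
        d, node = heapq.heappop(candidates)
        farthest = -results[0][0]
        if d > farthest:
            break  # no candidate is closer than the farthest result: stop
        for neighbour in graph[node]:
            if neighbour in visited:
                continue
            visited.add(neighbour)
            dn = dist(neighbour)
            if len(results) < results_size or dn < -results[0][0]:
                heapq.heappush(candidates, (dn, neighbour))
                heapq.heappush(results, (-dn, neighbour))
                if len(results) > results_size:
                    heapq.heappop(results)  # drop the farthest result
    ordered = sorted((-neg_d, i) for neg_d, i in results)
    return [i for _, i in ordered[:k]]

# Made-up one-dimensional data: points 0..5 on a line, each linked to its neighbours.
vectors = [[0.0], [1.0], [2.0], [3.0], [4.0], [5.0]]
graph = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}

found = nsw_search(graph, vectors, query=[4.2], entry_point=0, k=2)
print(found)  # [4, 5]
```

In HNSW the same routine would be repeated layer by layer, with the best vector found in one layer becoming the entry point for the layer below.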

Neighbor Partition Vector Index

Inverted File Flat (IVF) index is the only type of Neighbor Partition vector index supported.

Inverted File Flat Index (IVF Flat or simply IVF) is a partition-based index which balances high search quality with reasonable speed.

The IVF index is a technique designed to enhance search efficiency by narrowing the search area through the use of neighbor partitions or clusters.

Here is an example of creating such an index in Oracle 23ai.

    CREATE VECTOR INDEX galaxies_ivf_idx ON galaxies (embedding)
    ORGANIZATION NEIGHBOR PARTITIONS
    DISTANCE COSINE
    WITH TARGET ACCURACY 95;

Check out my other posts in this series on Vector Databases.
