Unlock Text Analytics with Oracle OCI Python – Part 1

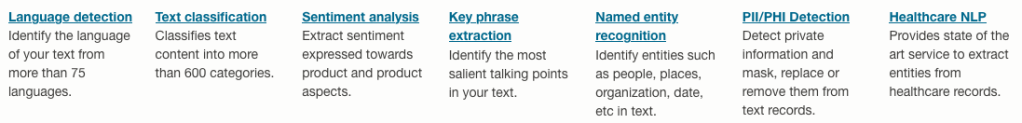

Oracle OCI has a number of features that allow you to perform Text Analytics, such as Language Detection, Text Classification, Sentiment Analysis, Key Phrase Extraction, Named Entity Recognition, Private Data Detection and Masking, and Healthcare NLP.

While some of these have particular (and in some instances limited) use cases, the following examples illustrate some of the main features using the OCI Python library. Why am I using Python to illustrate these? Because it is the language many developers use to build applications.

In this post, the Python examples below will cover the following:

- Language Detection

- Text Classification

- Sentiment Analysis

In my next post on this topic, I’ll cover:

- Key Phrase Extraction

- Named Entity Recognition

- Private information detection and masking

Before you can use any of the OCI AI Services, you need to set up a config file on your computer. This will contain the details necessary to establish a secure connection to your OCI tenancy. Check out this blog post about setting this up.
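As a rough illustration of what that config file usually holds, the sketch below checks a profile for the keys an API-key based OCI profile normally needs (user, fingerprint, key_file, tenancy and region). The values are placeholders, not real identifiers, and the helper name `missing_config_keys` is made up for this example; it uses only Python's standard `configparser`, not the OCI library.

```python
import configparser

# Keys an API-key based OCI profile normally contains.
REQUIRED_KEYS = ("user", "fingerprint", "key_file", "tenancy", "region")

# Placeholder values only: replace with the details from your own tenancy.
SAMPLE_CONFIG = """
[DEFAULT]
user=ocid1.user.oc1..exampleuserid
fingerprint=aa:bb:cc:dd:ee:ff:00:11:22:33:44:55:66:77:88:99
key_file=~/.oci/oci_api_key.pem
tenancy=ocid1.tenancy.oc1..exampletenancyid
region=eu-frankfurt-1
"""


def missing_config_keys(config_text, profile="DEFAULT"):
    """Return the required keys that are absent from the given profile."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    # DEFAULT always exists in configparser; any other profile must be declared.
    if profile != parser.default_section and not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    section = parser[profile]
    return [key for key in REQUIRED_KEYS if key not in section]


print(missing_config_keys(SAMPLE_CONFIG))
```

An empty list means the profile has every expected key. It says nothing about whether the values are valid for your tenancy.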

The following Python examples illustrate what is possible for each feature. In the first example, I include what is needed for the config file. This is not repeated in the examples that follow, but it is still needed.

Language Detection

Let’s begin with a simple example where we provide a piece of text and ask the OCI Language Service, using OCI Python, to detect the primary language of the text and display some basic information about this prediction.

import oci

from oci.config import from_file

#Read in config file - this is needed for connecting to the OCI AI Services

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', profile_name=CONFIG_PROFILE)

###

ai_language_client = oci.ai_language.AIServiceLanguageClient(config)

# French :

text_fr = "Bonjour et bienvenue dans l'analyse de texte à l'aide de ce service cloud"

# Request model for this call is DetectDominantLanguageDetails

response = ai_language_client.detect_dominant_language(

    oci.ai_language.models.DetectDominantLanguageDetails(

        text=text_fr

    )

)

print(response.data.languages[0].name)

----------

French

In this example, I’ve used a simple piece of French text (for any native French speakers, I do apologise). We can see the language was identified as French. Let’s have a closer look at what is returned by the OCI function.

print(response.data)

----------

{

"languages": [

{

"code": "fr",

"name": "French",

"score": 1.0

}

]

}

We can see from the above that the object contains the language code, the full name of the language and a score indicating how confident the function is in the prediction. When the text contains two or more languages, the function returns the primary language used.

Note: OCI Language can detect at least 113 different languages. Check out the full list here.

Let’s give it a try with a few other languages, including Irish, which is localised to certain parts of Ireland. Using the same code as above, I’ve included the same statement (Google) translated into other languages. The code loops through each text statement and detects the language.

import oci

from oci.config import from_file

###

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', profile_name=CONFIG_PROFILE)

###

ai_language_client = oci.ai_language.AIServiceLanguageClient(config)

# French :

text_fr = "Bonjour et bienvenue dans l'analyse de texte à l'aide de ce service cloud"

# German:

text_ger = "Guten Tag und willkommen zur Textanalyse mit diesem Cloud-Dienst"

# Danish

text_dan = "Goddag, og velkommen til at analysere tekst ved hjælp af denne skytjeneste"

# Italian

text_it = "Buongiorno e benvenuti all'analisi del testo tramite questo servizio cloud"

# English:

text_eng = "Good day, and welcome to analysing text using this cloud service"

# Irish

text_irl = "Lá maith, agus fáilte romhat chuig anailís a dhéanamh ar théacs ag baint úsáide as an tseirbhís scamall seo"

for text in [text_eng, text_ger, text_dan, text_it, text_irl]:

    # Request model for this call is DetectDominantLanguageDetails

    response = ai_language_client.detect_dominant_language(

        oci.ai_language.models.DetectDominantLanguageDetails(

            text=text

        )

    )

    print('[' + response.data.languages[0].name + ' ('+ str(response.data.languages[0].score) +')' + '] '+ text)

----------

[English (1.0)] Good day, and welcome to analysing text using this cloud service

[German (1.0)] Guten Tag und willkommen zur Textanalyse mit diesem Cloud-Dienst

[Danish (1.0)] Goddag, og velkommen til at analysere tekst ved hjælp af denne skytjeneste

[Italian (1.0)] Buongiorno e benvenuti all'analisi del testo tramite questo servizio cloud

[Irish (1.0)] Lá maith, agus fáilte romhat chuig anailís a dhéanamh ar théacs ag baint úsáide as an tseirbhís scamall seo

When you run this code yourself, you’ll notice how quick the response time is for each.
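If you find yourself repeating this loop, it could be wrapped in a small helper. The following is only a sketch: `detect_languages` and `fake_detect` are names invented for this illustration, and `fake_detect` stands in for the real service call so the example runs without a connection to OCI. It assumes the response has the same shape as shown above (`response.data.languages` with `name` and `score`), and the `min_score` cut-off is an arbitrary value for the example.

```python
from types import SimpleNamespace


def detect_languages(detect, texts, min_score=0.5):
    """Run `detect` on each text and return (language name, score, text) tuples.

    The name is None when nothing is detected or the top score is below min_score.
    """
    results = []
    for text in texts:
        languages = detect(text).data.languages
        if languages and languages[0].score >= min_score:
            results.append((languages[0].name, languages[0].score, text))
        else:
            results.append((None, 0.0, text))
    return results


def fake_detect(text):
    """Hypothetical stand-in for the service call, so the sketch runs offline."""
    name = "German" if "Guten Tag" in text else "English"
    language = SimpleNamespace(name=name, score=1.0)
    return SimpleNamespace(data=SimpleNamespace(languages=[language]))


samples = [
    "Good day, and welcome to analysing text using this cloud service",
    "Guten Tag und willkommen zur Textanalyse mit diesem Cloud-Dienst",
]
for name, score, text in detect_languages(fake_detect, samples):
    print(f"[{name} ({score})] {text}")
```

With the real client, `detect` would be a small function that wraps the `detect_dominant_language` call shown above and returns its response.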

Text Classification

Now that we can perform some simple language detections, we can move on to some more insightful functions. The first of these is Text Classification. Text Classification analyses the text to identify the categories it covers, along with a confidence score for each. Let’s have a look at an example using the English version of the text used above. This time, we need to perform two steps. The first is to set up and prepare the document to be sent. The second step is to perform the classification.

### Text Classification

text_document = oci.ai_language.models.TextDocument(key="Demo", text=text_eng, language_code="en")

text_class_resp = ai_language_client.batch_detect_language_text_classification(

batch_detect_language_text_classification_details=oci.ai_language.models.BatchDetectLanguageTextClassificationDetails(

documents=[text_document]

)

)

print(text_class_resp.data)

----------

{

"documents": [

{

"key": "Demo",

"language_code": "en",

"text_classification": [

{

"label": "Internet and Communications/Web Services",

"score": 1.0

}

]

}

],

"errors": []

}

We can see it has correctly identified that the text is about “Internet and Communications/Web Services”. For a second example, let’s use some text about F1. The following is taken from an article on the F1 app and refers to the recent driver issues, and we’ll use the first two paragraphs.

{

"documents": [

{

"key": "Demo",

"language_code": "en",

"text_classification": [

{

"label": "Sports and Games/Motor Sports",

"score": 1.0

}

]

}

],

"errors": []

}

We can format this response object as follows.

print(text_class_resp.data.documents[0].text_classification[0].label

+ ' [' + str(text_class_resp.data.documents[0].text_classification[0].score) + ']')

----------

Sports and Games/Motor Sports [1.0]

It is possible for multiple classifications to be returned. To handle this we need to use a couple of loops.

# Loop over every document, then over every classification found for it

for document in text_class_resp.data.documents:

    for classification in document.text_classification:

        print("Label: ", classification.label)

        print("Score: ", classification.score)

----------

Label: Sports and Games/Motor Sports

Score: 1.0

Yet again, it correctly identified the type of topic area for the text. At this point, you are probably starting to get ideas about how this can be used and in what kinds of scenarios. This list will probably get longer over time.
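One such scenario is routing documents by topic, where you may only want to act on confident classifications. The sketch below works on a plain dictionary shaped like the printed response above rather than on the live response object. The helper name `labels_above` and the 0.8 threshold are assumptions made for this illustration.

```python
def labels_above(response, min_score=0.8):
    """Return (document key, label, score) for classifications at or above min_score."""
    found = []
    for document in response.get("documents", []):
        for item in document.get("text_classification", []):
            if item["score"] >= min_score:
                found.append((document["key"], item["label"], item["score"]))
    return found


# Same shape as the response printed earlier in this section.
sample_response = {
    "documents": [
        {
            "key": "Demo",
            "language_code": "en",
            "text_classification": [
                {"label": "Sports and Games/Motor Sports", "score": 1.0}
            ],
        }
    ],
    "errors": [],
}

for key, label, score in labels_above(sample_response):
    print(f"{key}: {label} [{score}]")
```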

Sentiment Analysis

For Sentiment Analysis we are looking to gauge the mood or tone of a text. For example, we might be looking to identify opinions, appraisals, emotions, or attitudes towards a topic, person or entity. The function returns an object containing Positive, Neutral, Mixed and Negative sentiments, each with a confidence score. This feature currently supports English and Spanish.

The Sentiment Analysis function provides two ways of analysing the text:

- At a Sentence level

- At an Aspect level. This looks at certain aspects of the text, identifying parts, words or phrases and determining the sentiment for each

Let’s start with the Sentence level Sentiment Analysis with a piece of text containing two sentences with both negative and positive sentiments.

#Sentiment analysis

text = "This hotel was in poor condition and I'd recommend not staying here. There was one helpful member of staff"

text_document = oci.ai_language.models.TextDocument(key="Demo", text=text, language_code="en")

text_doc=oci.ai_language.models.BatchDetectLanguageSentimentsDetails(documents=[text_document])

text_sentiment_resp = ai_language_client.batch_detect_language_sentiments(text_doc, level=["SENTENCE"])

print(text_sentiment_resp.data)

The response object gives us:

{

"documents": [

{

"aspects": [],

"document_scores": {

"Mixed": 0.3458947,

"Negative": 0.41229093,

"Neutral": 0.0061426135,

"Positive": 0.23567174

},

"document_sentiment": "Negative",

"key": "Demo",

"language_code": "en",

"sentences": [

{

"length": 68,

"offset": 0,

"scores": {

"Mixed": 0.17541811,

"Negative": 0.82458186,

"Neutral": 0.0,

"Positive": 0.0

},

"sentiment": "Negative",

"text": "This hotel was in poor condition and I'd recommend not staying here."

},

{

"length": 37,

"offset": 69,

"scores": {

"Mixed": 0.5163713,

"Negative": 0.0,

"Neutral": 0.012285227,

"Positive": 0.4713435

},

"sentiment": "Mixed",

"text": "There was one helpful member of staff"

}

]

}

],

"errors": []

}

There are two parts to this object. The first part gives us the overall Sentiment for the text, along with the confidence scores for all possible sentiments. The second part of the object breaks the text into individual sentences and gives the Sentiment and confidence scores for each sentence. Overall, the text used is “Negative” with a confidence score of 0.41229093. When we look at the sentences, we can see the first sentence is “Negative” and the second sentence is “Mixed”.
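To make that reading of the object concrete, here is a small sketch that picks the highest-scoring sentiment for the document and for each sentence. It works on a dictionary copied from the response above, not on the live response object, and the helper names `top_sentiment` and `summarise` are invented for this illustration.

```python
def top_sentiment(scores):
    """Return the (label, score) pair with the highest confidence."""
    label = max(scores, key=scores.get)
    return label, scores[label]


def summarise(document):
    """Build one summary line for the document and one per sentence."""
    label, score = top_sentiment(document["document_scores"])
    lines = [f"Document: {label} ({score:.2f})"]
    for sentence in document["sentences"]:
        label, score = top_sentiment(sentence["scores"])
        lines.append(f"{label} ({score:.2f}): {sentence['text']}")
    return lines


# Values copied from the sentence-level response shown above.
document = {
    "document_scores": {"Mixed": 0.3458947, "Negative": 0.41229093,
                        "Neutral": 0.0061426135, "Positive": 0.23567174},
    "sentences": [
        {"scores": {"Mixed": 0.17541811, "Negative": 0.82458186,
                    "Neutral": 0.0, "Positive": 0.0},
         "text": "This hotel was in poor condition and I'd recommend not staying here."},
        {"scores": {"Mixed": 0.5163713, "Negative": 0.0,
                    "Neutral": 0.012285227, "Positive": 0.4713435},
         "text": "There was one helpful member of staff"},
    ],
}

for line in summarise(document):
    print(line)
```

In this sample the highest score agrees with the `document_sentiment` and `sentiment` labels the service returned, which is a useful sanity check when you start post-processing the scores yourself.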

When we switch to using Aspect we can see the difference in the response.

text_sentiment_resp = ai_language_client.batch_detect_language_sentiments(text_doc, level=["ASPECT"])

print(text_sentiment_resp.data)

The response object gives us:

{

"documents": [

{

"aspects": [

{

"length": 5,

"offset": 5,

"scores": {

"Mixed": 0.17299445074935532,

"Negative": 0.8268503302365734,

"Neutral": 0.0,

"Positive": 0.0001552190140712097

},

"sentiment": "Negative",

"text": "hotel"

},

{

"length": 9,

"offset": 23,

"scores": {

"Mixed": 0.0020200687053503,

"Negative": 0.9971282906307877,

"Neutral": 0.0,

"Positive": 0.0008516406638620019

},

"sentiment": "Negative",

"text": "condition"

},

{

"length": 6,

"offset": 91,

"scores": {

"Mixed": 0.0,

"Negative": 0.002300517913679934,

"Neutral": 0.023815747524769032,

"Positive": 0.973883734561551

},

"sentiment": "Positive",

"text": "member"

},

{

"length": 5,

"offset": 101,

"scores": {

"Mixed": 0.10319573538533408,

"Negative": 0.2070680870320537,

"Neutral": 0.0,

"Positive": 0.6897361775826122

},

"sentiment": "Positive",

"text": "staff"

}

],

"document_scores": {},

"document_sentiment": "",

"key": "Demo",

"language_code": "en",

"sentences": []

}

],

"errors": []

}

The different aspects are extracted, and the sentiment for each within the text is determined. What you need to look out for are the labels “text” and “sentiment”.
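The “offset” and “length” values are also useful, for example to highlight each aspect in the original text. The sketch below assumes they are character positions into the submitted string, which is consistent with the sample response above. It uses a trimmed copy of that response as plain dictionaries, and the helper name `aspect_spans` is made up for this illustration.

```python
text = ("This hotel was in poor condition and I'd recommend not staying here. "
        "There was one helpful member of staff")

# Trimmed copy of the aspects returned above (scores omitted for brevity).
aspects = [
    {"offset": 5, "length": 5, "sentiment": "Negative", "text": "hotel"},
    {"offset": 23, "length": 9, "sentiment": "Negative", "text": "condition"},
    {"offset": 91, "length": 6, "sentiment": "Positive", "text": "member"},
    {"offset": 101, "length": 5, "sentiment": "Positive", "text": "staff"},
]


def aspect_spans(source_text, found_aspects):
    """Slice each aspect out of the source text using its offset and length."""
    spans = []
    for aspect in found_aspects:
        start = aspect["offset"]
        end = start + aspect["length"]
        spans.append((source_text[start:end], aspect["sentiment"]))
    return spans


for phrase, sentiment in aspect_spans(text, aspects):
    print(f"{phrase}: {sentiment}")
```

Each slice matches the “text” value returned for that aspect, so the offsets can be used to mark up the original string directly.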