EU

EU AI Act: Key Dates and Impact on AI Developers

The official text of the EU AI Act has been published in the Official Journal of the EU. This is another landmark for the Act, as the regulation enters into force on 1st August 2024. If you haven’t started your preparations, you really need to start now. See the timeline for the different stages of the EU AI Act below.

The EU AI Act is a landmark piece of legislation, and similar legislation is being drafted or enacted in other regions around the world. It is considered the most extensive legal framework for AI developers, deployers, importers and others. It aims to ensure that AI systems introduced or already in use in the EU internal market (even if they are developed and located outside the EU) are secure, compliant with existing and new laws on fundamental rights, and aligned with EU principles.

The key dates are:

- 2 February 2025: Prohibitions on Unacceptable Risk AI

- 2 August 2025: Obligations come into effect for providers of general purpose AI models. Appointment of member state competent authorities. Annual Commission review of and possible legislative amendments to the list of prohibited AI.

- 2 February 2026: Commission implementing act on post-market monitoring

- 2 August 2026: Obligations go into effect for high-risk AI systems specifically listed in Annex III, including systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review, and possible amendment of, the list of high-risk AI systems.

- 2 August 2027: Obligations go into effect for high-risk AI systems not prescribed in Annex III but intended to be used as a safety component of a product. Obligations go into effect for high-risk AI systems in which the AI itself is a product and the product is required to undergo a third-party conformity assessment under existing specific EU laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

- By End of 2030: Obligations go into effect for certain AI systems that are components of the large-scale information technology systems established by EU law in the areas of freedom, security and justice, such as the Schengen Information System.
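If you want to track these dates in your own compliance tooling, a minimal sketch might look like the following. The milestone labels are shortened versions of the list above, the function names are hypothetical, and representing "By End of 2030" as 31 December 2030 is an assumption made purely so the entry can be compared with other dates.

```python
from datetime import date
from typing import Optional

# Milestones transcribed from the key dates listed above.
# Assumption: "By End of 2030" is encoded as 31 December 2030.
MILESTONES = [
    (date(2025, 2, 2), "Prohibitions on unacceptable-risk AI"),
    (date(2025, 8, 2), "Obligations for providers of general purpose AI models"),
    (date(2026, 2, 2), "Commission implementing act on post-market monitoring"),
    (date(2026, 8, 2), "Obligations for high-risk AI systems listed in Annex III"),
    (date(2027, 8, 2), "Obligations for high-risk AI in products and safety components"),
    (date(2030, 12, 31), "Obligations for AI components of large-scale EU IT systems"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones dated on or before `on`."""
    return [label for when, label in MILESTONES if when <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """Return the earliest (date, label) pair strictly after `on`, or None."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return min(upcoming) if upcoming else None


if __name__ == "__main__":
    check_date = date(2025, 9, 1)
    for label in milestones_reached(check_date):
        print("Already applies:", label)
    print("Next:", next_milestone(check_date))
```

This only tells you which stage of the timeline has been reached. Whether a given obligation applies to your system still depends on its risk category and your role (provider, deployer, importer and so on).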

Here is the link to the official text in the EU Journal publication.

EU AI Act has been passed by EU parliament

It feels like we’ve been hearing about and talking about the EU AI Act for a very long time now. But on Wednesday 13th March 2024, the EU Parliament finally voted to approve the Act. While this is a major milestone, we haven’t crossed the finish line. There are a few steps to complete, although these are minor steps and are part of the process.

The remaining timeline is:

- The EU AI Act will undergo final linguistic approval by lawyer-linguists in April. This is considered a formality step.

- It will then be published in the Official EU Journal

- 21 days after being published it will come into effect (probably in May)

- The Prohibited Systems provisions will come into force six months later (probably by end of 2024)

- All other provisions in the Act will come into force over the next 2-3 years

If you haven’t already started looking at and evaluating the various elements of AI deployed in your organisation, now is the time to start. It’s time to prepare and explore what changes, if any, you need to make. If you don’t, the penalties for non-compliance are hefty, with fines of up to €35 million or 7% of global turnover.
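To get a feel for the scale of these fines, here is a rough sketch of the upper bound. It assumes the cap is whichever of the two figures is higher, which is my reading of the Act for the most serious infringements. The function name and parameters are hypothetical, and this is an illustration, not legal advice.

```python
def max_fine_eur(global_turnover_eur: float,
                 fixed_cap_eur: float = 35_000_000,
                 turnover_percent: float = 7) -> float:
    """Upper bound of the fine, assuming the higher of the two caps applies."""
    if global_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    turnover_cap = global_turnover_eur * turnover_percent / 100
    return max(fixed_cap_eur, turnover_cap)


if __name__ == "__main__":
    # Hypothetical companies with EUR 100 million and EUR 1 billion turnover.
    for turnover in (100_000_000, 1_000_000_000):
        print(f"Turnover {turnover:,} EUR: fine up to {max_fine_eur(turnover):,.0f} EUR")
```

Under that assumption, the €35 million figure dominates until global turnover passes €500 million, after which the 7% figure takes over.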

The first thing you need to address is the Prohibited AI Systems. The EU AI Act outlines the following categories, which will need to be addressed before the end of 2024:

- Manipulative and Deceptive Practices: systems that use subliminal techniques to materially distort a person’s decision-making capacity, leading to significant harm. This includes systems that manipulate behaviour or decisions in a way that the individual would not have otherwise made.

- Exploitation of Vulnerabilities: systems that target individuals or groups based on age, disability, or socio-economic status to distort behaviour in harmful ways.

- Biometric Categorisation: systems that categorise individuals based on biometric data to infer sensitive information like race, political opinions, or sexual orientation. This prohibition does not cover any labelling or filtering of lawfully acquired biometric datasets, such as images. There are also exceptions for law enforcement.

- Social Scoring: systems designed to evaluate individuals or groups over time based on their social behaviour or predicted personal characteristics, leading to detrimental treatment.

- Real-time Biometric Identification: The use of real-time remote biometric identification systems in publicly accessible spaces for law enforcement is heavily restricted, with allowances only under narrowly defined circumstances that require judicial or independent administrative approval.

- Risk Assessment in Criminal Offences: systems that assess the risk of individuals committing criminal offences based solely on profiling, except when supporting human assessment already based on factual evidence.

- Facial Recognition Databases: systems that create or expand facial recognition databases through untargeted scraping of images are prohibited.

- Emotion Inference in Workplaces and Educational Institutions: The use of AI to infer emotions in sensitive environments like workplaces and schools is banned, barring exceptions for medical or safety reasons.

In addition to the timeline given above we also have:

- 12 months after entry into force: Obligations on providers of general purpose AI models go into effect. Appointment of member state competent authorities. Annual Commission review of and possible amendments to the list of prohibited AI.

- after 18 months: Commission implementing act on post-market monitoring

- after 24 months: Obligations on high-risk AI systems specifically listed in Annex III, which includes AI systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review and possible amendment of the list of high-risk AI systems.

- after 36 months: Obligations for high-risk AI systems that are not prescribed in Annex III but are intended to be used as a safety component of a product, or where the AI is itself a product, and the product is required to undergo a third-party conformity assessment under existing specific laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

The EU has provided an official compliance check that helps identify which parts of the EU AI Act apply in a given use case.

EU AI Regulations: Common Questions and Answers

As the EU AI Regulations move to the next phase, following the political agreement reached in Nov/Dec 2023, the European Commission has published answers to 28 of the most common questions about the Act. These questions are:

- Why do we need to regulate the use of Artificial Intelligence?

- Which risks will the new AI rules address?

- To whom does the AI Act apply?

- What are the risk categories?

- How do I know whether an AI system is high-risk?

- What are the obligations for providers of high-risk AI systems?

- What are examples for high-risk use cases as defined in Annex III?

- How are general-purpose AI models being regulated?

- Why is 10^25 FLOPs an appropriate threshold for GPAI with systemic risks?

- Is the AI Act future-proof?

- How does the AI Act regulate biometric identification?

- Why are particular rules needed for remote biometric identification?

- How do the rules protect fundamental rights?

- What is a fundamental rights impact assessment? Who has to conduct such an assessment, and when?

- How does this regulation address racial and gender bias in AI?

- When will the AI Act be fully applicable?

- How will the AI Act be enforced?

- Why is a European Artificial Intelligence Board needed and what will it do?

- What are the tasks of the European AI Office?

- What is the difference between the AI Board, AI Office, Advisory Forum and Scientific Panel of independent experts?

- What are the penalties for infringement?

- What can individuals do that are affected by a rule violation?

- How do the voluntary codes of conduct for high-risk AI systems work?

- How do the codes of practice for general purpose AI models work?

- Does the AI Act contain provisions regarding environmental protection and sustainability?

- How can the new rules support innovation?

- Besides the AI Act, how will the EU facilitate and support innovation in AI?

- What is the international dimension of the EU’s approach?

This list of Questions and Answers is a beneficial read and clearly addresses common questions you might have seen discussed in other media outlets. Having them provided and answered by the Commission gives us a clear explanation of what is involved.

In addition to the webpage containing these questions and answers, they provide a PDF with them too.

EU AI Act adopts OECD Definition of AI

Over recent months, the EU AI Act has been making progress through the various hoops in the EU. Various committees and working groups have examined different parts of the AI Act and how it will impact the wider population. Their recommendations have been added to the EU AI Act, and it has now progressed to the next stage for ratification in the EU Parliament, which should happen in a few months’ time.

There are lots of terms within the EU AI Act which needed defining. The most crucial one is the definition of AI, as it underpins the entire Act and all the other definitions of terms throughout it. Back in March of this year, the various political groups working on the EU AI Act reached an agreement on the definition of AI (Artificial Intelligence). The EU AI Act adopts, or is based on, the OECD definition of AI.

“‘Artificial intelligence system’ (AI system) means a machine-based system that is designed to operate with varying levels of autonomy and that can, for explicit or implicit objectives, generate output such as predictions, recommendations, or decisions influencing physical or virtual environments.”

The working groups wanted the AI definition to be closely aligned with the work of international organisations working on artificial intelligence to ensure legal certainty, harmonisation and wide acceptance. The reference to predictions in the wording is intended to include content, to ensure generative AI models like ChatGPT are covered by the regulation.

Other definitions included are significant risk, biometric authentication and identification.

“‘Significant risk’ means a risk that is significant in terms of its severity, intensity, probability of occurrence, duration of its effects, and its ability to affect an individual, a plurality of persons or to affect a particular group of persons,” the document specifies.

Remote biometric verification systems were defined as AI systems used to verify the identity of persons by comparing their biometric data against a reference database with their prior consent. That is distinguished from an authentication system, where the persons themselves ask to be authenticated.

On biometric categorisation, a practice recently added to the list of prohibited use cases, a reference was added to inferring personal characteristics and attributes like gender or health.

EU Digital Services Act

The Digital Services Act (DSA) applies to a wide variety of online services, ranging from websites to social networks and online platforms, with a view to “creating a safer digital space in which the fundamental rights of all users of digital services are protected”.

In December 2020, the European Union introduced new legislation called the Digital Services Act (DSA) to regulate the activities of tech companies operating within the EU. The aim of the DSA is to create a safer and more transparent online environment for EU citizens by imposing new rules and responsibilities on digital service providers. This includes online platforms such as social media, search engines, e-commerce sites, and cloud services. The provisions in the DSA will apply from 17th February 2024, thus giving affected parties time to ensure compliance.

The DSA aims to address a number of issues related to digital services, including:

- Ensuring that digital service providers take responsibility for the content on their platforms and that they have effective measures in place to combat illegal content, such as hate speech, terrorist content, and counterfeit goods.

- Requiring digital service providers to be more transparent about their advertising practices, and to disclose more information about the algorithms they use to recommend content.

- Introducing new rules for online marketplaces to prevent the sale of unsafe products and to ensure that consumers are protected when buying online.

- Strengthening the powers of national authorities to enforce these rules and to hold digital service providers accountable for any violations.

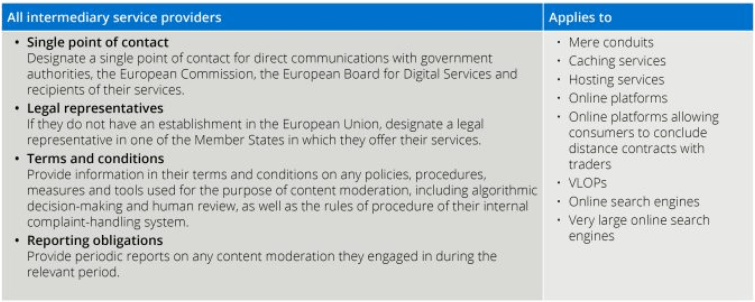

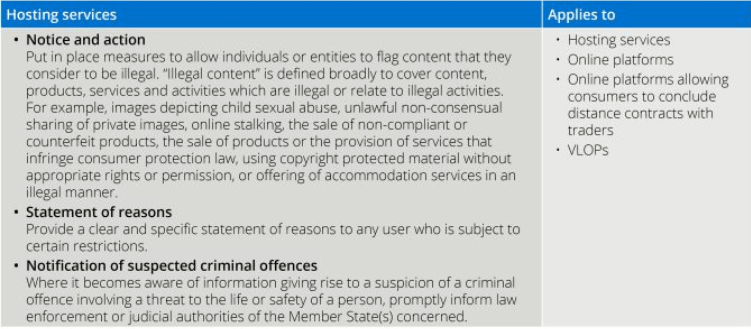

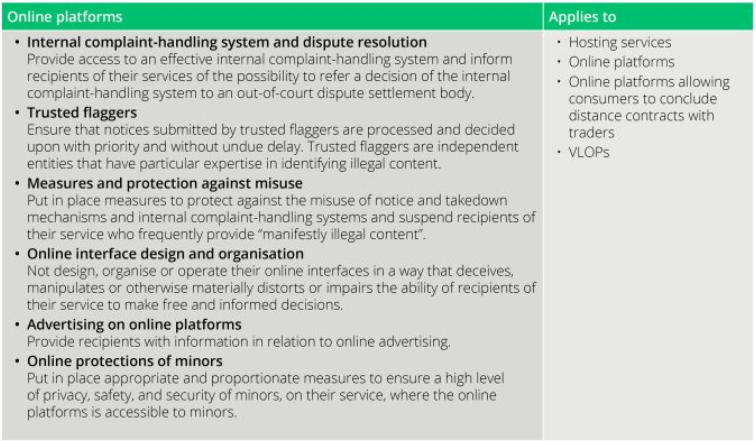

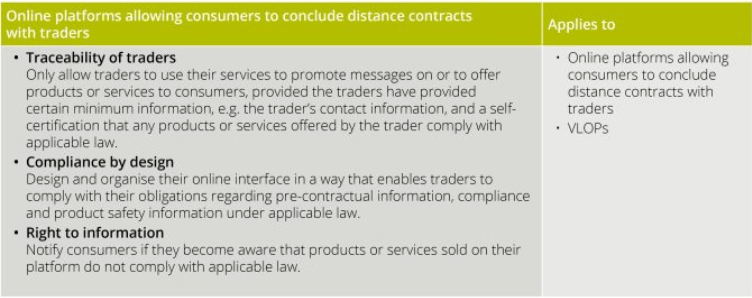

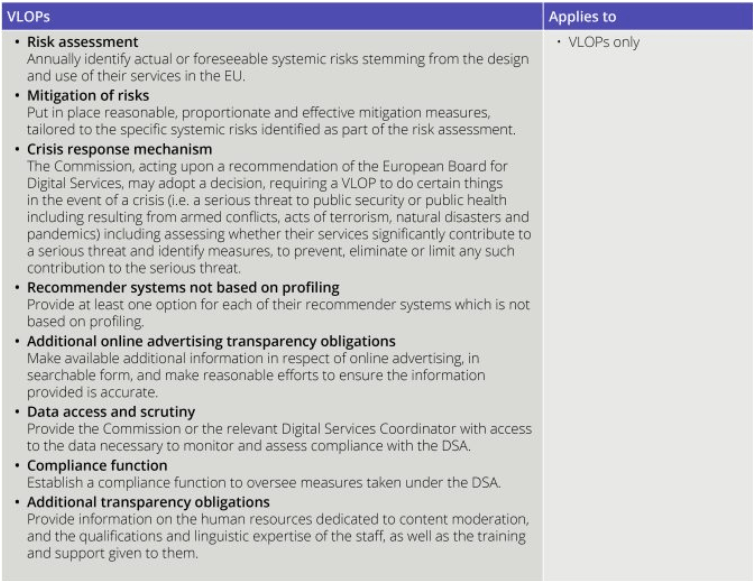

The DSA takes a layered approach to regulation. The basic obligations under the DSA apply to all online intermediary service providers. Additional obligations apply to providers in other categories, with the heaviest regulation applying to very large online platforms (VLOPs) and very large online search engines (VLOSEs).

The four categories are:

- Intermediary service providers are online services which consist of either a “mere conduit” service, a “caching” service, or a “hosting” service. Examples include online search engines, wireless local area networks, cloud infrastructure services, or content delivery networks.

- Hosting services are intermediary service providers who store information at the request of the service user. Examples include cloud services and services enabling sharing information and content online, including file storage and sharing.

- Online Platforms are hosting services which also disseminate the information they store to the public at the user’s request. Examples include social media platforms, message boards, app stores, online forums, metaverse platforms, online marketplaces and travel and accommodation platforms.

- (a) VLOPs are online platforms having more than 45 million active monthly users in the EU (representing 10% of the population of the EU). (b) VLOSEs are online search engines having more than 45 million active monthly users in the EU (representing 10% of the population of the EU).
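Because the categories are layered, each one builds on the previous one. The sketch below shows one way to express that nesting. The `Service` fields and the `dsa_categories` function are hypothetical names made up for illustration. The 45 million threshold comes from the text above. The sketch ignores any formal designation process and the finer legal tests, so treat it as a thinking aid and not a compliance check.

```python
from dataclasses import dataclass

# Monthly active EU users threshold for VLOPs and VLOSEs, from the text above.
VERY_LARGE_THRESHOLD = 45_000_000


@dataclass
class Service:
    # All fields are hypothetical inputs for illustration.
    stores_user_content: bool      # stores information at the user's request
    disseminates_to_public: bool   # makes stored information public at the user's request
    is_search_engine: bool
    monthly_active_eu_users: int


def dsa_categories(s: Service) -> list[str]:
    """Return every category that applies, from broadest to most specific."""
    cats = ["intermediary service"]  # the base layer applies to every service here
    very_large = s.monthly_active_eu_users > VERY_LARGE_THRESHOLD
    if s.stores_user_content:
        cats.append("hosting service")
        if s.disseminates_to_public:
            cats.append("online platform")
            if very_large:
                cats.append("VLOP")
    if s.is_search_engine and very_large:
        cats.append("VLOSE")
    return cats


if __name__ == "__main__":
    social_network = Service(True, True, False, 60_000_000)
    file_storage = Service(True, False, False, 2_000_000)
    print(dsa_categories(social_network))
    print(dsa_categories(file_storage))
```

Returning the full list of categories, and not just the most specific one, mirrors the layered approach: a VLOP still carries the obligations of an online platform, a hosting service and an intermediary service.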

Arthur Cox provides a useful table of obligations for each of these categories.
