AI Regulation

Tracking AI Regulations, Governance and Incidents

Here are the key Trackers to follow to stay ahead.

AI Incidents & Risks

AI Risk Repository [MIT FutureTech]

A comprehensive database of 700 risks from AI systems https://airisk.mit.edu/

AI Incident Database [Partnership on AI]

Dedicated to indexing the collective history of real-world harms caused by the deployment of AI

https://lnkd.in/ewBaYitm

AI Incidents Monitor [OECD – OCDE]

AI incidents and hazards reported in international media globally are identified and classified using machine learning models https://lnkd.in/e4pJ7jcA

AI Regulations & Policies

Global AI Law and Policy Tracker [IAPP]

Resource providing information about AI law and policy developments in key jurisdictions worldwide https://lnkd.in/eiGMk9Rm

National AI Policies and Strategies [OECD.AI]

Live repository of 1000+ AI policy initiatives from 69 countries, territories and the EU https://lnkd.in/ebVTQzdb

Global AI Regulation Tracker [Raymond Sun]

An interactive world map that tracks AI law, regulatory and policy developments around the world https://lnkd.in/ekaKzmzD

U.S. State AI Governance Legislation Tracker [IAPP]

Tracker which focuses on cross-sectoral AI governance bills that apply to the private sector https://lnkd.in/ee4N-ckB

AI Governance Toolkits & Resources

AI Standards Hub [The Alan Turing Institute]

Online repository of 300+ AI standards https://lnkd.in/erVdP4g7

AI Risk Management Framework Playbook [National Institute of Standards and Technology (NIST)]

Playbook of recommended actions, resources and materials to support implementation of the NIST AI RMF https://lnkd.in/eTzpfbCi

Catalogue of Tools & Metrics for Trustworthy AI [OECD.AI]

Tools and metrics which help AI actors to build and deploy trustworthy AI systems https://lnkd.in/e_mnAbpZ

Portfolio of AI Assurance Techniques [Department for Science, Innovation and Technology]

The Portfolio showcases examples of AI assurance techniques being used in the real world to support the development of trustworthy AI. https://lnkd.in/eJ5V3uzb

EU AI Act has been passed by the EU Parliament

It feels like we’ve been hearing about and talking about the EU AI Act for a very long time now. But on Wednesday 13th March 2024, the EU Parliament finally voted to approve the Act. While this is a major milestone, we haven’t crossed the finish line. There are a few steps to complete, although these are minor steps and are part of the process.

The remaining timeline is:

- The EU AI Act will undergo final linguistic approval by lawyer-linguists in April. This is considered a formality.

- It will then be published in the Official Journal of the European Union

- 20 days after being published it will come into effect (probably in May)

- The Prohibited Systems provisions will come into force six months later (probably by end of 2024)

- All other provisions in the Act will come into force over the next 2-3 years

If you haven’t already started looking at and evaluating the various elements of AI deployed in your organisation, now is the time to start. It’s time to prepare and explore what changes, if any, you need to make. If you don’t, the penalties for non-compliance are hefty, with fines of up to €35 million or 7% of global annual turnover, whichever is higher.
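To get a feel for what that ceiling means for your organisation, here is a minimal sketch of the arithmetic. It assumes the top tier of fines (the one for prohibited practices) and the "whichever is higher" rule; the function name and the example turnover figures are made up for illustration, and the sketch ignores the different treatment of SMEs and the lower tiers of fines.

```python
# Illustrative only: upper bound of the top-tier fine, not legal advice.
FIXED_CAP_EUR = 35_000_000      # EUR 35 million
TURNOVER_PERCENT = 7            # 7% of global annual turnover


def max_fine_eur(global_annual_turnover_eur: int) -> int:
    """Return the fine ceiling in whole euro (hypothetical helper).

    Integer arithmetic is used to avoid floating-point rounding.
    """
    turnover_based = global_annual_turnover_eur * TURNOVER_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_based)


# Hypothetical turnover figures
small_company = max_fine_eur(100_000_000)      # 7% is EUR 7m, so the fixed cap applies
large_company = max_fine_eur(1_000_000_000)    # 7% is EUR 70m, so the turnover share applies
print(small_company, large_company)
```

The crossover point is a turnover of €500 million: below that the €35 million figure is the ceiling, above it the 7% share is.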

The first thing you need to address is the Prohibited AI Systems. The EU AI Act outlines the following categories, which will need to be addressed before the end of 2024:

- Manipulative and Deceptive Practices: systems that use subliminal techniques to materially distort a person’s decision-making capacity, leading to significant harm. This includes systems that manipulate behaviour or decisions in a way that the individual would not have otherwise made.

- Exploitation of Vulnerabilities: systems that target individuals or groups based on age, disability, or socio-economic status to distort behaviour in harmful ways.

- Biometric Categorisation: systems that categorise individuals based on biometric data to infer sensitive information like race, political opinions, or sexual orientation. This prohibition does not cover any labelling or filtering of lawfully acquired biometric datasets, such as images. There are also exceptions for law enforcement.

- Social Scoring: systems designed to evaluate individuals or groups over time based on their social behaviour or predicted personal characteristics, leading to detrimental treatment.

- Real-time Biometric Identification: The use of real-time remote biometric identification systems in publicly accessible spaces for law enforcement is heavily restricted, with allowances only under narrowly defined circumstances that require judicial or independent administrative approval.

- Risk Assessment in Criminal Offences: systems that assess the risk of individuals committing criminal offences based solely on profiling, except when supporting human assessment already based on factual evidence.

- Facial Recognition Databases: systems that create or expand facial recognition databases through untargeted scraping of images are prohibited.

- Emotion Inference in Workplaces and Educational Institutions: The use of AI to infer emotions in sensitive environments like workplaces and schools is banned, barring exceptions for medical or safety reasons.

In addition to the timeline given above we also have:

- 12 months after entry into force: Obligations on providers of general purpose AI models go into effect. Appointment of member state competent authorities. Annual Commission review of and possible amendments to the list of prohibited AI.

- after 18 months: Commission implementing act on post-market monitoring

- after 24 months: Obligations on high-risk AI systems specifically listed in Annex III, which includes AI systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review and possible amendment of the list of high-risk AI systems.

- after 36 months: Obligations for high-risk AI systems that are not prescribed in Annex III but are intended to be used as a safety component of a product, or the AI is itself a product, and the product is required to undergo a third-party conformity assessment under existing specific laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.
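Since the entry-into-force date was not yet fixed at the time of writing, it can help to compute the milestones from whatever date you assume. The following is a rough sketch: the `add_months` helper, the milestone labels and the example date are all my own illustrative choices, and the binding dates will be the ones stated in the published text of the Act.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Add calendar months, clamping to the last day of a shorter month."""
    total = start.month - 1 + months
    year = start.year + total // 12
    month = total % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Months after entry into force, as listed in the timeline above
MILESTONES = {
    6: "Prohibited AI systems provisions",
    12: "General purpose AI model obligations",
    18: "Commission implementing act on post-market monitoring",
    24: "Annex III high-risk AI system obligations",
    36: "High-risk AI systems under existing product laws",
}


def milestone_dates(entry_into_force: date) -> dict:
    """Map each milestone label to its approximate date."""
    return {label: add_months(entry_into_force, m) for m, label in MILESTONES.items()}


# Hypothetical entry-into-force date, purely for illustration
for label, when in milestone_dates(date(2024, 5, 31)).items():
    print(f"{when.isoformat()}  {label}")
```

Swap in the real entry-into-force date once the Act is published to get a planning calendar for your own compliance work.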

The EU has provided an official compliance check that helps identify which parts of the EU AI Act apply in a given use case.

AI Sandboxes – EU AI Regulations

The EU AI Regulations provide a framework for placing AI systems on the market and putting them into service in the EU. One of the biggest challenges most organisations will face will be how they can innovate and develop new AI systems while at the same time ensuring they are compliant with the regulations. But at what point do you know that these new AI systems are compliant? This can be challenging and could limit or slow down the development and deployment of such systems.

The EU does not want to limit or slow down such innovation and wants organisations to continually research, develop and deploy new AI. To facilitate this, the EU AI Regulations contain a structure under which this can be achieved.

The EU AI Regulations contain Articles 53, 54, and 55 to support the development of new AI systems through the use of Sandboxes. We have already seen examples of these being introduced by the UK and Norwegian Data Protection Commissioners.

A Sandbox “provides a controlled environment that facilitates the development, testing and validation of innovative AI systems for a limited time before their placement on the market or putting into service pursuant to a specific plan.”

Sandboxes are standalone environments that allow the exploration and development of new AI solutions, which may or may not include some risky use of customer data or other potential AI outcomes which may not be allowed under the regulations. A Sandbox becomes a controlled experiment lab for the AI team developing and testing a potential AI System, and they can do so under real-world conditions. The Sandbox gives a “safe” environment for this experimental work.

Sandboxes are to be established by the Competent Authorities in each EU country. In Ireland the Competent Authority seems to be the Data Protection Commissioner, and this may be similar in other countries. As you can imagine, under the current wording of the EU AI Regulations this might present some challenges for both the Competent Authority and the company looking to develop an AI solution. Firstly, does the Competent Authority need to provide sandboxes for all companies looking to develop AI, given that each of these companies may have several AI projects? This is a massive overhead for the Competent Authority to provide and resource. Secondly, will companies be willing to set up a self-contained environment, containing customer data, data insights, solutions with potential competitive advantage, etc., in a Sandbox provided by the Competent Authority? The technical infrastructure used could be hosting many Sandboxes, with many competing companies using the same infrastructure at the same time. This is a big ask for the companies and the Competent Authority.

Let’s see what really happens regarding the implementation of the Sandboxes over the coming years, and how this will be defined in the final draft of the Regulations.

Article 54 defines additional requirements for the processing of personal data within the Sandbox.

- The personal data being used is necessary, and the requirements cannot be effectively fulfilled by processing anonymised, synthetic or other non-personal data. This applies even if the data was collected for other purposes.

- Continuous monitoring is needed to identify any high risk to the fundamental rights of the data subject, along with a response mechanism to mitigate those risks.

- Any personal data to be processed is in a functionally separate, isolated and protected data processing environment under the control of the participants and only authorised persons have access to that data.

- Any personal data processed is not to be transmitted, transferred or otherwise accessed by other parties.

- Any processing of personal data does not lead to measures or decisions affecting the data subjects.

- All personal data is deleted once the participation in the sandbox is terminated or the personal data has reached the end of its retention period.

- Full documentation of what was done to the data must be kept for 1 year after termination of the Sandbox, and is only to be used for accountability and documentation obligations.

- Documentation of the complete process and rationale behind the training, testing and validation of AI, along with test results as part of technical documentation. (see Annex IV)

- A short summary of the AI project, its objectives and expected results is published on the website of the Competent Authorities

Based on the last bullet point, the Competent Authority is required to write an annual report and submit this report to the EU AI Board. The report is to include details on the results of their scheme, good and bad practices, lessons learnt and recommendations on the setup and application of the Regulations within the Sandboxes.

OECD Framework for the Classification of AI Systems

Over the past few months we have seen more and more countries looking at how they can support and regulate the use and development of AI within their geographic areas. For those in Europe, a lot of focus has been on the draft AI Regulations. At the time of writing this post there has been a lot of politics going on in relation to the EU AI Regulations. Some of this has been around the definition of AI, what will be included and excluded in their different categories, who will be policing and enforcing the regulations, among lots of other things. We could end up with a very different set of regulations to what was included in the draft (published April 2021). It also looks like the enactment of the EU AI Regulations will be delayed to the end of 2022, with some people suggesting it would be towards mid-2023 before something formal happens.

I mentioned above that one of the things that may or may not change is the definition of AI within the EU AI Regulations. Although primarily focused on the inclusion/exclusion of biometric aspects, there are other refinements being proposed. When you look at what other geographic regions are doing, we start to see some common aspects in their definitions of AI, but we also see some differences. You can imagine the difficulties this will present in the global marketplace, given how AI touches upon all/many aspects of most businesses, their customers and their data.

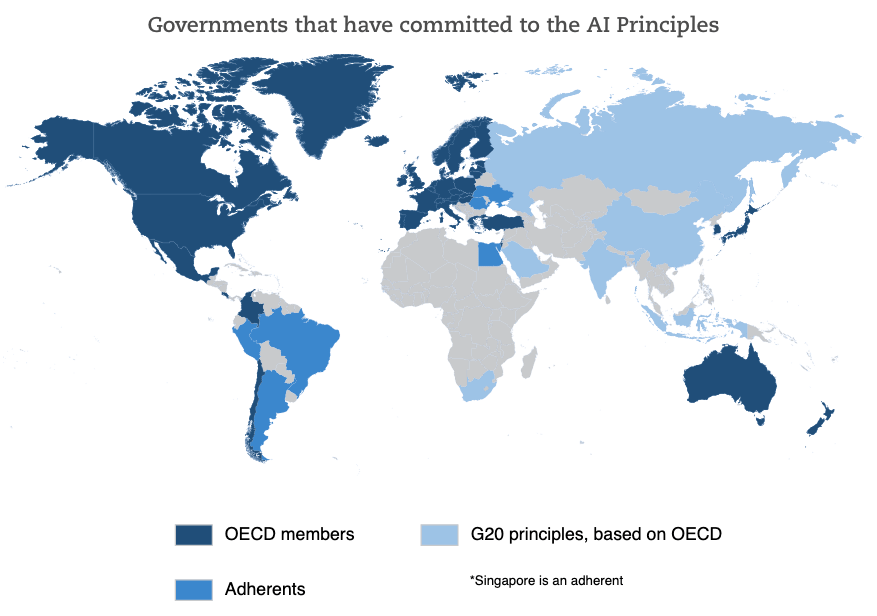

Most of you will have heard of the OECD. In recent weeks it has been working across all member countries towards a definition of AI and a way of classifying different AI systems. They have called this the OECD Framework for the Classification of AI Systems.

The OECD Framework for the Classification of AI Systems is a tool for policy-makers, regulators, legislators and others so that they can assess the opportunities and risks that different types of AI systems present and to inform their national AI strategies.

The Framework links the technical characteristics of AI with the policy implications set out in the OECD AI Principles which include:

- Inclusive growth, sustainable development and well-being

- Human-centred values and fairness

- Transparency and explainability

- Robustness, security and safety

- Accountability

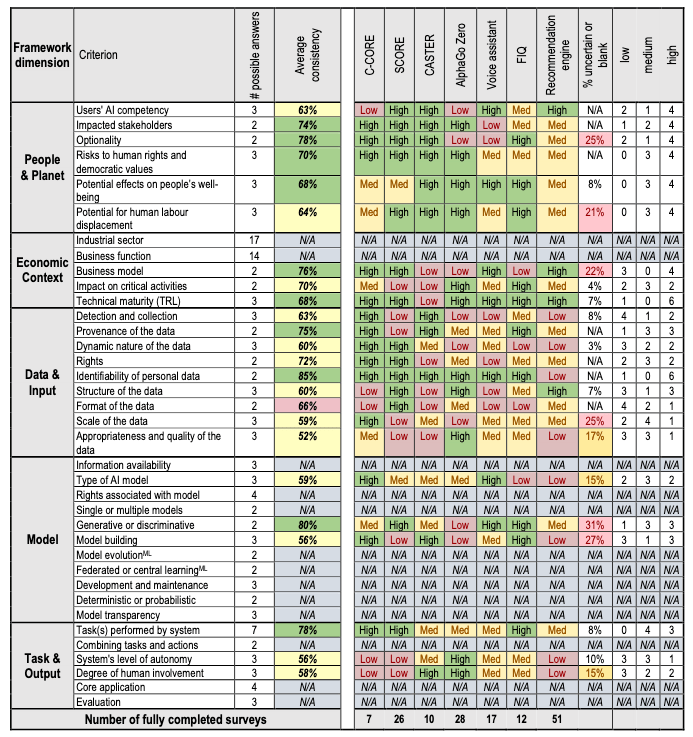

The framework looks at different aspects depending on whether the AI is still within the lab (sandbox) environment or is live in production or in use in the field.

The framework goes into more detail on the various aspects that need to be considered for each of these. The working group has applied the framework to a number of different AI systems to illustrate how it can be used.

Check out the framework document, which goes into more detail on each of the criteria listed above for each dimension of the framework.
