
Part 2 – Do I have permissions to use the data for data profiling?

This is the second part of a series of blog posts on ‘How the EU GDPR will affect the use of Machine Learning’.

I have the data, so I can use it? Right?

I can do what I want with that data? Right? (sure the customer won’t know!)

NO. The answer is No you cannot use the data unless you have been given the permission to use it for a particular task.

The GDPR applies to all companies worldwide that process personal data of European Union (EU) citizens. This means that any company that works with information relating to EU citizens will have to comply with the requirements of the GDPR, making it the first global data protection law.

The GDPR tightens the rules for obtaining valid consent to use personal information. Having the ability to prove valid consent for using personal information is likely to be one of the biggest challenges presented by the GDPR. Organisations need to ensure they use simple language when asking for consent to collect personal data, they need to be clear about how they will use the information, and they need to understand that silence or inactivity no longer constitutes consent.

You will need to investigate the small print of all the terms and conditions that your customers have signed. Then you need to examine what data you have, how and where it was collected or generated, and then determine whether you want to use this data beyond its original intended purpose. If there has been no mention of using the customer data (or any part of it) for analytics, profiling, or anything vaguely related to it, then you cannot use the data. This could mean that you cannot use any data for your analytics and/or machine learning. This is a major problem. No data means no analytics and no targeting the customers with special offers, etc.

Data cannot be magically produced out of nowhere and it isn’t the fault of the data science team if they have no data to use.

How can you overcome this major stumbling block?

The first step is to review all the T&Cs. Identify what data can be used and what data cannot be used. One approach for data that cannot be used is to update the T&Cs and get the customers to agree to them. Yes, they need to explicitly agree (or not) to them. Giving them a time limit to respond, after which they are treated as having agreed, is not allowed. It needs to be explicit.
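Once the T&Cs have been reviewed, the outcome has to be applied to the data before it reaches the analytics or machine learning team. The following is a minimal sketch in Scala of one way to do that, assuming consent has been recorded per customer and per purpose. The type names and purpose labels (`ConsentStatus`, `Customer`, "billing", "profiling") are hypothetical. Only an explicit agreement counts as consent: a refusal, no response, or a purpose that was never asked about all exclude the customer.

```scala
// Minimal sketch: keep only customers who explicitly agreed to a given purpose.
// All names (ConsentStatus, Customer, the purpose strings) are hypothetical.
object ConsentFilter {

  // Possible states of a customer's consent for one processing purpose.
  sealed trait ConsentStatus
  case object Granted extends ConsentStatus    // customer explicitly agreed
  case object Refused extends ConsentStatus    // customer explicitly declined
  case object NoResponse extends ConsentStatus // silence or inactivity: not consent

  // Consent is recorded per purpose, e.g. "billing" or "profiling".
  final case class Customer(id: Int, consents: Map[String, ConsentStatus])

  // Usable only with an explicit Granted for this purpose. A missing entry
  // (the T&Cs never mentioned the purpose) is treated the same as no consent.
  def hasConsent(customer: Customer, purpose: String): Boolean =
    customer.consents.get(purpose).contains(Granted)

  // The subset of customers whose data may be used for the purpose.
  def usableFor(customers: Seq[Customer], purpose: String): Seq[Customer] =
    customers.filter(c => hasConsent(c, purpose))

  def main(args: Array[String]): Unit = {
    val customers = Seq(
      Customer(1, Map("billing" -> Granted, "profiling" -> Granted)),
      Customer(2, Map("billing" -> Granted, "profiling" -> NoResponse)),
      Customer(3, Map("billing" -> Granted, "profiling" -> Refused)),
      Customer(4, Map("billing" -> Granted))
    )
    // Only customer 1 can be included in the profiling data set.
    println(usableFor(customers, "profiling").map(_.id))
  }
}
```

Recording consent per purpose, rather than as a single yes/no flag, is what allows the same customer to be included for billing but excluded from profiling.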

Yes, this will be hard work. Yes, this will take time. Yes, it will affect what machine learning and analytics you can perform for some time. But the sooner you identify these areas, update the T&Cs and get the approval of the customers, the better. Ideally all of this should be done well in advance of 25th May, 2018.

In the next blog post I will look at addressing discrimination in the data and in the machine learning models.

Click back to ‘How the EU GDPR will affect the use of Machine Learning – Part 1’ for links to all the blog posts in this series.

How the EU GDPR will affect the use of Machine Learning – Part 1

On 5 December 2015, the European Parliament, the Council and the Commission reached agreement on the new data protection rules, establishing a modern and harmonised data protection framework across the EU. Then on 14th April 2016 the Regulation and the Directive were adopted by the European Parliament.

The EU GDPR comes into effect on the 25th May, 2018.

Are you ready?

The EU GDPR will affect companies in every country around the world. If you capture and use/analyse data captured within the EU or from citizens in the EU, then you have to comply with the EU GDPR.

Over the past few months we have seen an increase in the number of blog posts, articles, presentations, conferences, seminars, etc. being produced on how the EU GDPR will affect you. Basically, if your company has not been working on implementing processes and procedures and ensuring it complies with the regulations, then you are a bit behind and a lot of work is ahead of you.

As I said, there has been a lot published and talked about regarding the EU GDPR. Most of this is about the core aspects of the regulations on protecting and securing your data. But very little, if anything, is being discussed regarding the use of machine learning and customer profiling.

Do you use machine learning to profile, analyse and predict customers? Then the EU GDPR affects you.

Article 22 of the EU GDPR, which covers automated individual decision-making including profiling, outlines some basic requirements regarding machine learning, as do Articles 13, 14, 19 and 21.

Over the coming weeks I will publish the following blog posts. Each of these addresses a separate issue within the EU GDPR relating to the use of machine learning.

- Part 2 – Do I have permissions to use the data for data profiling?

- Part 3 – Ensuring there is no Discrimination in the Data and machine learning models.

- Part 4 – (Article 22: Profiling) Why me? and how Oracle 12c saves the day

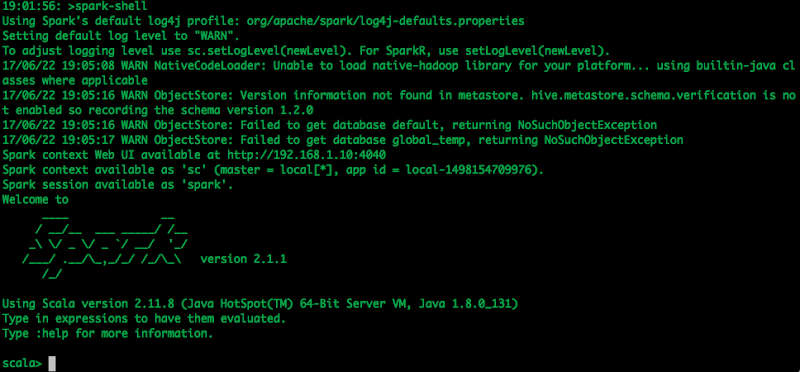

Installing Scala and Apache Spark on a Mac

The following outlines the steps I’ve followed to get Scala and Apache Spark installed on my Mac. This allows me to play with Apache Spark on my laptop (single node) before deploying my code to a multi-node cluster.

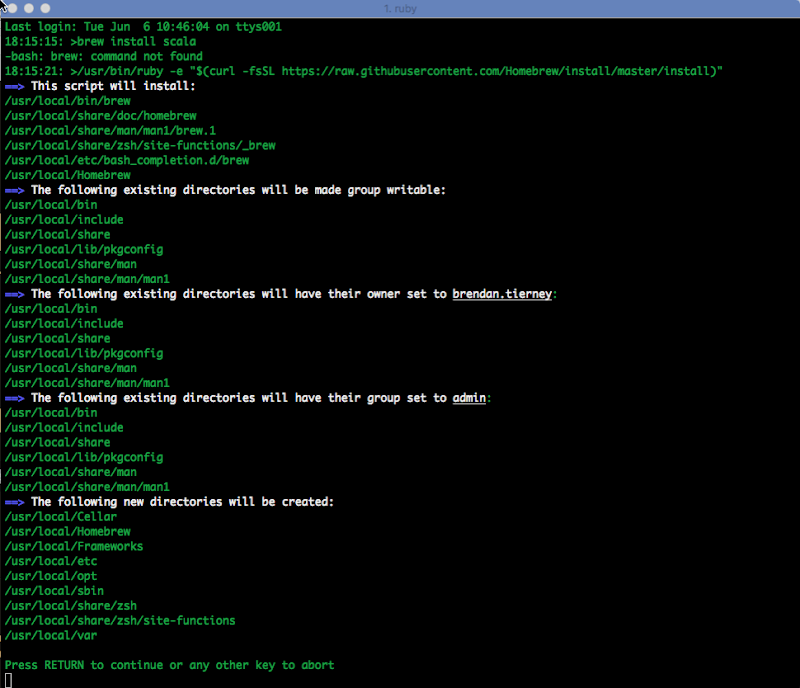

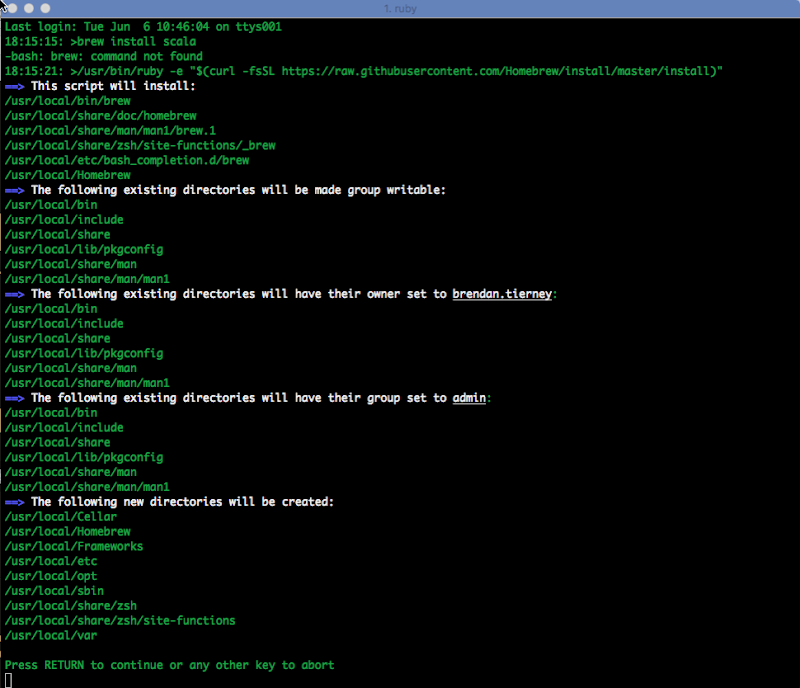

1. Install Homebrew

Homebrew seems to be the standard for installing anything on a Mac. To install Homebrew, run:

```shell
/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"
```

When prompted, enter your system/OS password to allow the install to proceed.

2. Install xcode-select (if needed)

You may already have the Xcode Command Line Tools installed. They provide the compilers and other developer tools that Homebrew relies on. To install them, run:

```shell
xcode-select --install
```

If they are already installed, nothing will be changed and you will get the following message:

```
xcode-select: error: command line tools are already installed, use "Software Update" to install updates
```

3. Install Scala

[If you haven’t installed Java, then you need to do this as well.]

Use Homebrew to install Scala.

```shell
brew install scala
```

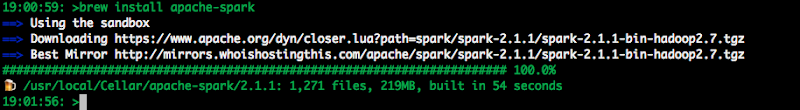
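Before moving on to Spark, it can be worth confirming that Scala and Java are both available. The following is a minimal sketch, assuming you compile and run it with the Scala you just installed: it prints the Scala standard library version and the Java version reported by the JVM. Under Scala 3 the first value may be the version of the Scala 2.13 standard library that Scala 3 builds on, rather than the compiler version.

```scala
// Minimal sketch: report the Scala and Java versions in use, so you can
// confirm both are installed before moving on to Spark.
object CheckVersions {

  // Scala standard library version and JVM version as (label, value) pairs.
  def versions: Seq[(String, String)] = Seq(
    "Scala" -> scala.util.Properties.versionNumberString,
    "Java"  -> System.getProperty("java.version")
  )

  def main(args: Array[String]): Unit =
    versions.foreach { case (name, version) => println(s"$name: $version") }
}
```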

4. Install Apache Spark

Now to install Apache Spark.

```shell
brew install apache-spark
```

5. Start Spark

Now you can start the Apache Spark shell.

```shell
spark-shell
```

6. Hello-World and Reading a file

The traditional Hello-World example.

```
scala> val helloWorld = "Hello-World"
helloWorld: String = Hello-World
```

or

```
scala> println("Hello World")
Hello World
```

What is my current working directory?

```
scala> val whereami = System.getProperty("user.dir")
whereami: String = /Users/brendan.tierney
```

Read and process a file.

```
scala> val lines = sc.textFile("docker_ora_db.txt")
lines: org.apache.spark.rdd.RDD[String] = docker_ora_db.txt MapPartitionsRDD[3] at textFile at <console>:24

scala> lines.count()
res6: Long = 36

scala> lines.foreach(println)
####################################################################
## Specify the basic DB parameters
## Copyright(c) Oracle Corporation 1998,2016. All rights reserved.##
## ##
##------------------------------------------------------------------
## Docker OL7 db12c dat file ##
## ##
## db sid (name)
####################################################################
## default : ORCL
## cannot be longer than 8 characters
##------------------------------------------------------------------
...
```
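To cross-check what Spark is doing, here is a minimal sketch in plain Scala (no Spark) that reads the same file on a single machine, reproduces `lines.count()`, and adds a simple word count. In spark-shell the equivalent word count would typically be written with `flatMap`, `map` and `reduceByKey` on the RDD; this version swaps those for the standard collections library and `scala.util.Using`, so it assumes Scala 2.13 or later. The default file name is the one from the session above; on a small file both approaches should report the same line count.

```scala
import scala.io.Source
import scala.util.Using

// Minimal sketch: the same line count as lines.count(), plus a word count,
// done on a single machine with the Scala standard library (no Spark).
object LocalFileStats {

  // Equivalent of Spark's lines.count(): the number of lines read.
  def lineCount(lines: Seq[String]): Long = lines.size.toLong

  // Word count: split each line on whitespace, drop empty tokens, tally each word.
  def wordCounts(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(line => line.split("\\s+").toSeq)
      .filter(_.nonEmpty)
      .groupBy(identity)
      .map { case (word, occurrences) => word -> occurrences.size }

  def main(args: Array[String]): Unit = {
    // Defaults to the file used in the spark-shell session; pass another path as an argument.
    val path = if (args.nonEmpty) args(0) else "docker_ora_db.txt"
    // Read all lines and make sure the file is closed afterwards.
    val lines = Using.resource(Source.fromFile(path))(_.getLines().toList)
    println(s"Lines: ${lineCount(lines)}")
    // Print the five most frequent words.
    wordCounts(lines).toSeq.sortBy { case (_, n) => -n }.take(5).foreach {
      case (word, n) => println(s"$word\t$n")
    }
  }
}
```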

There will be a lot more on how to use Spark and how to use Spark with Oracle (all their big data stuff) over the coming months.

[I’ve been busy for the past few months working on this stuff, EU GDPR issues relating to machine learning, and other things. I’ll be sharing some of what I’ve been working on and learning in blog posts over the coming weeks.]

Slides from the Ireland OUG Meetup May 2017

Here are some of the slides from our meetup on 11th May 2017.

The remaining slides will be added when they are available.

2016: A review of the year

As 2016 draws to a close I like to look back at what I have achieved over the year. Most of the following achievements are based on my work with the Oracle User Group community. I have some other achievements that are related to my day jobs (yes, I have multiple day jobs), but I won’t go into those here.

As you can see from the following, 2016 was another busy year. There was lots of writing, which I really enjoy and will be continuing with in 2017. As they say, watch this space for writing news in 2017.

Books

Yes, 2016 was a busy year for writing, and most of the latter half of 2015 and the first half of 2016 was taken up writing two books. Yes, two books. One of the books was on Oracle R Enterprise, and this book complements my previously published book on Oracle Data Mining. I now have books that cover both components of the Oracle Advanced Analytics Option.

I also co-wrote a book with legends of the Oracle community: Arup Nanda, Martin Widlake, Heli Helskyaho and Alex Nuijten.

More news coming in 2017.

Blog Posts

One of the things I really enjoy doing is playing with various features of Oracle and then writing some blog posts about them. When writing the books I had to cut back on writing blog posts. I was lucky to be part of the 12.2 Database beta this year, and over the past few weeks I’ve been playing with 12.2 in the cloud. I’ve already written a blog post or two on this, and I also have an OTN article on this coming out soon. There will be more 12.2 analytics related blog posts in 2017.

In 2016 I have written 55 blog posts (including this one). This is a little lower than in previous years. I’ll blame the book writing for this. But more posts are in the works for 2017.

Articles

In 2016 I’ve written articles for OTN and for Toad World. These included:

OTN

- Oracle Advanced Analytics : Kicking the Tires/Tyres

- Kicking the Tyres of Oracle Advanced Analytics Option – Using SQL and PL/SQL to Build an Oracle Data Mining Classification Model

- Kicking the Tyres of Oracle Advanced Analytics Option – Overview of Oracle Data Miner and Build your First Workflow

- Kicking the Tyres of Oracle Advanced Analytics Option – Using SQL to score/label new data using Oracle Data Mining Models

- Setting up and configuring RStudio on the Oracle 12.2 Database Cloud Service

ToadWorld

- Introduction to Oracle R Enterprise

- ORE 1.5 – User Defined R Scripts

Conferences

- January – Yes SQL Summit, NoCOUG Winter Conference, Redwood City, CA, USA **

- January – BIWA Summit, Oracle HQ, Redwood City, CA, USA **

- March – OUG Ireland, Dublin, Ireland

- June – KScope, Chicago, USA (3 presentations)

- September – Oracle Open World (part of EMEA ACEs session) **

- December – UKOUG Tech16 & APPs16

** for these conferences the Oracle ACE Director programme funded the flights and hotels. All other expenses and other conferences I paid for out of my own pocket.

OUG Activities

I’m involved in many different roles in the user group. The UKOUG also covers Ireland (incorporating OUG Ireland), and my activities within the UKOUG included the following during 2016:

- Editor of Oracle Scene: We produced 4 editions in 2016. Thank you to all who contributed and wrote articles.

- Created the OUG Ireland Meetup. We had our first meeting in October. Our next meetup will be in January.

- OUG Ireland Committee member of TECH SIG and BI & BA SIG.

- Committee member of the OUG Ireland 2 day Conference 2016.

- Committee member of the OUG Ireland conference 2017.

- KScope17 committee member for the Data Visualization & Advanced Analytics track.

I’m sure I’ve forgotten a few things, I usually do. But it gives you a taste of some of what I got up to in 2016.

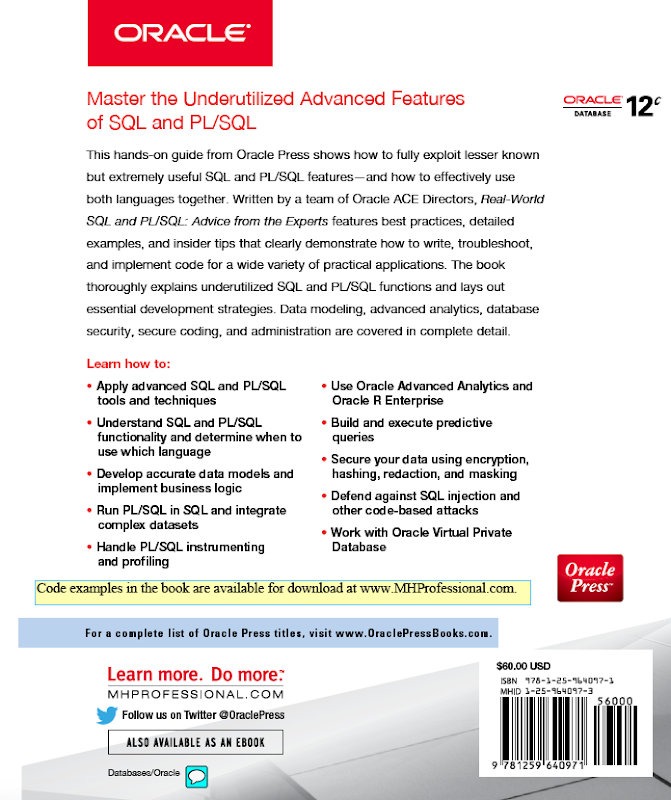

My 2nd Book is now available: Real World SQL and PL/SQL

It has been a busy 12 months. In addition to the day jobs, I’ve also been busy writing. (More news on this in a couple of weeks!)

Today is a major milestone as my second book is officially released and available in print and ebook formats.

The title of the book is ‘Real World SQL and PL/SQL: Advice from the Experts’. Check it out on Amazon.

Now that sounds like a very fancy title, but it isn’t meant to be. This book is written by 5 people (including me). We are all Oracle ACE Directors, we each have 20+ years of experience working with the Oracle Database, and we all love sharing our knowledge. My co-authors are Arup Nanda, Heli Helskyaho, Martin Widlake and Alex Nuijten. It was a pleasure working with you all.

I haven’t seen a physical copy of the book yet!!! Yes, the book is released and I haven’t held it in my hands, although I have seen pictures of it that other people have taken. There was a delay in sending out the author copies of the book, but as of this morning my books are sitting in Stansted Airport and should be making their way to Ireland today. So fingers crossed I’ll have them tomorrow. I’ll update this blog post with a picture when I have them. UPDATE: They finally arrived at 13:25 on the 22nd August.

In addition to the 5 authors, we also had Chet Justice (Oraclenerd), an Oracle ACE Director, as the technical editor. Tim Hall, also an Oracle ACE Director, wrote a foreword for us.

To give you some background to the book and why we wrote it, here is an extract from the start of the book, where I describe how the idea for this book came about and the aim of the book.

“While attempting to give you an idea into our original thinking behind the need for this book and why we wanted to write it, the words of Rod Stewart’s song ‘Sailing’ keep popping into my mind. These are ‘We are sailing, we are sailing, home again ‘cross the sea’. This is because the idea for this book was born on a boat. Some call it a ship. Some call it a cruise ship. Whatever you want to call it, this book was born at the OUG Norway conference in March 2015. What makes the OUG Norway conference special is that it is held on a cruise ship that goes between Oslo in Norway to Kiel in Germany and back again. This means as a speaker and conference attendee you are ‘trapped’ on the cruise ship for 2 days filled with presentations, workshops, discussions and idea sharing for the Oracle community.

It was during this conference that Heli and Brendan got talking about their books. Heli had just published her Oracle SQL Developer Data Modeler book and Brendan had published his book on Oracle Data Miner the previous year. Whilst they were discussing their experiences of writing and sharing their knowledge and how much they enjoyed this, they both recognized that there are a lot of books for the people starting out in their Oracle career and then there are lots of books on specialized topics. What was missing were books that covered the middle group. A question they kept on asking but struggled to answer was, ‘after reading the introductory books, what book would they read next before getting onto the specialized books?’ This was particularly true of SQL and PL/SQL.

They also felt that something that was missing from many books, especially introductory ones, was the “Why and How” of doing things in certain ways that comes from experience. It is all well and good knowing the syntax of commands and the options, but what takes people from understanding a language to being productive in using it is that real-world derived knowledge that comes from using it for real tasks. It would be great to share some of that experience.

Then over breakfast on the final day of the OUG Norway conference, as the cruise ship was sailing through the fjord and around the islands that lead back to Oslo, Heli and Brendan finally agreed that this book should happen. They then listed the type of content they thought would be in such a book and who are the recognized experts (or super heroes) for these topics. This list of experts was very easy to come up with and the writing team of Oracle ACE Directors was formed, consisting of Arup Nanda, Martin Widlake and Alex Nuijten, along with Heli Helskyaho and Brendan Tierney. The author team then got to work defining the chapters and their contents. Using their combined 120+ years of SQL and PL/SQL experience they finally came up with scope and content for the book at Oracle Open World.

…”

As you can see, this book was 17 months in the making. This consisted of 4 months of proposal writing, research and refinement, 8 months of writing, 3 months of editing and 2 months for production of the book.

Yes it takes a lot of time and commitment. We all finished our last tasks and final edits on the book back in early June. Since then the book has been sent for printing, converted into an ebook, books shipped to Oracle Press warehouse, then shipped to Amazon and other book sellers. Today it is finally available officially.

(when I say officially, it seems that Amazon has shipped some pre-ordered books a week ago)

If you are at Oracle Open World (OOW) in September make sure to check out the book in the Oracle Book Store, and if you buy a copy try to track us down to get us to sign it. The best way to do this is to contact us on Twitter, leave a message at the Oracle Press stand, or you will find us hanging out at the OTN Lounge.

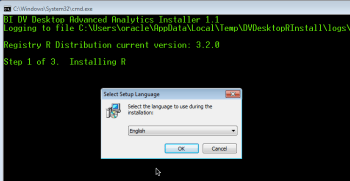

Oracle Data Visualisation Desktop : Enabling Advanced Analytics (R)

Oracle Data Visualization Desktop comes with all the typical features you have with Visual Analyzer, which is part of BICS, DVCS and OBIEE.

An additional install you may want to do is to install the R language for Oracle Data Visualization Desktop. This is required to enable the Advanced Analytics feature of the tool.

After installing Data Visualisation Desktop, when you open the Advanced Analytics section and try to add one of the Advanced Analytics graphing options, you will get an error message, as shown below.

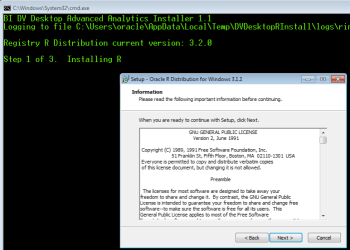

In Windows, click on the Start button, then go to Programs and then Oracle. In there you will see a menu item called Install Advanced Analytics, which installs Oracle R Distribution on your machine.

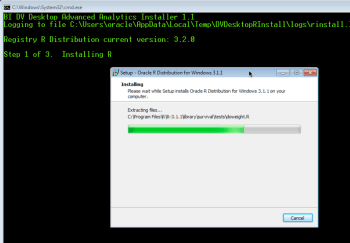

When you click on this menu option a new command line window will open and will proceed with the installation of Oracle R Distribution (in this case version 3.1.1, which is not the current version of Oracle R Distribution).

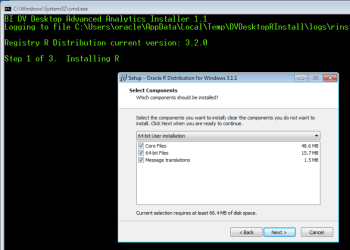

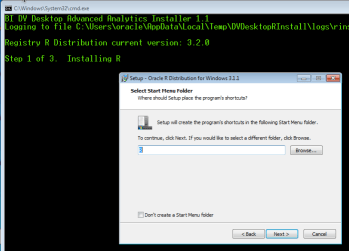

By accepting the defaults and clicking next, Oracle R Distribution will be installed. The following images will step you through the installation.

The final part of the installation is to download and install lots and lots of supporting R packages.

When these supporting R packages have been installed, you can now use the Advanced Analytics features of Oracle Data Visualisation Desktop.

If you had the tool open during this installation you will need to close/shutdown the tool and restart it.

Oracle Data Visualisation : Setting up a Connection to your DB

Using Oracle Data Visualisation Desktop is the same as, or very similar to, using the Cloud version of the tool.

In this blog post I will walk you through the steps you need to perform the first time you use the Oracle Data Visualization client tool and to quickly create some visualizations.

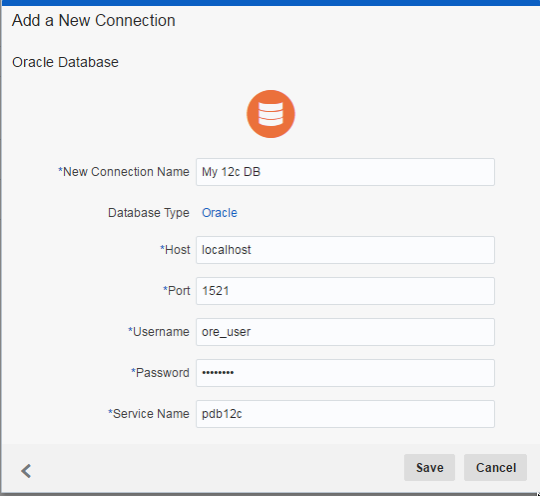

Step 1 – Create a Connection to your Oracle DB and Schema

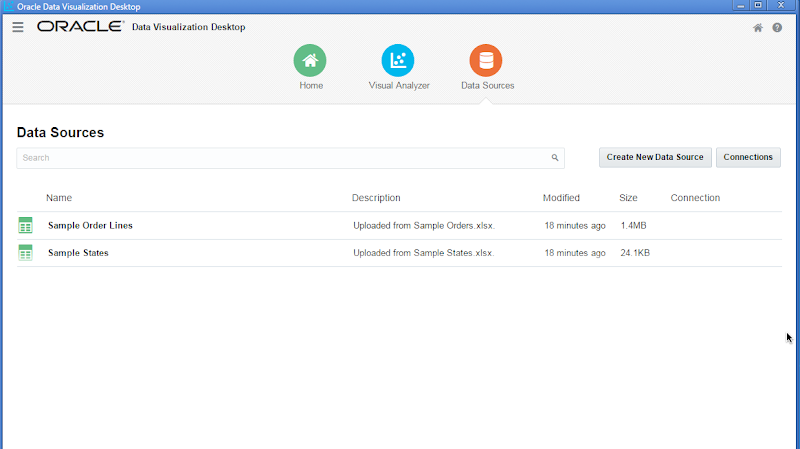

After opening the Oracle Data Visualisation client tool, click on the Data Sources icon that is displayed along the top of the screen.

Then click on the ‘Connection’ button. You need to create a connection to your schema in the Oracle Database. Other options exist to create a connection to files, etc., but for this example click on ‘From Database’.

Enter your connection details for your schema in your Oracle Database. This is exactly the same kind of information that you would enter when creating a SQL Developer connection. Then click the Save button.
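To make explicit which pieces of information the connection needs, the same host, port and service name you would give SQL Developer can be written as an Oracle JDBC thin-driver URL. This is a minimal sketch only: Data Visualisation Desktop asks for the individual fields and does not require you to type this URL, and the values below (`localhost`, `1521`, `ORCLPDB1`, `analytics_user`) are hypothetical placeholders.

```scala
// Minimal sketch: the connection details the dialog asks for, expressed as an
// Oracle JDBC thin-driver URL. All values used below are hypothetical.
final case class OracleConnectionDetails(
    host: String,
    port: Int,
    serviceName: String,
    username: String
) {
  require(port > 0 && port < 65536, s"invalid port: $port")

  // Service-name form of the thin URL: jdbc:oracle:thin:@//host:port/service
  def jdbcUrl: String = s"jdbc:oracle:thin:@//$host:$port/$serviceName"
}

object ConnectionExample {
  def main(args: Array[String]): Unit = {
    val details = OracleConnectionDetails("localhost", 1521, "ORCLPDB1", "analytics_user")
    println(s"${details.username} @ ${details.jdbcUrl}")
  }
}
```

If your database is identified by a SID rather than a service name, the thin URL takes the form `jdbc:oracle:thin:@host:port:SID` instead.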

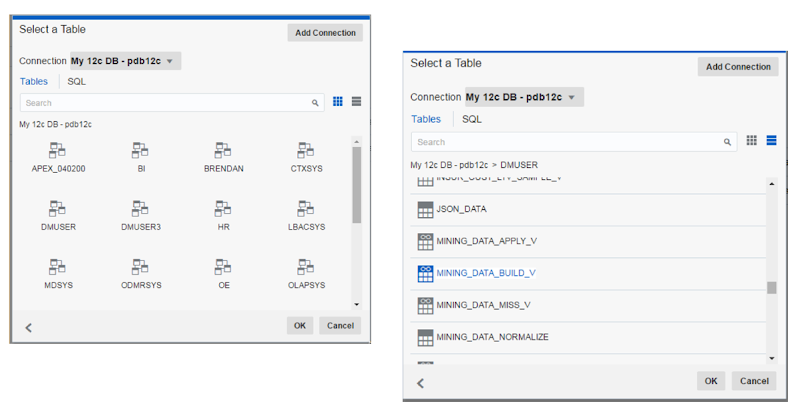

Step 2 – Defining the data source for your analytics

You need to select the tables or views that you are going to use to build up your data visualizations. In the Data Sources section of the tool (see the first image above), click on the ‘Create New Data Source’ button and then select ‘From Database’. The following window (or one like it) will be displayed. This will contain all the schemas in the DB that you have some privileges on. You may see just your schema, or others as well.

Select your schema from the list. The window will be updated to display the tables and views in the schema. You can change the layout from icon based to being a list. You can also define a query that contains the data you want to analyse using the SQL tab.

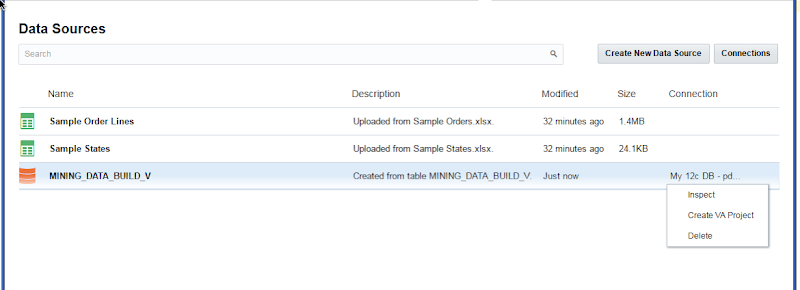

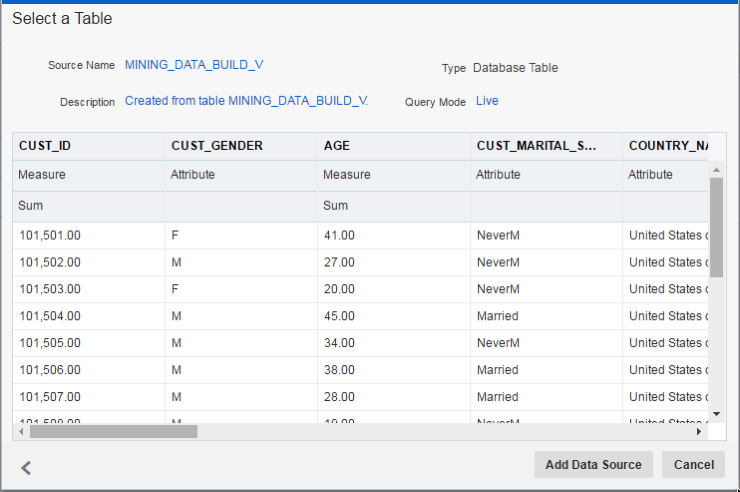

When you have selected the table or view to use, or have defined the SQL for the data set, a window will be displayed showing you a sample of the data. You can use this window to quickly perform a visual inspection of the data to make sure it is the data you want to use.

The data source you have defined will now be listed in the data sources part of the tool. You can click on the options icon (3 vertical dots) on the right hand side of the data source and then select Create VA Project from the pop-up menu.

Step 3 – Create your Oracle Data Visualization project

When the Visual Analyser part of the tool opens, you can click and drag the columns from your data set on to the workspace. The data will be automatically formatted and displayed on the screen. You can also quickly generate lots of graphics and again click and drag the columns on the graphics to define various elements.

DAMA Ireland: Data Protection Event 5th May

We have our next DAMA Ireland event/meeting coming up on the 5th May, and will be in our usual venue of Bank of Ireland, 1 Grand Canal Dock.

Our meeting will cover two topics. The main topic for the evening will be Data Protection, presented by Daragh O’Brien (MD of Castlebridge Associates). Daragh is also the Global Data Privacy Officer for DAMA International. He has also been involved in contributing to the next version of the DMBOK, which is coming out very soon.

We also have Katherine O’Keefe, who will be talking about the DAMA Certified Data Management Professional (CDMP) certification. Katherine has been working with DAMA International on updates to the new CDMP certification.

To check out more details of the event/meeting, click on the Eventbrite image below. This will take you to the event/meeting details and where you can also register for the meeting.

Cost : FREE

When : 5th May

Where : Bank of Ireland, 1 Grand Canal Dock

PS: Please register in advance of the meeting, as we would like to know who and how many are coming, to allow us to make any necessary arrangements.

Spark versus Flink

Spark is an open source Apache project that provides a framework for multi-stage in-memory analytics. Spark is commonly deployed on the Hadoop platform and can interface with Cassandra, OpenStack Swift, Amazon S3, Kudu and HDFS. Spark comes with a suite of analytic and machine learning algorithms, allowing you to perform a wide variety of analytics on your distributed Hadoop platform. This allows you to generate data insights, data enrichment and data aggregations for storage on Hadoop, and for use by other, more mainstream analytics as part of your traditional infrastructure. Spark is primarily aimed at batch type analytics, but it does come with capabilities for streaming data. When data needs to be analysed it is loaded into memory and the results are then written back to Hadoop.

Flink is another open source Apache project that provides a platform for distributed stream and/or batch data processing. Similarly to Spark, Flink comes with a set of APIs that allow for easy integration with Java, Scala and Python. Its machine learning algorithms have been tuned to work with streaming data but can also work with batch-oriented data. Flink is focused on being able to process streaming data; it runs on YARN, works with HDFS, can be easily integrated with Kafka and can connect to various other data storage systems.

Although both Spark and Flink can process streaming data, when you examine the underlying architecture of these tools you will find that Flink is more specifically focused on streaming data and can process this data more efficiently.
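The architectural difference can be illustrated with a toy model. Spark's streaming support has traditionally worked by collecting incoming records into small micro-batches and running each batch through its batch engine, whereas Flink's runtime processes each record as it arrives. The sketch below is a plain Scala, in-memory simulation of that difference and uses no Spark or Flink APIs; the event values and batch size are made up. All three approaches end at the same total, but they differ in how often, and therefore how soon, an updated result becomes available.

```scala
// Toy in-memory simulation (no Spark or Flink APIs): three ways of keeping a
// running total over the same sequence of incoming events.
object BatchVersusStreaming {

  // Batch: the whole data set is available up front; one result at the end.
  def batchTotal(events: Seq[Int]): Int = events.sum

  // Micro-batch: events are grouped into small batches and the running total
  // is emitted once per batch.
  def microBatchTotals(events: Seq[Int], batchSize: Int): List[Int] = {
    require(batchSize > 0, "batchSize must be positive")
    events.grouped(batchSize).toList.scanLeft(0)((acc, batch) => acc + batch.sum).tail
  }

  // Record-at-a-time: the running total is updated and emitted after every event.
  def perRecordTotals(events: Seq[Int]): List[Int] =
    events.toList.scanLeft(0)(_ + _).tail

  def main(args: Array[String]): Unit = {
    val events = Seq(1, 2, 3, 4, 5)
    println(s"batch:       ${batchTotal(events)}")
    println(s"micro-batch: ${microBatchTotals(events, batchSize = 2)}")
    println(s"per-record:  ${perRecordTotals(events)}")
  }
}
```

With five events and a batch size of 2, the micro-batch version produces three intermediate results while the per-record version produces five, which is the latency trade-off the comparison above is pointing at.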

There have been some suggestions in recent weeks and months that Spark is no longer the tool of choice for analytics on Hadoop, and that everyone should instead be using Flink or something else. Perhaps it is too early to say this. You need to consider the number of companies that have invested significant amounts of time and resources building and releasing products on top of Spark. These two products provide similar-ish functionality, but each is designed to process data in a different manner. So it really depends on what kind of data you need to process: whether it is bulk or streaming will determine which of these products you should use. In some environments it may be suitable to use both.

Will these tools replace the more traditional advanced analytics tools in organisations? The simple answer is no, they won’t replace them. Instead they will complement each other, and if you have a Hadoop environment you will probably end up using Spark to process the data on Hadoop. For all other advanced analytics that are part of your more traditional environments, you will use the traditional advanced analytics tools from the more mainstream vendors.

My OOW15 is not over

It seems to be traditional for people to write a blog post that summarises their Oracle Open World (OOW) experience. Well, here is my attempt, and it really only touches on a fraction of what I did at OOW, which was one of the busiest OOWs I’ve experienced.

It all began back on Wednesday 21st October when I began my journey. Yes, that is 9 days ago, a long long 9 days ago. I will be glad to get home.

The first 2 days here in San Francisco were spent down at Oracle HQ, where the traditional Oracle ACE Directors briefings are held. I’m one of the small (but growing) number of ACEDs and it is an honour to take part in the programme. The ACE Director briefings are 2 days of packed (did I say they are packed) full-on talks by the leading VPs, SVPs, EVPs and especially Thomas Kurian. Yes, we had Thomas Kurian come in to talk to us for 60-90 minutes of pure gold. We get told all the things that Oracle is going to release or announce at OOW and for the next few months and beyond. One of the things I was particularly interested in was the 12.2 database stuff.

Unfortunately we are under NDA for all of the stuff we were told, until Oracle announce it themselves.

On the Thursday night a few of us met up with Bryn Llewellyn (the godfather of PL/SQL) for a meal. Here is a photo to prove it. It was a lively dinner with some “interesting” discussions.

On the Friday we all transferred hotels into a hotel beside Union Square.

We had the Saturday free, and I’m struggling to remember what I actually did. But it did consist of going out and about around San Francisco. Later that evening there was a meetup arranged by “That” Jeff Smith in a local bar.

Sunday began with me walking across (and back) the Golden Gate Bridge with a few other ACEDs and ACEs.

The rest of the Sunday was spent at the User Groups Forum. I was giving 2 presentations. The first presentation was part of the “Another 12 things about Oracle 12c” session. For this session there were 15 presenters and we each had 7 minutes to talk about a topic. Mine was on Oracle R Enterprise. It was an almost full room, which was great.

Then I had my second presentation right afterwards and for that we had a full room. I was co-presenting with Tony Heljula and we were talking about some of the projects we have done using Oracle Advanced Analytics.

After that my conference duties were done and I got to enjoy the rest of the conference.

Monday and Tuesday were a bit mental for me. I was basically in sessions from 8am until 6pm without a break. There were lots of really good topics, but unfortunately there were a couple of presentations that were total rubbish: the title and abstract had no relevance to what was covered, and even the presentation itself was rubbish. There were only a couple of these.

Wednesday was the same as Monday and Tuesday but this time I had time for lunch.

As usual the evenings are taken up with lots of socials and although I had great plans to go to lots of them, I failed and only got to one or two each evening.

On Wednesday night we ended up out at Treasure Island to be entertained by Elton John and Beck, and then back into Union Square for another social.

Thursday was very quiet as things started to wind down, with OOW finishing up at 3pm. A few of us went down to Fisherman’s Wharf and Pier 39 for a wander around and a meal. Here are some photos from the restaurant.

Friday has finally arrived and it is time to go home. OTN are very generous and put on a limo for the ACEDs to bring us out to the airport.

Go to www.oralytics.com

This WordPress site is only a temporary version of my main blog. To get all my content, go to my official site at

www.oralytics.com
