Oracle Data Mining 11g R2

2 Day Oracle Data Miner training course by Oracle University

In the past few days Oracle University has advertised a new 2-day instructor-led training course on Oracle Data Miner.

There are no advertised dates or locations for this course yet. I suppose it will depend on the level of interest in the product.

Here is the overview from the Oracle University web page:

“In this course, students review the basic concepts of data mining and learn how [to] leverage the predictive analytical power of the Oracle Database Data Mining option by using Oracle Data Miner 11g Release 2. The Oracle Data Miner GUI is an extension to Oracle SQL Developer 3.0 that enables data analysts to work directly with data inside the database.

The Data Miner GUI provides intuitive tools that help you to explore the data graphically, build and evaluate multiple data mining models, apply Oracle Data Mining models to new data, and deploy Oracle Data Mining’s predictions and insights throughout the enterprise. Oracle Data Miner’s SQL APIs automatically mine Oracle data and deploy results in real-time. Because the data, models, and results remain in the Oracle Database, data movement is eliminated, security is maximized and information latency is minimized”

Click on the following link to access the details of the training course

http://education.oracle.com/pls/web_prod-plq-dad/db_pages.getCourseDesc?dc=D73528GC10


ODM–Attribute Importance using PL/SQL API

In a previous blog post I explained what attribute importance is and how it can be used in the Oracle Data Miner tool.

In this post I want to show you how to perform the same task using the ODM PL/SQL API.

The ODM tool makes extensive use of the Automatic Data Preparation (ADP) function. ADP performs data transformations such as binning, normalization and outlier treatment, based on the requirements of each data mining algorithm. In addition to these transformations we can specify our own. We do this by creating a settings table that contains the settings and transformations we want the data mining algorithm to apply to the data.

ADP is automatically turned on when using the ODM tool in SQL Developer. This is not the case when using the ODM PL/SQL API. So before we can run the Attribute Importance function we need to turn on ADP.

Step 1 – Create the settings table

```sql
CREATE TABLE Att_Import_Mode_Settings (
  setting_name  VARCHAR2(30),
  setting_value VARCHAR2(30));
```

Step 2 – Turn on Automatic Data Preparation

```sql
BEGIN
  INSERT INTO Att_Import_Mode_Settings (setting_name, setting_value)
  VALUES (dbms_data_mining.prep_auto, dbms_data_mining.prep_auto_on);
  COMMIT;
END;
/
```

Step 3 – Run Attribute Importance

```sql
BEGIN
  DBMS_DATA_MINING.CREATE_MODEL(
    model_name          => 'Attribute_Importance_Test',
    mining_function     => DBMS_DATA_MINING.ATTRIBUTE_IMPORTANCE,
    data_table_name     => 'mining_data_build_v',
    case_id_column_name => 'cust_id',
    target_column_name  => 'affinity_card',
    settings_table_name => 'Att_Import_Mode_Settings');
END;
/
```

Step 4 – Select Attribute Importance results

```sql
SELECT *
FROM   TABLE(DBMS_DATA_MINING.GET_MODEL_DETAILS_AI('Attribute_Importance_Test'))
ORDER  BY rank;
```

```
ATTRIBUTE_NAME            IMPORTANCE_VALUE       RANK
------------------------- ---------------- ----------
HOUSEHOLD_SIZE                  .158945397          1
CUST_MARITAL_STATUS             .158165841          2
YRS_RESIDENCE                   .094052102          3
EDUCATION                       .086260794          4
AGE                             .084903512          5
OCCUPATION                      .075209339          6
Y_BOX_GAMES                     .063039952          7
HOME_THEATER_PACKAGE            .056458722          8
CUST_GENDER                     .035264741          9
BOOKKEEPING_APPLICATION         .019204751         10
CUST_INCOME_LEVEL                        0         11
BULK_PACK_DISKETTES                      0         11
OS_DOC_SET_KANJI                         0         11
PRINTER_SUPPLIES                         0         11
COUNTRY_NAME                             0         11
FLAT_PANEL_MONITOR                       0         11
```
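You may only want to carry the useful attributes forward. The following queries are a minimal sketch of how to keep just the attributes that received a non-zero importance value. They assume the Attribute_Importance_Test model created above. LISTAGG (new in 11g R2) turns the result into a comma-separated column list, which you could paste into a reduced build view.

```sql
-- Attributes with a non-zero importance, most important first
SELECT attribute_name, importance_value, rank
FROM   TABLE(DBMS_DATA_MINING.GET_MODEL_DETAILS_AI('Attribute_Importance_Test'))
WHERE  importance_value > 0
ORDER  BY rank;

-- The same attributes as a single comma-separated column list
SELECT LISTAGG(attribute_name, ', ') WITHIN GROUP (ORDER BY rank) AS useful_columns
FROM   TABLE(DBMS_DATA_MINING.GET_MODEL_DETAILS_AI('Attribute_Importance_Test'))
WHERE  importance_value > 0;
```

With the data used in this post, the first query returns the 10 attributes ranked 1 to 10 in the output above.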

ODM 11gR2–Attribute Importance

In a previous blog post I looked at Data Exploration using Oracle Data Miner 11gR2. This post builds on the steps illustrated there.

After we have explored the data we can identify attributes/features that have just one value, or mainly one value, etc. In most of these cases we know that these attributes will not contribute to the model build process.

In our example data set we have a small number of attributes. So it is easy to work through the data and get a good understanding of some of the underlying information that exists in the data. Some of these were pointed out in my previous blog post.

The reality is that our data sets can have a large number of attributes/features. This makes it very difficult, or nearly impossible, to work through all of them to understand which attributes are worth keeping in our data set and which do not contribute and should be removed.

Also, as our data evolves over time, the importance of each attribute will change, with some becoming less important and others more important.

The Attribute Importance node in Oracle Data Miner allows us to automate this work and can save us many hours, or even days.

The Attribute Importance node uses the Minimum Description Length (MDL) algorithm.

The following steps build on the work in my previous post and show how we can perform Attribute Importance on our data.

1. In the Component Palette, select Filter Columns from the Transforms list

2. Click on the workflow beside the Data node to add the Filter Columns node.

3. Link the Data node to the Filter Columns node. Right-click on the Data node, select Connect, move the mouse to the Filter Columns node and click. The link will be created.

4. Now we can configure the Attribute Importance settings. Click on the Filter Columns node. In the Property Inspector, click on the Filters tab.

– Click on the Attribute Importance Checkbox

– Set the Target Attribute from the drop down list. In our data set this is Affinity Card

5. Right click the Filter Columns node and select Run from the menu

After everything has run, a small green box with a tick mark appears on the Filter Columns node. To view the results, right-click on the Filter Columns node and select View Data from the menu. This lists the attributes in order of importance, along with their importance measure.

We see that a number of attributes have a zero value. The algorithm has worked out that these attributes would not be useful in the model build step. If we look back at the previous blog post, some of the attributes we identified there are also listed here with a zero value.

ODM 11gR2–Real-time scoring of data

In my previous posts I gave sample code of how you can use your ODM model to score new data.

Applying an ODM Model to new data in Oracle – Part 2

Applying an ODM Model to new data in Oracle – Part 1

The examples given in these previous posts were based on the new data being in a table.

In some scenarios you may not have the data you want to score in a table. For example, you may want to score data as it is being recorded, before it is committed to the database.

The format of the commands to use is:

```sql
PREDICTION(model_name USING attribute_list)

PREDICTION_PROBABILITY(model_name, target_value USING attribute_list)
```

So instead of USING * as in the previous blog posts, we can list the model attributes, and their values, that we want to use.

Using the same sample data that I used in my previous posts the command would be:

```sql
SELECT prediction(clas_decision_tree
         USING 20        AS age,
               'NeverM'  AS cust_marital_status,
               'HS-grad' AS education,
               1         AS household_size,
               2         AS yrs_residence,
               1         AS y_box_games) AS scored_value
FROM   dual;
```

```
SCORED_VALUE
------------
           0
```

```sql
SELECT prediction_probability(clas_decision_tree, 0
         USING 20        AS age,
               'NeverM'  AS cust_marital_status,
               'HS-grad' AS education,
               1         AS household_size,
               2         AS yrs_residence,
               1         AS y_box_games) AS probability_value
FROM   dual;
```

```
PROBABILITY_VALUE
-----------------
                1
```

So we get the same result as we got in our previous examples.

Depending on what data we have gathered, we may not have values for all of the attributes used in the model. In this case we can submit a subset of the values to the function and still get a result.

```sql
SELECT prediction(clas_decision_tree
         USING 20        AS age,
               'NeverM'  AS cust_marital_status,
               'HS-grad' AS education) AS scored_value2
FROM   dual;
```

```
SCORED_VALUE2
-------------
            0
```

```sql
SELECT prediction_probability(clas_decision_tree, 0
         USING 20        AS age,
               'NeverM'  AS cust_marital_status,
               'HS-grad' AS education) AS probability_value2
FROM   dual;
```

```
PROBABILITY_VALUE2
------------------
                 1
```

Again we get the same results.
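In an application, the values to score would usually arrive in variables rather than as literals. The function below is a minimal sketch of wrapping the subset query above so that it can be called with bind variables, for example before a new customer row is inserted. The function name score_new_customer and its parameters are hypothetical, and it assumes the CLAS_DECISION_TREE model used in these examples. Note that the USING clause of EXECUTE IMMEDIATE supplies the bind values, while the USING clause inside PREDICTION lists the model attributes.

```sql
-- Hypothetical helper: score one customer from values held in PL/SQL
-- variables, using only the attributes we have values for.
CREATE OR REPLACE FUNCTION score_new_customer (
  p_age                 IN NUMBER,
  p_cust_marital_status IN VARCHAR2,
  p_education           IN VARCHAR2
) RETURN NUMBER
IS
  l_prediction NUMBER;
BEGIN
  -- Bind values are passed positionally into the PREDICTION ... USING list
  EXECUTE IMMEDIATE
    'SELECT PREDICTION(clas_decision_tree
                       USING :age    AS age,
                             :status AS cust_marital_status,
                             :edu    AS education)
       FROM dual'
    INTO  l_prediction
    USING p_age, p_cust_marital_status, p_education;

  RETURN l_prediction;
END score_new_customer;
/

-- Usage
SELECT score_new_customer(20, 'NeverM', 'HS-grad') AS scored_value FROM dual;
```

Using bind variables rather than concatenated literals lets Oracle reuse the same parsed statement for every customer you score.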

ODM 11gR2–Using different data sources for Build and Testing a Model

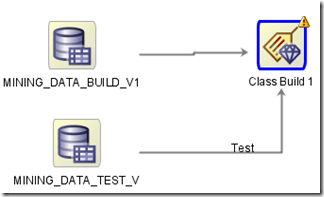

There are 2 ways to connect a data source to the Model Build node in Oracle Data Miner.

The typical method is to use a single data source that contains the data for both the build and testing stages of the Model Build node. Using this method you can specify what percentage of the data in the data source to use for the Build step, and the remaining records will be used for testing the model. The default is a 50:50 split, but you can change this to whatever percentage you think is appropriate (e.g. 60:40). The records will be split randomly into the Build and Test data sets.

The second way is to use one data source for building the model and a separate data source for testing it.

To do this you add a new data source (containing the test data set) to the Model Build node. ODM will assign a label (Test) to the connector for the second data source.

If the label was assigned incorrectly you can swap the data sources. To do this, right-click on the Model Build node and select Swap Data Sources from the menu.
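If you want to prepare the separate build and test data sources yourself, one option is a pair of views that split the data on a hash of the case id. The following is a sketch, not the method Oracle Data Miner uses internally. The view names are hypothetical. ORA_HASH(expr, 99) returns a bucket between 0 and 99, so the split is only approximately 60:40, but it is repeatable for a given CUST_ID.

```sql
-- Roughly 60% of the cases for building the model
CREATE OR REPLACE VIEW mining_data_build_60_v AS
SELECT *
FROM   mining_data_build_v
WHERE  ORA_HASH(cust_id, 99) < 60;

-- The remaining cases for testing the model
CREATE OR REPLACE VIEW mining_data_test_40_v AS
SELECT *
FROM   mining_data_build_v
WHERE  ORA_HASH(cust_id, 99) >= 60;
```

Each view can then be added as its own data source and connected to the Model Build node.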

Updating your ODM (11g R2) model in production

In my previous blog posts on creating an ODM model, I gave the details of how you can do this using the ODM PL/SQL API.

But at some point you will have a fairly stable environment. This means you will know which algorithm, and which corresponding settings, work best for your data.

At this point you should be able to re-create your ODM model in the production database. How often you do this depends on the number of new cases you gather, so you might update your ODM model daily, weekly, monthly, etc.

To update your model you will need to:

– Create a settings table for your model

– Create a new ODM model

– Rename your new ODM model to the production name

The following examples are based on the example data, model names, etc that I’ve used in my previous post.

Creating a Settings Table

The first step is to create a settings table for your algorithm. This will contain all the parameter settings needed to create the new model. You will have worked out these settings from your previous attempts at creating your models, and you will know which parameters and values work best.

```sql
-- Create the settings table
CREATE TABLE decision_tree_model_settings (
  setting_name  VARCHAR2(30),
  setting_value VARCHAR2(30));

-- Populate the settings table
-- Specify DT. By default, Naive Bayes is used for classification.
-- Specify ADP. By default, ADP is not used.
BEGIN
  INSERT INTO decision_tree_model_settings (setting_name, setting_value)
  VALUES (dbms_data_mining.algo_name,
          dbms_data_mining.algo_decision_tree);

  INSERT INTO decision_tree_model_settings (setting_name, setting_value)
  VALUES (dbms_data_mining.prep_auto, dbms_data_mining.prep_auto_on);

  COMMIT;
END;
/
```
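Any algorithm-specific parameters you settled on during development go into the same table. The block below is an illustrative sketch for the Decision Tree. The two values shown (Gini impurity and a maximum depth of 7) happen to be the Decision Tree defaults. They are included only to show the pattern, so replace them with the values your own experiments arrived at. Setting values are stored as strings, hence the TO_CHAR.

```sql
BEGIN
  -- Impurity metric used to choose splits
  INSERT INTO decision_tree_model_settings (setting_name, setting_value)
  VALUES (dbms_data_mining.tree_impurity_metric,
          dbms_data_mining.tree_impurity_gini);

  -- Maximum depth of the tree
  INSERT INTO decision_tree_model_settings (setting_name, setting_value)
  VALUES (dbms_data_mining.tree_term_max_depth, TO_CHAR(7));

  COMMIT;
END;
/
```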

Create a new ODM Model

We will need to use the DBMS_DATA_MINING.CREATE_MODEL procedure. In our example we will want to create a Decision Tree based on our sample data, which contains the previously generated cases and the new cases since the last model rebuild.

```sql
BEGIN
  DBMS_DATA_MINING.CREATE_MODEL(
    model_name          => 'Decision_Tree_Method2',
    mining_function     => dbms_data_mining.classification,
    data_table_name     => 'mining_data_build_v',
    case_id_column_name => 'cust_id',
    target_column_name  => 'affinity_card',
    settings_table_name => 'decision_tree_model_settings');
END;
/
```
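Before swapping the new model into production, it is worth checking that it was built with the settings you intended. This is a minimal sketch that queries the USER_MINING_MODEL_SETTINGS dictionary view for the model created above. The UPPER on both sides is there because the exact case in which the model name is stored is an assumption here.

```sql
-- Settings the new model was actually built with (INPUT = supplied by us,
-- DEFAULT = filled in by Oracle)
SELECT setting_name, setting_value, setting_type
FROM   user_mining_model_settings
WHERE  UPPER(model_name) = UPPER('Decision_Tree_Method2')
ORDER  BY setting_name;
```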

Rename your ODM model to production name

The model we created above does not have the name used by our production software, so we need to rename it to our production name.

But we need to be careful about when we do this. If you drop a model or rename a model when it is being used then you can end up with indeterminate results.

What I suggest is that you pick a time of day when your production software is not doing any data mining. Drop (or rename) the existing model, and then rename the new model to the production model name.

```sql
EXECUTE DBMS_DATA_MINING.DROP_MODEL('CLAS_DECISION_TREE');
```

and then

```sql
EXECUTE DBMS_DATA_MINING.RENAME_MODEL('Decision_Tree_Method2', 'CLAS_DECISION_TREE');
```
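If you would rather rename the existing model than drop it, so that you can fall back to it, a minimal sketch looks like this. It assumes the model names used in this post. CLAS_DECISION_TREE_OLD is a hypothetical backup name. The two renames are separate operations, so the quiet-time advice above still applies.

```sql
BEGIN
  -- Keep the current production model as a backup
  DBMS_DATA_MINING.RENAME_MODEL('CLAS_DECISION_TREE', 'CLAS_DECISION_TREE_OLD');
  -- Promote the newly built model to the production name
  DBMS_DATA_MINING.RENAME_MODEL('Decision_Tree_Method2', 'CLAS_DECISION_TREE');
END;
/

-- Once you are happy with the new model, remove the backup
EXECUTE DBMS_DATA_MINING.DROP_MODEL('CLAS_DECISION_TREE_OLD');
```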

Oracle Analytics Update & Plan for 2012

On Friday 16th December, Charlie Berger (Sr. Director, Product Management, Data Mining & Advanced Analytics) posted the following on the Oracle Data Mining forum on OTN.

“… soon you’ll be able to use the new Oracle R Enterprise (ORE) functionality. ORE is currently in beta and is targeted to go General Availability in the near future. ORE brings additional functionality to the ODM Option, which will then be renamed to the Oracle Advanced Analytics Option to reflect the significant adv. analytical functionality enhancements. ORE will allow R users to write R scripts and run them inside the database and eliminate and/or minimize data movement in/out of the DB. ORE will provide R to SQL transparency for SQL push-down to in-DB SQL and and expanding library of Oracle in-DB statistical functions. Packages that cannot be pushed down will be run in embedded R mode while the DB manages all data flows to the multiple R engines running inside the DB.

In January, we’ll open up a new OTN discussion forum specifically for Oracle R Enterprise focused technical discussions. Stay tuned.”

I’m looking forward to getting my hands on the new Oracle R Enterprise, in 2012. In particular I’m keen to see what additional functionality will be added to the Oracle Data Mining option in the DB.

So watch out for the rebranding to Oracle Advanced Analytics

Charlie – Any chance of an advance copy of ORE and related DB bits and bobs?

My UKOUG Presentation on ODM PL/SQL API

On Wednesday 7th Dec I gave my presentation at the UKOUG conference in Birmingham. The main topic of the presentation was on using the Oracle Data Miner PL/SQL API to implement a model in a production environment.

There was a good turn out considering it was the afternoon of the last day of the conference.

I asked the attendees about their experience of using the current and previous versions of the Oracle Data Mining tool. Only one of the attendees had used the pre-11g R2 version of the tool.

From my discussions with the attendees, it looks like they would have preferred an introduction/overview type presentation of the new ODM tool. I had submitted a presentation on this, but sadly it was not accepted. Not enough people had voted for it.

For next year, I will submit an introduction/overview presentation again, but I need more people to vote for it. So watch out for the vote stage next June and vote for it.

Here are the links to the presentation and the demo scripts (which I didn’t get time to run)

Demo Script 1 – Exploring and Exporting model

Demo Script 2 – Import, Dropping and Renaming the model. Plus Queries that use the model

Applying an ODM Model to new data in Oracle – Part 2

This is the second of a two-part blog posting on using an Oracle Data Mining model to apply it to, or score, new data. The first part looked at how you can use the DBMS_DATA_MINING.APPLY procedure to score data in a batch-type process.

This second part looks at how you can apply or score the new data, using our ODM model, in a real-time mode, scoring a single record at a time.

PREDICTION Function

The PREDICTION SQL function can be used in many different ways. The following examples illustrate the main ways of using it. Again we will be using the same data set, held in our NEW_DATA_TO_SCORE table.

The syntax of the function is:

```sql
PREDICTION(model_name USING attribute_list)
```

Example 1 – Real-time Prediction Calculation

In this example we will select a record and calculate its predicted value. The function will return the predicted value with the highest probability

```sql
SELECT cust_id, prediction(clas_decision_tree using *)
FROM   NEW_DATA_TO_SCORE
WHERE  cust_id = 103001;
```

```
   CUST_ID PREDICTION(CLAS_DECISION_TREEUSING*)
---------- ------------------------------------
    103001                                    0
```

So the predicted class value is 0 (zero), which has a higher probability than a class value of 1.

We can check this result against the result produced by the DBMS_DATA_MINING.APPLY procedure (see previous blog post).

```
SQL> select * from new_data_scored
  2  where cust_id = 103001;

   CUST_ID PREDICTION PROBABILITY
---------- ---------- -----------
    103001          0           1
    103001          1           0
```

Here we can see that the class value of 0 has a probability of 1 (100%) and the class value of 1 has a probability of 0 (0%).

Example 2 – Selecting top 10 Customers with Class value of 1

For this we are selecting from our NEW_DATA_TO_SCORE table. We want to find the records that have a predicted class value of 1, and we only want to return the first 10 of these. Note that ROWNUM is applied as rows are found, so this returns the first 10 matching records rather than the 10 with the highest probability.

```sql
SELECT cust_id
FROM   NEW_DATA_TO_SCORE
WHERE  PREDICTION(clas_decision_tree using *) = 1
AND    rownum <= 10;
```

```
   CUST_ID
----------
    103005
    103007
    103010
    103014
    103016
    103018
    103020
    103029
    103031
    103036
```
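To get the 10 customers with the highest probability of a class value of 1, the rows have to be ordered before ROWNUM is applied. This means the query needs an inline view, because 11g has no FETCH FIRST clause. The following is a sketch against the same table and model. The column alias prob_1 is just an illustrative name.

```sql
SELECT cust_id, prob_1
FROM  (SELECT cust_id,
              PREDICTION_PROBABILITY(clas_decision_tree, 1 USING *) AS prob_1
       FROM   new_data_to_score
       WHERE  PREDICTION(clas_decision_tree USING *) = 1
       ORDER  BY prob_1 DESC)   -- sort first...
WHERE  rownum <= 10;            -- ...then keep the top 10
```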

Example 3 – Selecting records based on Prediction value and Probability

For this example we want to find out which countries the customers come from where the prediction is 0 (won't take up the offer) and the probability of this is 1 (100%). This example introduces the PREDICTION_PROBABILITY function, which allows us to use the probability strength of the prediction.

```sql
select country_name, count(*)
from   new_data_to_score
where  prediction(clas_decision_tree using *) = 0
and    prediction_probability(clas_decision_tree using *) = 1
group  by country_name
order  by count(*) asc;
```

```
COUNTRY_NAME               COUNT(*)
------------------------ ----------
Brazil                            1
China                             1
Saudi Arabia                      1
Australia                         1
Turkey                            1
New Zealand                       1
Italy                             5
Argentina                        12
United States of America        293
```

The examples given above are only the basic uses of the PREDICTION function. There are a number of other functions, including PREDICTION_COST, PREDICTION_SET and PREDICTION_DETAILS. Examples of these will be covered in a later blog post.
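As a small preview, PREDICTION_SET returns every class with its probability as a collection, which can be unnested with TABLE(). The sketch below, assuming the same model and table, produces one row per class for customer 103001. This mirrors the two rows per customer that the APPLY procedure writes to NEW_DATA_SCORED in Part 1.

```sql
SELECT t.cust_id, s.prediction, s.probability
FROM  (SELECT cust_id,
              PREDICTION_SET(clas_decision_tree USING *) AS pset
       FROM   new_data_to_score
       WHERE  cust_id = 103001) t,
       TABLE(t.pset) s          -- one row per class in the collection
ORDER  BY s.probability DESC;
```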

Applying an ODM Model to new data in Oracle – Part 1

This is the first of a two-part blog posting on using an Oracle Data Mining model to apply it to, or score, new data. This first part looks at how you can score data using the DBMS_DATA_MINING.APPLY procedure in a batch-type process.

The second part will be posted in a couple of days and will look at how you can apply or score the new data, using our ODM model, in a real-time mode, scoring a single record at a time.

DBMS_DATA_MINING.APPLY

Instead of applying the model to data as it is captured, you may need to apply a model to a large number of records at the same time. To perform this bulk processing we can use the APPLY procedure that is part of the DBMS_DATA_MINING package. The format of the procedure is

```sql
DBMS_DATA_MINING.APPLY (
  model_name          IN VARCHAR2,
  data_table_name     IN VARCHAR2,
  case_id_column_name IN VARCHAR2,
  result_table_name   IN VARCHAR2,
  data_schema_name    IN VARCHAR2 DEFAULT NULL);
```

| Parameter Name | Description |
| --- | --- |
| Model_Name | The name of your data mining model |
| Data_Table_Name | The source data for the model. This can be a table or view. |
| Case_Id_Column_Name | The attribute that gives uniqueness to each record. This could be the primary key or, if the primary key contains more than one column, a new attribute is needed. |
| Result_Table_Name | The name of the table where the results will be stored |
| Data_Schema_Name | The schema name for the source data |

The main condition for applying the model is that the source table (DATA_TABLE_NAME) needs to have the same structure as the table that was used when creating the model.

Also, the data needs to be preprocessed in the same way as the training data, to ensure that the data in each attribute/feature has the same formatting.

When you use the APPLY procedure it does not update the original data/table, but creates a new table (RESULT_TABLE_NAME) with a structure that is dependent on what the underlying DM algorithm is. The following gives the Result Table description for the main DM algorithms:

For Classification algorithms:

```
case_id      VARCHAR2/NUMBER
prediction   NUMBER/VARCHAR2   -- depending on the target data type
probability  NUMBER
```

For Regression:

```
case_id      VARCHAR2/NUMBER
prediction   NUMBER
```

For Clustering:

```
case_id      VARCHAR2/NUMBER
cluster_id   NUMBER
probability  NUMBER
```

Example / Case Study

My last few blog posts on ODM have covered most of the APIs for building and transferring models. The following code uses the same data and models as those posts to illustrate how we can use the DBMS_DATA_MINING.APPLY procedure to perform a bulk scoring of data.

In my previous post we used the EXPORT and IMPORT procedures to move a model from one database (Test) to another database (Production). The following examples use the model in Production to score new data. I have set up a sample of data (NEW_DATA_TO_SCORE) from the SH schema using the same set of attributes as was used to create the model (MINING_DATA_BUILD_V). This data set contains 1,500 records.

```
SQL> desc NEW_DATA_TO_SCORE
 Name                     Null?    Type
 ------------------------ -------- ------------
 CUST_ID                  NOT NULL NUMBER
 CUST_GENDER              NOT NULL CHAR(1)
 AGE                               NUMBER
 CUST_MARITAL_STATUS               VARCHAR2(20)
 COUNTRY_NAME             NOT NULL VARCHAR2(40)
 CUST_INCOME_LEVEL                 VARCHAR2(30)
 EDUCATION                         VARCHAR2(21)
 OCCUPATION                        VARCHAR2(21)
 HOUSEHOLD_SIZE                    VARCHAR2(21)
 YRS_RESIDENCE                     NUMBER
 AFFINITY_CARD                     NUMBER(10)
 BULK_PACK_DISKETTES               NUMBER(10)
 FLAT_PANEL_MONITOR                NUMBER(10)
 HOME_THEATER_PACKAGE              NUMBER(10)
 BOOKKEEPING_APPLICATION           NUMBER(10)
 PRINTER_SUPPLIES                  NUMBER(10)
 Y_BOX_GAMES                       NUMBER(10)
 OS_DOC_SET_KANJI                  NUMBER(10)

SQL> select count(*) from new_data_to_score;

  COUNT(*)
----------
      1500
```

The next step is to run the DBMS_DATA_MINING.APPLY procedure. The parameters that we need to feed into this procedure are:

| Parameter Name | Value |
| --- | --- |
| Model_Name | CLAS_DECISION_TREE (we imported this model from our test database) |
| Data_Table_Name | NEW_DATA_TO_SCORE |
| Case_Id_Column_Name | CUST_ID (this is the PK) |
| Result_Table_Name | NEW_DATA_SCORED (a new table that will be created, containing the prediction and probability) |

The NEW_DATA_SCORED table will contain 2 records for each record in the source data (NEW_DATA_TO_SCORE). For each record in NEW_DATA_TO_SCORE we will have one record for each of the target values (0 or 1), with the probability for that target value. So for our NEW_DATA_TO_SCORE table, which contains 1,500 records, we will get 3,000 records in the NEW_DATA_SCORED table.

To apply the model to the new data we run:

```sql
BEGIN
  dbms_data_mining.apply(
    model_name          => 'CLAS_DECISION_TREE',
    data_table_name     => 'NEW_DATA_TO_SCORE',
    case_id_column_name => 'CUST_ID',
    result_table_name   => 'NEW_DATA_SCORED');
END;
/
```

This takes 1 second to run on my laptop, so this apply/scoring of new data is really quick.

The new table NEW_DATA_SCORED has the following description

```
SQL> desc NEW_DATA_SCORED
 Name                     Null?    Type
 ------------------------ -------- ------
 CUST_ID                  NOT NULL NUMBER
 PREDICTION                        NUMBER
 PROBABILITY                       NUMBER

SQL> select count(*) from NEW_DATA_SCORED;

  COUNT(*)
----------
      3000
```

We can now look at the prediction and the probabilities

```
SQL> select * from NEW_DATA_SCORED where rownum <= 12;

   CUST_ID PREDICTION PROBABILITY
---------- ---------- -----------
    103001          0           1
    103001          1           0
    103002          0  .956521739
    103002          1  .043478261
    103003          0  .673387097
    103003          1  .326612903
    103004          0  .673387097
    103004          1  .326612903
    103005          1  .767241379
    103005          0  .232758621
    103006          0           1
    103006          1           0

12 rows selected.
```
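Because NEW_DATA_SCORED holds one row per customer per class, you will often want just the most probable class for each customer. This is a minimal sketch using the ROW_NUMBER analytic function. If two classes have exactly the same probability, which of them is kept is arbitrary.

```sql
SELECT cust_id, prediction, probability
FROM  (SELECT cust_id, prediction, probability,
              ROW_NUMBER() OVER (PARTITION BY cust_id
                                 ORDER BY probability DESC) AS rn
       FROM   new_data_scored)
WHERE  rn = 1                -- the highest-probability class per customer
ORDER  BY cust_id;
```

For the data above this returns one row for each of the 1,500 customers.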

My UKOUG Conference 2011 Schedule

The UKOUG conference will be in a couple of weeks. I have my flights and hotel booked, and I’ve just finished selecting my agenda of presentations. I really enjoy this conference as it serves many purposes including, finding new directions Oracle is taking, new product features, some upskilling/training, confirming that the approaches that I have been using on projects are valid, getting lots of hints and tips, etc.

One thing that I always try to do, and that I strongly encourage everyone (in particular first timers) to do, is to go to one session every day on a topic or product that you know (nearly) nothing about. You might discover that you know more than you think, or you may learn something new that can be fed into a project on your return or over the next 12 months.

My agenda for the conference currently looks very busy, and in between these sessions there is the exhibition hall, meetings with old and new friends, meetings with product/business unit managers, asking people to write articles for Oracle Scene, checking out possible presenters to come to Ireland for our conference in March 2012, etc. Then there is my presentation on the Wednesday afternoon.

Sunday

I’ll miss most of the Oak Table event on the Sunday but I hope to make it in time for

16:40-17:30 : Performance & High Availability Panel Session

Monday

9:20-9:50 : Keynote by Mark Sunday, Oracle (H1)

10:00-10:45 : The Future of BI & Oracle roadmap, Mike Durran, Oracle (H5)

11:05-12:05 : Implementing Interactive Maps with OBIEE 11g, Antony Heljula, Peak Indicators (H10A)

12:15-13:15 : OBI 11g Analysis & Reporting New Features, Mark Rittman (8A)

14:30-15:15 : Master Data Management – What is it & how to make it work – Robert Barnett, Hub Solutions Designs (H10A)

16:20-17:35 : Dummies Guide to Oracle ADF, Grant Ronald, Oracle, (Media Suite)

16:35-18:30 : The DB Time Performance Method, Graham Wood, Oracle (H8A)

17:45-18:30 : Performance & Stability with Oracle 11g SQL Plan Management, Doug Burns (H1)

17:45-18:30 : Experiences in Virtualization, Michael Doherty (H10A)

19:45-20:45 : Exhibition Welcome Drinks

20:45-Late : Focus Pubs

Tuesday

9:00-11:00 : Next Generation BI Architectures Masterclass, Andrew Bond, Oracle (H10B)

10:10-10:55 : Who’s afraid of Analytic Functions, Alex Nuijten, Maxima (H5)

11:15-12:15 : Analysing Your Data with Analytic Functions, Carl Dudley, (H9)

11:25-13:25 : Using a Physical Standby to Minimize Downtime for DB Release or Server Change, Michael Abbey, Pythian (Media Suite)

14:40-15:25 : How not to make the headlines, Mark Clewett, Hitachi (H10A)

14:40-15:25 : APEX Back to Basics, Paul Broughton, APEX Evangelists (H9)

15:35-16:20 : Can People be identified in the database, Pete Finnigan (H1)

16:40-18:35 : OTN Hands-on Workshop, Todd Trichler, Oracle (H8A)

17:50-18:35 : SQL Developer Data Modeler as a replacement for Oracle Designer, Paul Bainbridge, Fujitsu, (H8B)

18:45-19:45 : Keynote : Future of Enterprise Software and Oracle, Ray Wang, Constellation Research (H1)

20:00-Late : Evening Social & Networking

Wednesday

9:00-10:00 : Oracle 11g Database: Automatic Parallelism, Joel Goodman, Oracle (H9)

9:00-10:00 : Big Data: Learn how to predict the future, Keith Laker, Oracle (H8B)

10:10-10:55 : All about indexes – What to index, when and how, Mark Bobak, ProQuest (H5)

11:20-12:30 : Using Application Express to Build Highly Accessible Products, Anthony Rayner, Oracle (H8A)

12:30-13:30 : Practical uses for APEX Dictionary, John Scott, APEX Evangelists (H8A)

15:20-16:05 : How to deploy your Oracle Data Miner 11g R2 Workflows in a Live Environment – Me (H7B)

16:15-17:00 : Next Generation Data Warehousing, Kulvinder Hari, Oracle (H8A)

16:15-17:00 : Beyond RTFM and WTF Message Moments. Introducing a new standard: Oracle Fusion Applications User Assistance, Ultan O’Broin (Executive Room 7)

I know I have some overlapping sessions, but I will decide on the day which of these I will attend.

As you can see, I will mainly be following the BI stream, with a few sessions from the Database and Development streams too.

This year there is a smartphone app to help us organise our agenda, meetings, etc. The only downside is that the app does not import the agenda that I created on the website, so I have to do it again. Maybe next year they will have an import agenda feature.

ODM–PL/SQL API for Exporting & Importing Models

In a previous blog post I talked about how you can take a copy of a workflow developed in Oracle Data Miner, and load it into a new schema.

When your data mining project gets to a mature stage and you need to productionalise the data mining process and model updates, you will need to use a different set of tools.

As you gather more and more data and cases, you will be updating/refreshing your models to reflect this new data. The new, updated data mining model needs to be moved from the development/test environment to the production environment. As with all things in IT, we would like to automate this updating of the model in production.

There are a number of database features and packages that we can use to automate the update and it involves the setting up of some scripts on the development/test database and also on the production database.

These steps include:

- Creation of a directory on the development/test database

- Exporting of the updated Data Mining model

- Copying of the exported Data Mining model to the production server

- Removing the existing Data Mining model from production

- Importing of the new Data Mining model.

- Rename the imported model to the standard name

The DBMS_DATA_MINING PL/SQL package has two procedures that allow us to export and import models. These procedures are an API to Oracle Data Pump. The procedure to export a model is DBMS_DATA_MINING.EXPORT_MODEL and the procedure to import a model is DBMS_DATA_MINING.IMPORT_MODEL. Their parameters are what you would expect to use with Data Pump directly, but have been tailored for data mining models.

Let's start by listing the models that we have in our development/test schema:

```
SQL> connect dmuser2/dmuser2
Connected.
SQL> SELECT model_name FROM user_mining_models;

MODEL_NAME
------------------------------
CLAS_DT_1_6
CLAS_SVM_1_6
CLAS_NB_1_6
CLAS_GLM_1_6
```

Create/define the directory on the server where the models will be exported to.

```sql
CREATE OR REPLACE DIRECTORY DataMiningDir_Exports AS 'c:\app\Data_Mining_Exports';
```

The schema you are using will need to have the CREATE ANY DIRECTORY privilege.
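The grants involved might look like the sketch below, run as a suitably privileged user such as SYSTEM. It assumes the dmuser2 schema and the directory created above. As an alternative to CREATE ANY DIRECTORY, the DBA can create the directory and grant access to it. Keep in mind that the directory object must also exist in the production database, and the schema that imports the model there needs the same READ/WRITE access.

```sql
-- Either let the data mining schema create its own directories...
GRANT CREATE ANY DIRECTORY TO dmuser2;

-- ...or have the DBA create the directory and grant access to it
GRANT READ, WRITE ON DIRECTORY DataMiningDir_Exports TO dmuser2;
```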

Now we can export our model. In this example we are going to export the Decision Tree model (CLAS_DT_1_6).

DBMS_DATA_MINING.EXPORT_MODEL procedure

The procedure has the following structure:

```sql
DBMS_DATA_MINING.EXPORT_MODEL (
  filename     IN VARCHAR2,
  directory    IN VARCHAR2,
  model_filter IN VARCHAR2 DEFAULT NULL,
  filesize     IN VARCHAR2 DEFAULT NULL,
  operation    IN VARCHAR2 DEFAULT NULL,
  remote_link  IN VARCHAR2 DEFAULT NULL,
  jobname      IN VARCHAR2 DEFAULT NULL);
```

If we wanted to export all the models into a file called Exported_DM_Models, we would run:

```sql
EXECUTE DBMS_DATA_MINING.EXPORT_MODEL('Exported_DM_Models', 'DATAMININGDIR_EXPORTS');
```

If we just wanted to export our Decision Tree model to file Exported_CLASS_DT_Model, we would run:

```sql
EXECUTE DBMS_DATA_MINING.EXPORT_MODEL('Exported_CLASS_DT_Model', 'DATAMININGDIR_EXPORTS', 'name IN (''CLAS_DT_1_6'')');
```

DBMS_DATA_MINING.DROP_MODEL procedure

Before you can load the new, updated data mining model into your production database, you need to drop the existing model. This must be done when the model is not in use, so it would be advisable to schedule the dropping of the model during a quiet time, such as before or after the nightly backups/processes.

```sql
EXECUTE DBMS_DATA_MINING.DROP_MODEL('CLAS_DECISION_TREE', TRUE);
```

DBMS_DATA_MINING.IMPORT_MODEL procedure

Warning : When importing the data mining model, you need to import into a tablespace that has the same name as the tablespace in the development/test database. If the USERS tablespace is used in the development/test database, then the model will be imported into the USERS tablespace in the production database.

Hint : Create a DATAMINING tablespace in your development/test and production databases. This tablespace can be used solely for data mining purposes.
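Setting up such a tablespace could look like the sketch below, run as a DBA in both databases. The datafile path and size are hypothetical, so adjust them for your servers. In production, alter the schema that will own the imported model (dmuser3 in the import example below) rather than dmuser2.

```sql
-- Hypothetical datafile location and size
CREATE TABLESPACE datamining
  DATAFILE 'c:\app\oradata\datamining01.dbf' SIZE 500M AUTOEXTEND ON;

-- Make it the default tablespace of the data mining schema
ALTER USER dmuser2
  DEFAULT TABLESPACE datamining
  QUOTA UNLIMITED ON datamining;
```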

To import the decision tree model we exported previously, we would run

```sql
EXECUTE DBMS_DATA_MINING.IMPORT_MODEL('Exported_CLASS_DT_Model', 'DATAMININGDIR_EXPORTS', 'name=''CLAS_DT_1_6''', 'IMPORT', NULL, NULL, 'DMUSER2:DMUSER3');
```

We now have the new updated data mining model loaded into the production database.

DBMS_DATA_MINING.RENAME_MODEL procedure

The final step before we can start using the new updated model in our production database is to rename the imported model to the standard name that is being used in the production database.

```sql
EXECUTE DBMS_DATA_MINING.RENAME_MODEL('CLAS_DT_1_6', 'CLAS_DECISION_TREE');
```
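The drop, import and rename steps can be wrapped in a single stored procedure in the production schema. The following is a minimal sketch with several assumptions. The procedure name import_new_data_mining_model is hypothetical. The dump file name and directory defaults are assumptions, so use the file name that EXPORT_MODEL actually wrote to the export directory. The model names and schema remap are the ones used in this post. Because this is a definer's rights procedure, the directory privileges must be granted directly to the owner, not through a role. DROP_MODEL also raises an error if CLAS_DECISION_TREE does not exist yet.

```sql
CREATE OR REPLACE PROCEDURE import_new_data_mining_model (
  p_dump_file IN VARCHAR2 DEFAULT 'Exported_CLASS_DT_Model',
  p_directory IN VARCHAR2 DEFAULT 'DATAMININGDIR_EXPORTS'
) AS
BEGIN
  -- 1. Remove the current production model (TRUE forces the drop even if
  --    the model is invalid)
  DBMS_DATA_MINING.DROP_MODEL('CLAS_DECISION_TREE', TRUE);

  -- 2. Import the new model, remapping it from the test schema
  DBMS_DATA_MINING.IMPORT_MODEL(
    filename     => p_dump_file,
    directory    => p_directory,
    model_filter => 'name=''CLAS_DT_1_6''',
    operation    => 'IMPORT',
    schema_remap => 'DMUSER2:DMUSER3');

  -- 3. Give the imported model the name the production code expects
  DBMS_DATA_MINING.RENAME_MODEL('CLAS_DT_1_6', 'CLAS_DECISION_TREE');
END import_new_data_mining_model;
/
```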

Scheduling of these steps

We can wrap most of these steps up into a stored procedure and schedule it to run on a semi-regular basis using the DBMS_JOB package. The following example schedules a procedure that controls the importing, dropping and renaming of the models.

```sql
VARIABLE jobno NUMBER

BEGIN
  DBMS_JOB.SUBMIT(
    job       => :jobno,
    what      => 'import_new_data_mining_model;',
    next_date => SYSDATE,
    interval  => 'ADD_MONTHS(TRUNC(SYSDATE), 1)');
  COMMIT;  -- the job is only picked up by the job queue after a commit
END;
/
```

This schedules the procedure that imports the new data mining model to run immediately and then every month.
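From Oracle 10g onwards, DBMS_SCHEDULER is the recommended replacement for DBMS_JOB. An equivalent monthly job is sketched below. It assumes the import procedure already exists in the schema. The job name and the choice of 02:00 on the first day of each month are hypothetical, picked to land in a quiet period.

```sql
BEGIN
  DBMS_SCHEDULER.CREATE_JOB(
    job_name        => 'IMPORT_DM_MODEL_JOB',
    job_type        => 'STORED_PROCEDURE',
    job_action      => 'IMPORT_NEW_DATA_MINING_MODEL',
    start_date      => SYSTIMESTAMP,
    repeat_interval => 'FREQ=MONTHLY; BYMONTHDAY=1; BYHOUR=2; BYMINUTE=0',
    enabled         => TRUE);
END;
/
```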
