Oracle Data Miner

Association Rules in ODM–Part 1

This is a the first part of a four part blog post on building and using Association Rules in Oracle Data Miner. The following outlines the contents of each post in the series on Association Rules

- This first part will focus on how to building an Association Rule model

- The second post will be on examining the Association Rules produced by ODM – This blog post

- The third post will focus on using the Association Rules on your data.

- The final post will look at how you can do some of the above steps using the ODM SQL and PL/SQL functions.

The data set we will be using for Association Rule Analysis will be the sample data that comes with the SH schema in the database. Access to this schema and it’s data was setup when we created our data mining schema and ODM Repository.

Step 1 – Getting setup

As with all data mining projects you will need a workspace that will contain your workflows. Based on my previous ODM blog posts you will have already created a Project and some workflows. You can either reuse an existing workflow you have used for one of the other ODM modeling algorithms or you can create a new Workflow called Association Rules.

Step 2 – Define your Data Set

Assuming that your database has been setup to have the Sample schemas and their corresponding data, we will be using the data that is in the SH schema. In a previous post, I gave some instructions on setting up your database to use ODM and part of that involved a step to give your ODM schema access to the sample schema data.

We will start off by creating a Data Source Node. Click on the Data Source Node under the Component Palette. Then move your mouse to your your workspace area and click. A Data Source Node will be created and a window will open. Scroll down the list of Available Tables until you find the SH.SALES table. Click on this table and then click on the Next button. We want to include all the data so we can now click the Finish Button.

Our Data Source Node will now be renamed to SALES.

Step 3 – Setup the Association Build Node

Under the Model section of the Component Palette select Association. Move the mouse to your work area (and perhaps just the to right of the SALES node) click. Our Association Node will be created.

For the next step we need to join the our data source (SALES) with the Association Build Node. Right click on the SALES data node and select Connect from the drop down menu. Then move the mouse to the Association Build node and click. You should now have the two nodes connected.

We will now get the Edit Association Build Node property window opening for us. We will need to enter the following information:

- Transaction ID: This is the attribute(s) that can be used to uniquely identify each transaction. In our example the Customer ID and the Time ID of the transaction allows us to identify what we want to analyse by i.e. the basket. This will group all the related transactions together

- Item ID: What is the attribute of the thing you want to analyse. In our case we want to analyse the Products purchased, so select PROD_ID in this case

- Value: This is an identifier used to specify another column with the transaction data to combine with the Item ID. means that you want to see if there are any type of common bundling among all values of the selected Item ID. Use this.

Like all data mining products, Oracle has just one Algorithm to use for Association Rule Analysis, the Apriori Algorithm.

Click the OK button. You are now ready to run the Association Build Node. Right click on the node and select Run from the menu. After a short time everything should finish and we will have the little green tick makes on each of the nodes.

Check out the next post in the series (Part 2) where we will look at how you can examine the rules produced by our model in ODM.

Accepted for BIWA Summit–9th to 10th January

I received an email today to say that I had a presentation accepted for the BIWA Summit. This conference will be in the Sofitel Hotel beside the Oracle HQ in Redwood City.

The title of the presentation is “The Oracle Data Scientist” and the abstract is

Over the past 18 months we have seen a significant increase in the demand for Data Scientists. But how does someone become a data scientist. If we examine the requirements and job descriptions of this role we can see that being able to understand and process data are fundamental skills. So an Oracle developer is ideally suited to being a Data Scientist. The presentation will show how an Oracle developer can evolve into a data scientist through a number of stages, including BI developer, OBIEE developer, statistical analysis, data miner and data scientist. The tasks and tools will be discussed and explored through each of these roles. The second half of the presentation will focus on the data mining functionality available in SQL and PL/SQL. This will consist of a demonstration of an Analytics Development environment and how you can migrate (and use) your models in a Production environment

For some reason Simon Cowell of XFactor fame kept on popping into my head and it now looks like he will be making an appearance in the presentation too. You will have to wait until the conference to find out what Simon Cowell and Being an Oracle Data Scientist have in common.

Check out the BIWA Summit website for more details and to register for the event.

I’ll see you there ![]()

Events for Oracle Users in Ireland-November 2012

November (2012) is going to be a busy month for Oracle users in Ireland. There is a mixture of Oracle User Group events, with Oracle Day and the OTN Developer Days. To round off the year we have the UKOUG Conference during the first week in December.

Here are the dates and web links for each event.

Oracle User Group

The BI & EPM SIG will be having their next meeting on the Tuesday 20th November. This is almost a full day event, with presentations from End Users, Partners and Oracle product management. The main focus of the day will be on EPM, but will also be of interest to BI people.

As with all SIG meetings, this SIG will be held in the Oracle office in East Point (Block H). Things kick off at 9am and are due to finish around 4pm with plenty of tea/coffee and a free lunch too.

Remember to follow OUG Ireland on twitter using #oug_ire

Oracle Day

Oracle will be having their Oracle Day 2012, on Thursday 15th, in Croke Park. Here is some of the blurb about the event, “…to learn how Oracle simplifies IT, whether it’s by engineering hardware and software to work together or making new technologies work for the modern enterprise. Sessions and keynotes feature an elite roster of Oracle solutions experts, partners and business associates, as well as fascinating user case studies and live demos.”

This is a full day event from 9am to 5pm with 3 parallel streams focusing on Big Data, Enterprise Applications and the Cloud.

Click here to register for this event.

Click here for the full details and agenda.

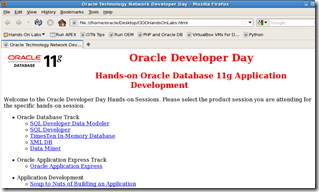

OTN Developer Days

Oracle run their developer days about 3 times a year in Dublin. These events are run like a Hands-on Lab. So most of the work during the day is by yourself. You are provided with a workbook, a laptop and a virtual machine configured for the hands-on lab. This November we have the following developers days in the Oracle office in East Point, Dublin.

Tuesday 27th November (9:45-15:00) : Real Application Testing

Wednesday 28th November (9:00-14:00) : Partitioning/Advanced Compression

Thursday 29th November (9:15-13:30) : Database Security

Friday 30th November (9:45-16:00) : Business Process Management Using BPM Suite 11g

As you can see we have almost a full week of FREE training from Oracle. So there is no reason not to sign up for these days.

UKOUG Conference – in Birmingham

In December we have the annual UKOUG Conference. This is the largest Oracle User Group conference in Europe and the largest outside of the USA. At this conference you will have some of the main speakers and presentations from Oracle Open World, along with a range of speakers from all over the work.

In keeping with previous years there will be the OakTable Sunday and new this year there will be a Middleware Sunday. You need to register separately for these events. Here are the links

The main conference kicks off on the Monday morning with a very full agenda for Monday, Tuesday and Wednesday. There are a number of social events on the Monday and Tuesday, so come well rested.

On the Monday evening there is the focus pubs. This year it seems to have an Irish Pub theme. At the focus pub event there will be table for each of the user group SIGs.

Come and join me at the Ireland table on the Monday evening.

The full agenda in now live and you can get all the details here.

I will be giving a presentation on the Tuesday afternoon titled Getting Real Business Value from Predictive Analytics (OBIEE and Oracle Data Mining). This is a joint presentation with Antony Heljula of Peak Indicators.

Oracle Advanced Analytics Option in Oracle 12c

At Oracle Open World a few weeks ago there was a large number of presentations on Big Data and Analytics. Most of these were marketing type presentations, with a couple of presentations on using R and how it can not be integrated into the Oracle Database 11.2.

In addition this these there was one presentation that focused on the Oracle Advanced Analytics (OAA) Option.

The Oracle Advanced Analytics Option covers the Oracle Data Mining features and the Oracle R Enterprise features in the Database.

The purpose of this blog post is to outline and summarise what was mentioned at these presentations, and will include what changes are/may be coming in the “Next Release” of the database i.e. Oracle 12c.

Health Warning: As with all the presentations at OOW that talked about what may be in or may be in the next release, there is not guarantee that the features will actually be in the release version of the database. Here is the slide that gives the Safe Harbor statement.

- 12c will come with R embedded into it. So there will be no need for any configurations.

- Oracle R client will come as part of the server install.

- Oracle R client will be able to use the Analytics functions that exist in the database.

- Will be able to run R code in the database.

- The database (12c) will be able to spawn multiple R engines.

- Will be able to emulate map-reduce style algorithms.

- There will be new PREDICTION function, replacing the existing (11g) functionality. This will combine a number of steps of building a model and applying it to the data to be scored into one function. But we will still need the functionality of the existing PREDICTION function that is in 11g. So it will be interesting to see how this functionality will be kept in addition to the new functionality being proposed in 12c.

- Although the Oracle Data Miner tool will still exits and will have many new features. It was also referred to as the ‘OAA Workflow’. So those this indicate a potential name change? We will have to wait and see.

- Oracle Data Miner will come with a new additional graphing feature. This will be in addition to the Explore Node and will allow us to produce more typical attribute related graphs. From what I could see these would be similar to the type of box plot, scatter, bar chart, etc. graphs that you can get from R.

- There will be a number of new algorithms too, including a useful One Class Support Vector Machine. This can be used when we have a data set with just one class value. This algorithm will work out what records/cases are more important and others.

- There will be a new SQL node. This will allow us to write our own data transformation code.

- There will be a new node to allow the calling of R code.

- The tool also comes with a slightly modified layout and colour scheme.

Again, the points that I have given above are just my observations. They may or may not appear in 12c, or maybe I misunderstood what was being said.

It certainly looks like we will have a integrate analytics environment in 12c with full integration of R and the ODM in-database features.

Extracting the rules from an ODM Decision Tree model

One of the most interesting of important aspects of a Decision Model is that we as a user can get to see what rules the machine learning algorithm has generated for our data.

I’ve give a number of examples in various blog posts over the past few years on how to generate a number of classification models. An example of the workflow is below.

In the Class Build node we get four models being generated. These include a Generalised Linear Model, Support Vector Machine, Naive Bayes and a Decision Tree model.

We can explore the Decision Tree model by right clicking on the Class Build Node, selecting View Models and then the Decision Tree model, which will be labelled with a ‘DT’ in the name.

As we explore the nodes and branches of the Decision Tree we can see the rule that was generated for a node in the lower pane of the applications. So by clicking on each node we get a different rule appearing in this pane

Sometimes there is a need to extract this rules so that they can be presented to a number of different types of users, to explain to them what is going on.

How can we extract the Decision Tree rules?

To do this, you will need to complete the following steps:

- From the Models section of the Component Palette select the Model Details node.

- Click on the Workflow pane and the Model Details node will be created

- Connect the Class Build node to the Model Details node. To do this right click on the Class Build node and select Connect. Then move the mouse to the Model Details node and click. The two nodes should now be connected.

- Edit the Model Details node, uncheck the Auto Settings, select Model Type to be Decision Tree, Output to be Full Tree and all the columns.

- Run the Model Details node. Right click on the node and select run. When complete you you will have the little green box with a tick mark, on the top right hand corner.

- To view the details produced, right click on the Model Details node and select View Data

- The rules for each node will now be displayed. You will need to scroll to the right of this pane to get to the rules and you will need to expand the columns for the rules to see the full details

My Presentations on Oracle Advanced Analytics Option

I’ve recently compiled my list of presentation on the Oracle Analytics Option. All these presentations are for a 45 minute period.

I have two versions of the presentation ‘How to do Data Mining in SQL & PL/SQL’, one is for 45 minutes and the second version is for 2 hour.

I have given most of these presentations at conferences or SIGS.

Let me know if you are interesting in having one of these presentations at your SIG or conference.

- Oracle Analytics Option – 12c New Features – available 2013

- Real-time prediction in SQL & Oracle Analytics Option – Using the 12c PREDICTION function – available 2013

- How to do Data Mining in SQL & PL/SQL

- From BIG Data to Small Data and Everything in Between

- Oracle R Enterprise : How to get started

- Oracle Analytics Option : R vs Oracle Data Mining

- Building Predictive Analysts into your Forms Applications

- Getting Real Business Value from OBIEE and Oracle Data Mining (This is a cut down and merged version of the follow two presentations)

- Getting Real Business Value from OBIEE and Oracle Data Mining – Part 1 : The Oracle Data Miner part

- Getting Real Business Value from OBIEE and Oracle Data Mining – Part 2 : The OBIEE part

- How to Deploying and Using your Oracle Data Miner Models in Production

- Oracle Analytics Option 101

- From SQL Programmer to Data Scientist: evolving roles of an Oracle programmer

- Using an Oracle Oracle Data Mining Model in SQL & PL/SQL

- Getting Started with Oracle Data Mining

- You don’t need a PhD to do Data Mining

Check out the ‘My Presentations’ page for updates on new presentations.

Big Data videos by Oracle

Here are the links to the 2 different sets of Big Data videos that Oracle have produced over the past 12 months

Oracle Big Data Videos – Version 1

Episode 2 – Gold Mine or Just Stuff

Episode 4 – Everything You Always Wanted to Know

Oracle Big Data Videos – Version 2

Episode 1 – Overview for the Boss

Episode 3 – Acquiring Big Data

Episode 4 – Organising Big Data

Episode 5 – Analysing Big Data

Other videos include

Part 2 of the Leaning Tower of Pisa problem in ODM

In previous post I gave the details of how you can use Regression in Oracle Data Miner to predict/forecast the lean of the tower in future years. This was based on building a regression model in ODM using the known lean/tilt of the tower for a range of years.

In this post I will show you how you can do the same tasks using the Oracle Data Miner functions in SQL and PL/SQL.

Step 1 – Create the table and data

The easiest way to do this is to make a copy of the PISA table we created in the previous blog post. If you haven’t completed this, then go to the blog post and complete step 1 and step 2.

create table PISA_2

as select * from PISA;

Step 2 – Create the ODM Settings table

We need to create a ‘settings’ table before we can use the ODM API’s in PL/SQL. The purpose of this table is to store all the configuration parameters needed for the algorithm to work. In our case we only need to set two parameters.

BEGIN

delete from pisa_2_settings;

INSERT INTO PISA_2_settings (setting_name, setting_value) VALUES

(dbms_data_mining.algo_name, dbms_data_mining.ALGO_GENERALIZED_LINEAR_MODEL);

INSERT INTO PISA_2_settings (setting_name, setting_value) VALUES

(dbms_data_mining.prep_auto,dbms_data_mining.prep_auto_off );

COMMIT;

END;

Step 3 – Build the Regression Model

To build the regression model we need to use the CREATE_MODEL function that is part of the DBMS_DATA_MINING package. When calling this function we need to pass in the name of the model, the algorithm to use, the source data, the setting table and the target column we are interested in.

BEGIN

DBMS_DATA_MINING.CREATE_MODEL(

model_name => ‘PISA_REG_2’,

mining_function => dbms_data_mining.regression,

data_table_name => ‘pisa_2_build_v’,

case_id_column_name => null,

target_column_name => ’tilt’,

settings_table_name => ‘pisa_2_settings’);

END;

After this we should have our regression model.

Step 4 – Query the Regression Model details

To find out what was produced as in the previous step we can query the data dictionary.

SELECT model_name,

mining_function,

algorithm,

build_duration,

model_size

from USER_MINING_MODELS

where model_name like ‘P%’;

select setting_name,

setting_value,

setting_type

from all_mining_model_settings

where model_name like ‘P%’;

Step 5 – Apply the Regression Model to new data

Our final step would be to apply it to our new data i.e. the years that we want to know what the lean/tilt would be.

SELECT year_measured, prediction(pisa_reg_2 using *)

FROM pisa_2_apply_v;

Using ODM Regression for the Leaning Tower of Pisa tilt problem

This blog post will look at how you can use the Regression feature in Oracle Data Miner (ODM) to predict the lean/tilt of the Leaning Tower of Pisa in the future.

This is a well know regression exercise, and it typically comes with a set of know values and the year for these values. There are lots of websites that contain the details of the problem. A summary of it is:

The following table gives measurements for the years 1975-1985 of the “lean” of the Leaning Tower of Pisa. The variable “lean” represents the difference between where a point on the tower would be if the tower were straight and where it actually is. The data is coded as tenths of a millimetre in excess of 2.9 meters, so that the 1975 lean, which was 2.9642.

Given the lean for the years 1975 to 1985, can you calculate the lean for a future date like 200, 2009, 2012.

Step 1 – Create the table

Connect to a schema that you have setup for use with Oracle Data Miner. Create a table (PISA) with 2 attributes, YEAR_MEASURED and TILT. Both of these attributes need to have the datatype of NUMBER, as ODM will ignore any of the attributes if they are a VARCHAR or you might get an error.

CREATE TABLE PISA

(

YEAR_MEASURED NUMBER(4,0),

TILT NUMBER(9,4)

);

Step 2 – Insert the data

There are 2 sets of data that need to be inserted into this table. The first is the data from 1975 to 1985 with the known values of the lean/tilt of the tower. The second set of data is the future years where we do not know the lean/tilt and we want ODM to calculate the value based on the Regression model we want to create.

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1975,2.9642);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1976,2.9644);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1977,2.9656);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1978,2.9667);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1979,2.9673);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1980,2.9688);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1981,2.9696);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1982,2.9698);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1983,2.9713);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1984,2.9717);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1985,2.9725);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1986,2.9742);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1987,2.9757);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1988,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1989,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1990,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (1995,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2000,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2005,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2010,null);

Insert into DMUSER.PISA (YEAR_MEASURED,TILT) values (2009,null);

Step 3 – Start ODM and Prepare the data

Open SQL Developer and open the ODM Connections tab. Connect to the schema that you have created the PISA table in. Create a new Project or use an existing one and create a new Workflow for your PISA ODM work.

Create a Data Source node in the workspace and assign the PISA table to it. You can select all the attributes..

The table contains the data that we need to build our regression model (our training data set) and the data that we will use for predicting the future lean/tilt (our apply data set).

We need to apply a filter to the PISA data source to only look at the training data set. Select the Filter Rows node and drag it to the workspace. Connect the PISA data source to the Filter Rows note. Double click on the Filter Row node and select the Expression Builder icon. Create the where clause to select only the rows where we know the lean/tilt.

Step 4 – Create the Regression model

Select the Regression Node from the Models component palette and drop it onto your workspace. Connect the Filter Rows node to the Regression Build Node.

Double click on the Regression Build node and set the Target to the TILT variable. You can leave the Case ID at . You can also select if you want to build a GLM or SVM regression model or both of them. Set the AUTO check box to unchecked. By doing this Oracle will not try to do any data processing or attribute elimination.

You are now ready to create your regression models.

To do this right click the Regression Build node and select Run. When everything is finished you will get a little green tick on the top right hand corner of each node.

Step 5 – Predict the Lean/Tilt for future years

The PISA table that we used above, also contains our apply data set

We need to create a new Filter Rows node on our workspace. This will be used to only look at the rows in PISA where TILT is null. Connect the PISA data source node to the new filter node and edit the expression builder.

Next we need to create the Apply Node. This allows us to run the Regression model(s) against our Apply data set. Connect the second Filter Rows node to the Apply Node and the Regression Build node to the Apply Node.

Double click on the Apply Node. Under the Apply Columns we can see that we will have 4 attributes created in the output. 3 of these attributes will be for the GLM model and 1 will be for the SVM model.

Click on the Data Columns tab and edit the data columns so that we get the YEAR_MEASURED attribute to appear in the final output.

Now run the Apply node by right clicking on it and selecting Run.

Step 6 – Viewing the results

Where we get the little green tick on the Apply node we know that everything has run and completed successfully.

To view the predictions right click on the Apply Node and select View Data from the menu.

We can see the the GLM mode gives the results we would expect but the SVM does not.

Data Science Is Multidisciplinary

A few weeks ago I had a blog post called Domain Knowledge + Data Skills = Data Miner.

In that blog post I was saying that to be a Data Scientist all you needed was Domain Knowledge and some Data Skills, which included Data Mining.

The reality is that the skill set of a Data Scientist will be much larger. There is a saying ‘A jack of all trades and a master of none’. When it comes to being a data scientist you need to be a bit like this but perhaps a better saying would be ‘A jack of all trades and a master of some’.

I’ve put together the following diagram, which includes most of the skills with an out circle of more fundamental skills. It is this outer ring of skills that are fundamental in becoming a data scientist. The skills in the inner part of the diagram are skills that most people will have some experience in one or more of them. The other skills can be developed and learned over time, all depending on the type of person you are.

Can we train someone to become a data scientist or are they born to be a data scientist. It is a little bit of both really but you need to have some of the fundamental skills and the right type of personality. The learning of the other skills should be easy(ish)

What do you think? Are their Skill that I’m missing?

VM for Oracle Data Miner

Recently the OTN team have updated the ‘Database App Development’ Developer Day virtual machine to include Oracle 11.2.0.2 DB and SQL Developer 3.1. This is all you need to try out Oracle Data Miner.

So how do you get started with using Oracle Data Miner on your PC. The first step is to download and install the latest version of Oracle VirtualBox.

The next step is to download and install the OTN Developer Day appliance. Click on the above link to go to the webpage and follow the instructions to download and install the appliance. Download the first appliance on this page ‘Database App Development’ VM. This is a large download and depending on your internet connection it can take anything from 30 minutes to hours. So I wouldn’t recommend doing this over a wifi.

When you start up the VM your OS username and password is oracle. Yes it is case sensitive.

When the get logged into the VM you can close or minimise the host window

There are two important icons, the SQL Developer and the ODDHandsOnLab.html icons.

The ODDHandsOnLab.html icon loads a webpage what contains a number of tutorials for you to follow.

The tutorial we are interest in is the Oracle Data Miner Tutorial. There are 4 tutorials given for ODM. The first two tutorials need to be followed in the order that they are given. The second two tutorials can be done in any order.

If you have not used SQL Developer before then you should work through this tutorial before starting the Oracle Data Miner tutorials.

The first tutorial takes you through the steps needed to create your ODM schema and to create the ODM repository within the database. This tutorial will only take you 10 to 15 minutes to complete.

In the second tutorial you get to use the ODM to build your first ODM model. This tutorial steps your through how to get started with an ODM project, workflow, the different ODM features, how to explore the data, how to create classification models, how to explore the model and then how to apply one of these models to new data. This second tutorial will take approx. 30 to 40 minutes to complete.

It is all very simple and easy to use.

2 Day Oracle Data Miner course material

Last week I managed to get my hands on the training material for the 2 Day Oracle Data Miner course. This course is run by Oracle University.

Many thanks to Michael O’Callaghan who is a BI Sales person here in Ireland and Oracle University, for arranging this.

The 2 days are pretty packed with a mixture of lecture type material, lots of hands on exercises and some time for open discussions. In particular, day 2 will be very busy day.

Check out the course outline and published schedule – click here

You can have this course on site at your organisation. If this is something that interests you then contact your Oracle University account manager. There is also the traditional face-to-face delivery and the newer online delivery, where people from around the world come together for the online class.

You must be logged in to post a comment.