Oracle Advanced Analytics

12c New Data Mining functions

With the release of Oracle 12c we get a number of new and updated functions and procedures for Oracle Data Miner, which is part of the Advanced Analytics option.

The following list covers the new functions and procedures, and those that have been updated in 12c, with a link to the 12c documentation that explains what each one does.

- CLUSTER_DETAILS is a new function that predicts cluster membership for each row. It can use a pre-defined clustering model or perform dynamic clustering. The function returns an XML string that describes the predicted cluster or a specified cluster.

- CLUSTER_DISTANCE is a new function that predicts cluster membership for each row. It can use a pre-defined clustering model or perform dynamic clustering. The function returns the raw distance between each row and the centroid of either the predicted cluster or a specified cluster.

- CLUSTER_ID has been enhanced so that it can either use a pre-defined clustering model or perform dynamic clustering.

- CLUSTER_PROBABILITY has been enhanced so that it can either use a pre-defined clustering model or perform dynamic clustering. The data type of the return value has been changed from NUMBER to BINARY_DOUBLE.

- CLUSTER_SET has been enhanced so that it can either use a pre-defined clustering model or perform dynamic clustering. The data type of the returned probability has been changed from NUMBER to BINARY_DOUBLE.

- FEATURE_DETAILS is a new function that predicts feature matches for each row. It can use a pre-defined feature extraction model or perform dynamic feature extraction. The function returns an XML string that describes the predicted feature or a specified feature.

- FEATURE_ID has been enhanced so that it can either use a pre-defined feature extraction model or perform dynamic feature extraction.

- FEATURE_SET has been enhanced so that it can either use a pre-defined feature extraction model or perform dynamic feature extraction. The data type of the returned probability has been changed from NUMBER to BINARY_DOUBLE.

- FEATURE_VALUE has been enhanced so that it can either use a pre-defined feature extraction model or perform dynamic feature extraction. The data type of the return value has been changed from NUMBER to BINARY_DOUBLE.

- PREDICTION has been enhanced so that it can either use a pre-defined predictive model or perform dynamic prediction.

- PREDICTION_BOUNDS now returns the upper and lower bounds of the prediction as the BINARY_DOUBLE data type. It previously returned these values as the NUMBER data type.

- PREDICTION_COST has been enhanced so that it can either use a pre-defined predictive model or perform dynamic prediction. The data type of the returned cost has been changed from NUMBER to BINARY_DOUBLE.

- PREDICTION_DETAILS has been enhanced so that it can either use a pre-defined predictive model or perform dynamic prediction.

- PREDICTION_PROBABILITY has been enhanced so that it can either use a pre-defined predictive model or perform dynamic prediction. The data type of the returned probability has been changed from NUMBER to BINARY_DOUBLE.

- PREDICTION_SET has been enhanced so that it can either use a pre-defined predictive model or perform dynamic prediction. The data type of the returned probability has been changed from NUMBER to BINARY_DOUBLE.
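To make the idea of dynamic clustering in the list above a little more concrete, here is a minimal sketch of the analytic form of two of these functions, where no pre-built model is named. It assumes the mining_data_apply_v sample view is available in your schema; the choice of 4 clusters and the column aliases are my own and are purely illustrative.

```sql
-- Dynamic clustering sketch: no model name is supplied, so the clustering
-- is performed on the fly over the rows returned by the query.
-- Assumptions: the mining_data_apply_v sample view exists, and 4 clusters
-- is an arbitrary illustrative choice.
SELECT cust_id,
       CLUSTER_ID(INTO 4 USING *) OVER ()       AS clus_id,
       CLUSTER_DISTANCE(INTO 4 USING *) OVER () AS centroid_distance
FROM   mining_data_apply_v
ORDER  BY cust_id;
```

The INTO n clause replaces the model name that you would otherwise pass as the first argument, and the OVER () clause is what marks the call as the dynamic (analytic) form.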

Oracle Data Miner New Features (SQL Dev 4)

With the release of the new Oracle 12c database and SQL Developer 4 we have a range of new Oracle Data Miner features. Some of these are embedded into the database and are only available in 12c. Check out my previous blog post on these new features.

In this blog post I will look at the new Oracle Data Miner features that come with the ODM tool in SQL Developer 4.

The new features of the Oracle Data Miner tool can be grouped into 2 categories. The first category contains the new features that are available to all users of the tool (11.2g and 12c). The second category contains the new features that are only available in 12c. The new features in each of these categories are explained below.

Category 1 – Common new features for 11.2g and 12c Database users

There is a new View Data feature that allows you to drill down to view the customer object and to view nested tables.

There is a new Graph Node that allows you to create graphs such as line, bar, scatter and boxplots for data at any stage of a workflow. You can specify any of the attributes from the data source for the graphs. There does not seem to be a limit on the number of graphs you can create.

There is a new SQL Node. This is a welcome addition, as there have been many times when I’ve needed to write some SQL or PL/SQL to do a specific piece of processing on the data that was not available with the other nodes. There are really 2 important elements to this SQL node. The first is that you can write SQL and PL/SQL code to do whatever processing you want to do. But you can only do it on the Data node you are connected to.

The second is that you can use it to call some ORE code. This allows you to use the power of R, and the extensive range of packages that are available for it, to expand the analytic functionality that is available in the database. If there is some particular function that you cannot do in Oracle and it is available in R, you can now embed this function/code as an ORE object in the database. You can then call it using SQL.

WARNING: this particular feature will only work if you have ORE installed on your 11.2.0.3g or 12.1c database
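As a rough illustration of what calling R from SQL can look like, here is a minimal sketch using the ORE embedded R execution interface. It assumes ORE is installed, that your user has the privileges needed to create ORE scripts (for example the RQADMIN role), and it uses a made-up script name, demo_rnorm. Treat it as a starting point rather than the exact code you would put into the SQL node.

```sql
-- Sketch only: assumes ORE is installed and the current user is allowed
-- to create ORE scripts. 'demo_rnorm' is a hypothetical script name.
BEGIN
  sys.rqScriptCreate('demo_rnorm',
    'function() {
       data.frame(id = 1:5, val = rnorm(5))
     }');
END;
/

-- Call the stored R function from SQL. The second argument is a query
-- that describes the shape (column names and types) of the result set.
SELECT *
FROM   table(rqEval(NULL,
                    'select 1 id, 1 val from dual',
                    'demo_rnorm'));
```

The R function returns a data frame, which comes back to SQL as an ordinary result set that the rest of the workflow can consume.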

New Model Build Node features. These include node-level text specifications for text transformations, displaying the heuristic rules responsible for excluding predictor columns, and being able to control the amount of classification and regression test results that are generated. I’ll be covering these in later blog posts.

New Workflow SQL Script Deployment features. Up to now I found the workflow SQL script to be of limited use. The development team have put a lot of work into generating a proper script that can be used by developers and DBAs. But there are still some limitations. You can use the script to run the workflow automatically in the database without having to use the ODM tool. But it can only be run in the schema in which the workflow was generated. You will still have to do a lot of coding (although a lot less than you used to) to get your ODM models and workflows to run in another schema or database.

This will output the script to a file buried deep somewhere inside your SQL Developer directory. Unfortunately in the EA1 release, the size of this location field is small and scrolling has not been enabled. So you cannot (currently) scroll to the end of the field to see the actual location. You can edit this field to use a different, shorter location.

Maybe this will be fixed for the official release.

Category 2 – New features for 12c Database users.

Now for the new features that are only visible when you are running ODM / SQL Dev 4 against a 12c database. No configuration changes are needed. The ODM tool checks to see what version of the database you are logging into. It will then present the available features based on the version of the database.

New Predictive Query nodes allow you to build a node based on the new transient-model feature in 12c called Predictive Queries (PQs). In SQL Developer we get 3 additional types of Predictive Queries. These can be used for Anomaly Detection, Clustering and Feature Extraction.

It is important to remember that the underlying models produced by these PQs do not exist in the database after the query has executed. The model is created, used on the data and then deleted.

The Clustering node has the new algorithm Expectation Maximization in addition to the existing algorithms of K-Means and O-Cluster.

The Feature Extraction node has the new algorithm called Principal Component Analysis in addition to the existing Non-Negative Matrix Factorization algorithm.

Text Transformations are now built into the model build nodes. These text transformations will be part of the Automatic Data Processing steps for the model build nodes. This is illustrated in the above images.

The Generalized Linear Model that is part of the Classification Node has a Feature Selection option in the Algorithm Settings. The default setting is Ridge Regression. Now there is an additional option of using Feature Selection.

Prediction Result Explanations give the scoring details used to explain why the prediction was made.

Look out for blog posts on each of these new features.

Upgrading your ODM Repository for SQL Dev 4

For those users of Oracle Data Miner (ODM) that is part of SQL Developer, now that Oracle have finally released SQL Developer 4, you might want to upgrade to this new release. There are a lot of new features. Some of these are available for 11.2g and 12.1c databases and some are only available for 12.1c users.

I will have another blog post soon on the new Oracle Data Miner (ODM) features that are available in SQL Developer 4.

The instructions given below are what I did to upgrade so that I could use the new ODM tool/SQL Developer 4.

Step 1 – Install SQL Developer 4 : I have another blog post on what this involves, so check it out and complete the steps before you continue with the rest of the steps below.

Step 2 – Make ODM Visible : After SQL Developer 4 opens you should see all your migrated connections. To make ODM visible you need to click on the Tools menu, select Oracle Data Miner and then Make Visible. This will open a number of tabs on the left hand side of SQL Developer. These will include Data Miner (connections), Workflow Structure and Workflow Jobs.

Step 3 – Open an ODM Connection : Take one of your ODM connections and double click on it. SQL Developer 4 / ODM will check which version of the ODM repository exists in your database. If this is your first time connecting from SQL Developer 4, you will be told that you need to upgrade your repository.

Step 4 – Upgrade the ODM Repository : Select the Yes button on the Upgrade Repository window. You will then be asked for the SYS password. If you do not have access to this you can talk nicely to your DBA and ask them to enter the password for you.

You may or may not get a warning message like the following. Just click OK to continue.

Step 5 – Start the Repository Upgrade : When the Migrate Data Miner Repository window opens, just click the Start button.

This might be a good time to go off and make yourself a coffee. The upgrade process took approx. 8 minutes on my laptop. If you are running this against a server located somewhere else then the script will take a little bit longer to run!

The progress bar will let you know how things are progressing. It also gives some messages to let you know what stage of the process it is at.

Step 6 – All finished : When the Repository Migration has finished you will get a window with a message saying Task Successfully Complete. Click on the Close button to close this window.

Step 7 – Open an Existing Workflow : Just to make sure that everything has worked with the install and ODM Repository migration, open one of your existing workflows. If it opens then everything should be OK.

When you open the workflow, the new Workflow Editor tab opens on the right hand side of SQL Developer. This seems to have replaced the Component Palette we had with the previous version of the ODM tool. Expand the headings under the Workflow Editor to see the different nodes that are available. Most of these are the same but we have 2 new nodes under the Data section. These are Graph and SQL Query. I’ll have more on these in another post or posts.

Oracle 12c Advanced Analytics Option new features

With the release of Oracle 12c (finally) we now have a lot of learning to do. Oracle 12c is a different beast to what we have been used to up to now.

As part of 12c there are a number of new in-database Advanced Analytics features. These are separate from the new Advanced Analytics features that come as part of the Oracle Data Miner tool, which is part of SQL Developer.

This post will only look at the new features that are part of the 12c Database. The new in-Database Advanced Analytics features include:

- Using Decision Trees for text analysis is now possible. Up to now (11.2g) when you wanted to do text classification you had to exclude Decision Trees from the process. This was because the Decision Trees algorithm could not support nested data.

- Additionally for text mining, some of the text processing has been moved from being a separate step to being part of some of the algorithms.

- A number of additional features are available for Clustering. These include a cluster distance (from the centroid) function and a cluster details function.

- There is a new clustering algorithm (in addition to the K-Means and O-Cluster algorithms), called the Expectation Maximization algorithm. This creates a density model that can give better results when data from different domains are combined for clustering. This algorithm will also determine the optimal number of clusters.

- There are two new Feature Extraction methods that scale to high-dimensional data and large numbers of records, for both structured and unstructured data. They can be used to reduce the number of dimensions used as input to the data mining algorithms. The first of these is called Singular Value Decomposition (SVD) and is widely used in text mining. The second method, which can be considered a special scoring method of SVD, is called Principal Component Analysis (PCA). This method produces projections that are scaled with the data variance.

- A new feature of the GLM algorithm is that it will perform a feature selection step. This is used to reduce the number of predictors used by the algorithm and allows for faster builds. This makes the outputs more understandable and the model more transparent. This feature is not on by default, so you will need to switch it on if you want to use it with the GLM algorithm.

- In previous versions of the database, there could be some performance issues related to the data types used. In 12c these have been addressed for BINARY_DOUBLE and BINARY_FLOAT. So if you are using these data types you should now see faster scoring of the data in 12c.

- There is a new in-database feature called Predictive Queries. This allows on-the-fly, temporary models that are formed as part of an analytic clause. These models cannot be tuned and you cannot see the details of the model produced. They are formed for the query and do not exist afterwards.

    SELECT cust_id, age, pred_age, age-pred_age age_diff, pred_det
    FROM  (SELECT cust_id, age, pred_age, pred_det,
                  RANK() OVER (ORDER BY ABS(age-pred_age) DESC) rnk
           FROM  (SELECT cust_id, age,
                         PREDICTION(FOR age USING *) OVER () pred_age,
                         PREDICTION_DETAILS(FOR age ABS USING *) OVER () pred_det
                  FROM   mining_data_apply_v))
    WHERE rnk <= 5;
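Going back to the GLM feature selection item in the list above, the following is a minimal sketch of how that setting might be switched on when building a model with the DBMS_DATA_MINING package. The settings table name (demo_glm_settings) and the model name (DEMO_GLM_FS) are made up for this example, and it assumes the mining_data_build_v sample view with its cust_id and affinity_card columns.

```sql
-- Sketch only: demo_glm_settings and DEMO_GLM_FS are hypothetical names.
CREATE TABLE demo_glm_settings (
  setting_name  VARCHAR2(30),
  setting_value VARCHAR2(4000)
);

BEGIN
  -- Use the GLM algorithm ...
  INSERT INTO demo_glm_settings (setting_name, setting_value)
  VALUES (dbms_data_mining.algo_name,
          dbms_data_mining.algo_generalized_linear_model);

  -- ... and switch on its feature selection step (it is off by default).
  INSERT INTO demo_glm_settings (setting_name, setting_value)
  VALUES (dbms_data_mining.glms_ftr_selection,
          dbms_data_mining.glms_ftr_selection_enable);
  COMMIT;

  DBMS_DATA_MINING.CREATE_MODEL(
    model_name          => 'DEMO_GLM_FS',
    mining_function     => dbms_data_mining.classification,
    data_table_name     => 'mining_data_build_v',
    case_id_column_name => 'cust_id',
    target_column_name  => 'affinity_card',
    settings_table_name => 'demo_glm_settings');
END;
/
```

Unlike a Predictive Query, a model built this way is persisted in the schema, so you can inspect it afterwards and drop it when you no longer need it.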

These are the new in-database Advanced Analytics (Data Mining) features. Apart from the new algorithms or changes to them, most of the other changes give greater transparency into what the algorithms/models are doing. This is good as it allows us to better understand and see what is happening.

The rest of the new Advanced Analytics Option features are part of the Oracle Data Miner tool in SQL Developer 4. My next blog post will cover the new features in SQL Developer 4.

I haven’t mentioned anything about ORE. The reason for that is that it comes as a separate install and its current version 1.3 works the same in 11.2.0.3g as well as 12c. I’ve had some previous blog posts on this and you can check out the ORE website on OTN.

12c Roundup so far and Events

I’m on vacation at the moment. As a result I’ve missed all the 12c launch and excitement that goes with it. I’ve managed to get a few minutes to put this post together. The aim of this post is to list some interesting blog posts (by other people over the past few days). I intend to expand the list when I get time.

I also wanted to highlight two 12c launch events. The first of these is the official Oracle 12c webcast. It is on Wednesday 10th July. Click on the following image to register etc. The webcast will have Mark Hurd, Andy Mendelsohn and Tom Kyte.

The second 12c launch event will be hosted by Oracle in Ireland. This will be on the 5th September in the Gibson Hotel (Dublin) between 13:00 and 17:30. I believe there might be some 12c goodies available for the attendees. Again click on the image below to register and to check out the agenda.

The following are some articles and blog posts that have been published since 12c was launched. This is not a complete list or an indication of quality, but I’ve noted them for me to come back to and read after my vacation. You might have come across others. If so let me know and I will add them to the list.

Oracle Advanced Analytics Option 12c and SQL Dev 4 new features

Oracle Database 12c: Oracle Multitenant Option

Oracle website for Multitenant

New DB12c feature involves invisibility

Oracle 12c Magazine by @leight0nn in Flipboard

How long can you hold off on Oracle 12c

Oracle 12c Install articles by Tim Hall (oraclebase) on Linux5 and Linux6

Over the coming weeks (after my vacation) I will be posting some articles on the Advanced Analytics Option in 12c. There are a number of new features. Also when SQL Developer 4 comes out I will be including all the new functionality that is included in the updated ODM tool.

DBMS_PREDICTIVE_ANALYTICS & Predict

In this blog post I will look at the PREDICT procedure that is part of the DBMS_PREDICTIVE_ANALYTICS package. This package allows you to perform data mining in an automated way without having to go through the steps of building, testing and scoring data.

The predictive analytics procedures analyze and prepare the input data, create and test mining models using the input data, and then use the input data for scoring. The results of scoring are returned to the user. The models and supporting objects are not persisted and are removed from the database when the procedure is finished.

The PREDICT procedure should only be used for a Classification problem and data set.

The PREDICT procedure creates a model based on the supplied data (our input table) and a target value, and returns the scored data set in a new table. When using PREDICT you do not get to select an algorithm to use.

The input data source should contain records that already have the target value populated. It can also contain records where you do not have the target value. In this case the PREDICT procedure will use the records that have a target value to generate the model. This model will then score all records with the predicted target value.

The syntax of the PREDICT procedure is:

    DBMS_PREDICTIVE_ANALYTICS.PREDICT (
        accuracy             OUT NUMBER,
        data_table_name      IN  VARCHAR2,
        case_id_column_name  IN  VARCHAR2,
        target_column_name   IN  VARCHAR2,
        result_table_name    IN  VARCHAR2,
        data_schema_name     IN  VARCHAR2 DEFAULT NULL);

Where

| Parameter Name | Description |
|---|---|
| accuracy | This is an output parameter of the procedure. You do not pass anything into this parameter. The accuracy value returned is the predictive confidence of the model generated/used by the PREDICT procedure. |
| data_table_name | The name of the table that contains the data you want to use. |
| case_id_column_name | The case id for each record. This is unique for each record/case. |
| target_column_name | The name of the column that contains the target value to be predicted. |
| result_table_name | The name of the table that will contain the results. This table should not exist in your schema, otherwise an error will occur. |
| data_schema_name | The name of the schema where the table containing the input data is located. This is probably your current schema, so you can leave this parameter NULL. |

The PREDICT procedure will produce an output table (named by the result_table_name parameter) that contains 3 attributes.

| Attribute | Description |
|---|---|
| CASE_ID | This is the Case Id of the record from the original data_table_name. This will allow you to link up the data in the source table to the prediction in the result_table_name. |
| PREDICTION | This will be the predicted value of the target attribute. |
| PROBABILITY | This is the probability of the prediction being correct. |

Using the sample example data set that I have given in previous blog posts and in the blog post on the EXPLAIN procedure, the following code illustrates how to use the PREDICT procedure.

    set serveroutput on

    DECLARE
       v_accuracy NUMBER(10,9);
    BEGIN
       DBMS_PREDICTIVE_ANALYTICS.PREDICT(
          accuracy            => v_accuracy,
          data_table_name     => 'mining_data_build_v',
          case_id_column_name => 'cust_id',
          target_column_name  => 'affinity_card',
          result_table_name   => 'PA_PREDICT');

       -- Display the predictive confidence returned by the procedure
       DBMS_OUTPUT.PUT_LINE('Accuracy of model = ' || v_accuracy);
    END;
    /

This took about 15 seconds to run on my laptop, which is surprisingly quick given all the work that it is doing internally. To see the predictions and the results from the PREDICT procedure, you will need to query the PA_PREDICT table.

The final step that you might be interested in is to compare the original target value with the prediction value.

    SELECT v.cust_id,
           v.affinity_card,
           p.prediction,
           p.probability
    FROM   mining_data_build_v v,
           pa_predict p
    WHERE  v.cust_id = p.cust_id
    AND    rownum <= 12;
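Looking at a dozen rows only gives a feel for the output. A simple way to summarise the comparison across all of the records is to count the combinations of actual and predicted values, which gives a basic confusion matrix. This is a small sketch of my own that uses the same mining_data_build_v view and PA_PREDICT table as above; the column aliases are only illustrative.

```sql
-- Count how often each actual target value was given each predicted value.
-- Rows where actual = predicted are the correct predictions.
SELECT v.affinity_card AS actual_value,
       p.prediction    AS predicted_value,
       COUNT(*)        AS num_records
FROM   mining_data_build_v v
       JOIN pa_predict p ON v.cust_id = p.cust_id
GROUP  BY v.affinity_card, p.prediction
ORDER  BY v.affinity_card, p.prediction;
```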

Remember we do not get to see how or what Oracle did to generate these results. We do not get the opportunity to tune the process and the model.

So you have to be careful about when you use the PREDICT procedure and on what data. Would you use this as a way to explore your data and to see if predictive analytics/data mining might be useful for you? Yes, you would. Would you use it in a production scenario? The answer is maybe, but it depends on the scenario. In reality, if you want to do this in a production environment you will put some work into developing data mining models that best fit your data. To do this you will need to move on to the ODM tool and the DBMS_DATA_MINING package. But the PREDICT procedure is a quick way to get a small data set scored (in some way) based on your existing data. If your marketing department says they want to start a telemarketing campaign in a couple of hours then PREDICT is what you need to use. It may not give you the most accurate of results, but it does give you results that you can start using quickly.

DBMS_PREDICTIVE_ANALYTICS & Explain

There are 2 PL/SQL packages for performing data mining/predictive analytics in Oracle. The main PL/SQL package is DBMS_DATA_MINING. This package allows you to build data mining models and to apply them to new data. But there is another PL/SQL package.

The DBMS_PREDICTIVE_ANALYTICS package is very different to the DBMS_DATA_MINING package. The DBMS_PREDICTIVE_ANALYTICS package includes routines for predictive analytics, an automated form of data mining. With predictive analytics, you do not need to be aware of model building or scoring. All mining activities are handled internally by the predictive analytics procedure.

Predictive analytics routines prepare the data, build a model, score the model, and return the results of model scoring. Before exiting, they delete the model and supporting objects.

The package comes with the following procedures: EXPLAIN, PREDICT and PROFILE. To get some details about these procedures we can run the following in SQL.
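The listing that originally appeared at this point is not reproduced here. One way to get this kind of information, assuming you can see the SYS-owned package through the ALL_ARGUMENTS dictionary view, is a query along the following lines (the column alias is my own).

```sql
-- List the procedures in the package together with their parameters.
SELECT object_name   AS procedure_name,
       position,
       argument_name,
       data_type,
       in_out
FROM   all_arguments
WHERE  owner        = 'SYS'
AND    package_name = 'DBMS_PREDICTIVE_ANALYTICS'
ORDER  BY object_name, position;
```

In SQL*Plus or SQL Developer, running DESCRIBE DBMS_PREDICTIVE_ANALYTICS gives a similar summary.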

This blog post will look at the EXPLAIN procedure.

EXPLAIN creates an attribute importance model. Attribute importance uses the Minimum Description Length algorithm to determine the relative importance of attributes in predicting a target value. EXPLAIN returns a list of attributes ranked in relative order of their impact on the prediction. This information is derived from the model details for the attribute importance model.

Attribute importance models are not scored against new data. They simply return information (model details) about the data you provide.

I’ve written two previous blog posts on Attribute Importance. One of these was on how to calculate Attribute Importance using the Oracle Data Miner tool. In the ODM tool it is now called Feature Selection and is part of the Filter Columns node and the Attribute Importance model is not persisted in the database. The second blog post was how you can create the Attribute Importance using the DBMS_DATA_MINING package.

EXPLAIN ranks attributes in order of influence in explaining a target column.

The syntax of the procedure is:

    DBMS_PREDICTIVE_ANALYTICS.EXPLAIN (
        data_table_name      IN VARCHAR2,
        explain_column_name  IN VARCHAR2,
        result_table_name    IN VARCHAR2,
        data_schema_name     IN VARCHAR2 DEFAULT NULL);

where

data_table_name = Name of input table or view

explain_column_name = Name of column to be explained

result_table_name = Name of table where results are saved. It creates a new table in your schema.

data_schema_name = Name of schema where the input table or view resides. Default: the current schema.

So when calling the function you do not have to include the last parameter.

Using the same example that I have given in the previous blog posts (see above for the links to these), the following command can be run to generate the Attribute Importance.

    BEGIN
       DBMS_PREDICTIVE_ANALYTICS.EXPLAIN(
          data_table_name     => 'mining_data_build_v',
          explain_column_name => 'affinity_card',
          result_table_name   => 'PA_EXPLAIN');
    END;
    /

One thing that stands out is that it is a bit slower to run than the DBMS_DATA_MINING method. On my laptop it took approximately two to three times longer to run. But in total it was less than a minute.

To display the results,

The results are ranked in a 0 to 1 range. Any attribute that had a negative value is set to zero.
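The query that displayed the results is not included above. A minimal version, assuming the PA_EXPLAIN table created by the call to EXPLAIN and the result columns that the procedure generates (ATTRIBUTE_NAME, EXPLANATORY_VALUE and RANK), could look like this.

```sql
-- Show the attributes in order of their importance in explaining
-- the affinity_card column, most important first.
SELECT attribute_name,
       ROUND(explanatory_value, 4) AS explanatory_value,
       rank
FROM   pa_explain
ORDER  BY rank, attribute_name;
```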

Outputting your data using inbuilt SQL Dev formatting

Oracle has built a number of formatting options into SQL Developer to allow you to output your data in some standard formats. This removes the need to use other tools, to write extra code or to perform various follow-up steps.

All you need to do is to add a comment and use the Run Script button.

    SELECT /*csv*/ * FROM scott.emp;
    SELECT /*xml*/ * FROM scott.emp;
    SELECT /*html*/ * FROM scott.emp;
    SELECT /*delimited*/ * FROM scott.emp;
    SELECT /*insert*/ * FROM SCOTT.EMP;
    SELECT /*loader*/ * FROM scott.emp;
    SELECT /*fixed*/ * FROM scott.emp;
    SELECT /*text*/ * FROM scott.emp;

Hint: for some of these it is best to list the schema and table name in upper case.

These are comments and not hints so they will not work in SQL*Plus.

Getting Real Business Value from Oracle Data Mining and OBIEE

Over the past 16 months (or so) I have given a joint presentation with Anthony Heljula called ‘Getting Real Business Value from Oracle Data Mining and OBIEE’ at a number of conferences and OUG SIGs.

We have had a lot of very positive feedback on this presentation. The presentation is a busy 45 minutes (questions only at the end) that walks through a pilot data science project we did for a University in the UK.

We used Oracle Data Miner to build a predictive model that looks at student churn. We then integrated this Student Churn model into OBIEE Dashboards to illustrate how, by building an Oracle Data Miner model into our data analysis, we can gain greater insight into our data.

We have submitted this presentation for Oracle Open World 2013 but we have renamed the title of the presentation to

“How UK Universities are using Oracle Data Science to protect their income”

If you are involved in presentation selection or know someone who is then maybe you might select this to be presented at OOW13 in September.

We submitted the presentation for OOW12 with no luck. So fingers crossed this time.

Oracle Data Miner Videos–Updated list

Over the past couple of weeks Charlie Berger has put together a few videos on Oracle Data Miner and has posted these on YouTube. Below are the links to these videos and to the YouTube videos I made back in 2011 on Oracle Data Miner.

Oracle Data Miner Comes of Age – Brendan Tierney

ODM 11g R2 – Creating ODM User & Repository – Brendan Tierney

ODM 11gR2 – Exporting and Importing ODM Workflows – Brendan Tierney

ODM 11gR2 – Dropping the Repository – Brendan Tierney

I must get back to making a few more videos!

Overview presentation and demonstration of Oracle Advanced Analytics Option – by Charlie Berger

Fraud and Anomaly Detection using Oracle Advanced Anlaytics Part 1 Concepts – by Charlie Berger

Fraud and Anomaly Detection using Oracle Advanced Anlaytics Part 2 Demo – by Charlie Berger

Oracle buys Darwin back in 1999

The following is an extract from the September/October 1999 edition of Oracle Magazine, about Oracle buying Thinking Machines. Their data mining software Darwin was integrated into the Oracle Database and renamed Oracle Data Miner.

“Oracle Corporation’s recent acquisition of Thinking Machines’ data mining business extends Oracle’s data warehouse platform and business intelligence solution to include enterprise reporting, ad hoc query, advanced analysis and data mining software based on a common internet platform.

Oracle plans to incorporate the data mining software as an integral feature of Oracle Applications Customer Relationship Management site, which will facilitate the implementation of the e-business solutions developed by Oracle customers. In addition to the software technology, Oracle will receive rights to the domains think.com and thinkingmachines.com.

About Thinking Machines

Originally founded in 1983, Thinking Machines Corporation revolutionized high performance computing with its massively parallel supercomputing technology. The company has since evolved to focus exclusively on its Darwin data mining software for database marketing in the financial services and telecommunications industries. Darwin analyzes massive volumes of customer transaction, demographic and psychographic data, which can often amount to hundreds of millions of customer data records.

These advanced analyses help companies profile and target customers with greater accuracy, which allows companies to reduce customer attrition, assess customer profitability, cross sell to existing customers and detect fraud.

Darwin puts powerful data mining techniques in the hands of general business users and experienced analysts alike. Easy-to-use wizards automate data mining while providing advanced users with full control over all options and parameters. The Darwin software combines advanced analytics – including neural networks, decision trees and memory based reasoning, with impressive power and performance.

The solution’s one button model code generation, powerful scripting language and robust software development kit bring prediction capabilities to sales, call center, marketing and the web.

Platforms and Languages

Darwin runs on Sun Microsystems and Hewlett-Packard servers and exports data mining models in C, C++ and Java for execution within Oracle Databases. A Microsoft Windows NT release is planned for later this year.”

Part 2–Getting started with Statistics for Oracle Data Science projects

This is the second blog on getting started with Statistics for Oracle Data Science projects.

- The first blog post in the series looked at the DBMS_STAT_FUNCS PL/SQL package, what it can be used for and I give some sample code on how to use it in your data science projects. I also give some sample code that I typically run to gather some additional stats.

- The second blog post will look at some of the other statistical functions that exist in SQL that you will/may use regularly in your data science projects.

- The third blog post will provide a summary of the other statistical functions that exist in the database.

In this blog post I will look at 3 more useful statistical functions that are available in the Oracle database. Remember these come as standard with the database. The first function I will look at is the WIDTH_BUCKET function. This can be used to create some histograms of the data. A common task in analytics projects is to produce some cross tabs of the data. Oracle has the STATS_CROSSTAB function for this. The last thing I will look at is the different ways you can sample the data.

Histograms using WIDTH_BUCKET

When exploring your data it is useful to group values together into a number of buckets. Typically you might want to define the width of each bucket yourself before passing the data into your data mining tools, but before you can decide what these are you need to do some exploring using a variety of widths. A good way to do this is to use the WIDTH_BUCKET function. This takes the following inputs:

Expression: This is the expression or attribute on which you want to build the histogram.

Min Value: This is the lower or starting value of the first bucket

Max Value: This is the last or highest value for the last bucket

Num Buckets: This is the number of buckets you want created.

Typically the Min Value and the Max Value can be calculated using the MIN and MAX functions. As a starting point you would generally select 10 for the number of buckets. This is the number you will change, downwards as well as upwards, to see if a particular pattern exists in the attribute.
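To see how the function assigns values, here is a minimal sketch that uses literal values (a range of 17 to 90 and 10 buckets, chosen purely for illustration) rather than a real table. A value below the Min Value lands in bucket 0, and a value equal to or above the Max Value lands in bucket Num Buckets + 1.

```sql
-- Illustrative literals only; DUAL is Oracle's one-row dummy table.
SELECT WIDTH_BUCKET(16, 17, 90, 10) AS below_min,  -- 0  (underflow bucket)
       WIDTH_BUCKET(17, 17, 90, 10) AS at_min,     -- 1
       WIDTH_BUCKET(25, 17, 90, 10) AS inside,     -- 2
       WIDTH_BUCKET(90, 17, 90, 10) AS at_max      -- 11 (overflow bucket)
FROM   dual;
```

The overflow behaviour is the reason the upper bound is often nudged up slightly, so that the largest value in the data still falls inside the last regular bucket.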

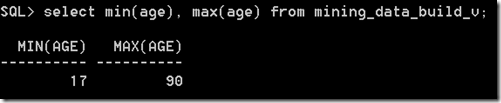

Using the example scenario that I used in the first blog post, let us start by calculating the MIN and MAX for the AGE attribute.
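The query for this step is short. A sketch, assuming the MINING_DATA_BUILD_V view that is used in the queries in this post (the column aliases are my own):

```sql
-- Lowest and highest AGE values; these become the Min Value and Max Value inputs.
SELECT MIN(age) AS min_age,
       MAX(age) AS max_age
FROM   mining_data_build_v;
```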

Let's say that we want to create 10 buckets. With a minimum age of 17 and a maximum of 90, this gives a bucket width of (90 - 17) / 10 = 7.3, giving us the following buckets.

Bucket 1: 17-24.3

Bucket 2: 24.3-31.6

Bucket 3: 31.6-38.9

Bucket 4: 38.9-46.2

Bucket 5: 46.2-53.5

Bucket 6: 53.5-60.8

Bucket 7: 60.8-68.1

Bucket 8: 68.1-75.4

Bucket 9: 75.4-82.7

Bucket 10: 82.7-90

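The WIDTH_BUCKET function assigns each record to one of these buckets in the following query. Note that the query passes max(age)+1 as the upper bound, because a value equal to the Max Value would otherwise fall into an overflow bucket 11; this makes each bucket slightly wider (7.4) than in the list above.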

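    -- Assign each customer's age to one of 10 equal-width buckets.
    -- max(age)+1 keeps the oldest customers in bucket 10, not overflow bucket 11.
    SELECT cust_id,
           age,
           WIDTH_BUCKET(age,
                        (SELECT MIN(age) FROM mining_data_build_v),
                        (SELECT MAX(age) + 1 FROM mining_data_build_v),
                        10) bucket
    FROM   mining_data_build_v
    WHERE  rownum <= 12;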

To plot the histogram for AGE we need one more level of detail: we need to aggregate all the records by bucket to get a frequency count for each one.

    -- Frequency count per bucket: the data needed to plot the AGE histogram.
    SELECT intvl, COUNT(*) freq
    FROM  (SELECT WIDTH_BUCKET(age,
                               (SELECT MIN(age) FROM mining_data_build_v),
                               (SELECT MAX(age) + 1 FROM mining_data_build_v),
                               10) intvl
           FROM   mining_data_build_v)
    GROUP BY intvl
    ORDER BY intvl;

We can take this code and embed it into the GATHER_DATA_STATS procedure that I gave in my Part 1 blog post.

Cross Tabs using STATS_CROSSTAB

Cross tabulation (or crosstabs for short) is a statistical process that summarises categorical data to create a contingency table. Crosstabs provide a basic picture of the interrelation between two variables and can help find interactions between them.

Because Crosstabs creates a row for each value in one variable and a column for each value in the other, the procedure is not suitable for continuous variables that assume many values.

In Oracle we can produce crosstabs using one of Oracle's reporting tools. If you don’t have one of these, you can use the in-database function STATS_CROSSTAB. This function takes three parameters: the first two are the attributes you want to compare, and the third is the test you want to perform. The tests available include:

- CHISQ_OBS: Observed value of chi-squared

- CHISQ_SIG: Significance of observed chi-squared

- CHISQ_DF: Degree of freedom for chi-squared

- PHI_COEFFICIENT: Phi coefficient

- CRAMERS_V: Cramer’s V statistic

- CONT_COEFFICIENT: Contingency coefficient

- COHENS_K: Cohen’s kappa

CHISQ_SIG is the default.

Now let us look at some examples using our same data set.
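As a minimal sketch, the query below compares two categorical attributes and returns several of the statistics listed above in one pass. It assumes that the MINING_DATA_BUILD_V view has CUST_GENDER and AFFINITY_CARD columns; substitute any two categorical attributes from your own data set.

```sql
-- Assumed columns: cust_gender and affinity_card (swap in your own attributes).
SELECT STATS_CROSSTAB(cust_gender, affinity_card, 'CHISQ_OBS') AS chi_squared,
       STATS_CROSSTAB(cust_gender, affinity_card, 'CHISQ_SIG') AS p_value,
       STATS_CROSSTAB(cust_gender, affinity_card, 'CHISQ_DF')  AS degrees_of_freedom,
       STATS_CROSSTAB(cust_gender, affinity_card, 'CRAMERS_V') AS cramers_v
FROM   mining_data_build_v;
```

A small p_value (for example below 0.05) suggests that the two attributes are not independent, and CRAMERS_V gives a rough indication of how strong the association is.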

Sampling Data

When our data sets are relatively small, consisting of a few hundred thousand records, we can explore the data in a relatively short period of time. But if your data sets are larger than that you may need to explore the data by taking a sample of it. Sampling takes a “random” selection of records from our data set, of the size we have specified for the sample.

In Oracle the SAMPLE clause takes a percentage figure. This is the percentage of the entire data set you want to have in the sampled result.

There is also a variant called SAMPLE BLOCK, which samples random data blocks rather than random rows; the figure given is then the percentage of blocks to select.
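A sketch of both variants, assuming the same MINING_DATA_BUILD_V view and an illustrative figure of 20 percent. Oracle only allows SAMPLE on base tables and key-preserving views, so if the view cannot be sampled in your environment, run the queries against the underlying table instead.

```sql
-- Row sampling: each row has roughly a 20% chance of being selected.
SELECT COUNT(*)
FROM   mining_data_build_v SAMPLE (20);

-- Block sampling: roughly 20% of the data blocks are selected.
SELECT COUNT(*)
FROM   mining_data_build_v SAMPLE BLOCK (20);
```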

Each time you use SAMPLE, Oracle generates a random number to use as the Seed. If you omit the Seed value, you will get a different result set on each execution, and the number of records returned will vary by a small amount from run to run.

If you would like to have the same Sample data set returned each time then you will need to specify a Seed value. The Seed must be an integer between 0 and 4294967295.
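For example, a sketch that assumes the same MINING_DATA_BUILD_V view, with an arbitrary Seed value of 124 chosen only for illustration:

```sql
-- The SEED value (124 here is arbitrary) makes the sample repeatable.
SELECT COUNT(*)
FROM   mining_data_build_v SAMPLE (20) SEED (124);
```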

In this case because we have specified the Seed we get the same “random” records being returned with each execution.
