2024 Leaving Certificate Results – Inline

The 2024 Leaving Certificate results are out. Up, down and across the country there have been tears of joy and some muted celebrations. But there have been some disappointments too. Although the government has been talking about how the marks have been increased through post-marking adjustments, this doesn’t help students come to terms with their results.

In previous years, I’ve looked at the profile of marks across some (not all) of the Leaving Certificate subjects. The tools I used included an Oracle Database and Oracle Analytics Cloud, along with other tools. Check out these previous posts:

- Leaving Certificate 2023 – Inline or more adjustments

- CAO Points 2023

- Leaving Certificate 2022 – Inflation, deflation or in-line

- CAO Points 2022

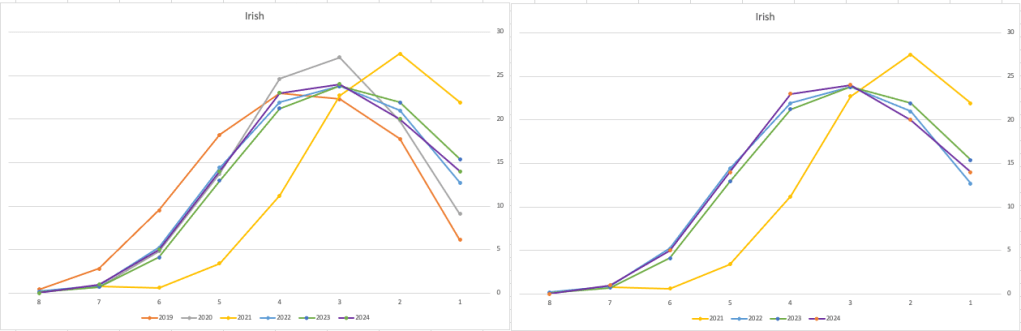

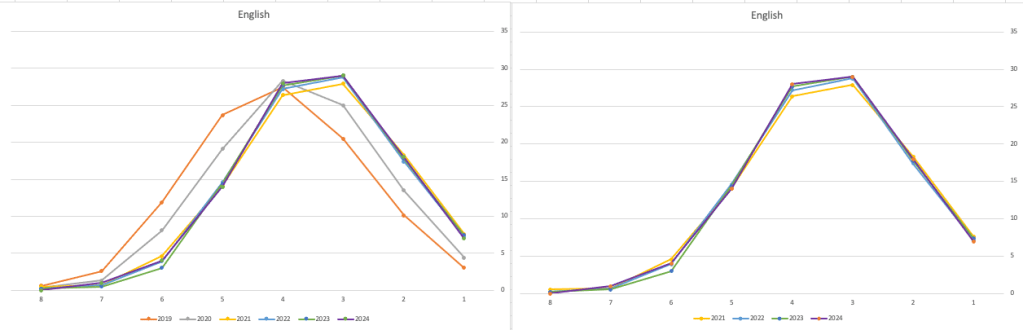

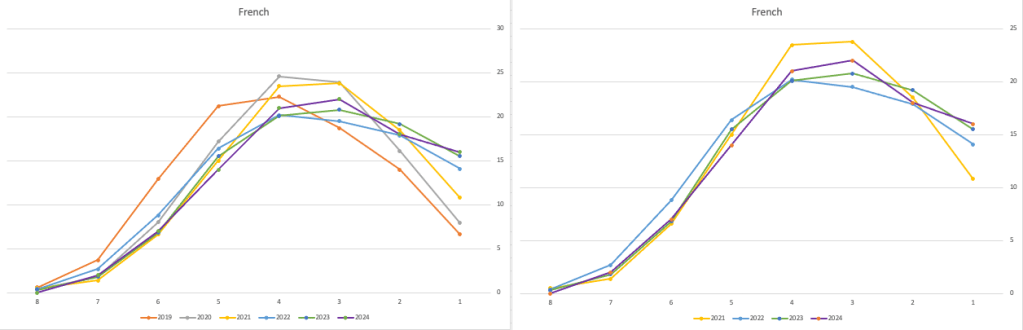

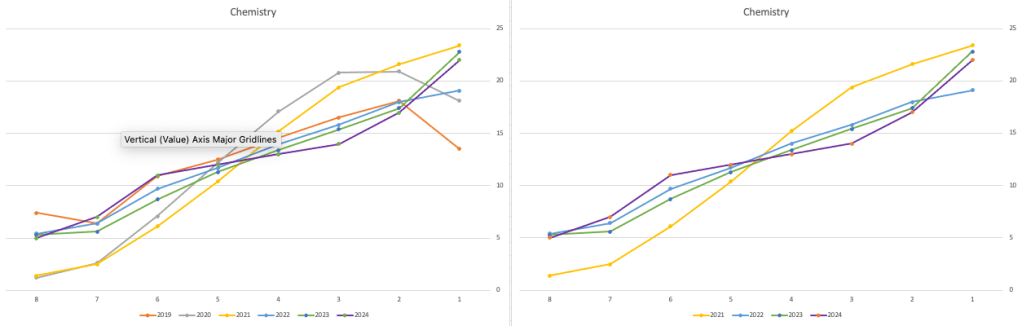

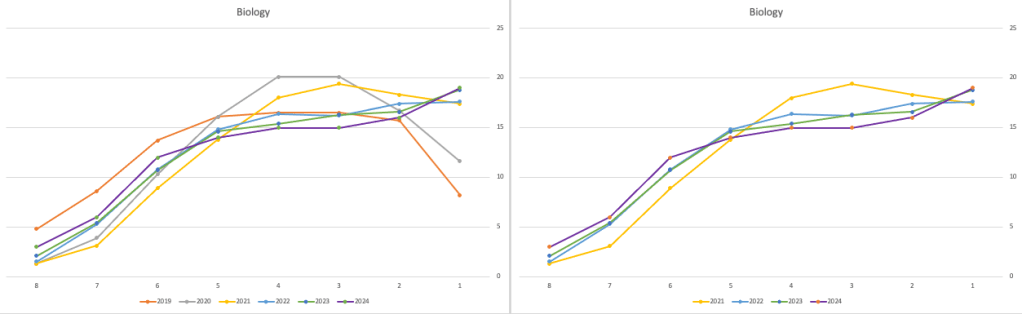

From analysing the results for 2024, both numerically and graphically, we can see the results this year are broadly in line with last year. That news might bring some joy to some students but will be slightly disappointing for others. You can see this for yourself from the graphics below. However, in some subjects, there appear to be some minor (yes, very minor) changes in the profiles, with a slight shift to the left, indicating a slight decrease in the higher grades and a corresponding increase in lower grades. But across many subjects, we have seen a slight increase in those achieving a H1 grade. The impact of these slight changes will be seen in the CAO points needed for courses at 550+ points. For the courses below 500 points, we might not see much of a change in CAO points, although there might be some minor fluctuations based on demand for certain courses, which is typical most years.

I’ll have another post looking at the CAO points after they are released, so look out for that post.

The charts below give the profile of marks for each year between 2019 (pre-Covid) and 2024. The chart on the left includes all years, and the chart on the right is for the last four years. This allows us to see if there have been any adjustments in the profiles over those years. For most subjects, we can see a marked reduction in marks since the exceptionally high marks of 2021. While some subjects are almost back to matching the 2019 profile (science subjects, Irish), for others the step back needed is small. Based on the messaging from the government, the stepping back will commence in 2025.

Back in April, an Irish Times article discussed the changes coming from 2025, where there will be a gradual return to “normal” (pre-Covid) profile of marks. Looking at the profile of marks over the past couple of years we can clearly see there has been some stepping back in the profile of marks. Some subjects are back to pre-Covid 2019 profiles. In some subjects, this is more evident than in others. They’ve used the phrase “on aggregate” to hide the stepping back in some subjects and less so in others.

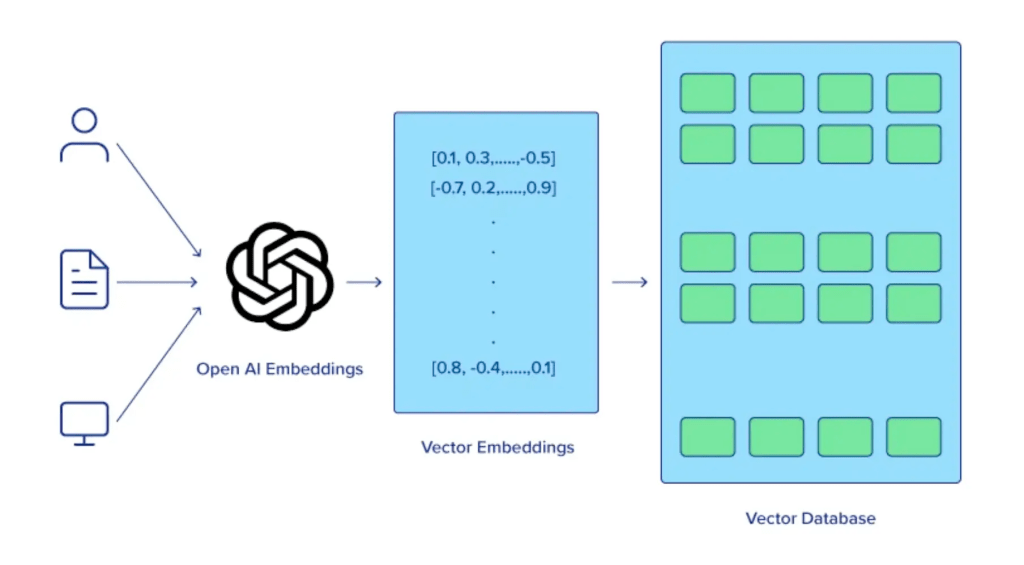

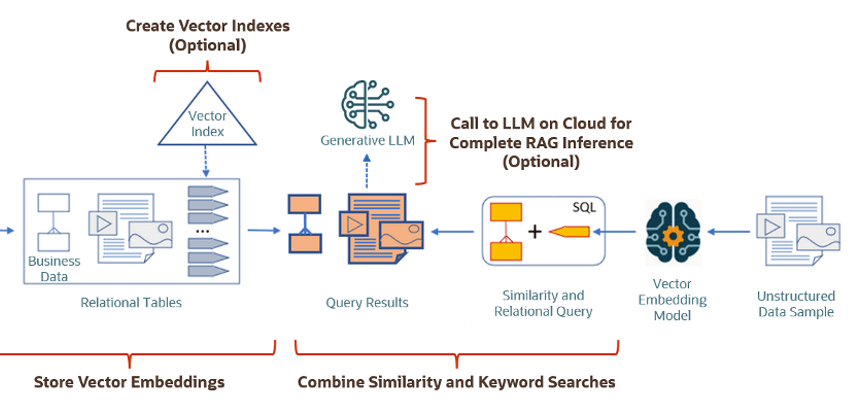

Using Cohere to Generate Vector Embeddings

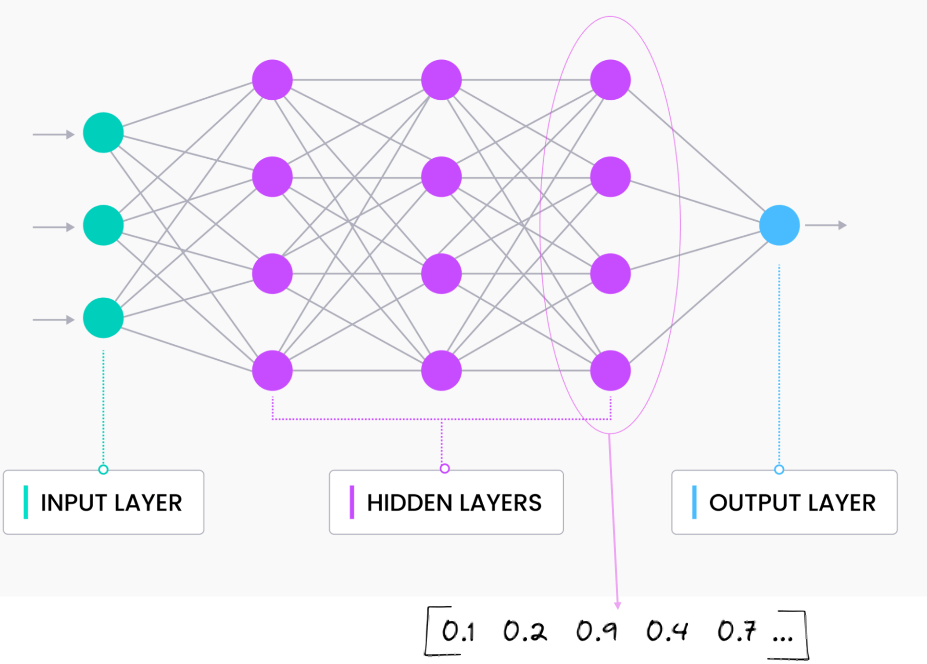

When using Vector Databases or Vector Embedding features in most (modern, multi-model) Databases, you can gain additional insights. The Vector Embeddings can be utilised for semantic searches, where you can find related data or information based on the values of the Vectors. One of the challenges is generating suitable vectors. There are a lot of options available for generating these Vector Embeddings. In this post, I’ll illustrate how to generate these using Cohere, and in a future post, I’ll illustrate using OpenAI. There are advantages and disadvantages to using these solutions. I’ll point out some of these for each solution (Cohere and OpenAI), using their Python API library.
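To make the idea of semantic search concrete, here is a minimal sketch of how vectors can be compared using cosine similarity. The three-dimensional vectors and the review labels are made-up values for illustration only (real embeddings have hundreds or thousands of dimensions), and a database with vector support would normally do this comparison for you.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy "embeddings" with made-up values, purely for illustration
reviews = {
    "crisp citrus white": [0.9, 0.1, 0.0],
    "zesty lemon white": [0.8, 0.2, 0.1],
    "bold oaky red": [0.1, 0.9, 0.3],
}
query = [0.85, 0.15, 0.05]  # pretend this is the embedding of a search phrase

# Rank the stored reviews from most to least similar to the query vector
ranked = sorted(reviews, key=lambda k: cosine_similarity(query, reviews[k]), reverse=True)
print(ranked)
```

The two white-wine vectors point in nearly the same direction as the query, so they rank ahead of the red.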

The first step is to install the Cohere Python library

pip install cohere

Next, you need to create an account with Cohere. This will allow you to get an API key. You can get a Trial API key but, as with most free or trial keys, you are restricted in the number of calls you can make. This is commonly referred to as Rate Limiting. The trial key for the embedding models allows up to 40 calls per minute. This is very limited, and each call is very slow. (I’ll discuss related issues about OpenAI rate limiting in another post.)
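One way to stay under a per-minute limit is to space the calls out on the client side. The sketch below is not part of the Cohere library; `MinIntervalThrottle` is a hypothetical helper name, and the 40 calls per minute figure is simply the trial limit mentioned above. Choosing a slightly lower value would leave some headroom.

```python
import time

class MinIntervalThrottle:
    """Blocks so that successive calls are at least 60 / calls_per_minute seconds apart."""

    def __init__(self, calls_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 60.0 / calls_per_minute
        self._clock = clock      # injectable so the helper can be tested without waiting
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now

# 40 calls per minute means one call every 1.5 seconds
throttle = MinIntervalThrottle(calls_per_minute=40)
# Inside an embedding loop, call throttle.wait() before each API request.
```

This only paces requests from a single process; it does not know about any limits enforced on the server side.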

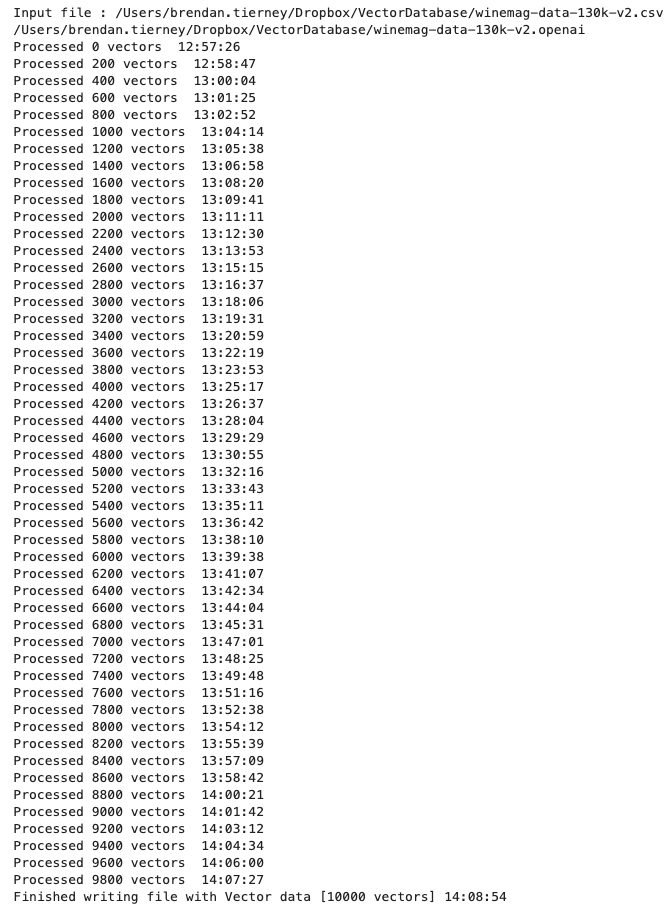

The dataset I’ll be using is the Wine Reviews 130K (dropbox link). This is widely available on many sites. I want to create Vector Embeddings for the ‘Description’ field in this dataset which contains a review of each wine. There are some columns with no values, and these need to be handled. For each wine review, I’ll create a SQL INSERT statement and print this out to a file. This file will contain an INSERT statement for each wine review, including the vector embedding.

Here’s the code (you’ll need to enter an API key and change the directory for the data file):

import os
import time
import pandas as pd
import cohere

co = cohere.Client(api_key="...")

data_file = ".../VectorDatabase/winemag-data-130k-v2.csv"
df = pd.read_csv(data_file)

print_every = 200
rate_limit = 1000  # number of reviews to process (the Cohere trial key allows 40 API calls per minute)

print("Input file :", data_file)
v_file = os.path.splitext(data_file)[0]+'.cohere'
print(v_file)

#Open file with write (over-writes previous file)
f=open(v_file,"w")
for index,row in df.head(rate_limit).iterrows():
    # co.embed expects a list of texts; list(text) would split the review into single characters
    phrases=[row['description']]
    model="embed-english-v3.0"
    input_type="search_query"
    #####
    res = co.embed(texts=phrases,
                   model=model,
                   input_type=input_type)  # optionally: embedding_types=['float']

    v_embedding = str(res.embeddings[0])
    # Build one INSERT statement per review, with the vector as the last value
    tab_insert="INSERT into WINE_REVIEWS_130K VALUES ("+str(row["Seq"])+"," \
        +'"'+str(row["description"])+'",' \
        +'"'+str(row["designation"])+'",' \
        +str(row["points"])+"," \
        +'"'+str(row["province"])+'",' \
        +str(row["price"])+"," \
        +'"'+str(row["region_1"])+'",' \
        +'"'+str(row["region_2"])+'",' \
        +'"'+str(row["taster_name"])+'",' \
        +'"'+str(row["taster_twitter_handle"])+'",' \
        +'"'+str(row["title"])+'",' \
        +'"'+str(row["variety"])+'",' \
        +'"'+str(row["winery"])+'",' \
        +"'"+v_embedding+"'"+");\n"
    f.write(tab_insert)
    if (index%print_every == 0):
        print(f'Processed {index} vectors ', time.strftime("%H:%M:%S", time.localtime()))

#Close vector file
f.close()
print(f"Finished writing file with Vector data [{index+1} vectors]", time.strftime("%H:%M:%S", time.localtime()))

The vector generated has 1024 dimensions. At this time there isn’t a parameter to change/reduce the number of dimensions.

The output file can now be run in your database, assuming you’ve created a table called WINE_REVIEWS_130K that has a column with the appropriate data type (e.g. VECTOR) for the embedding.
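One thing to watch for is the columns with no values. Converting a missing pandas value with str() produces the text nan, which then ends up in the INSERT statement. The sketch below shows one possible way to handle this, along with apostrophes inside the review text. The helper name `sql_literal` and the sample row are made up for illustration, the row is shortened to four columns, and the quoting follows the standard SQL convention of single-quoted strings with doubled single quotes, which you should check against your own database.

```python
import math
import numbers

def sql_literal(value):
    """Render a Python value as a SQL literal, mapping missing values to NULL."""
    if value is None or (isinstance(value, float) and math.isnan(value)):
        return "NULL"
    if isinstance(value, numbers.Real):
        return str(value)
    # Double any single quotes so the text stays inside one string literal
    return "'" + str(value).replace("'", "''") + "'"

# Made-up sample row with a missing price (NaN is how pandas represents empty cells)
row = {"title": "Example Winery 2013 White", "price": float("nan"),
       "points": 87, "description": "It's crisp and dry."}

columns = ["title", "price", "points", "description"]
values = ", ".join(sql_literal(row[c]) for c in columns)
print(f"INSERT INTO WINE_REVIEWS_130K VALUES ({values});")
```

With this approach an empty price or region is loaded as a real NULL rather than as the word nan.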

Warnings: When using the Cohere API with a trial key, you are limited to a maximum of 40 calls per minute. I found this to be inaccurate; it was more like 38 calls (for me). I also found the ‘per minute’ to be inaccurate. I had to wait several minutes, and up to five minutes, before I could attempt another run.

In an attempt to overcome this, I created a production API key. This involved giving some payment details, and this in theory should remove the ‘per minute’ rate limit, among other things. Unfortunately, for me this was not a good experience, as I had to make multiple attempts to run for 1000 records before I had a successful outcome. I experienced multiple Server 500 errors and other errors that related to Cohere server problems.

I wasn’t able to process more than 600 records before the errors occurred, and I wasn’t able to generate embeddings for a larger percentage of the dataset.
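A common way to cope with intermittent server errors is to retry the failed call after a pause that grows with each attempt. The sketch below is a generic illustration and not something taken from the Cohere library. `call_with_retries` is a hypothetical helper, the delays are arbitrary, and it catches every exception for simplicity, where real code would catch only the client's server-error exceptions.

```python
import time

def call_with_retries(func, max_attempts=5, base_delay=2.0, sleep=time.sleep):
    """Call func(); on failure wait base_delay * 2**attempt seconds and try again."""
    for attempt in range(max_attempts):
        try:
            return func()
        except Exception as exc:  # simplification: narrow this to server errors in real use
            if attempt == max_attempts - 1:
                raise  # out of attempts, let the caller see the error
            delay = base_delay * (2 ** attempt)
            print(f"Attempt {attempt + 1} failed ({exc}); retrying in {delay:.0f}s")
            sleep(delay)

# Hypothetical usage with the embedding call from the script:
# res = call_with_retries(lambda: co.embed(texts=phrases, model=model, input_type=input_type))
```

This will not fix a service that is down for a long period, but it can help a long run survive an occasional failed request.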

An additional issue is with the response time from Cohere. It was taking approx. 5 minutes to process 200 API calls.
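Since the embed call takes a list of texts, one way to reduce the number of API calls might be to send several reviews per request instead of one. The sketch below shows the batching logic only. `batched` and `embed_all` are hypothetical helper names, the batch size of 50 is an arbitrary value (check the Cohere documentation for the actual per-call limit), and it assumes the embeddings come back in the same order as the texts.

```python
def batched(items, batch_size):
    """Yield successive slices of items with at most batch_size elements each."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

def embed_all(texts, embed_batch, batch_size=50):
    """Embed every text, making one embed_batch(list_of_texts) call per batch."""
    vectors = []
    for batch in batched(texts, batch_size):
        vectors.extend(embed_batch(batch))
    return vectors

# Hypothetical wiring to the client used in the script (not run here):
# embed_batch = lambda batch: co.embed(texts=batch, model="embed-english-v3.0",
#                                      input_type="search_query").embeddings
# vectors = embed_all(df["description"].head(1000).tolist(), embed_batch)
```

With 1000 reviews and a batch size of 50, this would make 20 requests rather than 1000.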

So overall a rather poor experience. I then switched to OpenAI and had a slightly different experience. Check out that post for more details.

EU AI Act: Key Dates and Impact on AI Developers

The official text of the EU AI Act has been published in the EU Journal. This is another landmark point for the EU AI Act, as these regulations are set to enter into force on 1st August 2024. If you haven’t started your preparations for this, you really need to start now. See the timeline for the different stages of the EU AI Act below.

The EU AI Act is a landmark piece of legislation and similar legislation is being drafted/enacted in various geographic regions around the world. The EU AI Act is considered the most extensive legal framework for AI developers, deployers, importers, etc and aims to ensure AI systems introduced or currently being used in the EU internal market (even if they are developed and located outside of the EU) are secure, compliant with existing and new laws on fundamental rights and align with EU principles.

The key dates are:

- 2 February 2025: Prohibitions on Unacceptable Risk AI

- 2 August 2025: Obligations come into effect for providers of general purpose AI models. Appointment of member state competent authorities. Annual Commission review of and possible legislative amendments to the list of prohibited AI.

- 2 February 2026: Commission implementing act on post-market monitoring

- 2 August 2026: Obligations go into effect for high-risk AI systems specifically listed in Annex III, including systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review, and possible amendment of, the list of high-risk AI systems.

- 2 August 2027: Obligations go into effect for high-risk AI systems not prescribed in Annex III but intended to be used as a safety component of a product. Obligations go into effect for high-risk AI systems in which the AI itself is a product and the product is required to undergo a third-party conformity assessment under existing specific EU laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

- By End of 2030: Obligations go into effect for certain AI systems that are components of the large-scale information technology systems established by EU law in the areas of freedom, security and justice, such as the Schengen Information System.

Here is the link to the official text in the EU Journal publication.
