Select AI – OpenAI changes

A few weeks ago I wrote a few blog posts about using SelectAI. These illustrated integrating and using Cohere and OpenAI with SQL commands in your Oracle Cloud Database. See these links below.

- SelectAI – the beginning of a journey

- SelectAI – Doing something useful

- SelectAI – Can metadata help

- SelectAI – the APEX version

The constantly changing world of APIs has impacted the steps I outlined in those posts, particularly if you are using the OpenAI APIs. Two things have changed since I wrote those posts a few weeks ago. The first is with creating the OpenAI API keys. When creating a new key you now need to define a project. For now, just select ‘Default Project’. This is a minor change, but it has caused some confusion for those following the steps in my earlier blog post. I’ve updated that post to reflect the current setup for defining a new key in OpenAI. Oh, and remember to put a few dollars into your OpenAI account for your key to work. I put an initial $10 into my account and a few minutes later the API key was working for me from my Oracle (OCI) Database.

The second change is related to how the OpenAI API is called from Oracle (OCI) Databases. The API is now expecting a model name. From talking to the Oracle PMs, they will be implementing a fix in their Cloud Databases where the default model will be ‘gpt-3.5-turbo’, but in the meantime, you have to explicitly define the model when creating your OpenAI profile.

BEGIN
   -- The profile and credential names here are placeholders for an OpenAI setup;
   -- use the credential you created for your own OpenAI API key.
   --DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');
   DBMS_CLOUD_AI.create_profile(
      profile_name => 'OPEN_AI',
      attributes => '{"provider": "openai",
                      "credential_name": "OPENAI_CRED",
                      "object_list": [{"owner": "SH", "name": "customers"},
                                      {"owner": "SH", "name": "sales"},
                                      {"owner": "SH", "name": "products"},
                                      {"owner": "SH", "name": "countries"},
                                      {"owner": "SH", "name": "channels"},
                                      {"owner": "SH", "name": "promotions"},
                                      {"owner": "SH", "name": "times"}],
                      "model":"gpt-3.5-turbo"
                     }');
END;

Other model names you could use include gpt-4 or gpt-4o.
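If you generate your profiles from a script, it is easy to forget the model attribute. Here is a minimal Python sketch, assuming you build the attributes JSON outside the database and paste it into the create_profile call. The function name build_profile_attributes and the credential name OPENAI_CRED are my own placeholders, not part of any Oracle API.

```python
import json

def build_profile_attributes(provider, credential_name, tables, model, owner="SH"):
    """Return the JSON attributes string for a Select AI profile (illustrative helper)."""
    # Refuse to build a profile without an explicit model name.
    if not model:
        raise ValueError("a model name is required, e.g. 'gpt-3.5-turbo'")
    attributes = {
        "provider": provider,
        "credential_name": credential_name,
        "object_list": [{"owner": owner, "name": t} for t in tables],
        "model": model,
    }
    return json.dumps(attributes, indent=2)

attrs = build_profile_attributes(
    provider="openai",
    credential_name="OPENAI_CRED",  # placeholder credential name
    tables=["customers", "sales", "products"],
    model="gpt-3.5-turbo",
)
print(attrs)
```

The printed JSON can be used as the attributes value, and the check on model means a missing model name fails early in the script rather than later in the database.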

Oracle Object Storage – Parallel Downloading

In previous posts, I’ve given example Python code (and functions) for processing files into and out of OCI Object and Bucket Storage. One of these previous posts includes code and a demonstration of uploading files to an OCI Bucket using the multiprocessing package in Python.

Building upon these previous examples, the code below will download a Bucket using parallel processing. Like my last example, this code is based on the example code I gave in an earlier post on functions within a Jupyter Notebook.

Here’s the code.

import oci
import os
import argparse
from multiprocessing import Process
from glob import glob
import time

####
def upload_file(config, NAMESPACE, b, f, num):
    file_exists = os.path.isfile(f)
    if file_exists == True:
        try:
            start_time = time.time()
            object_storage_client = oci.object_storage.ObjectStorageClient(config)
            # open the file in a with block so the handle is closed after the upload
            with open(f, 'rb') as in_file:
                object_storage_client.put_object(NAMESPACE, b, os.path.basename(f), in_file)
            print(f'. Finished {num} uploading {f} in {round(time.time()-start_time,2)} seconds')
        except Exception as e:
            print(f'Error uploading file {num}. Try again.')
            print(e)
    else:
        print(f'... File {f} does not exist or cannot be found. Check file name and full path')

####
def check_bucket_exists(config, NAMESPACE, b_name):
    #check if Bucket exists
    is_there = False
    object_storage_client = oci.object_storage.ObjectStorageClient(config)
    l_b = object_storage_client.list_buckets(NAMESPACE, config.get("tenancy")).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True
    if is_there == True:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

####
def download_bucket_file(config, NAMESPACE, b, d, f, num):
    print(f'..Starting Download File ({num}):',f, ' from Bucket', b, ' at ', time.strftime("%H:%M:%S"))
    try:
        start_time = time.time()
        object_storage_client = oci.object_storage.ObjectStorageClient(config)
        get_obj = object_storage_client.get_object(NAMESPACE, b, f)
        # use a separate name for the file handle so the object name in f is not overwritten
        with open(os.path.join(d, f), 'wb') as out_file:
            for chunk in get_obj.data.raw.stream(1024 * 1024, decode_content=False):
                out_file.write(chunk)
        print(f'..Finished Download ({num}) in ', round(time.time()-start_time,2), 'seconds.')
    except Exception as e:
        print(f'Error trying to download file {f}. Check parameters and try again')
        print(e)

####
if __name__ == "__main__":
    #setup for OCI
    config = oci.config.from_file()
    object_storage = oci.object_storage.ObjectStorageClient(config)
    NAMESPACE = object_storage.get_namespace().data

    ####
    description = "\n".join(["Download files in parallel from OCI storage.",
                             "All files in <bucket_name> will be downloaded into <directory>.",
                             "",
                             "<bucket_name> must already exist."])
    parser = argparse.ArgumentParser(description=description,
                                     formatter_class=argparse.RawDescriptionHelpFormatter)
    parser.add_argument(dest='bucket_name',
                        help="Name of object storage bucket")
    parser.add_argument(dest='directory',
                        help="Path to local directory to download the files into.")
    args = parser.parse_args()

    ####
    bucket_name = args.bucket_name
    directory = args.directory

    if not os.path.isdir(directory):
        # print_usage() is the argparse method; parser.usage is only an attribute
        parser.print_usage()
    else:
        start_time = time.time()
        print('Starting Downloading Bucket - Parallel:', bucket_name, ' at ', time.strftime("%H:%M:%S"))
        object_storage_client = oci.object_storage.ObjectStorageClient(config)
        object_list = object_storage_client.list_objects(NAMESPACE, bucket_name).data
        count = len(object_list.objects)
        print(f'... {count} files to download')

        # start one process per object in the Bucket
        proc_list = []
        num=0
        for o in object_list.objects:
            p = Process(target=download_bucket_file, args=(config, NAMESPACE, bucket_name, directory, o.name, num))
            p.start()
            num+=1
            proc_list.append(p)

        # wait for all the downloads to finish
        for job in proc_list:
            job.join()

        print('---')
        print(f'Download Finished in {round(time.time()-start_time,2)} seconds.({time.strftime("%H:%M:%S")})')
#### the end ####

I’ve saved the code to a file called bucket_parallel_download.py.
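One thing to keep in mind: the script starts one process for every object in the Bucket, which is fine for 13 files but may be too many for a large Bucket. Here is a minimal sketch of one way to cap the number of concurrent downloads. Note that it swaps in a thread pool from concurrent.futures instead of multiprocessing.Process, and fake_download is a hypothetical stand-in for download_bucket_file that only sleeps, so the example runs without an OCI connection.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def fake_download(name):
    """Stand-in for download_bucket_file: sleeps instead of calling OCI."""
    time.sleep(0.05)
    return name

def download_all(names, max_workers=4):
    """Run the download function for every name, at most max_workers at a time."""
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # submit every download; the pool only runs max_workers of them at once
        futures = {pool.submit(fake_download, n): n for n in names}
        for future in as_completed(futures):
            finished.append(future.result())
    return finished

object_names = [f'file_{i}.jpg' for i in range(13)]
done = download_all(object_names, max_workers=4)
print(f'{len(done)} files downloaded')
```

Downloads are mostly waiting on the network, so threads are usually a reasonable fit here, but treat the max_workers value as something to tune for your own connection.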

To run the bucket_parallel_download.py script, I use the same DEMO_Bucket and directory of files I used in my previous posts.

python bucket_parallel_download.py DEMO_Bucket /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/

This creates the following output, and takes between 3.6 and 4.4 seconds to download the 13 files, depending on my connection.

[16:30~/Dropbox]> python bucket_parallel_download.py DEMO_Bucket /Users/brendan.tierney/DEMO_BUCKET

Starting Downloading Bucket - Parallel: DEMO_Bucket at 16:30:05

... 13 files to download

..Starting Download File (0): 2017-08-31 19.46.42.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (1): 2017-10-16 13.13.20.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (2): 2017-11-22 20.18.58.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (3): 2018-12-03 11.04.57.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (11): thumbnail_IMG_2333.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (5): IMG_2347.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (9): thumbnail_IMG_1711.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (4): 347397087_620984963239631_2131524631626484429_n.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (10): thumbnail_IMG_1712.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (8): thumbnail_IMG_1710.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (7): oug_ire18_1.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (6): IMG_6779.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (12): thumbnail_IMG_2336.jpg from Bucket DEMO_Bucket at 16:30:08

..Finished Download (9) in 0.67 seconds.

..Finished Download (11) in 0.74 seconds.

..Finished Download (10) in 0.7 seconds.

..Finished Download (5) in 0.8 seconds.

..Finished Download (7) in 0.7 seconds.

..Finished Download (1) in 1.0 seconds.

..Finished Download (12) in 0.81 seconds.

..Finished Download (4) in 1.02 seconds.

..Finished Download (6) in 0.97 seconds.

..Finished Download (2) in 1.25 seconds.

..Finished Download (8) in 1.16 seconds.

..Finished Download (0) in 1.47 seconds.

..Finished Download (3) in 1.47 seconds.

---

Download Finished in 4.09 seconds.(16:30:09)

Oracle Object Storage – Parallel Uploading

In my previous posts on using Python to work with OCI Object Storage, I gave code examples and illustrated how to create Buckets, explore Buckets, upload files, download files and delete files and buckets, all using Python and files on your computer.

- Oracle Object Storage – Setup and Explore

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

Building upon the code I’ve given for uploading files, which did so sequentially, in this post I’ve taken that code and expanded it to allow the files to be uploaded in parallel to an OCI Bucket. This is achieved using the Python multiprocessing library.

Here’s the code.

import oci
import os
import argparse
from multiprocessing import Process
from glob import glob
import time

####
def upload_file(config, NAMESPACE, b, f, num):
    file_exists = os.path.isfile(f)
    if file_exists == True:
        try:
            start_time = time.time()
            object_storage_client = oci.object_storage.ObjectStorageClient(config)
            # open the file in a with block so the handle is closed after the upload
            with open(f, 'rb') as in_file:
                object_storage_client.put_object(NAMESPACE, b, os.path.basename(f), in_file)
            print(f'. Finished {num} uploading {f} in {round(time.time()-start_time,2)} seconds')
        except Exception as e:
            print(f'Error uploading file {num}. Try again.')
            print(e)
    else:
        print(f'... File {f} does not exist or cannot be found. Check file name and full path')

####
def check_bucket_exists(config, NAMESPACE, b_name):
    #check if Bucket exists
    is_there = False
    object_storage_client = oci.object_storage.ObjectStorageClient(config)
    l_b = object_storage_client.list_buckets(NAMESPACE, config.get("tenancy")).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True
    if is_there == True:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

####
if __name__ == "__main__":
    #setup for OCI
    config = oci.config.from_file()
    object_storage = oci.object_storage.ObjectStorageClient(config)
    NAMESPACE = object_storage.get_namespace().data

    ####
    description = "\n".join(["Upload files in parallel to OCI storage.",
                             "All files in <directory> will be uploaded. Include '/' at end.",
                             "",
                             "<bucket_name> must already exist."])
    parser = argparse.ArgumentParser(description=description,
                                     formatter_class=argparse.RawDescriptionHelpFormatter)
    parser.add_argument(dest='bucket_name',
                        help="Name of object storage bucket")
    parser.add_argument(dest='directory',
                        help="Path to local directory containing files to upload.")
    args = parser.parse_args()

    ####
    bucket_name = args.bucket_name
    directory = args.directory

    if not os.path.isdir(directory):
        # print_usage() is the argparse method; parser.usage is only an attribute
        parser.print_usage()
    else:
        file_pattern = os.path.join(directory, "*")

        #### Check if Bucket Exists ####
        b_exists = check_bucket_exists(config, NAMESPACE, bucket_name)
        if b_exists == True:
            proc_list = []
            num=0
            start_time = time.time()
            try:
                #### Start uploading files ####
                for file_path in glob(file_pattern):
                    print(f"Starting {num} upload for {file_path}")
                    p = Process(target=upload_file, args=(config, NAMESPACE, bucket_name, file_path, num))
                    p.start()
                    num+=1
                    proc_list.append(p)
            except Exception as e:
                print(f'Error uploading file ({num}). Try again.')
                print(e)

            # wait for all the uploads to finish; only runs when the Bucket exists,
            # so proc_list, num and start_time are always defined here
            for job in proc_list:
                job.join()

            print('---')
            print(f'Finished uploading all files ({num}) in {round(time.time()-start_time,2)} seconds')
        else:
            print('... Create Bucket before uploading Directory.')
#### the end ####

I’ve saved the code to a file called bucket_parallel.py.
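A side note on file selection: this script finds its files with glob and a '*' pattern, while the upload_directory function from my earlier post used os.listdir. The two do not return the same list, which is why this script reports 13 files where the earlier directory upload reported 14 (it included .DS_Store). Here is a small sketch showing the difference on a temporary directory; the file names are just examples.

```python
import os
import tempfile
from glob import glob

# build a small demo directory: two images, one hidden file, one sub-folder
demo_dir = tempfile.mkdtemp()
for name in ('IMG_2347.jpg', 'oug_ire18_1.jpg', '.DS_Store'):
    with open(os.path.join(demo_dir, name), 'wb') as fh:
        fh.write(b'x')
os.mkdir(os.path.join(demo_dir, 'sub_folder'))

# os.listdir returns everything, including hidden files
listed = sorted(os.listdir(demo_dir))

# glob with '*' skips names that start with a dot, but still returns sub-folders
globbed = sorted(os.path.basename(p) for p in glob(os.path.join(demo_dir, '*')))

# keep regular files only, which is what upload_file checks with os.path.isfile
files_only = [n for n in globbed if os.path.isfile(os.path.join(demo_dir, n))]

print(listed)
print(globbed)
print(files_only)
```

In the script above, any sub-folder matched by glob is simply reported as not found by upload_file, because of its os.path.isfile check.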

To run the bucket_parallel.py script, I use the same DEMO_Bucket and directory of files I used in my previous posts.

python bucket_parallel.py DEMO_Bucket /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/

This creates the following output, and takes between 3.3 and 4.6 seconds to upload the 13 files, depending on my connection.

[15:29~/Dropbox]> python bucket_parallel.py DEMO_Bucket /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/

Bucket DEMO_Bucket exists.

Starting 0 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2336.jpg

Starting 1 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-08-31 19.46.42.jpg

Starting 2 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2333.jpg

Starting 3 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/347397087_620984963239631_2131524631626484429_n.jpg

Starting 4 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1712.jpg

Starting 5 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1711.jpg

Starting 6 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-11-22 20.18.58.jpg

Starting 7 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1710.jpg

Starting 8 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2018-12-03 11.04.57.jpg

Starting 9 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_6779.jpg

Starting 10 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/oug_ire18_1.jpg

Starting 11 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-10-16 13.13.20.jpg

Starting 12 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_2347.jpg

. Finished 2 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2333.jpg in 0.752561092376709 seconds

. Finished 5 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1711.jpg in 0.7750208377838135 seconds

. Finished 4 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1712.jpg in 0.7535321712493896 seconds

. Finished 0 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2336.jpg in 0.8419861793518066 seconds

. Finished 7 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1710.jpg in 0.7582859992980957 seconds

. Finished 10 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/oug_ire18_1.jpg in 0.8714470863342285 seconds

. Finished 12 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_2347.jpg in 0.8753311634063721 seconds

. Finished 1 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-08-31 19.46.42.jpg in 1.2201581001281738 seconds

. Finished 11 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-10-16 13.13.20.jpg in 1.2848408222198486 seconds

. Finished 3 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/347397087_620984963239631_2131524631626484429_n.jpg in 1.325110912322998 seconds

. Finished 9 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_6779.jpg in 1.6633048057556152 seconds

. Finished 8 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2018-12-03 11.04.57.jpg in 1.8549730777740479 seconds

. Finished 6 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-11-22 20.18.58.jpg in 2.018144130706787 seconds

---

Finished uploading all files (13) in 3.9126579761505127 seconds

Oracle Object Storage – Downloading and Deleting

In my previous posts on using Object Storage I illustrated what you need to do to set up your connection, explore Object Storage, create Buckets and add files. In this post, I’ll show you how to download files from a Bucket, and how to delete Buckets.

- Oracle Object Storage – Setup and Explore

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

Let’s start with downloading the files in a Bucket. In my previous post, I gave some Python code and functions for creating Buckets and loading files into them. The Python function below downloads every file in a Bucket into a local directory, creating that directory if it does not already exist. Further down, I also give a function for deleting a Bucket. A Bucket needs to be empty before it can be deleted, so that function checks for files and, if any exist, deletes them before proceeding with deleting the Bucket.

NAMESPACE needs to be defined, and you can see how that is done by looking at my earlier posts on this topic.

def download_bucket(b, d):
    if os.path.exists(d) == True:
        print(f'{d} already exists.')
    else:
        print(f'Creating {d}')
        os.makedirs(d)

    print('Downloading Bucket:',b)
    object_list = object_storage_client.list_objects(NAMESPACE, b).data
    count = 0
    for i in object_list.objects:
        count+=1
    print(f'... {count} files')

    for o in object_list.objects:
        print(f'Downloading object {o.name}')
        get_obj = object_storage_client.get_object(NAMESPACE, b, o.name)
        with open(os.path.join(d,o.name), 'wb') as f:
            for chunk in get_obj.data.raw.stream(1024 * 1024, decode_content=False):
                f.write(chunk)
    print('Download Finished.')

Here’s an example of this working.

download_dir = '/Users/brendan.tierney/DEMO_BUCKET'

download_bucket(BUCKET_NAME, download_dir)

/Users/brendan.tierney/DEMO_BUCKET already exists.

Downloading Bucket: DEMO_Bucket

... 14 files

Downloading object .DS_Store

Downloading object 2017-08-31 19.46.42.jpg

Downloading object 2017-10-16 13.13.20.jpg

Downloading object 2017-11-22 20.18.58.jpg

Downloading object 2018-12-03 11.04.57.jpg

Downloading object 347397087_620984963239631_2131524631626484429_n.jpg

Downloading object IMG_2347.jpg

Downloading object IMG_6779.jpg

Downloading object oug_ire18_1.jpg

Downloading object thumbnail_IMG_1710.jpg

Downloading object thumbnail_IMG_1711.jpg

Downloading object thumbnail_IMG_1712.jpg

Downloading object thumbnail_IMG_2333.jpg

Downloading object thumbnail_IMG_2336.jpg

Download Finished.
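The objects in this demo all have simple names, but object names in a Bucket can contain '/' characters (for example images/2017/photo.jpg). In that case, writing straight to os.path.join(d, o.name) fails unless the sub-directories already exist. Here is a minimal sketch of a helper that prepares the local path first. The name local_path_for is my own, and the example writes dummy bytes to a temporary directory so it runs without OCI.

```python
import os
import tempfile

def local_path_for(download_dir, object_name):
    """Map an object name to a local path, creating any sub-directories needed."""
    # Object names use '/' as the separator, whatever the local operating system is.
    # Empty, '.' and '..' parts are dropped so the path stays inside download_dir.
    parts = [p for p in object_name.split('/') if p not in ('', '.', '..')]
    if not parts:
        raise ValueError(f'cannot derive a file name from {object_name!r}')
    target = os.path.join(download_dir, *parts)
    os.makedirs(os.path.dirname(target), exist_ok=True)
    return target

demo_dir = tempfile.mkdtemp()
path = local_path_for(demo_dir, 'images/2017/oug_ire18_1.jpg')
with open(path, 'wb') as out_file:
    out_file.write(b'example bytes')
print(os.path.relpath(path, demo_dir))
```

In the download function, the returned path would take the place of os.path.join(d, o.name) in the open() call.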

We can also download individual files. Here’s a function to do that. It’s a simplified version of the previous function.

def download_bucket_file(b, d, f):
    print('Downloading File:',f, ' from Bucket', b)
    try:
        get_obj = object_storage_client.get_object(NAMESPACE, b, f)
        # use a separate name for the file handle so the object name in f is not overwritten
        with open(os.path.join(d, f), 'wb') as out_file:
            for chunk in get_obj.data.raw.stream(1024 * 1024, decode_content=False):
                out_file.write(chunk)
        print('Download Finished.')
    except Exception as e:
        print('Error trying to download file. Check parameters and try again')
        print(e)

download_dir = '/Users/brendan.tierney/DEMO_BUCKET'

file_download = 'oug_ire18_1.jpg'

download_bucket_file(BUCKET_NAME, download_dir, file_download)

Downloading File: oug_ire18_1.jpg from Bucket DEMO_Bucket

Download Finished.

The final function is to delete a Bucket from your OCI account.

def delete_bucket(b_name):
    bucket_exists = check_bucket_exists(b_name)
    objects_exist = False
    if bucket_exists == True:
        print('Starting - Deleting Bucket '+b_name)
        print('... checking if objects exist in Bucket (bucket needs to be empty)')
        try:
            object_list = object_storage_client.list_objects(NAMESPACE, b_name).data
            objects_exist = True
        except Exception as e:
            objects_exist = False

        if objects_exist == True:
            print('... ... Objects exists in Bucket. Deleting these objects.')
            count = 0
            for o in object_list.objects:
                count+=1
                object_storage_client.delete_object(NAMESPACE, b_name, o.name)
            if count > 0:
                print(f'... ... Deleted {count} objects in {b_name}')
            else:
                print(f'... ... Bucket is empty. No objects to delete.')
        else:
            print(f'... No objects to delete, Bucket {b_name} is empty')

        print(f'... Deleting bucket {b_name}')
        response = object_storage_client.delete_bucket(NAMESPACE, b_name)
        print(f'Deleted bucket {b_name}')

Before running this function, let’s do a quick check to see what Buckets I have in my OCI account.

list_bucket_counts()

Bucket name: ADW_Bucket

... num of objects : 2

Bucket name: Cats-and-Dogs-Small-Dataset

... num of objects : 100

Bucket name: DEMO_Bucket

... num of objects : 14

Bucket name: Demo

... num of objects : 210

Bucket name: Finding-Widlake-Bucket

... num of objects : 424

Bucket name: Planes-in-Satellites

... num of objects : 89

Bucket name: Vision-Demo-1

... num of objects : 10

Bucket name: root-bucket

... num of objects : 2

I’ve been using DEMO_Bucket in my previous examples and posts. We’ll use this to demonstrate the deleting of a Bucket.

delete_bucket(BUCKET_NAME)

Bucket DEMO_Bucket exists.

Starting - Deleting Bucket DEMO_Bucket

... checking if objects exist in Bucket (bucket needs to be empty)

... ... Objects exists in Bucket. Deleting these objects.

... ... Deleted 14 objects in DEMO_Bucket

... Deleting bucket DEMO_Bucket

Deleted bucket DEMO_Bucket
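The delete function above makes a single list_objects call. As far as I know, that call returns results a page at a time, so for a Bucket with a lot of objects you would need to keep asking for the next page before the Bucket is really empty. Here is a minimal sketch of that loop. It assumes the response exposes next_start_with and that list_objects accepts a start argument, so check the OCI SDK documentation for your version. FakeClient is a hypothetical stand-in I wrote so the example runs without an OCI connection.

```python
from types import SimpleNamespace

def iter_object_names(client, namespace, bucket_name):
    """Yield every object name in a bucket, following list_objects pages."""
    start = None
    while True:
        kwargs = {'start': start} if start else {}
        page = client.list_objects(namespace, bucket_name, **kwargs).data
        for obj in page.objects:
            yield obj.name
        # assumption: next_start_with is empty/None when there are no more pages
        start = page.next_start_with
        if not start:
            break

class FakeClient:
    """Stand-in for the Object Storage client, returning two objects per page."""
    def __init__(self, names, page_size=2):
        self.names = sorted(names)
        self.page_size = page_size

    def list_objects(self, namespace, bucket_name, start=None):
        remaining = [n for n in self.names if start is None or n >= start]
        page = remaining[:self.page_size]
        rest = remaining[self.page_size:]
        data = SimpleNamespace(
            objects=[SimpleNamespace(name=n) for n in page],
            next_start_with=rest[0] if rest else None,
        )
        return SimpleNamespace(data=data)

client = FakeClient(['a.jpg', 'b.jpg', 'c.jpg', 'd.jpg', 'e.jpg'])
all_names = list(iter_object_names(client, 'my_namespace', 'DEMO_Bucket'))
print(all_names)
```

With a real client, the delete loop would iterate over iter_object_names(...) and call delete_object for each name.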

Oracle Object Storage – Buckets & Loading files

In a previous post, I showed what you need to do to set up your local PC/laptop to be able to connect to OCI. I also showed how to perform some simple queries on your Object Storage environment. Go check out that post before proceeding with the examples in this post.

- Oracle Object Storage – Setup and Explore

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

In this post, I’ll build upon my previous post by giving some Python functions to:

- Check if Bucket exists

- Create a Bucket

- Delete a Bucket

- Upload an individual file

- Upload an entire directory

Let’s start with a function to see if a Bucket already exists.

def check_bucket_exists(b_name):
    #check if Bucket exists
    is_there = False
    l_b = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True
    if is_there == True:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

Here is a simple test for a Bucket called ‘DEMO_Bucket’. This was defined in a variable previously (see previous post). I’ll use ‘DEMO_Bucket’ throughout these examples.

b_exists = check_bucket_exists(BUCKET_NAME)

print(b_exists)

---

Bucket DEMO_Bucket does not exist.

False

Next we can move on to a function for creating a Bucket.

def create_bucket(b):
    #create Bucket if it does not exist
    bucket_exists = check_bucket_exists(b)
    if bucket_exists == False:
        try:
            create_bucket_response = object_storage_client.create_bucket(
                NAMESPACE,
                oci.object_storage.models.CreateBucketDetails(
                    name=b,   # use the function parameter for the Bucket name
                    compartment_id=COMPARTMENT_ID
                )
            )
            bucket_exists = True
            # Get the data from response
            print(f'Created Bucket {create_bucket_response.data.name}')
        except Exception as e:
            # print the exception itself; not every exception has a .message attribute
            print(e)
    else:
        bucket_exists = True
        print(f'... nothing to create.')
    return bucket_exists

Here is a simple test that creates the ‘DEMO_Bucket’ Bucket, using the variable defined previously (see previous post).

b_exists = create_bucket(BUCKET_NAME)

---

Bucket DEMO_Bucket does not exist.

Created Bucket DEMO_Bucket

Next, let’s delete a Bucket and any files stored in it.

def delete_bucket(b_name):
    bucket_exists = check_bucket_exists(b_name)
    objects_exist = False
    if bucket_exists == True:
        print('Starting - Deleting Bucket '+b_name)
        print('... checking if objects exist in Bucket (bucket needs to be empty)')
        try:
            object_list = object_storage_client.list_objects(NAMESPACE, b_name).data
            objects_exist = True
        except Exception as e:
            objects_exist = False

        if objects_exist == True:
            print('... ... Objects exists in Bucket. Deleting these objects.')
            count = 0
            for o in object_list.objects:
                count+=1
                object_storage_client.delete_object(NAMESPACE, b_name, o.name)
            if count > 0:
                print(f'... ... Deleted {count} objects in {b_name}')
            else:
                print(f'... ... Bucket is empty. No objects to delete.')
        else:
            print(f'... No objects to delete, Bucket {b_name} is empty')

        print(f'... Deleting bucket {b_name}')
        response = object_storage_client.delete_bucket(NAMESPACE, b_name)
        print(f'Deleted bucket {b_name}')

The example output below shows what happens when I’ve already loaded data into the Bucket (which I haven’t shown in the examples so far – but I will soon).

delete_bucket(BUCKET_NAME)

---

Bucket DEMO_Bucket exists.

Starting - Deleting Bucket DEMO_Bucket

... checking if objects exist in Bucket (bucket needs to be empty)

... ... Objects exists in Bucket. Deleting these objects.

... ... Bucket is empty. No objects to delete.

... Deleting bucket DEMO_Bucket

Deleted bucket DEMO_Bucket

Now that we have our functions for managing Buckets, we can add a function for uploading a file to a Bucket.

def upload_file(b, f):
    file_exists = os.path.isfile(f)
    if file_exists == True:
        #check to see if Bucket exists
        b_exists = check_bucket_exists(b)
        if b_exists == True:
            print(f'... uploading {f}')
            try:
                object_storage_client.put_object(NAMESPACE, b, os.path.basename(f), io.open(f,'rb'))
                print(f'. finished uploading {f}')
            except Exception as e:
                print(f'Error uploading file. Try again.')
                print(e)
        else:
            print('... Create Bucket before uploading file.')
    else:
        print(f'... File {f} does not exist or cannot be found. Check file name and full path')

Just select a file from your computer and give the full path to that file and the Bucket name.

up_file = '/Users/brendan.tierney/Dropbox/bill.xls'

upload_file(BUCKET_NAME, up_file)

---

Bucket DEMO_Bucket does not exist.

... Create Bucket before uploading file.

Our final function is an extended version of the previous one. This function takes a Directory path and uploads all the files to the Bucket.

def upload_directory(b, d):
    count = 0
    #check to see if Bucket exists
    b_exists = check_bucket_exists(b)
    if b_exists == True:
        #loop files
        for filename in os.listdir(d):
            print(f'... uploading {filename}')
            try:
                object_storage_client.put_object(NAMESPACE, b, filename, io.open(os.path.join(d,filename),'rb'))
                count += 1
            except Exception as e:
                print(f'... ... Error uploading file. Try again.')
                print(e)
    else:
        print('... Create Bucket before uploading files.')

    if count == 0:
        print('... Empty directory. No files uploaded.')
    else:
        print(f'Finished uploading Directory : {count} files into {b} bucket')

And to call it …

up_directory = '/Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/'

upload_directory(BUCKET_NAME, up_directory)

---

Bucket DEMO_Bucket exists.

... uploading thumbnail_IMG_2336.jpg

... uploading .DS_Store

... uploading 2017-08-31 19.46.42.jpg

... uploading thumbnail_IMG_2333.jpg

... uploading 347397087_620984963239631_2131524631626484429_n.jpg

... uploading thumbnail_IMG_1712.jpg

... uploading thumbnail_IMG_1711.jpg

... uploading 2017-11-22 20.18.58.jpg

... uploading thumbnail_IMG_1710.jpg

... uploading 2018-12-03 11.04.57.jpg

... uploading IMG_6779.jpg

... uploading oug_ire18_1.jpg

... uploading 2017-10-16 13.13.20.jpg

... uploading IMG_2347.jpg

Finished uploading Directory : 14 files into DEMO_Bucket bucket

Oracle Object Storage – Setup and Explore

This blog post will walk you through how to access Oracle OCI Object Storage and explore what buckets and files you have there, using Python and the OCI Python library. There will be additional posts which will walk through some of the other typical tasks you’ll need to perform with moving files into and out of OCI Object Storage.

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

The first thing you’ll need to do is to install the OCI Python library. You can do this with pip or, if you are using Anaconda, with its GUI. For example,

pip3 install oci

Check out the OCI Python documentation for more details.

Next, you’ll need to get and set up the configuration settings and download the .pem file.

We need to create the config file that will contain the required credentials and information for working with OCI. By default, this file is stored in: ~/.oci/config

mkdir ~/.oci

cd ~/.oci

Now create the config file, using vi or something similar.

vi config

Edit the file to contain the following, but look out for the parts that need to be changed/updated to match your OCI account details.

[ADMIN_USER]
user=ocid1.user.oc1..<unique_ID>
fingerprint=<your_fingerprint>
tenancy=ocid1.tenancy.oc1..<unique_ID>
region=us-phoenix-1
key_file=<path to key .pem file>

The above details can be generated by creating an API key for your OCI user. Copy and paste the default details to the config file.

- [ADMIN_USER] > you can name this anything you want, but it will be referenced in Python.

- user > enter the user ocid. OCID is the unique resource identifier that OCI provides for each resource.

- fingerprint > refers to the fingerprint of the public key you configured for the user.

- tenancy > your tenancy OCID.

- region > the region that you are subscribed to.

- key_file > the path to the .pem file you generated.

Download the .pem file and copy the configuration details shown when you create the API key. Add those details to the config file, and give the full path to the .pem file, including its name.
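Before loading the file with the OCI library, it can help to check that nothing is missing from it. Here is a minimal sketch using only Python’s standard configparser module. The function name missing_config_keys is my own, and the sample file below is written to a temporary location with made-up values purely for illustration.

```python
import configparser
import os
import tempfile

REQUIRED_KEYS = ('user', 'fingerprint', 'tenancy', 'region', 'key_file')

def missing_config_keys(config_path, profile):
    """Return the required keys that are absent or empty in the given profile."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read(os.path.expanduser(config_path))
    # has_section() never reports the special DEFAULT section, so skip the check for it
    if profile != 'DEFAULT' and not parser.has_section(profile):
        raise KeyError(f'profile [{profile}] not found in {config_path}')
    return [k for k in REQUIRED_KEYS
            if not parser.get(profile, k, fallback='').strip()]

# a sample config with the key_file entry left out on purpose
sample = """[ADMIN_USER]
user=ocid1.user.oc1..example
fingerprint=aa:bb:cc
tenancy=ocid1.tenancy.oc1..example
region=us-phoenix-1
"""
with tempfile.NamedTemporaryFile('w', suffix='.cfg', delete=False) as fh:
    fh.write(sample)
    sample_path = fh.name

missing = missing_config_keys(sample_path, 'ADMIN_USER')
print(missing)   # ['key_file']
```

For your own setup you would call missing_config_keys('~/.oci/config', 'ADMIN_USER') and expect an empty list back.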

You are now ready to use the OCI Python library to access and use your OCI cloud environment. Let’s run some tests to see if everything works and connects ok.

#import libraries

import oci

import json

import os

import io

#load the config file, using the profile name from the [ADMIN_USER] section

config = oci.config.from_file("~/.oci/config", "ADMIN_USER")

config

#only part of the output is displayed due to security reasons

{'log_requests': False, 'additional_user_agent': '', 'pass_phrase': None, 'user': 'oci...........

We can now define some core variables.

#My Compartment ID
COMPARTMENT_ID = "ocid1.tenancy.oc1.............."

#Object storage Namespace
object_storage_client = oci.object_storage.ObjectStorageClient(config)
NAMESPACE = object_storage_client.get_namespace().data

#Name of Bucket for this demo
BUCKET_NAME = 'DEMO_Bucket'

We can now define some functions to:

- List the Buckets in my OCI account

- List the number of files in each Bucket

- Number of files in a particular Bucket

- Check for Bucket Existence

def list_buckets():
    l_buckets = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    # Get the data from response
    for bucket in l_buckets:
        print(bucket.name)

def list_bucket_counts():
    l_buckets = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    for bucket in l_buckets:
        print("Bucket name: ",bucket.name)
        buck_name = bucket.name
        objects = object_storage_client.list_objects(NAMESPACE, buck_name).data
        count = 0
        for i in objects.objects:
            count+=1
        print('... num of objects :', count)

def check_bucket_exists(b_name):
    #check if Bucket exists
    is_there = False
    l_b = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True
    if is_there == True:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

def list_bucket_details(b):
    bucket_exists = check_bucket_exists(b)
    if bucket_exists == True:
        objects = object_storage_client.list_objects(NAMESPACE, b).data
        count = 0
        for i in objects.objects:
            count+=1
        print(f'Bucket {b} has objects :', count)

Now we can run these functions to test them. Before running these make sure you can create a connection to OCI.

SelectAI – the APEX version

I’ve written a few blog posts about the new Select AI feature on the Oracle Database. In this post, I’ll explore how to use this within APEX, because you have to do things in a different way.

The previous posts on Select AI are:

- SelectAI – the beginning of a journey

- SelectAI – Doing something useful

- SelectAI – Can metadata help

- SelectAI – the APEX version

We have seen in my previous posts how the PL/SQL package called DBMS_CLOUD_AI was used to create a profile. This profile provided details of what provided to use (Cohere or OpenAI in my examples), and what metadata (schemas, tables, etc) to send to the LLM. When you look at the DBMS_CLOUD_AI PL/SQL package it only contains seven functions (at time of writing this post). Most of these functions are for managing the profile, such as creating, deleting, enabling, disabling and setting the profile attributes. But there is one other important function called GENERATE. This function can be used to send your request to the LLM.

Why is the DBMS_CLOUD_AI.GENERATE function needed? My previous posts used Select AI from common SQL tools such as SQL Developer, SQLcl and the SQL Developer extension for VSCode. When using these tools we need to enable the SQL session to use Select AI by setting the profile. When using APEX or creating your own PL/SQL functions, etc., you’ll still need to set the profile, using

EXEC DBMS_CLOUD_AI.set_profile('OPEN_AI');

We can now use the DBMS_CLOUD_AI.GENERATE function to run our equivalent Select AI queries. We can use this to run most of the options for Select AI including showsql, narrate and chat. It’s important to note here that runsql is not supported, even though it is the default action when using Select AI from a SQL tool. Instead, you obtain the necessary SQL using showsql, and you can then execute the returned SQL yourself in your PL/SQL code.

Here are a few examples from my previous posts:

SELECT DBMS_CLOUD_AI.GENERATE(prompt => 'what customer is the largest by sales',

profile_name => 'OPEN_AI',

action => 'showsql')

FROM dual;

SELECT DBMS_CLOUD_AI.GENERATE(prompt => 'how many customers in San Francisco are married',

profile_name => 'OPEN_AI',

action => 'narrate')

FROM dual;

SELECT DBMS_CLOUD_AI.GENERATE(prompt => 'who is the president of ireland',

profile_name => 'OPEN_AI',

action => 'chat')

FROM dual;

If using Oracle 23c or higher, you no longer need to include the FROM DUAL clause.
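Because runsql is not available through DBMS_CLOUD_AI.GENERATE, the showsql-then-execute pattern might look something like the following when driven from Python. This is only a minimal sketch under a few assumptions: it expects a DB-API style cursor (for example one obtained from a python-oracledb connection), the bind names p_prompt and p_profile are my own, and the helper names generate_sql and run_prompt are hypothetical and not part of any Oracle library.

```python
GENERATE_SHOWSQL = """
    SELECT DBMS_CLOUD_AI.GENERATE(prompt       => :p_prompt,
                                  profile_name => :p_profile,
                                  action       => 'showsql')
    FROM dual"""


def _as_text(value):
    # CLOB results may arrive as a LOB object with read(), or as a plain str.
    return value.read() if hasattr(value, "read") else value


def generate_sql(cursor, prompt, profile_name):
    """Ask Select AI for the SQL text only (action => 'showsql')."""
    cursor.execute(GENERATE_SHOWSQL, {"p_prompt": prompt, "p_profile": profile_name})
    row = cursor.fetchone()
    if row is None or row[0] is None:
        raise ValueError("No SQL was returned for the prompt")
    # Remove any trailing semicolon before the statement is executed on its own.
    return _as_text(row[0]).strip().rstrip(";").strip()


def run_prompt(cursor, prompt, profile_name):
    """Generate the SQL with showsql, then execute it ourselves."""
    sql = generate_sql(cursor, prompt, profile_name)
    cursor.execute(sql)
    return sql, cursor.fetchall()
```

Returning the SQL alongside the rows keeps the generated statement available for logging or review, which is useful given how variable the generated SQL can be.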

SelectAI – Can metadata help

Continuing with the exploration of Select AI, in this post I’ll look at how metadata can help. In my previous posts on Select AI, I’ve walked through examples of exploring the data in the SH schema and how you can use some of the conversational features. These really give a lot of potential for developing some useful features in your apps.

Many of you might have encountered schemas where either the table names and/or column names didn’t make sense. Maybe their names looked like some weird code or something, and you had to look up a document, often referred to as a data dictionary, to decode the actual meaning. In some instances, these schemas cannot be touched and in others, minor changes are allowed. In these latter cases, we can look at adding some metadata to the tables to give meaning to these esoteric names.

For the following example, I’ve taken the simple EMP-DEPT tables and renamed the table and column names to something very generic. You’ll see I’ve added comments to explain the Tables and for each of the Columns. These comments should correspond to the original EMP-DEPT tables.

CREATE TABLE TABLE1(

c1 NUMBER(2) not null primary key,

c2 VARCHAR2(50) not null,

c3 VARCHAR2(50) not null);

COMMENT ON TABLE table1 IS 'Department table. Contains details of each Department including Department Number, Department Name and Location for the Department';

COMMENT ON COLUMN table1.c1 IS 'Department Number. Primary Key. Unique. Used to join to other tables';

COMMENT ON COLUMN table1.c2 IS 'Department Name. Name of department. Description of function';

COMMENT ON COLUMN table1.c3 IS 'Department Location. City where the department is located';

-- create the EMP table as TABLE2

CREATE TABLE TABLE2(

c1 NUMBER(4) not null primary key,

c2 VARCHAR2(50) not null,

c3 VARCHAR2(50) not null,

c4 NUMBER(4),

c5 DATE,

c6 NUMBER(10,2),

c7 NUMBER(10,2),

c8 NUMBER(2) not null);

COMMENT ON TABLE table2 IS 'Employee table. Contains details of each Employee. Employees';

COMMENT ON COLUMN table2.c1 IS 'Employee Number. Primary Key. Unique. How each employee is identified';

COMMENT ON COLUMN table2.c2 IS 'Employee Name. Name of each Employee';

COMMENT ON COLUMN table2.c3 IS 'Employee Job Title. Job Role. Current Position';

COMMENT ON COLUMN table2.c4 IS 'Manager for Employee. Manager Responsible. Who the Employee reports to';

COMMENT ON COLUMN table2.c5 IS 'Hire Date. Date the employee started in role. Commencement Date';

COMMENT ON COLUMN table2.c6 IS 'Salary. How much the employee is paid each month. Dollars';

COMMENT ON COLUMN table2.c7 IS 'Commission. How much the employee can earn each month in commission. This is extra on top of salary';

COMMENT ON COLUMN table2.c8 IS 'Department Number. Foreign Key. Join to Department Table';

insert into table1 values (10,'Accounting','New York');

insert into table1 values (20,'Research','Dallas');

insert into table1 values (30,'Sales','Chicago');

insert into table1 values (40,'Operations','Boston');

alter session set nls_date_format = 'YY/MM/DD';

insert into table2 values (7369,'SMITH','CLERK',7902,'93/6/13',800,0.00,20);

insert into table2 values (7499,'ALLEN','SALESMAN',7698,'98/8/15',1600,300,30);

insert into table2 values (7521,'WARD','SALESMAN',7698,'96/3/26',1250,500,30);

insert into table2 values (7566,'JONES','MANAGER',7839,'95/10/31',2975,null,20);

insert into table2 values (7698,'BLAKE','MANAGER',7839,'92/6/11',2850,null,30);

insert into table2 values (7782,'CLARK','MANAGER',7839,'93/5/14',2450,null,10);

insert into table2 values (7788,'SCOTT','ANALYST',7566,'96/3/5',3000,null,20);

insert into table2 values (7839,'KING','PRESIDENT',null,'90/6/9',5000,0,10);

insert into table2 values (7844,'TURNER','SALESMAN',7698,'95/6/4',1500,0,30);

insert into table2 values (7876,'ADAMS','CLERK',7788,'99/6/4',1100,null,20);

insert into table2 values (7900,'JAMES','CLERK',7698,'00/6/23',950,null,30);

insert into table2 values (7934,'MILLER','CLERK',7782,'00/1/21',1300,null,10);

insert into table2 values (7902,'FORD','ANALYST',7566,'97/12/5',3000,null,20);

insert into table2 values (7654,'MARTIN','SALESMAN',7698,'98/12/5',1250,1400,30);

Can Select AI be used to query this data? The simple answer is ‘ish’. Yes, Select AI can query this data, but some care is needed in how you phrase the questions and in refining the metadata descriptions given in the table and column Comments.
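If the descriptions come from an existing data dictionary document, the COMMENT statements could be generated rather than typed by hand. The following is a rough sketch of that idea: the helper names sql_literal and comment_statements are hypothetical, and the table and column names are assumed to be trusted identifiers because they are placed into the statement text as-is.

```python
def sql_literal(text):
    """Quote a Python string as an Oracle string literal, doubling single quotes."""
    return "'" + text.replace("'", "''") + "'"


def comment_statements(table, table_comment, column_comments):
    """Build COMMENT ON statements for one table and its columns."""
    statements = [f"COMMENT ON TABLE {table} IS {sql_literal(table_comment)}"]
    for column, comment in column_comments.items():
        statements.append(
            f"COMMENT ON COLUMN {table}.{column} IS {sql_literal(comment)}"
        )
    return statements


dept_comments = comment_statements(
    "table1",
    "Department table. Contains details of each Department",
    {
        "c1": "Department Number. Primary Key. Unique. Used to join to other tables",
        "c2": "Department Name. Name of department. Description of function",
        "c3": "Department Location. City where the department is located",
    },
)
for statement in dept_comments:
    print(statement + ";")
```

Keeping one dictionary entry per column makes it harder to comment the same column twice by mistake, and the descriptions can be refined in one place as you test different questions.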

To ensure these metadata Comments are exposed to the LLMs, we need to include the following line in our Profile

"comments":"true",

Using the same Profile setup I used for OpenAI, we need to include the tables and the (above) comments:true setting, as shown below.

BEGIN

DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPEN_AI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"comments":"true",

"object_list": [{"owner": "BRENDAN", "name": "TABLE1"},

{"owner": "BRENDAN", "name": "TABLE2"}],

"model":"gpt-3.5-turbo"

}');

END;

After we set the profile for our session, we can now write some statements to explore the data.
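The attributes value is just JSON text, so it can be easier to build it from a dictionary than to edit it inside a quoted PL/SQL string. Here is a minimal sketch of that approach. The function name build_profile_attributes is hypothetical, and it only covers the attributes used in these posts (provider, credential_name, object_list, comments, conversation and model).

```python
import json


def build_profile_attributes(provider, credential_name, tables,
                             model=None, comments=False, conversation=False):
    """Return the JSON text for the 'attributes' argument of create_profile."""
    attributes = {
        "provider": provider,
        "credential_name": credential_name,
        "object_list": [{"owner": owner, "name": name} for owner, name in tables],
    }
    # The profile examples pass these flags as the string "true", so do the same.
    if comments:
        attributes["comments"] = "true"
    if conversation:
        attributes["conversation"] = "true"
    if model is not None:
        attributes["model"] = model
    return json.dumps(attributes, indent=2)


attrs = build_profile_attributes(
    provider="openai",
    credential_name="OPENAI_CRED",
    tables=[("BRENDAN", "TABLE1"), ("BRENDAN", "TABLE2")],
    model="gpt-3.5-turbo",
    comments=True,
)
print(attrs)
```

The resulting text can be pasted into the create_profile call, or passed as the attributes parameter if you call the procedure from a program.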

Warning: if you don’t include "comments":"true", you’ll get no results returned.

Here are a few of the statements I wrote.

select ai what departments do we have;

select AI showsql what departments do we have;

select ai count departments;

select AI showsql count department;

select ai how many employees;

select ai how many employees work in department 30;

select ai count unique job titles;

select ai list cities where departments are located;

select ai how many employees work in New York;

select ai how many people work in each city;

select ai where are the departments located;

select ai what is the average salary for each department;

Check out the other posts about Select AI.

SelectAI – Doing something useful

In a previous post, I introduced Select AI and gave examples of how to do some simple things. These included using natural language questions to query some data in the Database. That post used both Cohere and OpenAI to process the requests. There were mixed results and some gave a different, somewhat disappointing, outcome. But with OpenAI the overall outcome was a bit more positive. To build upon the previous post, this post will explore some of the additional features of Select AI, which can give more options for incorporating Select AI into your applications/solutions.

Select AI has five parameters: ‘runsql’, ‘showsql’, ‘narrate’, ‘chat’ and ‘explainsql’. In the previous post, the examples focused on using the first parameter, although those examples didn’t include the parameter name ‘runsql’. It is the default parameter and can be excluded from the Select AI statement. Although there were mixed results from using this default parameter, it is the other parameters that make things a little bit more interesting and give you opportunities to include these in your applications. In particular, the ‘narrate’ and ‘explainsql’ parameters and, to a lesser extent, the ‘chat’ parameter. For the ‘chat’ parameter there are perhaps slightly easier and more efficient ways of doing this.

Let’s start by looking at the ‘chat’ parameter. This allows you to ‘chat’ with the LLM just like you would with ChatGPT and other similar tools. A useful attribute to set in CREATE_PROFILE is conversation, set to TRUE, as that can give more useful results as the conversation develops.

BEGIN

DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPEN_AI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"object_list": [{"owner": "SH", "name": "customers"},

{"owner": "SH", "name": "sales"},

{"owner": "SH", "name": "products"},

{"owner": "SH", "name": "countries"},

{"owner": "SH", "name": "channels"},

{"owner": "SH", "name": "promotions"},

{"owner": "SH", "name": "times"}],

"conversation": "true", "model":"gpt-3.5-turbo" }');

END;

Here are a few statements I’ve used.

select AI chat who is the president of ireland;

select AI chat what role does NAMA have in ireland;

select AI chat what are the annual revenues of Oracle;

select AI chat who is the largest cloud computing provider;

select AI chat can you rank the cloud providers by income over the last 5 years;

select AI chat what are the benefits of using Oracle Cloud;

As you’d expect the results can be ‘kind of correct’, with varying levels of information given. I’ve tried these using Cohere and OpenAI, and their responses illustrate the need for careful testing and evaluation of the various LLMs to see which one suits your needs.

In my previous post, I gave some examples of using Select AI to query data in the Database based on a natural language request. Select AI takes that request and sends it, along with details of the objects listed in the create_profile, to the LLM. The LLM then sends back the SQL statement, which is then executed in the Database and the results are displayed. But what if you want to see the SQL generated by the LLM? To see the SQL you can use the ‘showsql’ parameter. Here are a couple of examples:

SQL> select AI showsql how many customers in San Francisco are married;

RESPONSE

_____________________________________________________________________________

SELECT COUNT(*) AS total_married_customers

FROM SH.CUSTOMERS c

WHERE c.CUST_CITY = 'San Francisco'

AND c.CUST_MARITAL_STATUS = 'Married'

SQL> select AI what customer is the largest by sales;

CUST_ID CUST_FIRST_NAME CUST_LAST_NAME TOTAL_SALES

__________ __________________ _________________ ______________

11407 Dora Rice 103412.66

SQL> select AI showsql what customer is the largest by sales;

RESPONSE

_____________________________________________________________________________

SELECT C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME, SUM(S.AMOUNT_SOLD) AS TOTAL_SALES

FROM SH.CUSTOMERS C

JOIN SH.SALES S ON C.CUST_ID = S.CUST_ID

GROUP BY C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME

ORDER BY TOTAL_SALES DESC

FETCH FIRST 1 ROW ONLY

The examples above illustrating ‘showsql’ are kind of interesting. Careful consideration of how and where to use this is needed.
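One piece of that careful consideration is deciding whether generated SQL should ever be executed without a check. The sketch below shows one conservative way to screen the text returned by showsql before running it. It is a rough keyword filter of my own (is_safe_select is a hypothetical name), not a SQL parser, so it can reject valid queries, for example ones with a semicolon or a word like delete inside a string literal.

```python
import re

# Keywords that should never appear in a query we only expect to read data.
FORBIDDEN = re.compile(
    r"\b(insert|update|delete|merge|drop|alter|truncate|create|"
    r"grant|revoke|begin|declare|call|execute)\b",
    re.IGNORECASE,
)


def is_safe_select(sql):
    """Rough check that the text is one read-only SELECT (or WITH ... SELECT)."""
    statement = sql.strip().rstrip(";").strip()
    if not statement:
        return False
    if ";" in statement:  # more than one statement in the text
        return False
    if not re.match(r"(?is)^(select|with)\b", statement):
        return False
    return FORBIDDEN.search(statement) is None


print(is_safe_select("SELECT COUNT(*) FROM SH.CUSTOMERS c WHERE c.CUST_CITY = 'San Francisco'"))
print(is_safe_select("DROP TABLE SH.CUSTOMERS"))
```

A filter like this is no substitute for running the generated SQL as a user with read-only privileges on the objects in the profile.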

Things get a little bit more interesting with the ‘narrate’ parameter, which attempts to narrate or explain the output from the query. There are many use cases where this can be used to supplement existing dashboards, etc. The following are examples of using ‘narrate’ for the same two queries used above.

SQL> select AI narrate how many customers in San Francisco are married;

RESPONSE

________________________________________________________________

The total number of married customers in San Francisco is 18.

SQL> select AI narrate what customer is the largest by sales;

RESPONSE

_____________________________________________________________________________

To find the customer with the largest sales, you can use the following SQL query:

```sql

SELECT c.CUST_FIRST_NAME || ' ' || c.CUST_LAST_NAME AS CUSTOMER_NAME, SUM(s.AMOUNT_SOLD) AS TOTAL_SALES

FROM "SH"."CUSTOMERS" c

JOIN "SH"."SALES" s ON c.CUST_ID = s.CUST_ID

GROUP BY c.CUST_FIRST_NAME, c.CUST_LAST_NAME

ORDER BY TOTAL_SALES DESC

FETCH FIRST 1 ROW ONLY;

```

This query joins the "CUSTOMERS" and "SALES" tables on the customer ID and calculates the total sales for each customer. It then sorts the results in descending order of total sales and fetches only the first row, which represents the customer with the largest sales.

The result will be in the following format:

| CUSTOMER_NAME | TOTAL_SALES |

|---------------|-------------|

| Tess Drumm | 161882.79 |

In this example, the customer with the largest sales is "Tess Drumm" with a total sales amount of 161,882.79.

Looking at the outputs from using the ‘narrate‘ parameter, we can see the level of detail given and see the potential of including this kind of information in our applications, dashboards, etc. But to use this output some additional filtering and subsetting will need to be performed.
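As an illustration of the kind of filtering and subsetting that might be needed, here is a small sketch that separates a fenced SQL block from the narrative text and reads a pipe-delimited table into dictionaries. It assumes the response is shaped like the second narrate example above, which will not always be the case, and the function names split_narration and parse_markdown_table are hypothetical.

```python
import re

FENCE = "`" * 3  # three backticks, built this way to keep this listing readable

SQL_BLOCK = re.compile(FENCE + r"sql\s*(.*?)" + FENCE, re.DOTALL | re.IGNORECASE)


def split_narration(response):
    """Separate any fenced SQL from the explanatory text of a narrate response."""
    match = SQL_BLOCK.search(response)
    sql = match.group(1).strip() if match else None
    text = SQL_BLOCK.sub("", response)
    # Collapse the blank lines left behind by removing the SQL block.
    text = re.sub(r"\n{3,}", "\n\n", text).strip()
    return sql, text


def parse_markdown_table(text):
    """Turn a small pipe-delimited table into a list of dicts keyed by header."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if all(set(cell) <= set("-: ") for cell in cells):
            continue  # separator row made up of dashes
        rows.append(cells)
    if len(rows) < 2:
        return []
    header, *body = rows
    return [dict(zip(header, row)) for row in body]
```

For a response that is a single sentence, such as the first narrate example, split_narration returns no SQL and the sentence unchanged, so the same code path can handle both shapes.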

The final parameter is called ‘explainsql‘. While it may not be as useful as the ‘narrate‘ parameter, it does have some potential uses. The ‘explainsql‘ attempts to explain in natural language what a SQL query is attempting to do, based on a natural language statement. Again using the same two examples, we get the following.

SQL> select AI explainsql what customer is the largest by sales;

RESPONSE

_____________________________________________________________________________

SELECT C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME, SUM(S.AMOUNT_SOLD) AS TOTAL_SALES

FROM SH.CUSTOMERS C

JOIN SH.SALES S ON C.CUST_ID = S.CUST_ID

GROUP BY C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME

ORDER BY TOTAL_SALES DESC

FETCH FIRST 1 ROW ONLY;

Explanation:

- The query selects the customer ID, first name, last name, and the sum of the amount sold from the CUSTOMERS and SALES tables.

- The CUSTOMERS and SALES tables are joined on the CUST_ID column.

- The results are grouped by customer ID, first name, and last name.

- The results are then ordered in descending order by the total sales.

- The FETCH FIRST 1 ROW ONLY clause is used to retrieve only the top row with the highest total sales.

SQL> select AI explainsql how many customers in San Francisco are married;

RESPONSE

_____________________________________________________________________________

SELECT COUNT(*) AS total_married_customers

FROM SH.CUSTOMERS c

WHERE c.CUST_CITY = 'San Francisco'

AND c.CUST_MARITAL_STATUS = 'Married';

This query selects the count of customers who are married and live in San Francisco. The table alias "c" is used for the CUSTOMERS table. The condition "c.CUST_CITY = 'San Francisco'" filters the customers who live in San Francisco, and the condition "c.CUST_MARITAL_STATUS = 'Married'" filters the customers who are married. The result is the total number of married customers in San Francisco.

Check out the other posts about Select AI.

SelectAI – the beginning of a journey

Oracle released Select AI a few months ago, and with any new product it is always a good idea to give it a little time to fix any “bugs” or “features”. To a certain extent, the release of this capability is a long time behind the marketplace. Similar products have been available in different ways, in different products, in different languages, etc for some time now. I’m not going to get into the benefits of this feature/product, as lots have been written about this and most of those are just rehashing the documentation and the marketing materials created for the release. But over all this time, Oracle seems to have been focused on deploying generative AI and LLM related features into their vast collection of applications. Yes, they have done some really cool work with those applications. But during that period the everyday developer, outside of those Apps development teams, has been left waiting for too long to get proper access to this functionality. In most cases, they have gone elsewhere. One thing Oracle does need to address is the public messaging around certain behavioural aspects of Select AI. There has been some contradictory information between what it says in the documentation and what the various Product Managers are saying. This is a problem, as it just confuses customers who will then use something else.

I’m building a particular application that utilizes various OCI products, including some of their AI products, to create a hands-free way of interacting with data and is suitable for those who have various physical and visual impairments. Should I consider including Select AI? Let’s see if it is up to the task.

Let’s get on with setting up and using Select AI. This post focuses on getting it set-up and running with some basic commands, plus a few warnings too as it isn’t all that it’s made out to be! Check out my other posts that explore different aspects (most other posts only show one or two statements), and some of the issues you need to watch out for, as it may not entirely live up to expectations.

The first thing you need to be aware of is that this functionality is only available on an ADW/ATP on Oracle Cloud. At some point, we might have it on-premises, but that might be a while coming as I’m sure the developers are still working on improving how it works (and yes it does need some work).

Step 1 – Connect as ADMIN of ADW/ATP

As the ADMIN user for the database, you need to set-up a few things for other users of the Database before they can use Select AI.

Firstly we add the schema which will be using Select AI to the Access Control List. This will allow them to reach things outside of the Database. The following illustrates adding the BRENDAN schema to the list and allowing HTTP calls to the Cohere API interface.

BEGIN

DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE(

host => 'api.cohere.ai',

ace => xs$ace_type(privilege_list => xs$name_list('http'),

principal_name => 'BRENDAN',

principal_type => xs_acl.ptype_db)

);

END;

Next, we need to grant execute privileges on some PL/SQL packages.

grant execute on DBMS_CLOUD_AI to BRENDAN;

grant execute on DBMS_CLOUD to BRENDAN;

That’s the admin steps completed.

Step 2 – Connect to your Schema/User – Cohere Example (see OpenAI later in this post)

In my BRENDAN schema, I need to create a Credential.

BEGIN

-- DBMS_CLOUD.DROP_CREDENTIAL (credential_name => 'COHERE_CRED');

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'COHERE_CRED',

username => 'COHERE',

password => '...' );

END;

The … in the above example indicates where you can place your Cohere API key. It’s very easy to get one, and this explains the steps.
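If you would rather not paste the API key into a script, one option is to read it from an environment variable and pass it as a parameter. The following is a sketch of that idea, assuming a python-oracledb style cursor whose callproc accepts keyword_parameters. The function name create_credential and the environment variable name COHERE_API_KEY are hypothetical.

```python
import os


def create_credential(cursor, credential_name, username, env_var):
    """Create a DBMS_CLOUD credential using an API key held in an environment variable."""
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"Environment variable {env_var} is not set")
    # Named parameters mirror the PL/SQL call; the key is passed as a value
    # rather than being pasted into the script text.
    cursor.callproc(
        "DBMS_CLOUD.CREATE_CREDENTIAL",
        keyword_parameters={
            "credential_name": credential_name,
            "username": username,
            "password": api_key,
        },
    )


# Example call, assuming `cursor` comes from a connection to the BRENDAN schema:
#   create_credential(cursor, "COHERE_CRED", "COHERE", "COHERE_API_KEY")
```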

Next, you need to create a CLOUD_AI profile.

BEGIN

--DBMS_CLOUD_AI.drop_profile(profile_name => 'COHERE_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'COHERE_AI',

attributes => '{"provider": "cohere",

"credential_name": "COHERE_CRED",

"object_list": [{"owner": "SH", "name": "customers"},

{"owner": "SH", "name": "sales"},

{"owner": "SH", "name": "products"},

{"owner": "SH", "name": "countries"},

{"owner": "SH", "name": "channels"},

{"owner": "SH", "name": "promotions"},

{"owner": "SH", "name": "times"}]

}');

END;

When creating the CLOUD_AI profile for your schema, you can list the objects/tables you want to expose to the Cohere or OpenAI models. In theory (so the documentation says) it shares various metadata about these objects/tables, which the models in turn interpret, and use this to formulate their response. I said in theory, as that is what the documentation says, but the PMs on a recent webcast said it did use things like primary keys, foreign keys, etc. There are many other challenges here, and I’ll come back to those at a later time.

At this point, you are all set up to use Select AI.

Step 3 – See if you can get Select AI to work!

Before you can use Select AI, you need to enable it for your session. To do this run,

EXEC DBMS_CLOUD_AI.set_profile('COHERE_AI');

If you start a new session/connection or your session/connection gets reset, you will need to run the above command again.
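Because the profile has to be set again for every new session, an application that uses a connection pool could do this in a session callback rather than relying on each caller to remember. This is a sketch only: it assumes python-oracledb, whose create_pool accepts a session_callback that receives the connection and a requested tag, and the function name set_select_ai_profile is my own.

```python
PROFILE_NAME = "COHERE_AI"  # the profile created earlier in this post


def set_select_ai_profile(connection, requested_tag=None):
    """Session callback: enable the Select AI profile on a newly created session."""
    with connection.cursor() as cursor:
        cursor.callproc("DBMS_CLOUD_AI.SET_PROFILE", [PROFILE_NAME])


# Hypothetical wiring with python-oracledb (connection details are placeholders):
#
#   import oracledb
#   pool = oracledb.create_pool(user="BRENDAN", password="...", dsn="...",
#                               min=1, max=4,
#                               session_callback=set_select_ai_profile)
```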

Now onto the fun or less fun part. The fun part is using it and getting results displayed back to you. When this happens (i.e. when it works) it can look like magic is happening. For example here are some commands that worked for me.

select ai how many customers exist;

select AI which customer is the biggest;

select AI what customer is the largest by revenue;

select AI what customer is the largest by sales;

The real challenge with using Select AI is crafting a statement that works i.e. a query is run in the Database and the results are displayed back to you. This can be a real challenge. There are many blog posts out there with lots of examples of using Select AI, along with all the ‘canned’ examples in the documentation and in demos from PMs. I’ve tried all that I could find, and most/all of them didn’t work for me. Something isn’t working correctly behind the scenes. For example here are some examples of statements that didn’t work for me.

select AI how many customers in San Francisco are married;

select AI what is our best selling product by country;

select AI what is our biggest selling product by country;

select AI how many items with the product sub category of Cameras were sold in 1998;

select AI what customer is the biggest;

select AI which customer is the largest by revenue;

Yet some of these statements (above) have been given in docs/posts/demos as working. For a little surprise, have a look at the comment at the bottom of this post.

Don’t let this put you off from trying it. What I’ve shown here is just one part of what Select AI can do. Check out my next post on Select AI where I’ll show examples of the other features, which work and can be used to build some interesting solutions for your users.

Set-up for OpenAI

The steps I’ve given above are for using Cohere. A few others can be used including the popular OpenAI. The setup is very similar to what I’ve shown above and the main difference is the Hostname, OpenAI API key and username. See here for how to get an OpenAI API key.

As ADMIN run.

BEGIN

DBMS_NETWORK_ACL_ADMIN.APPEND_HOST_ACE(

host => 'api.openai.com',

ace => xs$ace_type(privilege_list => xs$name_list('http'),

principal_name => 'BRENDAN',

principal_type => xs_acl.ptype_db)

);

END;

Then in your Schema/user.

BEGIN

DBMS_CLOUD.DROP_CREDENTIAL (credential_name => 'OPENAI_CRED');

DBMS_CLOUD.CREATE_CREDENTIAL(

credential_name => 'OPENAI_CRED',

username => '.....',

password => '...' );

END;

BEGIN

DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPEN_AI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"object_list": [{"owner": "SH", "name": "customers"},

{"owner": "SH", "name": "sales"},

{"owner": "SH", "name": "products"},

{"owner": "SH", "name": "countries"},

{"owner": "SH", "name": "channels"},

{"owner": "SH", "name": "promotions"},

{"owner": "SH", "name": "times"}],

"model":"gpt-3.5-turbo"

}');

END;

And then run the following before trying to use Select AI.

EXEC DBMS_CLOUD_AI.set_profile('OPEN_AI');

If you look earlier in this post, I listed some questions that couldn’t be answered using Cohere. When I switched to using OpenAI, all of these worked for me. The question then is, which LLM should you use? Based on this simple experiment, use OpenAI and avoid Cohere. But things might be different for you, and at a later time when Cohere has had time to improve.

Check out the other posts about Select AI.

EU AI Act has been passed by EU parliament

It feels like we’ve been hearing about and talking about the EU AI Act for a very long time now. But on Wednesday 13th March 2024, the EU Parliament finally voted to approve the Act. While this is a major milestone, we haven’t crossed the finish line. There are a few steps to complete, although these are minor steps and are part of the process.

The remaining timeline is:

- The EU AI Act will undergo final linguistic approval by lawyer-linguists in April. This is considered a formality step.

- It will then be published in the Official EU Journal

- 21 days after being published it will come into effect (probably in May)

- The Prohibited Systems provisions will come into force six months later (probably by end of 2024)

- All other provisions in the Act will come into force over the next 2-3 years

If you haven’t already started looking at and evaluating the various elements of AI deployed in your organisation, now is the time to start. It’s time to prepare and explore what changes, if any, you need to make. If you don’t the penalties for non-compliance are hefty, with fines of up to €35 million or 7% of global turnover.

The first thing you need to address is the Prohibited AI Systems. The EU AI Act outlines the following, which will need to be addressed before the end of 2024:

- Manipulative and Deceptive Practices: systems that use subliminal techniques to materially distort a person’s decision-making capacity, leading to significant harm. This includes systems that manipulate behaviour or decisions in a way that the individual would not have otherwise made.

- Exploitation of Vulnerabilities: systems that target individuals or groups based on age, disability, or socio-economic status to distort behaviour in harmful ways.

- Biometric Categorisation: systems that categorise individuals based on biometric data to infer sensitive information like race, political opinions, or sexual orientation. This prohibition does not cover any labelling or filtering of lawfully acquired biometric datasets, such as images. There are also exceptions for law enforcement.

- Social Scoring: systems designed to evaluate individuals or groups over time based on their social behaviour or predicted personal characteristics, leading to detrimental treatment.

- Real-time Biometric Identification: The use of real-time remote biometric identification systems in publicly accessible spaces for law enforcement is heavily restricted, with allowances only under narrowly defined circumstances that require judicial or independent administrative approval.

- Risk Assessment in Criminal Offences: systems that assess the risk of individuals committing criminal offences based solely on profiling, except when supporting human assessment already based on factual evidence.

- Facial Recognition Databases: systems that create or expand facial recognition databases through untargeted scraping of images are prohibited.

- Emotion Inference in Workplaces and Educational Institutions: The use of AI to infer emotions in sensitive environments like workplaces and schools is banned, barring exceptions for medical or safety reasons.

In addition to the timeline given above we also have:

- 12 months after entry into force: Obligations on providers of general purpose AI models go into effect. Appointment of member state competent authorities. Annual Commission review of and possible amendments to the list of prohibited AI.

- after 18 months: Commission implementing act on post-market monitoring

- after 24 months: Obligations on high-risk AI systems specifically listed in Annex III, which includes AI systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review and possible amendment of the list of high-risk AI systems.

- after 36 months: Obligations for high-risk AI systems that are not prescribed in Annex III but are intended to be used as a safety component of a product, or the AI is itself a product, and the product is required to undergo a third-party conformity assessment under existing specific laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

The EU has provided an official compliance check that helps identify which parts of the EU AI Act apply in a given use case.

Cohere and OpenAI API Keys

To access and use the Generative AI features in the Oracle Database you’ll need access to the API of an LLM. In this post, I’ll step through what you need to do to get API keys from Cohere and OpenAI. These are the two main LLMs for use with the database and others will be accessible over time.

Cohere API

First, go to the Cohere API Dashboard. You can sign in using your Google or GitHub account, or create an account by clicking on Sign-up? (bottom right-hand corner of the page). Fill in your email address and a suitable password. Then confirm your sign-up using the email they send to you.

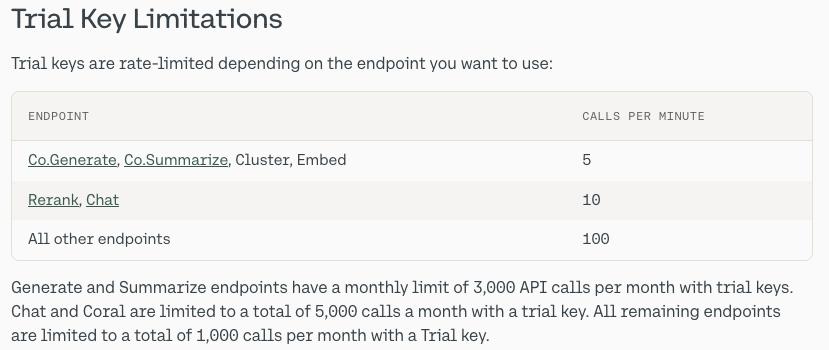

When you sign-up to Cohere, you are initially creating a Trial (Free) account. For now, this will be enough for playing with Select AI. There are some restrictions (shown below) but these might change over time so make sure to check this out.

From your Cohere Dashboard, you can access your API key, and use this to set-up your access to the LLM from your remote (app, database, etc) environment. This is a Trial API Key, that is rate-limited, so is kind of ok for testing and evaluation. If you need more, you’ll need to upgrade your account.

OpenAI API

For OpenAI, you’ll need to create an account – Sign-up here.

Initially, you’ll be set-up with a Trial Account but you may need to upgrade this by depositing some money into your account. The minimum is $10 (plus taxes) and this should be enough to allow you to have a good play with using the API, and only top-up as needed after that, particularly if you go into production use. When you get logged into OpenAI, go to your Dashboard and click on the ‘Create new secret key’ button.

When you’ve obtained the API keys, check out my other posts on how to allow access to these from the database and start using the Oracle Select AI features (and other products and code libraries).

You only need an API key from one of these, but I’ve shown both. This allows you to decide which one you’d like to use.

Check out the other posts about Select AI.
