Python

Handling Multi-Column Indexes in Pandas Dataframes

It’s a little annoying when an API changes the structure of the data it returns and your code breaks. In my case, it happened when a dataframe that used to have a single-level column index came back with a multi-level column index. This was new to me at the time, as I hadn’t really come across it before. The following illustrates one case, similar to (but not the same as) mine, that you might encounter. In this test/demo scenario I’ll use the yfinance API to show how you can remove the multi-column index and go back to a single column index.

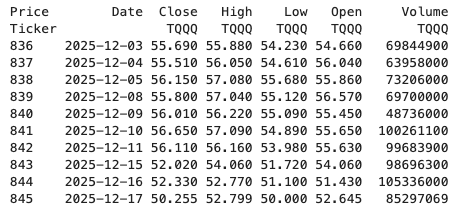

In this demo scenario, we are using yfinance to get data on various stocks. After the data is downloaded we get something like this.

The first step is to do some re-organisation.

df = data.stack(level="Ticker", future_stack=True)

df.index.names = ["Date", "Symbol"]

df = df[["Open", "High", "Low", "Close", "Volume"]]

df = df.swaplevel(0, 1)

df = df.sort_index()

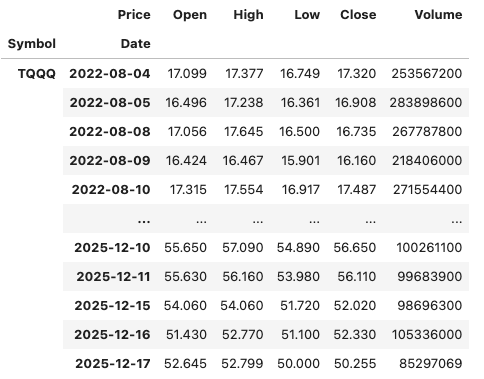

df

This gives us the data in the following format.

The final part is to extract the data we want by applying a filter.

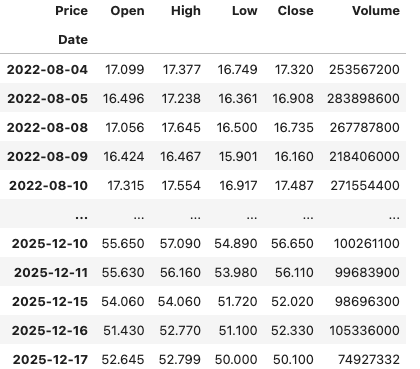

df.xs("TQQQ")

And there we have it.

As I said at the beginning, the example above is just to illustrate what you can do.

If this were a real-world use of yfinance, I could just change a parameter in the download function so that it does not return a multi-level index.

data = yf.download(t, start=s_date, interval=time_interval, progress=False, multi_level_index=False)
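The same idea can be tried without calling yfinance at all. The following is a minimal sketch, assuming a small hand-made dataframe (the prices, volumes and the "Price"/"Ticker" level names are made up for illustration) that has the same kind of two-level column index. It shows two ways back to a single-level index: selecting one ticker from the column level, and flattening the two levels into one set of names.

```python
import pandas as pd

# Hypothetical stand-in for a multi-ticker download: two column levels.
columns = pd.MultiIndex.from_product(
    [["Close", "Volume"], ["QQQ", "TQQQ"]], names=["Price", "Ticker"]
)
data = pd.DataFrame(
    [[400.0, 50.0, 1000, 2000], [401.0, 51.0, 1100, 2100]],
    index=pd.to_datetime(["2024-01-02", "2024-01-03"]),
    columns=columns,
)
data.index.name = "Date"

# Option 1: pick one ticker directly from the "Ticker" column level.
tqqq = data.xs("TQQQ", axis=1, level="Ticker")

# Option 2: keep every ticker but join the two levels into one name.
flat = data.copy()
flat.columns = [f"{price}_{ticker}" for price, ticker in flat.columns]

print(tqqq)
print(list(flat.columns))
```

Option 1 is closest to the stack/xs approach above, while option 2 is handy when you want to keep all the tickers in one wide table.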

Creating Test Data in your Database using Faker

At some point everyone needs some test data for their database. There are a number of ways of doing this, and in this post I’ll walk through using the Python library Faker to create some dummy test data (that kind of looks real) in my Oracle Database. I’ll have another post on using the GenAI in-database feature available in the Oracle Autonomous Database, so keep an eye out for that.

Faker is one of the available libraries in Python for creating dummy/test data that kind of looks realistic. There are several more. I’m not going to get into the relative advantages and disadvantages of each, so I’ll leave that task to yourself. I’m just going to give you a quick demonstration of what is possible.

One of the key elements of using Faker is that we can set a locale for the data to be generated. We can also set several locales, and Faker will then generate data specific to that location or those locations. This is useful when testing applications for different potential markets. In my example below I’m setting my locale to the USA (en_US).

Here’s the Python code to generate the Test Data with 15,000 records, which I also save to a CSV file.

import pandas as pd

from faker import Faker

import random

from datetime import date, timedelta

#####################

NUM_RECORDS = 15000

LOCALE = 'en_US'

#Initialise Faker

Faker.seed(42)

fake = Faker(LOCALE)

#####################

#Create a function to generate the data

def create_customer_record():
    #Customer Gender
    gender = random.choice(['Male', 'Female', 'Non-Binary'])

    #Customer Name
    if gender == 'Male':
        name = fake.name_male()
    elif gender == 'Female':
        name = fake.name_female()
    else:
        name = fake.name()

    #Date of Birth
    dob = fake.date_of_birth(minimum_age=18, maximum_age=90)

    #Customer Address and other details
    address = fake.street_address()
    email = fake.email()
    city = fake.city()
    state = fake.state_abbr()
    zip_code = fake.postcode()
    full_address = f"{address}, {city}, {state} {zip_code}"
    phone_number = fake.phone_number()

    #Customer Income
    # - annual income between $30,000 and $250,000
    income = random.randint(300, 2500) * 100

    #Credit Rating
    credit_rating = random.choices(['A', 'B', 'C', 'D'], weights=[0.40, 0.30, 0.20, 0.10], k=1)[0]

    #Credit Card and Banking details
    card_type = random.choice(['visa', 'mastercard', 'amex'])
    credit_card_number = fake.credit_card_number(card_type=card_type)
    routing_number = fake.aba()
    bank_account = fake.bban()

    return {
        'CUSTOMERID': fake.unique.uuid4(), # Unique identifier
        'CUSTOMERNAME': name,
        'GENDER': gender,
        'EMAIL': email,
        'DATEOFBIRTH': dob.strftime('%Y-%m-%d'),
        'ANNUALINCOME': income,
        'CREDITRATING': credit_rating,
        'CUSTOMERADDRESS': full_address,
        'ZIPCODE': zip_code,
        'PHONENUMBER': phone_number,
        'CREDITCARDTYPE': card_type.capitalize(),
        'CREDITCARDNUMBER': credit_card_number,
        'BANKACCOUNTNUMBER': bank_account,
        'ROUTINGNUMBER': routing_number,
    }

#Generate the Demo Data

print(f"Generating {NUM_RECORDS} customer records...")

data = [create_customer_record() for _ in range(NUM_RECORDS)]

print("Sample Data Generation complete")

#Convert to Pandas DataFrame

df = pd.DataFrame(data)

print("\n--- DataFrame Sample (First 10 Rows) : sample of columns ---")

# Display relevant columns for verification

display_cols = ['CUSTOMERNAME', 'GENDER', 'DATEOFBIRTH', 'PHONENUMBER', 'CREDITCARDNUMBER', 'CREDITRATING', 'ZIPCODE']

print(df[display_cols].head(10).to_markdown(index=False))

print("\n--- DataFrame Information ---")

print(f"Total Rows: {len(df)}")

print(f"Total Columns: {len(df.columns)}")

print("Data Types:")

print(df.dtypes)

The output from the above code gives the following:

Generating 15000 customer records...

Sample Data Generation complete

--- DataFrame Sample (First 10 Rows) : sample of columns ---

| CUSTOMERNAME | GENDER | DATEOFBIRTH | PHONENUMBER | CREDITCARDNUMBER | CREDITRATING | ZIPCODE |

|:-----------------|:-----------|:--------------|:-----------------------|-------------------:|:---------------|----------:|

| Allison Hill | Non-Binary | 1951-03-02 | 479.540.2654 | 2271161559407810 | A | 55488 |

| Mark Ferguson | Non-Binary | 1952-09-28 | 724.523.8849x696 | 348710122691665 | A | 84760 |

| Kimberly Osborne | Female | 1973-08-02 | 001-822-778-2489x63834 | 4871331509839301 | B | 70323 |

| Amy Valdez | Female | 1982-01-16 | +1-880-213-2677x3602 | 4474687234309808 | B | 07131 |

| Eugene Green | Male | 1983-10-05 | (442)678-4980x841 | 4182449353487409 | A | 32519 |

| Timothy Stanton | Non-Binary | 1937-10-13 | (707)633-7543x3036 | 344586850142947 | A | 14669 |

| Eric Parker | Male | 1964-09-06 | 577-673-8721x48951 | 2243200379176935 | C | 86314 |

| Lisa Ball | Non-Binary | 1971-09-20 | 516.865.8760 | 379096705466887 | A | 93092 |

| Garrett Gibson | Male | 1959-07-05 | 001-437-645-2991 | 349049663193149 | A | 15494 |

| John Petersen | Male | 1978-02-14 | 367.683.7770 | 2246349578856859 | A | 11722 |

--- DataFrame Information ---

Total Rows: 15000

Total Columns: 14

Data Types:

CUSTOMERID object

CUSTOMERNAME object

GENDER object

EMAIL object

DATEOFBIRTH object

ANNUALINCOME int64

CREDITRATING object

CUSTOMERADDRESS object

ZIPCODE object

PHONENUMBER object

CREDITCARDTYPE object

CREDITCARDNUMBER object

BANKACCOUNTNUMBER object

ROUTINGNUMBER object

Having generated the test data, we now need to get it into the database. There are various ways of doing this. As we are already using Python, I’ll illustrate loading the data with Python below. An alternative option is to use SQL Command Line (SQLcl) and the LOAD feature in that tool.

Here’s the Python code to load the data. I’m using the oracledb python library.

### Connect to Database
import oracledb

p_username = "..."
p_password = "..."
p_walletpass = "..."

#Give OCI Wallet location and details
try:
    con = oracledb.connect(user=p_username, password=p_password, dsn="adb26ai_high",
                           config_dir="/Users/brendan.tierney/Dropbox/Wallet_ADB26ai",
                           wallet_location="/Users/brendan.tierney/Dropbox/Wallet_ADB26ai",
                           wallet_password=p_walletpass)
    print(con)
except Exception as e:
    print('Error connecting to the Database')
    print(f'Error:{e}')

### Create Customer Table

drop_table = 'DROP TABLE IF EXISTS demo_customer'

cre_table = '''CREATE TABLE DEMO_CUSTOMER (

CustomerID VARCHAR2(50) PRIMARY KEY,

CustomerName VARCHAR2(50),

Gender VARCHAR2(10),

Email VARCHAR2(50),

DateOfBirth DATE,

AnnualIncome NUMBER(10,2),

CreditRating VARCHAR2(1),

CustomerAddress VARCHAR2(100),

ZipCode VARCHAR2(10),

PhoneNumber VARCHAR2(50),

CreditCardType VARCHAR2(10),

CreditCardNumber VARCHAR2(30),

BankAccountNumber VARCHAR2(30),

RoutingNumber VARCHAR2(10) )'''

cur = con.cursor()

print('--- Dropping DEMO_CUSTOMER table ---')

cur.execute(drop_table)

print('--- Creating DEMO_CUSTOMER table ---')

cur.execute(cre_table)

print('--- Table Created ---')

### Insert Data into Table

insert_data = '''INSERT INTO DEMO_CUSTOMER values (:1, :2, :3, :4, :5, :6, :7, :8, :9, :10, :11, :12, :13, :14)'''

print("--- Inserting records ---")

cur.executemany(insert_data, df )

con.commit()

print("--- Saving to CSV ---")

df.to_csv('/Users/brendan.tierney/Dropbox/DEMO_Customer_data.csv', index=False)

print("- Finished -")

### Close Connections to DB

con.close()

To prove the records got inserted, we can connect to the schema using SQLcl and check.
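Depending on the driver version you have installed, executemany() may expect a list of sequences for positional binds rather than a dataframe. The following is a minimal sketch of one way to prepare such a list, assuming records shaped like the dictionaries returned by create_customer_record(). The helper name to_bind_rows, the shortened column list and the sample record are hypothetical and only there for illustration. It also turns the date-of-birth string into a date object, so the load does not rely on the session's date format.

```python
from datetime import date, datetime

# Hypothetical, shortened column list; the real table has 14 columns.
COLUMNS = ["CUSTOMERID", "CUSTOMERNAME", "DATEOFBIRTH", "ANNUALINCOME"]

def to_bind_rows(records, columns=COLUMNS, date_columns=("DATEOFBIRTH",)):
    """Turn a list of dicts into a list of tuples in a fixed column order."""
    rows = []
    for rec in records:
        row = []
        for col in columns:
            value = rec[col]
            # Convert 'YYYY-MM-DD' strings into date objects for DATE columns.
            if col in date_columns and isinstance(value, str):
                value = datetime.strptime(value, "%Y-%m-%d").date()
            row.append(value)
        rows.append(tuple(row))
    return rows

# Made-up sample record, not taken from the generated data.
sample = [{"CUSTOMERID": "id-1", "CUSTOMERNAME": "Test Person",
           "DATEOFBIRTH": "1980-05-17", "ANNUALINCOME": 52000}]
rows = to_bind_rows(sample)
print(rows)
```

With a pandas dataframe, the records could come from df.to_dict("records"), and the resulting list would then be passed as the second argument to cur.executemany().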

What a difference a Bind Variable makes

To bind or not to bind, that is the question?

Over the years, I’ve heard and read about using bind variables and how important they are, particularly when it comes to the efficient execution of queries. By using bind variables, the optimizer will reuse the execution plan from the cache rather than generating it each time. Recently, I had conversations about this with a couple of different groups; they didn’t really believe me and asked me to put together a demonstration. One group said they had never heard of ‘prepared statements’, ‘bind variables’, ‘parameterised query’, etc., which was a little surprising.

The following is a subset of what I demonstrated to them to illustrate the benefits and potential benefits.

Here is a basic example of a typical scenario where we have a SQL query being constructed using concatenation.

select * from order_demo where order_id = || 'i';

That statement looks simple and harmless. When we try to check the Explain Plan from the optimizer we will get an error, so let’s just replace the concatenated value with a number, because that’s what the query will end up looking like.

select * from order_demo where order_id = 1;

When we check the Explain Plan, we get the following. It looks like a good execution plan as it is using the index and then doing a ROWID lookup on the table. The developers were happy, and that’s what those recent conversations were about and what they are missing.

-------------------------------------------------------------

| Id | Operation | Name | E-Rows |

-------------------------------------------------------------

| 0 | SELECT STATEMENT | | |

| 1 | TABLE ACCESS BY INDEX ROWID| ORDER_DEMO | 1 |

|* 2 | INDEX UNIQUE SCAN | SYS_C0014610 | 1 |

-------------------------------------------------------------

The missing part in their understanding was what happens every time they run their query. The Explain Plan looks good, so what’s the problem? The problem lies with the Optimizer evaluating the execution plan every time the query is issued, because each concatenated value produces a different statement text. The developers came back with the idea that this won’t happen because the execution plan is cached and will be reused. The question is how we can test this, and how it compares with the alternative, which in this case is using bind variables (my suggestion).

Let’s set up a simple test to see what happens. Here is a simple piece of PL/SQL code which will loop 100K times, retrieving just one row each time. This is very similar to what they were running.

DECLARE

start_time TIMESTAMP;

end_time TIMESTAMP;

BEGIN

start_time := systimestamp;

dbms_output.put_line('Start time : ' || to_char(start_time,'HH24:MI:SS:FF4'));

--

for i in 1 .. 100000 loop

execute immediate 'select * from order_demo where order_id = '||i;

end loop;

--

end_time := systimestamp;

dbms_output.put_line('End time : ' || to_char(end_time,'HH24:MI:SS:FF4'));

END;

/

When we run this test against a 23.7 Oracle Database running in a VM on my laptop, it completes in a little over 2 minutes.

Start time : 16:26:04:5527

End time : 16:28:13:4820

PL/SQL procedure successfully completed.

Elapsed: 00:02:09.158

The developers seemed happy with that time! Ok, but let’s test it using bind variables and see if it’s any different. There are a few different ways of setting up bind variables. The PL/SQL code below is one example.

DECLARE

order_rec ORDER_DEMO%rowtype;

start_time TIMESTAMP;

end_time TIMESTAMP;

BEGIN

start_time := systimestamp;

dbms_output.put_line('Start time : ' || to_char(start_time,'HH24:MI:SS:FF9'));

--

for i in 1 .. 100000 loop

execute immediate 'select * from order_demo where order_id = :1' using i;

end loop;

--

end_time := systimestamp;

dbms_output.put_line('End time : ' || to_char(end_time,'HH24:MI:SS:FF9'));

END;

/

Start time : 16:31:39:162619000

End time : 16:31:40:479301000

PL/SQL procedure successfully completed.

Elapsed: 00:00:01.363

This took just a little over one second to complete. Let me say that again, a little over one second to complete. We went from taking just over two minutes to run, down to just over one second to run.
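To put a number on that difference, here is a small sketch that works out the elapsed time and the speed-up from the start and end timestamps printed by the two PL/SQL blocks. The helper names parse_ts and elapsed_seconds are my own for this illustration. The figures come from the DBMS_OUTPUT timestamps, so they differ slightly from the "Elapsed" values reported by the client.

```python
from datetime import datetime

def parse_ts(text):
    """Parse 'HH:MI:SS:fraction', keeping at most 6 fractional digits."""
    clock, fraction = text.rsplit(":", 1)
    return datetime.strptime(f"{clock}.{fraction[:6]}", "%H:%M:%S.%f")

def elapsed_seconds(start, end):
    """Seconds between two timestamps taken on the same day."""
    return (parse_ts(end) - parse_ts(start)).total_seconds()

# Timestamps copied from the two PL/SQL runs above.
concat_secs = elapsed_seconds("16:26:04:5527", "16:28:13:4820")
bind_secs = elapsed_seconds("16:31:39:162619000", "16:31:40:479301000")
speedup = concat_secs / bind_secs

print(f"Concatenation : {concat_secs:.2f} seconds")
print(f"Bind variable : {bind_secs:.2f} seconds")
print(f"Speed-up      : about {speedup:.0f}x")
```

On these timestamps the bind variable version works out at a little under 100 times faster.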

The developers were a little surprised or more correctly, were a little shocked. But they then said the problem with that demonstration is that it is running directly in the Database. It will be different running it in Python across the network.

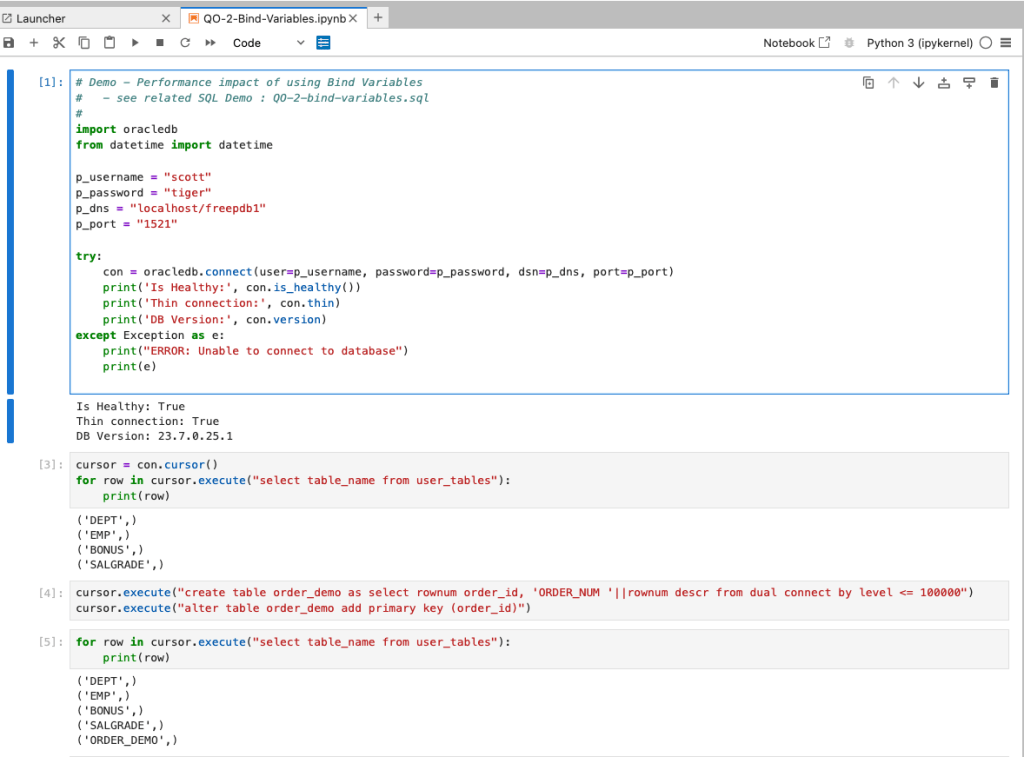

Ok, let me set up the same/similar demonstration using Python. The image shows some basic Python code to connect to the database, list the tables in the schema and create the test table for our demonstration.

The first demonstration is to measure the timing for 100K records using the concatenation approach.

# Demo - The SLOW way - concat values

#print start-time
print('Start time: ' + datetime.now().strftime("%H:%M:%S:%f"))

# loop (almost) 100,000 times - each iteration also pays for network latency
for i in range(1, 100000):
    cursor.execute("select * from order_demo where order_id = " + str(i))

#print end-time
print('End time: ' + datetime.now().strftime("%H:%M:%S:%f"))

----------

Start time: 16:45:29:523020

End time: 16:49:15:610094

This took just under four minutes to complete. With PL/SQL it took approx. two minutes. The extra time is due to the back-and-forth nature of the client-server communications between the Python code and the Database. The developers were a little unhappy with this result.

The next step for the demonstration was to use bind variables. As with most languages, there are a number of different ways to write and format these. Below is one example, but some of the others were also tried and gave the same timing.

#Bind variables example - by name

#print start-time
print('Start time: ' + datetime.now().strftime("%H:%M:%S:%f"))

for i in range(1, 100000):
    cursor.execute("select * from order_demo where order_id = :order_num", order_num=i)

#print end-time
print('End time: ' + datetime.now().strftime("%H:%M:%S:%f"))

----------

Start time: 16:53:00:479468

End time: 16:54:14:197552

This took 1 minute 14 seconds. [Read that sentence again]. Compare that to approx. four minutes, and yes, the other bind variable options have similar timings.

To answer the quote at the top of this post, “To bind or not to bind, that is the question?”, the answer is that using Bind Variables, Prepared Statements, Parameterised Queries, etc. will make your queries and applications run a lot quicker. The optimizer will see the structure of the query, will see the parameterised parts of it, will see that the execution plan already exists in the cache and will use it instead of generating the execution plan again. This saves time for frequently executed queries which might just have a different value for one or two parts of a WHERE clause.
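If you want to try the two styles without an Oracle Database to hand, the following is a minimal sketch using Python's built-in sqlite3 module as a stand-in. That is an assumption made purely so the example runs anywhere: SQLite does not have Oracle's shared cursor cache, so this does not reproduce the timings above, and the table contents are made up. What it does show is the core difference: concatenation produces a new statement text for every value, while the bind variable version sends the same statement text every time.

```python
import sqlite3

# In-memory stand-in for the ORDER_DEMO table (made-up data).
con = sqlite3.connect(":memory:")
con.execute("create table order_demo (order_id integer primary key, amount real)")
con.executemany(
    "insert into order_demo values (?, ?)",
    [(i, i * 1.5) for i in range(1, 1001)],
)

def fetch_concat(order_id):
    # A new statement text for every value: '... = 1', '... = 2', ...
    sql = "select * from order_demo where order_id = " + str(int(order_id))
    return sql, con.execute(sql).fetchone()

def fetch_bound(order_id):
    # One statement text; only the bound value changes.
    sql = "select * from order_demo where order_id = :order_num"
    return sql, con.execute(sql, {"order_num": order_id}).fetchone()

concat_texts = set()
bound_texts = set()
for i in range(1, 1001):
    sql, row_c = fetch_concat(i)
    concat_texts.add(sql)
    sql, row_b = fetch_bound(i)
    bound_texts.add(sql)
    assert row_c == row_b  # both styles return the same row

print(len(concat_texts), "distinct statements with concatenation")
print(len(bound_texts), "distinct statement with a bind variable")
```

Both styles return the same rows, but only the bind variable version gives the database a single statement to recognise and reuse.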

OCI Speech Real-time Capture

Capturing Speech-to-Text is a straightforward step. I’ve written previously about this, giving an example. But what if you want the code to constantly monitor for speech input, giving a continuous transcription? For this we need to use the asyncio Python library. Using the OCI Speech-to-Text API in combination with asyncio we can monitor a microphone (speech input) on a continuous basis.

There are a few additional configuration settings needed, including configuring a speech-to-text listener. Here is an example of what is needed.

class MyListener(RealtimeSpeechClientListener):
    def on_result(self, result):
        transcription = result["transcriptions"][0]["transcription"]
        if result["transcriptions"][0]["isFinal"]:
            print(f"1-Received final results: {transcription}")
        else:
            print(f"2-{transcription} \n")

    def on_ack_message(self, ackmessage):
        return super().on_ack_message(ackmessage)

    def on_connect(self):
        return super().on_connect()

    def on_connect_message(self, connectmessage):
        return super().on_connect_message(connectmessage)

    def on_network_event(self, ackmessage):
        return super().on_network_event(ackmessage)

    def on_error(self, error_message):
        return super().on_error(error_message)

    def on_close(self, error_code, error_message):
        print('\nOCI connection closing.')

async def start_realtime_session(customizations=[], compartment_id=None, region=None):
    rt_client = RealtimeSpeechClient(
        config=config,
        realtime_speech_parameters=realtime_speech_parameters,
        listener=MyListener(),
        service_endpoint=realtime_speech_url,
        signer=None, #authenticator(),
        compartment_id=compartment_id,
    )
    # NOTE: as shown, this coroutine returns right after scheduling send_audio;
    # the code that connects the client and keeps the session open is not shown here
    asyncio.create_task(send_audio(rt_client))

if __name__ == "__main__":
    asyncio.run(
        start_realtime_session(
            customizations=customization_ids,
            compartment_id=COMPARTMENT_ID,
            region=REGION_ID,
        )
    )

Additional customizations can be added to the Listener, for example, what to do with the audio captured, what to do with the text, and how to manage the speech-to-text (there are lots of customizations).
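The general shape of the pattern (a background task feeding audio while a listener reacts to results) can be sketched with asyncio alone. The following is only an illustration under stated assumptions: there is no OCI service or microphone involved, the "audio" is a list of strings, and the names CollectingListener, send_audio and run_session are hypothetical stand-ins rather than the SDK's classes. The result dictionaries copy the structure used in on_result above.

```python
import asyncio

class CollectingListener:
    """Hypothetical listener that keeps final results, like on_result above."""
    def __init__(self):
        self.finals = []

    def on_result(self, result):
        item = result["transcriptions"][0]
        if item["isFinal"]:
            self.finals.append(item["transcription"])

async def send_audio(queue, chunks):
    """Stand-in for the microphone task: pushes chunks, then a stop marker."""
    for chunk in chunks:
        await queue.put(chunk)
        await asyncio.sleep(0)  # yield control, as real audio I/O would
    await queue.put(None)

async def run_session(chunks):
    listener = CollectingListener()
    queue = asyncio.Queue()
    sender = asyncio.create_task(send_audio(queue, chunks))
    while True:
        chunk = await queue.get()
        if chunk is None:
            break
        # Stand-in for the service: one partial result, then the final one.
        listener.on_result({"transcriptions": [
            {"isFinal": False, "transcription": chunk[:3]}]})
        listener.on_result({"transcriptions": [
            {"isFinal": True, "transcription": chunk}]})
    await sender
    return listener.finals

finals = asyncio.run(run_session(["hello world", "to be or not to be"]))
print(finals)
```

The point of the sketch is that the session coroutine stays alive, consuming input, until the sender signals it has finished; only the final results are kept by the listener.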

python-oracledb driver version 3 – load data into pandas df

The Python Oracle driver had a new release recently (version 3) and with it comes a new way to load data from a Table into a Pandas dataframe. This can now be done using the pyarrow library. Here’s an example:

import oracledb as ora
import pyarrow as py
import pandas

#create a connection to the database
con = ora.connect( <enter your connection details> )

query = "select cust_id, cust_first_name, cust_last_name, cust_city from customers"

#get Oracle DF and set array size - care is needed for setting this
ora_df = con.fetch_df_all(statement=query, arraysize=2000)

#run query and return into Pandas Dataframe
# using pyarrow and the to_pandas() function
df = py.Table.from_arrays(ora_df.column_arrays(), names=ora_df.column_names()).to_pandas()

print(df.columns)

Once you get used to the syntax, it is a simpler way to get the data into a dataframe.

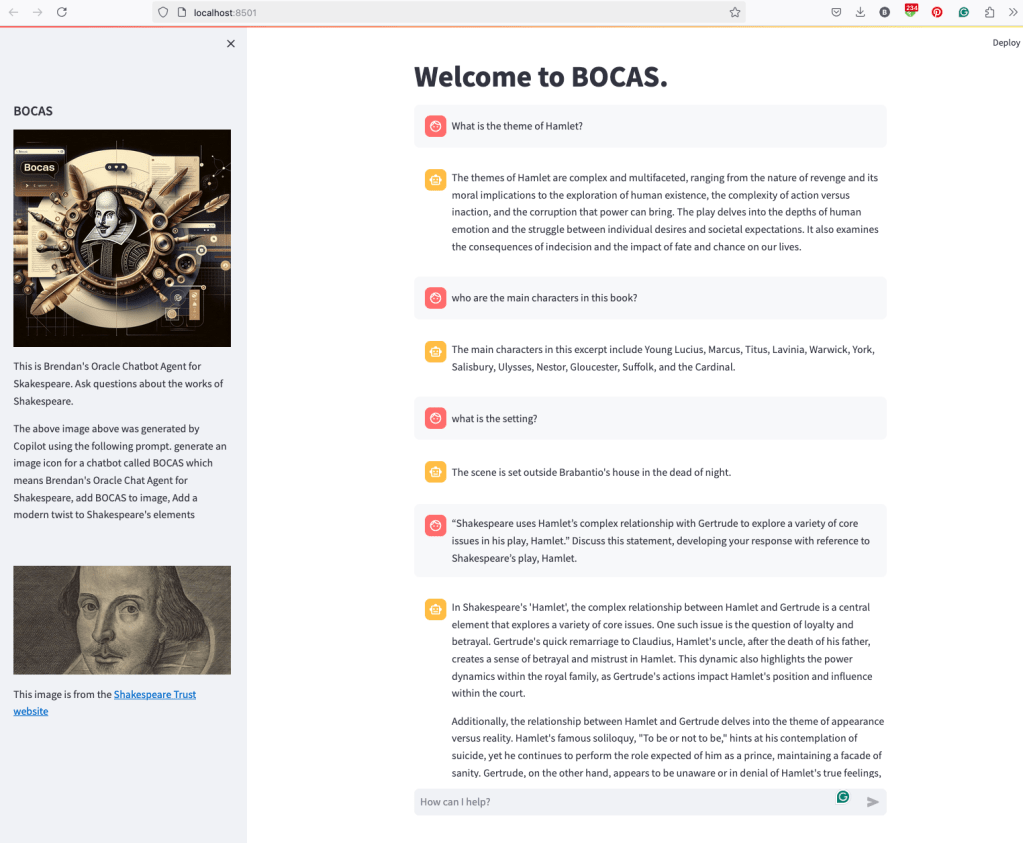

BOCAS – using OCI GenAI Agent and Streamlit

BOCAS stands for Brendan’s Oracle Chatbot Agent for Shakespeare. I’ve previously posted on how to go about creating a GenAI Agent on a specific data set. In this post, I’ll share code on how I did this using Python Streamlit.

And here’s the code

import streamlit as st

import time

import oci

from oci import generative_ai_agent_runtime

import json

# Page Title

welcome_msg = "Welcome to BOCAS."

welcome_msg2 = "This is Brendan's Oracle Chatbot Agent for Shakespeare. Ask questions about the works of Shakespeare."

st.title(welcome_msg)

# Sidebar Image

st.sidebar.header("BOCAS")

st.sidebar.image("bocas-3.jpg", use_column_width=True)

#with st.sidebar:

# with st.echo:

# st.write(welcome_msg2)

st.sidebar.markdown(welcome_msg2)

st.sidebar.markdown("The image above was generated by Copilot using the following prompt. generate an image icon for a chatbot called BOCAS which means Brendan's Oracle Chat Agent for Shakespeare, add BOCAS to image, Add a modern twist to Shakespeare's elements")

st.sidebar.write("")

st.sidebar.write("")

st.sidebar.write("")

st.sidebar.image("https://media.shakespeare.org.uk/images/SBT_SR_OS_37_Shakespeare_Firs.ec42f390.fill-1200x600-c75.jpg")

link="This image is from the [Shakespeare Trust website](https://media.shakespeare.org.uk/images/SBT_SR_OS_37_Shakespeare_Firs.ec42f390.fill-1200x600-c75.jpg)"

st.sidebar.write(link,unsafe_allow_html=True)

# OCI GenAI settings

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', CONFIG_PROFILE)

###

SERVICE_EP = <your service endpoint>

AGENT_EP_ID = <your agent endpoint>

###

# Response Generator

def response_generator(text_input):
    #Initiate AI Agent runtime client
    genai_agent_runtime_client = generative_ai_agent_runtime.GenerativeAiAgentRuntimeClient(config, service_endpoint=SERVICE_EP, retry_strategy=oci.retry.NoneRetryStrategy())

    create_session_details = generative_ai_agent_runtime.models.CreateSessionDetails()
    create_session_details.display_name = "Welcome to BOCAS"
    create_session_details.idle_timeout_in_seconds = 20
    create_session_details.description = welcome_msg

    create_session_response = genai_agent_runtime_client.create_session(create_session_details, AGENT_EP_ID)

    #Define Chat details and input message/question
    session_details = generative_ai_agent_runtime.models.ChatDetails()
    session_details.session_id = create_session_response.data.id
    session_details.should_stream = False
    session_details.user_message = text_input

    #Get AI Agent Response
    session_response = genai_agent_runtime_client.chat(agent_endpoint_id=AGENT_EP_ID, chat_details=session_details)

    #print(str(response.data))
    response = session_response.data.message.content.text
    return response

# Initialize chat history
if "messages" not in st.session_state:
    st.session_state.messages = []

# Display chat messages from history on app rerun
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Accept user input
if prompt := st.chat_input("How can I help?"):
    # Add user message to chat history
    st.session_state.messages.append({"role": "user", "content": prompt})

    # Display user message in chat message container
    with st.chat_message("user"):
        st.markdown(prompt)

    # Display assistant response in chat message container
    with st.chat_message("assistant"):
        response = response_generator(prompt)
        write_response = st.write(response)

    # Add assistant response to chat history
    st.session_state.messages.append({"role": "ai", "content": response})

Oracle Object Storage – Parallel Downloading

In previous posts, I’ve given example Python code (and functions) for processing files into and out of OCI Object and Bucket Storage. One of these previous posts includes code and a demonstration of uploading files to an OCI Bucket using the multiprocessing package in Python.

Building upon these previous examples, the code below will download a Bucket using parallel processing. Like my last example, this code is based on the example code I gave in an earlier post on functions within a Jupyter Notebook.

Here’s the code.

import oci
import os
import argparse
from multiprocessing import Process
from glob import glob
import time

####
def upload_file(config, NAMESPACE, b, f, num):
    if os.path.isfile(f):
        try:
            start_time = time.time()
            object_storage_client = oci.object_storage.ObjectStorageClient(config)
            with open(f, 'rb') as in_file:
                object_storage_client.put_object(NAMESPACE, b, os.path.basename(f), in_file)
            print(f'. Finished {num} uploading {f} in {round(time.time()-start_time,2)} seconds')
        except Exception as e:
            print(f'Error uploading file {num}. Try again.')
            print(e)
    else:
        print(f'... File {f} does not exist or cannot be found. Check file name and full path')

####
def check_bucket_exists(config, NAMESPACE, b_name):
    #check if Bucket exists
    is_there = False
    object_storage_client = oci.object_storage.ObjectStorageClient(config)
    l_b = object_storage_client.list_buckets(NAMESPACE, config.get("tenancy")).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True

    if is_there:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

####
def download_bucket_file(config, NAMESPACE, b, d, f, num):
    print(f'..Starting Download File ({num}):',f, ' from Bucket', b, ' at ', time.strftime("%H:%M:%S"))
    try:
        start_time = time.time()
        object_storage_client = oci.object_storage.ObjectStorageClient(config)
        get_obj = object_storage_client.get_object(NAMESPACE, b, f)
        # use a separate name for the file handle so the object name f is kept
        with open(os.path.join(d, f), 'wb') as out_file:
            for chunk in get_obj.data.raw.stream(1024 * 1024, decode_content=False):
                out_file.write(chunk)
        print(f'..Finished Download ({num}) in ', round(time.time()-start_time,2), 'seconds.')
    except Exception as e:
        print(f'Error trying to download file {f}. Check parameters and try again')
        print(e)

####
if __name__ == "__main__":
    #setup for OCI
    config = oci.config.from_file()
    object_storage = oci.object_storage.ObjectStorageClient(config)
    NAMESPACE = object_storage.get_namespace().data

    ####
    description = "\n".join(["Download files in parallel from OCI storage.",
                             "All objects in <bucket_name> will be downloaded into <directory>.",
                             "",
                             "<bucket_name> must already exist."])
    parser = argparse.ArgumentParser(description=description,
                                     formatter_class=argparse.RawDescriptionHelpFormatter)
    parser.add_argument(dest='bucket_name',
                        help="Name of object storage bucket")
    parser.add_argument(dest='directory',
                        help="Path to local directory to download the files into.")
    args = parser.parse_args()

    ####
    bucket_name = args.bucket_name
    directory = args.directory

    if not os.path.isdir(directory):
        parser.print_usage()
    else:
        start_time = time.time()
        print('Starting Downloading Bucket - Parallel:', bucket_name, ' at ', time.strftime("%H:%M:%S"))
        object_storage_client = oci.object_storage.ObjectStorageClient(config)
        object_list = object_storage_client.list_objects(NAMESPACE, bucket_name).data

        count = len(object_list.objects)
        print(f'... {count} files to download')

        proc_list = []
        num=0
        # one process per object in the Bucket
        for o in object_list.objects:
            p = Process(target=download_bucket_file, args=(config, NAMESPACE, bucket_name, directory, o.name, num))
            p.start()
            num+=1
            proc_list.append(p)

        # wait for all the downloads to finish
        for job in proc_list:
            job.join()

        print('---')
        print(f'Download Finished in {round(time.time()-start_time,2)} seconds.({time.strftime("%H:%M:%S")})')

#### the end ####

I’ve saved the code to a file called bucket_parallel_download.py.

To call this, I run the following using the same DEMO_Bucket and directory of files I used in my previous posts.

python bucket_parallel_download.py DEMO_Bucket /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/

This creates the following output. It takes between 3.6 and 4.4 seconds to download the 13 files, based on my connection.

[16:30~/Dropbox]> python bucket_parallel_download.py DEMO_Bucket /Users/brendan.tierney/DEMO_BUCKET

Starting Downloading Bucket - Parallel: DEMO_Bucket at 16:30:05

... 13 files to download

..Starting Download File (0): 2017-08-31 19.46.42.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (1): 2017-10-16 13.13.20.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (2): 2017-11-22 20.18.58.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (3): 2018-12-03 11.04.57.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (11): thumbnail_IMG_2333.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (5): IMG_2347.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (9): thumbnail_IMG_1711.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (4): 347397087_620984963239631_2131524631626484429_n.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (10): thumbnail_IMG_1712.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (8): thumbnail_IMG_1710.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (7): oug_ire18_1.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (6): IMG_6779.jpg from Bucket DEMO_Bucket at 16:30:08

..Starting Download File (12): thumbnail_IMG_2336.jpg from Bucket DEMO_Bucket at 16:30:08

..Finished Download (9) in 0.67 seconds.

..Finished Download (11) in 0.74 seconds.

..Finished Download (10) in 0.7 seconds.

..Finished Download (5) in 0.8 seconds.

..Finished Download (7) in 0.7 seconds.

..Finished Download (1) in 1.0 seconds.

..Finished Download (12) in 0.81 seconds.

..Finished Download (4) in 1.02 seconds.

..Finished Download (6) in 0.97 seconds.

..Finished Download (2) in 1.25 seconds.

..Finished Download (8) in 1.16 seconds.

..Finished Download (0) in 1.47 seconds.

..Finished Download (3) in 1.47 seconds.

---

Download Finished in 4.09 seconds.(16:30:09)

Oracle Object Storage – Parallel Uploading

In my previous posts on using Python to work with OCI Object Storage, I gave code examples and illustrated how to create Buckets, explore Buckets, upload files, download files and delete files and buckets, all using Python and files on your computer.

- Oracle Object Storage – Setup and Explore

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

Building upon the code I’ve given for uploading files, which did so sequentially, in this post I’ve taken that code and expanded it to allow the files to be uploaded in parallel to an OCI Bucket. This is achieved using the Python multiprocessing library.

Here’s the code.

import oci
import os
import argparse
from multiprocessing import Process
from glob import glob
import time

####
def upload_file(config, NAMESPACE, b, f, num):
    if os.path.isfile(f):
        try:
            start_time = time.time()
            object_storage_client = oci.object_storage.ObjectStorageClient(config)
            with open(f, 'rb') as in_file:
                object_storage_client.put_object(NAMESPACE, b, os.path.basename(f), in_file)
            print(f'. Finished {num} uploading {f} in {round(time.time()-start_time,2)} seconds')
        except Exception as e:
            print(f'Error uploading file {num}. Try again.')
            print(e)
    else:
        print(f'... File {f} does not exist or cannot be found. Check file name and full path')

####
def check_bucket_exists(config, NAMESPACE, b_name):
    #check if Bucket exists
    is_there = False
    object_storage_client = oci.object_storage.ObjectStorageClient(config)
    l_b = object_storage_client.list_buckets(NAMESPACE, config.get("tenancy")).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True

    if is_there:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

####
if __name__ == "__main__":
    #setup for OCI
    config = oci.config.from_file()
    object_storage = oci.object_storage.ObjectStorageClient(config)
    NAMESPACE = object_storage.get_namespace().data

    ####
    description = "\n".join(["Upload files in parallel to OCI storage.",
                             "All files in <directory> will be uploaded. Include '/' at end.",
                             "",
                             "<bucket_name> must already exist."])
    parser = argparse.ArgumentParser(description=description,
                                     formatter_class=argparse.RawDescriptionHelpFormatter)
    parser.add_argument(dest='bucket_name',
                        help="Name of object storage bucket")
    parser.add_argument(dest='directory',
                        help="Path to local directory containing files to upload.")
    args = parser.parse_args()

    ####
    bucket_name = args.bucket_name
    directory = args.directory

    if not os.path.isdir(directory):
        parser.print_usage()
    else:
        dir_pattern = directory + os.path.sep + "*"
        proc_list = []
        num=0
        start_time = time.time()

        #### Check if Bucket Exists ####
        b_exists = check_bucket_exists(config, NAMESPACE, bucket_name)
        if b_exists:
            try:
                #### Start uploading files ####
                for file_path in glob(dir_pattern):
                    print(f"Starting {num} upload for {file_path}")
                    p = Process(target=upload_file, args=(config, NAMESPACE, bucket_name, file_path, num))
                    p.start()
                    num+=1
                    proc_list.append(p)
            except Exception as e:
                print(f'Error uploading file ({num}). Try again.')
                print(e)
        else:
            print('... Create Bucket before uploading Directory.')

        # wait for any uploads that were started to finish
        for job in proc_list:
            job.join()

        print('---')
        print(f'Finished uploading all files ({num}) in {round(time.time()-start_time,2)} seconds')

#### the end ####

I’ve saved the code to a file called bucket_parallel.py.
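The script starts one process per file, which is fine for 13 images but could be heavy for a directory with thousands of files. Here is a minimal sketch of one way to cap the number of concurrent uploads. It uses a thread pool rather than the processes used above (an assumption on my part that uploads are mostly waiting on the network), and `fake_upload`, `upload_all` and `max_workers` are hypothetical names for illustration. `fake_upload` just returns the file size so the sketch runs without an OCI account.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor, as_completed
from glob import glob


def fake_upload(file_path):
    """Hypothetical stand-in for the real upload function: returns the file size."""
    return os.path.getsize(file_path)


def upload_all(directory, upload_fn, max_workers=4):
    """Run upload_fn over every regular file in directory, max_workers at a time."""
    # glob("*") does not match hidden files such as .DS_Store; isfile() skips sub-directories
    paths = [p for p in glob(os.path.join(directory, "*")) if os.path.isfile(p)]
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(upload_fn, p): p for p in paths}
        for future in as_completed(futures):
            # result() re-raises any exception from the worker
            results[futures[future]] = future.result()
    return results


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for i in range(5):
            with open(os.path.join(tmp, f"img_{i}.jpg"), "wb") as fh:
                fh.write(b"x" * (i + 1))
        done = upload_all(tmp, fake_upload, max_workers=2)
        print(f"Uploaded {len(done)} files")
```

To try this against a real Bucket you would swap `fake_upload` for a function that wraps the upload call, keeping the same one-argument shape.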

To call this, I run the following using the same DEMO_Bucket and directory of files I used in my previous posts.

python bucket_parallel.py DEMO_Bucket /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/

This produces the following output. Uploading the 13 files took between 3.3 and 4.6 seconds, depending on my connection.

[15:29~/Dropbox]> python bucket_parallel.py DEMO_Bucket /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/

Bucket DEMO_Bucket exists.

Starting 0 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2336.jpg

Starting 1 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-08-31 19.46.42.jpg

Starting 2 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2333.jpg

Starting 3 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/347397087_620984963239631_2131524631626484429_n.jpg

Starting 4 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1712.jpg

Starting 5 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1711.jpg

Starting 6 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-11-22 20.18.58.jpg

Starting 7 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1710.jpg

Starting 8 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2018-12-03 11.04.57.jpg

Starting 9 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_6779.jpg

Starting 10 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/oug_ire18_1.jpg

Starting 11 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-10-16 13.13.20.jpg

Starting 12 upload for /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_2347.jpg

. Finished 2 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2333.jpg in 0.752561092376709 seconds

. Finished 5 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1711.jpg in 0.7750208377838135 seconds

. Finished 4 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1712.jpg in 0.7535321712493896 seconds

. Finished 0 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_2336.jpg in 0.8419861793518066 seconds

. Finished 7 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/thumbnail_IMG_1710.jpg in 0.7582859992980957 seconds

. Finished 10 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/oug_ire18_1.jpg in 0.8714470863342285 seconds

. Finished 12 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_2347.jpg in 0.8753311634063721 seconds

. Finished 1 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-08-31 19.46.42.jpg in 1.2201581001281738 seconds

. Finished 11 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-10-16 13.13.20.jpg in 1.2848408222198486 seconds

. Finished 3 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/347397087_620984963239631_2131524631626484429_n.jpg in 1.325110912322998 seconds

. Finished 9 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/IMG_6779.jpg in 1.6633048057556152 seconds

. Finished 8 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2018-12-03 11.04.57.jpg in 1.8549730777740479 seconds

. Finished 6 uploading /Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/2017-11-22 20.18.58.jpg in 2.018144130706787 seconds

---

Finished uploading all files (13) in 3.9126579761505127 seconds

Oracle Object Storage – Downloading and Deleting

In my previous posts on using Object Storage I illustrated what you need to do to set up your connection, explore Object Storage, create Buckets and add files. In this post, I’ll show you how to download files from a Bucket, and how to delete Buckets.

- Oracle Object Storage – Setup and Explore

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

Let’s start with downloading the files in a Bucket. In my previous post, I gave some Python code and functions for creating Buckets and uploading files. The Python function below downloads every file in a Bucket to a local directory, creating that directory if it doesn’t already exist. Deleting comes later in this post: a Bucket needs to be empty before it can be deleted, so the delete function checks for files and, if any exist, deletes them before deleting the Bucket.

Namespace needs to be defined, and you can see how that is defined by looking at my earlier posts on this topic.

def download_bucket(b, d):
    if os.path.exists(d) == True:
        print(f'{d} already exists.')
    else:
        print(f'Creating {d}')
        os.makedirs(d)

    print('Downloading Bucket:',b)
    object_list = object_storage_client.list_objects(NAMESPACE, b).data
    count = 0
    for i in object_list.objects:
        count+=1
    print(f'... {count} files')

    for o in object_list.objects:
        print(f'Downloading object {o.name}')
        get_obj = object_storage_client.get_object(NAMESPACE, b, o.name)
        with open(os.path.join(d,o.name), 'wb') as f:
            for chunk in get_obj.data.raw.stream(1024 * 1024, decode_content=False):
                f.write(chunk)

    print('Download Finished.')

Here’s an example of this working.

download_dir = '/Users/brendan.tierney/DEMO_BUCKET'

download_bucket(BUCKET_NAME, download_dir)

/Users/brendan.tierney/DEMO_BUCKET already exists.

Downloading Bucket: DEMO_Bucket

... 14 files

Downloading object .DS_Store

Downloading object 2017-08-31 19.46.42.jpg

Downloading object 2017-10-16 13.13.20.jpg

Downloading object 2017-11-22 20.18.58.jpg

Downloading object 2018-12-03 11.04.57.jpg

Downloading object 347397087_620984963239631_2131524631626484429_n.jpg

Downloading object IMG_2347.jpg

Downloading object IMG_6779.jpg

Downloading object oug_ire18_1.jpg

Downloading object thumbnail_IMG_1710.jpg

Downloading object thumbnail_IMG_1711.jpg

Downloading object thumbnail_IMG_1712.jpg

Downloading object thumbnail_IMG_2333.jpg

Downloading object thumbnail_IMG_2336.jpg

Download Finished.
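One thing to watch out for: object names can contain a '/' (used like a folder), in which case joining the download directory and the object name points into a sub-directory that may not exist yet. The following is a minimal sketch of a helper that builds the local path, creates any missing sub-directories and rejects names that would land outside the download directory. `local_path_for` is a hypothetical name of my own, not part of the OCI library.

```python
import os
import tempfile


def local_path_for(download_dir, object_name):
    """Return a safe local path for object_name inside download_dir.

    Hypothetical helper: creates missing sub-directories and rejects
    object names that would resolve outside download_dir.
    """
    base = os.path.realpath(download_dir)
    target = os.path.realpath(os.path.join(base, object_name))
    # commonpath equals base only when target sits inside base
    if target == base or os.path.commonpath([base, target]) != base:
        raise ValueError(f"Unsafe object name: {object_name!r}")
    os.makedirs(os.path.dirname(target), exist_ok=True)
    return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = local_path_for(tmp, "2017/holiday/IMG_0001.jpg")
        with open(path, "wb") as fh:
            fh.write(b"demo")
        print(os.path.basename(path), os.path.isfile(path))
```

In the download loop, the returned path could be passed to open() in place of the joined directory and object name.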

We can also download individual files. Here’s a function to do that. It’s a simplified version of the previous function.

def download_bucket_file(b, d, f):
    print('Downloading File:',f, ' from Bucket', b)
    try:
        get_obj = object_storage_client.get_object(NAMESPACE, b, f)
        #use a separate name for the file handle so the object name f is not shadowed
        with open(os.path.join(d, f), 'wb') as out_file:
            for chunk in get_obj.data.raw.stream(1024 * 1024, decode_content=False):
                out_file.write(chunk)
        print('Download Finished.')
    except Exception as e:
        print('Error trying to download file. Check parameters and try again')
        print(e)

download_dir = '/Users/brendan.tierney/DEMO_BUCKET'

file_download = 'oug_ire18_1.jpg'

download_bucket_file(BUCKET_NAME, download_dir, file_download)

Downloading File: oug_ire18_1.jpg from Bucket DEMO_Bucket

Download Finished.

The final function is to delete a Bucket from your OCI account.

def delete_bucket(b_name):
    bucket_exists = check_bucket_exists(b_name)
    objects_exist = False
    if bucket_exists == True:
        print('Starting - Deleting Bucket '+b_name)
        print('... checking if objects exist in Bucket (bucket needs to be empty)')
        try:
            object_list = object_storage_client.list_objects(NAMESPACE, b_name).data
            objects_exist = True
        except Exception as e:
            objects_exist = False

        if objects_exist == True:
            print('... ... Objects exists in Bucket. Deleting these objects.')
            count = 0
            for o in object_list.objects:
                count+=1
                object_storage_client.delete_object(NAMESPACE, b_name, o.name)

            if count > 0:
                print(f'... ... Deleted {count} objects in {b_name}')
            else:
                print(f'... ... Bucket is empty. No objects to delete.')
        else:
            print(f'... No objects to delete, Bucket {b_name} is empty')

        print(f'... Deleting bucket {b_name}')
        response = object_storage_client.delete_bucket(NAMESPACE, b_name)
        print(f'Deleted bucket {b_name}')

Before running this function, let’s do a quick check to see what Buckets I have in my OCI account.

list_bucket_counts()

Bucket name: ADW_Bucket

... num of objects : 2

Bucket name: Cats-and-Dogs-Small-Dataset

... num of objects : 100

Bucket name: DEMO_Bucket

... num of objects : 14

Bucket name: Demo

... num of objects : 210

Bucket name: Finding-Widlake-Bucket

... num of objects : 424

Bucket name: Planes-in-Satellites

... num of objects : 89

Bucket name: Vision-Demo-1

... num of objects : 10

Bucket name: root-bucket

... num of objects : 2

I’ve been using DEMO_Bucket in my previous examples and posts. We’ll use this to demonstrate the deleting of a Bucket.

delete_bucket(BUCKET_NAME)

Bucket DEMO_Bucket exists.

Starting - Deleting Bucket DEMO_Bucket

... checking if objects exist in Bucket (bucket needs to be empty)

... ... Objects exists in Bucket. Deleting these objects.

... ... Deleted 14 objects in DEMO_Bucket

... Deleting bucket DEMO_Bucket

Deleted bucket DEMO_Bucket
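A caveat for large Buckets: as far as I understand it, listing objects returns one page of results at a time, so a single call may not see every object. The sketch below shows the general shape of a loop that keeps asking for the next page. It assumes the response carries a `next_start_with` marker and that the listing call accepts a `start` keyword, which is my reading of the OCI SDK, so check the documentation before relying on it. `FakeClient` is a hypothetical stand-in so the sketch runs without an OCI account.

```python
from types import SimpleNamespace


class FakeClient:
    """Hypothetical stand-in for the Object Storage client: 2 names per page."""

    def __init__(self, names):
        self.names = sorted(names)

    def list_objects(self, namespace, bucket, start=None):
        remaining = [n for n in self.names if start is None or n >= start]
        page, rest = remaining[:2], remaining[2:]
        data = SimpleNamespace(
            objects=[SimpleNamespace(name=n) for n in page],
            next_start_with=rest[0] if rest else None,
        )
        return SimpleNamespace(data=data)


def list_all_object_names(client, namespace, bucket):
    """Follow next_start_with until every page has been read."""
    names, start = [], None
    while True:
        # only pass start once there is a continuation marker
        kwargs = {"start": start} if start else {}
        data = client.list_objects(namespace, bucket, **kwargs).data
        names.extend(o.name for o in data.objects)
        start = data.next_start_with
        if not start:
            return names


if __name__ == "__main__":
    client = FakeClient(["a.jpg", "b.jpg", "c.jpg", "d.jpg", "e.jpg"])
    print(list_all_object_names(client, "my_namespace", "DEMO_Bucket"))
```

The same loop could feed the delete step, so that every object is removed before the Bucket itself is deleted.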

Oracle Object Storage – Buckets & Loading files

In a previous post, I showed what you need to do to set up your local PC/laptop to be able to connect to OCI. I also showed how to perform some simple queries on your Object Storage environment. Go check out that post before proceeding with the examples in this blog.

- Oracle Object Storage – Setup and Explore

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

In this post, I’ll build upon my previous post by giving some Python functions to:

- Check if Bucket exists

- Create a Bucket

- Delete a Bucket

- Upload an individual file

- Upload an entire directory

Let’s start with a function to see if a Bucket already exists.

def check_bucket_exists(b_name):
    #check if Bucket exists
    is_there = False
    l_b = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True

    if is_there == True:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

A simple test for a bucket called ‘DEMO_Bucket’. This was defined in a variable previously (see previous post). I’ll use this ‘DEMO_Bucket’ throughout these examples.

b_exists = check_bucket_exists(BUCKET_NAME)

print(b_exists)

---

Bucket DEMO_Bucket does not exist.

False

Next we can move on to a function for creating a Bucket.

def create_bucket(b):
    #create Bucket if it does not exist
    bucket_exists = check_bucket_exists(b)
    if bucket_exists == False:
        try:
            create_bucket_response = object_storage_client.create_bucket(
                NAMESPACE,
                oci.object_storage.models.CreateBucketDetails(
                    #use the name passed into the function
                    name=b,
                    compartment_id=COMPARTMENT_ID
                )
            )
            bucket_exists = True
            # Get the data from response
            print(f'Created Bucket {create_bucket_response.data.name}')
        except Exception as e:
            #not every exception has a .message attribute, so print the exception itself
            print(e)
    else:
        bucket_exists = True
        print(f'... nothing to create.')
    return bucket_exists

A simple test for a bucket called ‘DEMO_Bucket’. This was defined in a variable previously (see previous post).

b_exists = create_bucket(BUCKET_NAME)

---

Bucket DEMO_Bucket does not exist.

Created Bucket DEMO_Bucket

Next, let’s delete a Bucket and any files stored in it.

def delete_bucket(b_name):
    bucket_exists = check_bucket_exists(b_name)
    objects_exist = False
    if bucket_exists == True:
        print('Starting - Deleting Bucket '+b_name)
        print('... checking if objects exist in Bucket (bucket needs to be empty)')
        try:
            object_list = object_storage_client.list_objects(NAMESPACE, b_name).data
            objects_exist = True
        except Exception as e:
            objects_exist = False

        if objects_exist == True:
            print('... ... Objects exists in Bucket. Deleting these objects.')
            count = 0
            for o in object_list.objects:
                count+=1
                object_storage_client.delete_object(NAMESPACE, b_name, o.name)

            if count > 0:
                print(f'... ... Deleted {count} objects in {b_name}')
            else:
                print(f'... ... Bucket is empty. No objects to delete.')
        else:
            print(f'... No objects to delete, Bucket {b_name} is empty')

        print(f'... Deleting bucket {b_name}')
        response = object_storage_client.delete_bucket(NAMESPACE, b_name)
        print(f'Deleted bucket {b_name}')

The example output below shows what happens when I’ve already loaded data into the Bucket (which I haven’t shown in the examples so far – but I will soon).

delete_bucket(BUCKET_NAME)

---

Bucket DEMO_Bucket exists.

Starting - Deleting Bucket DEMO_Bucket

... checking if objects exist in Bucket (bucket needs to be empty)

... ... Objects exists in Bucket. Deleting these objects.

... ... Bucket is empty. No objects to delete.

... Deleting bucket DEMO_Bucket

Deleted bucket DEMO_Bucket

Now that we have our functions for managing Buckets, we can write a function for uploading a file to a Bucket.

def upload_file(b, f):
    file_exists = os.path.isfile(f)
    if file_exists == True:
        #check to see if Bucket exists
        b_exists = check_bucket_exists(b)
        if b_exists == True:
            print(f'... uploading {f}')
            try:
                #open the file in a with-block so the handle is closed after the upload
                with open(f, 'rb') as file_data:
                    object_storage_client.put_object(NAMESPACE, b, os.path.basename(f), file_data)
                print(f'. finished uploading {f}')
            except Exception as e:
                print(f'Error uploading file. Try again.')
                print(e)
        else:
            print('... Create Bucket before uploading file.')
    else:
        print(f'... File {f} does not exist or cannot be found. Check file name and full path')

Just select a file from your computer and give the full path to that file and the Bucket name.

up_file = '/Users/brendan.tierney/Dropbox/bill.xls'

upload_file(BUCKET_NAME, up_file)

---

Bucket DEMO_Bucket does not exist.

... Create Bucket before uploading file.

Our final function is an extended version of the previous one. This function takes a Directory path and uploads all the files to the Bucket.

def upload_directory(b, d):
    count = 0
    #check to see if Bucket exists
    b_exists = check_bucket_exists(b)
    if b_exists == True:
        #loop files
        for filename in os.listdir(d):
            print(f'... uploading {filename}')
            try:
                #open each file in a with-block so the handle is closed after the upload
                with open(os.path.join(d,filename), 'rb') as file_data:
                    object_storage_client.put_object(NAMESPACE, b, filename, file_data)
                count += 1
            except Exception as e:
                print(f'... ... Error uploading file. Try again.')
                print(e)
    else:
        print('... Create Bucket before uploading files.')

    if count == 0:
        print('... Empty directory. No files uploaded.')
    else:
        print(f'Finished uploading Directory : {count} files into {b} bucket')

And to call it:

up_directory = '/Users/brendan.tierney/Dropbox/OCI-Vision-Images/Blue-Peter/'

upload_directory(BUCKET_NAME, up_directory)

---

Bucket DEMO_Bucket exists.

... uploading thumbnail_IMG_2336.jpg

... uploading .DS_Store

... uploading 2017-08-31 19.46.42.jpg

... uploading thumbnail_IMG_2333.jpg

... uploading 347397087_620984963239631_2131524631626484429_n.jpg

... uploading thumbnail_IMG_1712.jpg

... uploading thumbnail_IMG_1711.jpg

... uploading 2017-11-22 20.18.58.jpg

... uploading thumbnail_IMG_1710.jpg

... uploading 2018-12-03 11.04.57.jpg

... uploading IMG_6779.jpg

... uploading oug_ire18_1.jpg

... uploading 2017-10-16 13.13.20.jpg

... uploading IMG_2347.jpg

Finished uploading Directory : 14 files into DEMO_Bucket bucket

Oracle Object Storage – Setup and Explore

This blog post will walk you through how to access Oracle OCI Object Storage and explore what buckets and files you have there, using Python and the OCI Python library. There will be additional posts which will walk through some of the other typical tasks you’ll need to perform with moving files into and out of OCI Object Storage.

- Oracle Object Storage – Buckets & Loading files

- Oracle Object Storage – Downloading and Deleting

- Oracle Object Storage – Parallel Uploading

The first thing you’ll need to do is to install the OCI Python library. You can do this by running the pip command or, if you are using Anaconda, by using their GUI. For example,

pip3 install oci

Check out the OCI Python documentation for more details.

Next, you’ll need to get and set up the configuration settings and download the pem file.

We need to create the config file that will contain the required credentials and information for working with OCI. By default, this file is stored in: ~/.oci/config

mkdir ~/.oci

cd ~/.oci

Now create the config file, using vi or something similar.

vi config

Edit the file to contain the following, but look out for the parts that need to be changed/updated to match your OCI account details.

[ADMIN_USER]
user=ocid1.user.oc1..<unique_ID>
fingerprint=<your_fingerprint>
tenancy = ocid1.tenancy.oc1..<unique_ID>
region = us-phoenix-1
key_file=<path to key .pem file>

The above details can be generated by creating an API key for your OCI user. Copy and paste the default details to the config file.

- [ADMIN_USER] > you can name this anything you want, but it will be referenced in Python.

- user > enter the user ocid. OCID is the unique resource identifier that OCI provides for each resource.

- fingerprint > refers to the fingerprint of the public key you configured for the user.

- tenancy > your tenancy OCID.

- region > the region that you are subscribed to.

- key_file > the path to the .pem file you generated.

Just download the .pem file and copy the config file details. Add the details to the config file, and give the full path to the .pem file, including its name.
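Before connecting, it can be handy to confirm that the config file has every entry listed above. Here is a minimal sketch that uses only Python's standard configparser module (it is not the OCI library's own checking). The function name `missing_config_keys` and the sample values are hypothetical, and the demo writes to a temporary file rather than touching ~/.oci/config.

```python
import configparser
import os
import tempfile

REQUIRED_KEYS = ("user", "fingerprint", "tenancy", "region", "key_file")


def missing_config_keys(config_path, profile):
    """Return the required keys that are absent or empty in the given profile."""
    parser = configparser.ConfigParser()
    parser.read(os.path.expanduser(config_path))
    if profile not in parser:
        return list(REQUIRED_KEYS)
    section = parser[profile]
    return [k for k in REQUIRED_KEYS if not section.get(k, "").strip()]


if __name__ == "__main__":
    # Sample profile with key_file deliberately left out (values are made up)
    sample = (
        "[ADMIN_USER]\n"
        "user=ocid1.user.oc1..example\n"
        "fingerprint=aa:bb:cc\n"
        "tenancy = ocid1.tenancy.oc1..example\n"
        "region = us-phoenix-1\n"
    )
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "config")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write(sample)
        print("Missing:", missing_config_keys(path, "ADMIN_USER"))
```

An empty list means all of the expected entries are present. It does not check that the values themselves are correct.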

You are now ready to use the OCI Python library to access and use your OCI cloud environment. Let’s run some tests to see if everything works and connects ok.

#import libraries

import oci

import json

import os

import io

#load the config file
#(pass the profile name when the section in the config file is not called [DEFAULT])

config = oci.config.from_file("~/.oci/config", "ADMIN_USER")

config

#only part of the output is displayed due to security reasons

{'log_requests': False, 'additional_user_agent': '', 'pass_phrase': None, 'user': 'oci...........

We can now define some core variables.

#My Compartment ID

COMPARTMENT_ID = "ocid1.tenancy.oc1..............

#Object storage Namespace

object_storage_client = oci.object_storage.ObjectStorageClient(config)

NAMESPACE = object_storage_client.get_namespace().data

#Name of Bucket for this demo

BUCKET_NAME = 'DEMO_Bucket'

We can now define some functions to:

- List the Buckets in my OCI account

- List the number of files in each Bucket

- Number of files in a particular Bucket

- Check for Bucket Existence

def list_buckets():
    l_buckets = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    # Get the data from response
    for bucket in l_buckets:
        print(bucket.name)

def list_bucket_counts():
    l_buckets = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    for bucket in l_buckets:
        print("Bucket name: ",bucket.name)
        buck_name = bucket.name
        objects = object_storage_client.list_objects(NAMESPACE, buck_name).data
        count = 0
        for i in objects.objects:
            count+=1
        print('... num of objects :', count)

def check_bucket_exists(b_name):
    #check if Bucket exists
    is_there = False
    l_b = object_storage_client.list_buckets(NAMESPACE, COMPARTMENT_ID).data
    for bucket in l_b:
        if bucket.name == b_name:
            is_there = True

    if is_there == True:
        print(f'Bucket {b_name} exists.')
    else:
        print(f'Bucket {b_name} does not exist.')
    return is_there

def list_bucket_details(b):
    bucket_exists = check_bucket_exists(b)
    if bucket_exists == True:
        objects = object_storage_client.list_objects(NAMESPACE, b).data
        count = 0
        for i in objects.objects:
            count+=1
        print(f'Bucket {b} has objects :', count)
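The counting and searching loops in these functions can also be written with Python's built-in len() and any(). The following is a small sketch of that idea using stand-in objects that only mimic the `.name` and `.objects` attributes used above, so it runs without an OCI connection. The function names `bucket_exists` and `count_objects` are hypothetical.

```python
from types import SimpleNamespace


def bucket_exists(buckets, b_name):
    """True if any bucket in the list has the given name."""
    return any(bucket.name == b_name for bucket in buckets)


def count_objects(listing):
    """Number of objects in a listing that exposes an .objects list."""
    return len(listing.objects)


if __name__ == "__main__":
    # Stand-in data shaped like the responses used above (assumption, for illustration)
    buckets = [SimpleNamespace(name="DEMO_Bucket"), SimpleNamespace(name="Demo")]
    listing = SimpleNamespace(
        objects=[SimpleNamespace(name="a.jpg"), SimpleNamespace(name="b.jpg")]
    )
    print(bucket_exists(buckets, "DEMO_Bucket"), count_objects(listing))
```

Using any() also stops at the first match instead of looping over every Bucket.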

Now we can run these functions to test them. Before running these make sure you can create a connection to OCI.

Changing/Increasing Cell Width in Jupyter Notebook

When working with Jupyter Notebook you might notice the cell width can vary from time to time, and mostly when you use different screens, with different resolutions.

This can make your code appear slightly odd on the screen, with only part of the available width being used. You can go into the default settings to change the sizing, but a fixed setting might not suit most cases.

It would be good to be able to adjust this dynamically. In such a situation, you can use one of the following options.

The first option is to use the IPython option to change the display settings. This first example adjusts everything (menu, toolbar and cells) to 50% of the screen width.

from IPython.display import display, HTML

display(HTML("<style>.container { width:50% !important; }</style>"))

This might not give you the result you want, but it helps to illustrate how to use this command. By changing the percentage, you can get a better outcome. For example, by changing the percentage to 100%.

from IPython.display import display, HTML

display(HTML("<style>.container { width:100% !important; }</style>"))

Keep a careful eye on these changes, as I’ve found Jupyter sometimes stops responding to them. A quick refresh of the page will reset everything back to the default settings. Then just run the command you want.

An alternative is to make the changes to the CSS.

from IPython.display import display, HTML

display(HTML(data="""

<style>

div#notebook-container { width: 95%; }

div#menubar-container { width: 65%; }

div#maintoolbar-container { width: 99%; }

</style>

"""))

You might want to change those percentages to 100%.

If we need to make the changes permanent we can locate the CSS file: custom.css. Depending on your system it will be located in different places.

For Linux and virtual environments have a look at the following directories.

~/.jupyter/custom/custom.css
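If you'd rather script the permanent change, here is a minimal sketch that appends the same `.container` width rule used earlier to a custom.css file, creating the file and folder if they don't exist. The function name `set_notebook_width` is hypothetical, and the demo writes to a temporary folder instead of your real ~/.jupyter directory. I'm assuming the classic Notebook interface here; newer versions may not read this file.

```python
import os
import tempfile


def set_notebook_width(css_path, width_percent=100):
    """Append a container-width rule to a custom.css file, creating it if needed."""
    css_path = os.path.expanduser(css_path)
    folder = os.path.dirname(css_path)
    if folder:
        os.makedirs(folder, exist_ok=True)
    rule = f".container {{ width:{width_percent}% !important; }}\n"
    # append so that any existing rules in the file are kept
    with open(css_path, "a", encoding="utf-8") as fh:
        fh.write(rule)
    return rule


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        demo_css = os.path.join(tmp, "custom", "custom.css")
        set_notebook_width(demo_css, 95)
        with open(demo_css, encoding="utf-8") as fh:
            print(fh.read())
```

To apply it for real you would pass the path shown above, then restart or refresh the notebook.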
