Artificial Intelligence

BOCAS – using OCI GenAI Agent and Streamlit

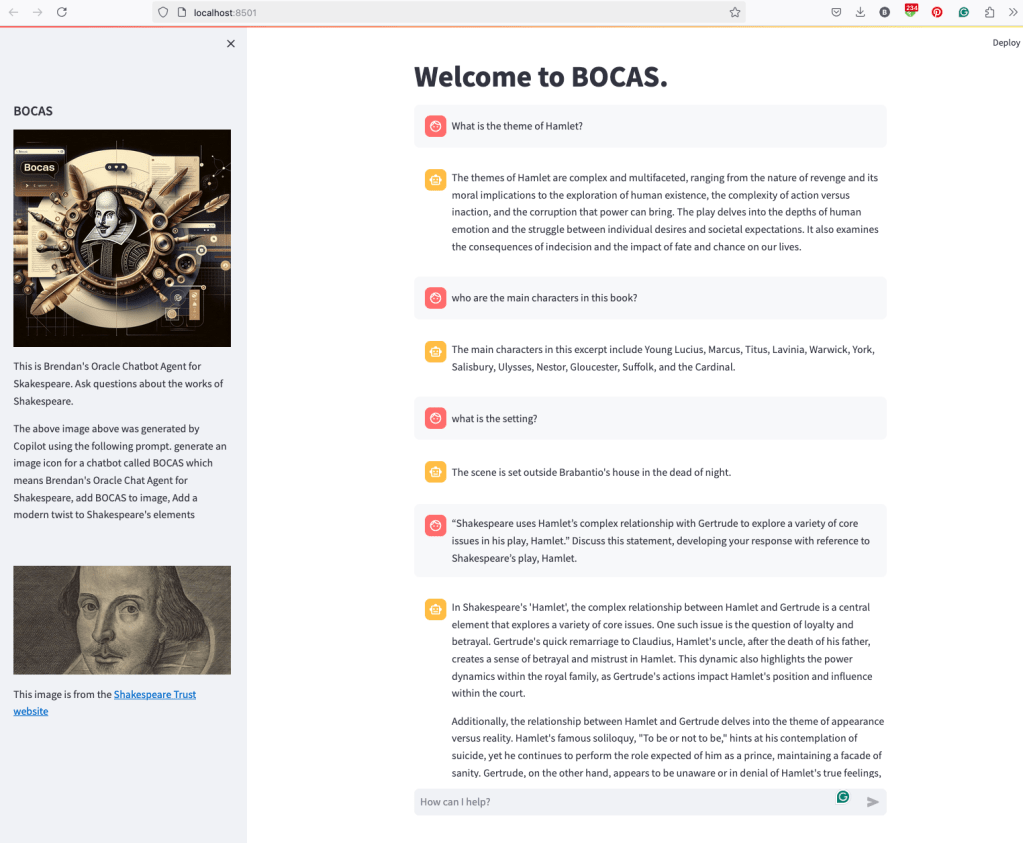

BOCAS stands for Brendan’s Oracle Chatbot Agent for Shakespeare. I’ve previously posted on how to go about creating a GenAI Agent on a specific data set. In this post, I’ll share the code I used to build a chatbot front end for that agent using Python and Streamlit.

And here’s the code:

```python
import streamlit as st
import time
import oci
from oci import generative_ai_agent_runtime
import json

# Page Title
welcome_msg = "Welcome to BOCAS."
welcome_msg2 = "This is Brendan's Oracle Chatbot Agent for Shakespeare. Ask questions about the works of Shakespeare."
st.title(welcome_msg)

# Sidebar Image
st.sidebar.header("BOCAS")
st.sidebar.image("bocas-3.jpg", use_column_width=True)
#with st.sidebar:
#    with st.echo():
#        st.write(welcome_msg2)
st.sidebar.markdown(welcome_msg2)
st.sidebar.markdown("The image above was generated by Copilot using the following prompt: generate an image icon for a chatbot called BOCAS which means Brendan's Oracle Chat Agent for Shakespeare, add BOCAS to image, Add a modern twist to Shakespeare's elements")
st.sidebar.write("")
st.sidebar.write("")
st.sidebar.write("")
st.sidebar.image("https://media.shakespeare.org.uk/images/SBT_SR_OS_37_Shakespeare_Firs.ec42f390.fill-1200x600-c75.jpg")
link = "This image is from the [Shakespeare Trust website](https://media.shakespeare.org.uk/images/SBT_SR_OS_37_Shakespeare_Firs.ec42f390.fill-1200x600-c75.jpg)"
st.sidebar.write(link, unsafe_allow_html=True)

# OCI GenAI settings
CONFIG_PROFILE = "DEFAULT"
config = oci.config.from_file('~/.oci/config', CONFIG_PROFILE)

###
SERVICE_EP = "<your service endpoint>"
AGENT_EP_ID = "<your agent endpoint>"
###

# Response Generator
def response_generator(text_input):
    # Initiate AI Agent runtime client
    genai_agent_runtime_client = generative_ai_agent_runtime.GenerativeAiAgentRuntimeClient(config, service_endpoint=SERVICE_EP, retry_strategy=oci.retry.NoneRetryStrategy())
    create_session_details = generative_ai_agent_runtime.models.CreateSessionDetails()
    create_session_details.display_name = "Welcome to BOCAS"
    create_session_details.idle_timeout_in_seconds = 20
    create_session_details.description = welcome_msg
    create_session_response = genai_agent_runtime_client.create_session(create_session_details, AGENT_EP_ID)

    # Define Chat details and input message/question
    session_details = generative_ai_agent_runtime.models.ChatDetails()
    session_details.session_id = create_session_response.data.id
    session_details.should_stream = False
    session_details.user_message = text_input

    # Get AI Agent Response
    session_response = genai_agent_runtime_client.chat(agent_endpoint_id=AGENT_EP_ID, chat_details=session_details)
    #print(str(session_response.data))
    response = session_response.data.message.content.text
    return response

# Initialize chat history
if "messages" not in st.session_state:
    st.session_state.messages = []

# Display chat messages from history on app rerun
for message in st.session_state.messages:
    with st.chat_message(message["role"]):
        st.markdown(message["content"])

# Accept user input
if prompt := st.chat_input("How can I help?"):
    # Add user message to chat history
    st.session_state.messages.append({"role": "user", "content": prompt})
    # Display user message in chat message container
    with st.chat_message("user"):
        st.markdown(prompt)
    # Display assistant response in chat message container
    with st.chat_message("assistant"):
        response = response_generator(prompt)
        st.write(response)
    # Add assistant response to chat history
    st.session_state.messages.append({"role": "assistant", "content": response})
```

Calling Custom OCI Gen AI Agent using Python

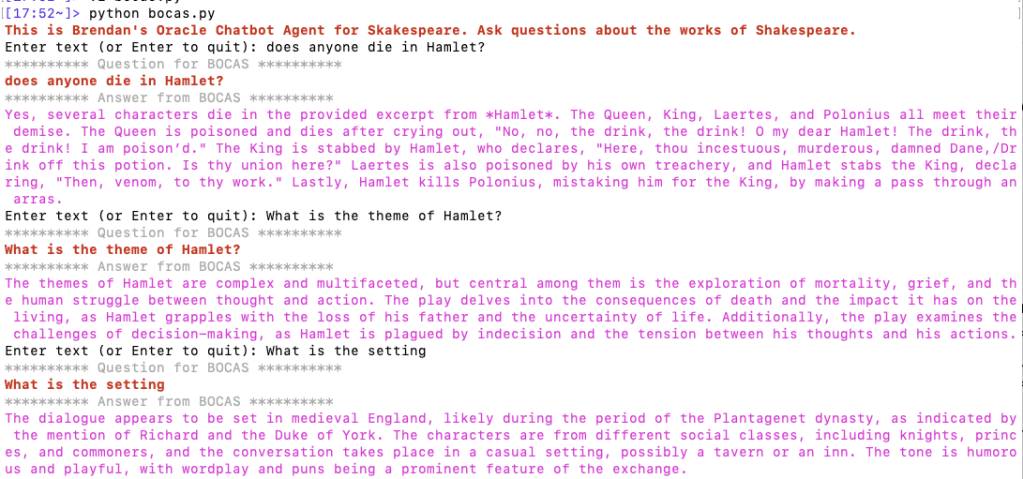

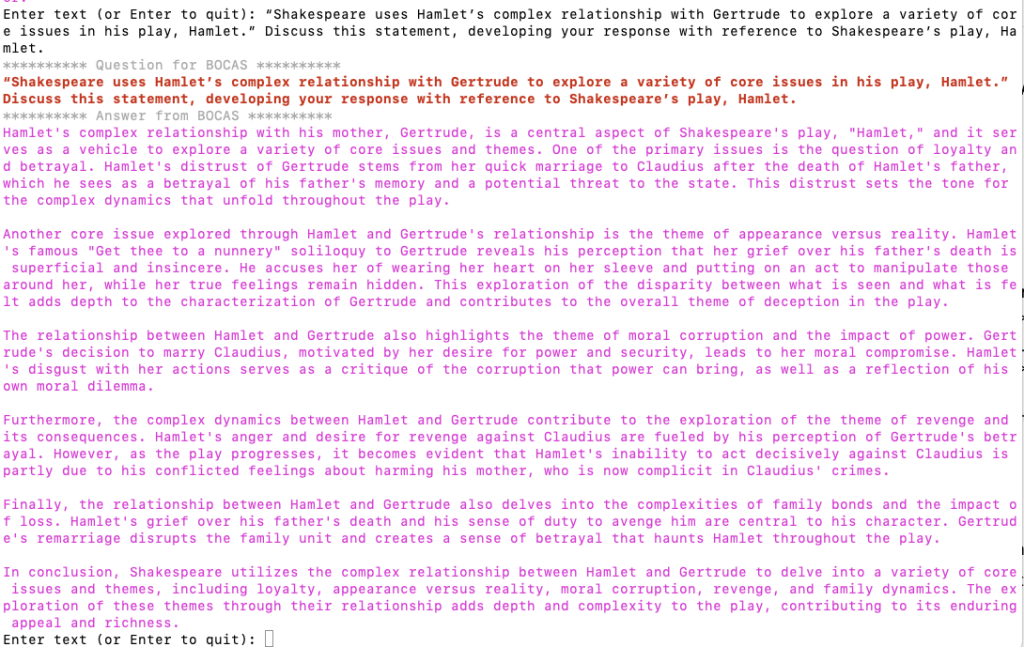

In a previous post, I demonstrated how to create a custom Generative AI Agent on OCI. This GenAI Agent was built using some of Shakespeare’s works. The OCI GenAI Agent interface is an easy way to test the Agent and to see how it behaves. Beyond that, it is of limited use, as you’ll need to call the Agent from some other language or tool. The most common of these is Python.

The code below calls my GenAI Agent, which I’ve called BOCAS (Brendan’s Oracle Chat Agent for Shakespeare).

```python
import oci
from oci import generative_ai_agent_runtime
import json
from colorama import Fore, Back, Style

CONFIG_PROFILE = "DEFAULT"
config = oci.config.from_file('~/.oci/config', CONFIG_PROFILE)

# AI Agent service endpoint
SERVICE_EP = "<add your Service Endpoint>"
AGENT_EP_ID = "<add your GenAI Agent Endpoint>"

welcome_msg = "This is Brendan's Oracle Chatbot Agent for Shakespeare. Ask questions about the works of Shakespeare."

def gen_Agent_Client():
    # Initiate AI Agent runtime client
    genai_agent_runtime_client = generative_ai_agent_runtime.GenerativeAiAgentRuntimeClient(config, service_endpoint=SERVICE_EP, retry_strategy=oci.retry.NoneRetryStrategy())
    create_session_details = generative_ai_agent_runtime.models.CreateSessionDetails()
    create_session_details.display_name = "Welcome to BOCAS"
    create_session_details.idle_timeout_in_seconds = 20
    create_session_details.description = welcome_msg
    return create_session_details, genai_agent_runtime_client

def Quest_Answer(user_question, create_session_details, genai_agent_runtime_client):
    # Create a Chat Session for AI Agent; if that fails, rebuild the client and retry once
    try:
        create_session_response = genai_agent_runtime_client.create_session(create_session_details, AGENT_EP_ID)
    except Exception:
        create_session_details, genai_agent_runtime_client = gen_Agent_Client()
        create_session_response = genai_agent_runtime_client.create_session(create_session_details, AGENT_EP_ID)

    # Define Chat details and input message/question
    session_details = generative_ai_agent_runtime.models.ChatDetails()
    session_details.session_id = create_session_response.data.id
    session_details.should_stream = False
    session_details.user_message = user_question

    # Get AI Agent Response
    session_response = genai_agent_runtime_client.chat(agent_endpoint_id=AGENT_EP_ID, chat_details=session_details)
    return session_response

print(Style.BRIGHT + Fore.RED + welcome_msg + Style.RESET_ALL)
ses_details, genai_client = gen_Agent_Client()

while True:
    question = input("Enter text (or Enter to quit): ")
    if not question:
        break
    chat_response = Quest_Answer(question, ses_details, genai_client)
    print(Style.DIM + '********** Question for BOCAS **********')
    print(Style.BRIGHT + Fore.RED + question + Style.RESET_ALL)
    print(Style.DIM + '********** Answer from BOCAS **********' + Style.RESET_ALL)
    print(Fore.MAGENTA + chat_response.data.message.content.text + Style.RESET_ALL)

print("*** The End - Exiting BOCAS ***")
```

When the above code is run, it loops, asking for questions, until you press the ‘Enter’ key without typing a question. Here is the output of BOCAS for some of the questions I asked in my previous post, along with a few others. These questions are based on the Irish Leaving Certificate English Examination.
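Both this script and the Streamlit app create a brand-new agent session for every question, so (assuming, as the session concept suggests, that the service keeps conversation context per session) each question is answered without reference to the earlier ones. Below is a minimal sketch, not the code I used above, of one way to reuse a session across turns and only create a new one once the 20-second `idle_timeout_in_seconds` has passed. The class `ReusableAgentSession` and its `create_session`/`send` callables are hypothetical; the commented wiring at the end assumes the `genai_agent_runtime_client`, `create_session_details` and `AGENT_EP_ID` objects from the script above, and that `ChatDetails` accepts keyword arguments as OCI SDK model classes generally do.

```python
import time
from typing import Callable, Optional


class ReusableAgentSession:
    """Hypothetical helper: reuse one agent session until it has been idle too long."""

    def __init__(self, create_session: Callable[[], str],
                 send: Callable[[str, str], str],
                 idle_timeout_in_seconds: float = 20,
                 clock: Callable[[], float] = time.monotonic):
        self._create_session = create_session  # returns a new session ID
        self._send = send                      # (session_id, question) -> answer text
        self._idle_timeout = idle_timeout_in_seconds
        self._clock = clock
        self._session_id: Optional[str] = None
        self._last_used = 0.0

    def ask(self, question: str) -> str:
        now = self._clock()
        # Start a new session on the first call, or once the idle timeout has passed
        if self._session_id is None or now - self._last_used >= self._idle_timeout:
            self._session_id = self._create_session()
        answer = self._send(self._session_id, question)
        self._last_used = self._clock()
        return answer


# Example wiring (assumes the client, session details and endpoint ID from the script above):
# session = ReusableAgentSession(
#     create_session=lambda: genai_agent_runtime_client.create_session(
#         create_session_details, AGENT_EP_ID).data.id,
#     send=lambda sid, q: genai_agent_runtime_client.chat(
#         agent_endpoint_id=AGENT_EP_ID,
#         chat_details=generative_ai_agent_runtime.models.ChatDetails(
#             session_id=sid, should_stream=False, user_message=q),
#     ).data.message.content.text,
# )
# print(session.ask("Who is Iago?"))
```

Passing the two OCI calls in as functions keeps the timeout logic separate from the SDK, so it can be tried out without an OCI account.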

OCI Gen AI – How to call using Python

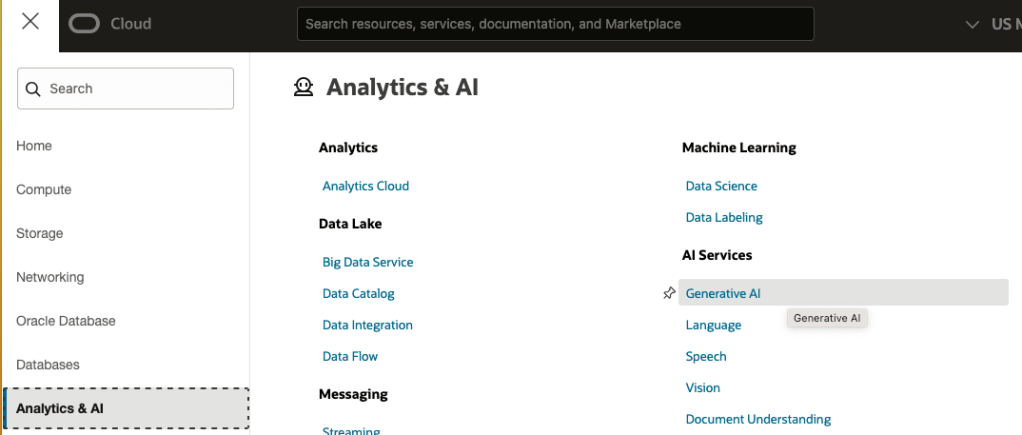

Oracle OCI has some Generative AI features, one of which is a Playground that lets you experiment with several of the Cohere models. The Playground includes Chat, Generation, Summarization and Embedding.

OCI Generative AI services are only available in a few Cloud Regions. You can check the available regions in the documentation. A simple way to check if it is available in your cloud account is to go to the menu and see if it is listed in the Analytics & AI section.
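Because the service is regional, the inference endpoint used from code also names a region (`us-chicago-1` in the generated Python code later in this post). A small sketch, assuming other regions follow the same `https://inference.generativeai.<region>.oci.oraclecloud.com` pattern; the function `inference_endpoint` and the `SUPPORTED` set are hypothetical placeholders, and you should fill the set from the regions the documentation actually lists.

```python
def inference_endpoint(region: str, supported_regions: set[str]) -> str:
    """Build the Generative AI inference endpoint for a region (assumed URL pattern)."""
    region = region.strip().lower()
    if region not in supported_regions:
        raise ValueError(f"Generative AI is not listed as available in {region!r}")
    # Pattern taken from the us-chicago-1 endpoint in the generated code
    return f"https://inference.generativeai.{region}.oci.oraclecloud.com"


# Fill this set from the regions listed in the OCI documentation
SUPPORTED = {"us-chicago-1"}
print(inference_endpoint("us-chicago-1", SUPPORTED))
```

With a config loaded by `oci.config.from_file`, passing `config["region"]` would pick up the region from your own profile.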

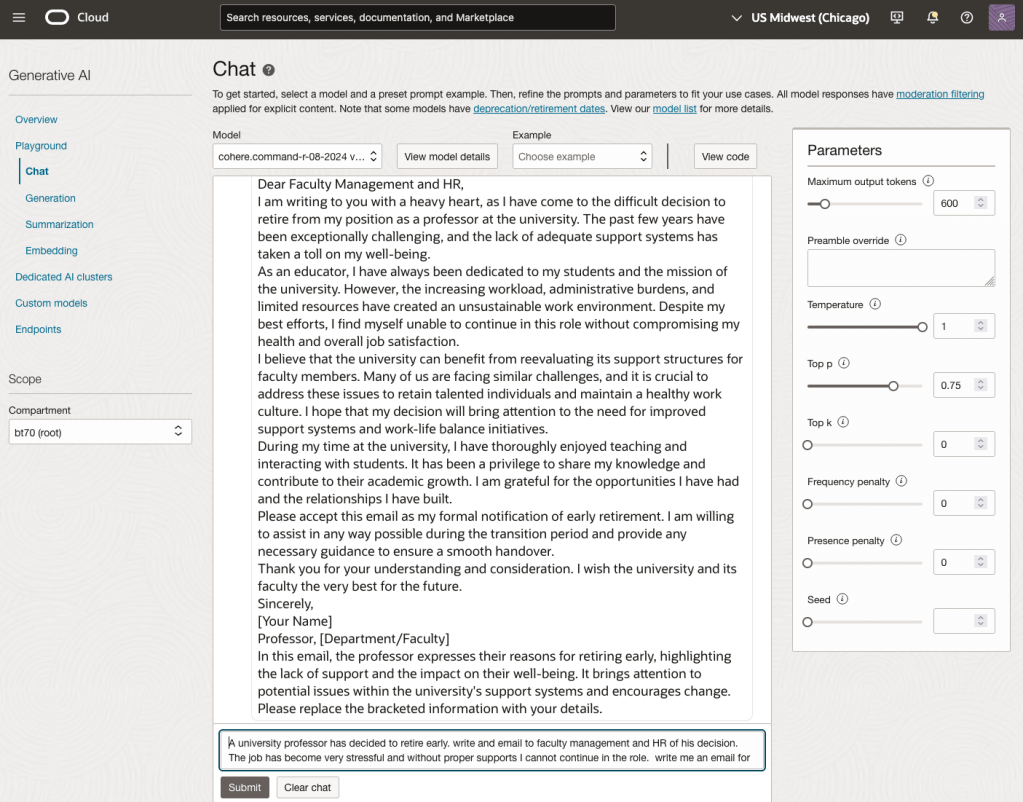

When the webpage opens, you can select the Playground from the main page or select one of the options from the menu on the right-hand side of the page. The following image shows this menu, and in this image I’ve selected the Chat option.

You can enter your questions into the chat box at the bottom of the screen. In the image, I’ve used the following text to generate a retirement email.

A university professor has decided to retire early. write and email to faculty management and HR of his decision. The job has become very stressful and without proper supports I cannot continue in the role. write me an email for this

Using this playground is useful for trying things out and seeing what works and doesn’t work for you. When you are ready to use or deploy such a Generative AI solution, you’ll need to do so using some other coding environment. If you look toward the top right-hand corner of this playground page, you’ll see a ‘View code’ button. When you click on this, code will be generated for you in Java and Python. You can copy and paste this into any environment and quickly have a Chatbot up and running in a few minutes. I was going to say a few seconds, but you do need to set up a config file (~/.oci/config) to create a secure connection to your OCI account. Here is a blog post I wrote about setting this up.
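The generated code loads that file with `oci.config.from_file('~/.oci/config', CONFIG_PROFILE)`, and the SDK also offers `oci.config.validate_config(config)` for checking a loaded config. As a dependency-free sketch, the hypothetical helper below uses Python’s `configparser` to check that a profile contains the entries an API-key profile normally has (`user`, `fingerprint`, `key_file`, `tenancy`, `region`); that key list is an assumption based on the standard layout, and the sample values are placeholders.

```python
import configparser

# Entries an API-key profile normally contains (assumption based on the standard OCI config layout)
REQUIRED_KEYS = ("user", "fingerprint", "key_file", "tenancy", "region")


def missing_oci_keys(config_text: str, profile: str = "DEFAULT") -> list[str]:
    """Hypothetical helper: list required entries missing from a profile in an OCI config file."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    if profile != "DEFAULT" and not parser.has_section(profile):
        return list(REQUIRED_KEYS)
    section = parser[profile]  # named profiles also see values from [DEFAULT]
    return [key for key in REQUIRED_KEYS if not section.get(key)]


# Placeholder values only; a real file holds your own OCIDs, fingerprint and key path
sample = """
[DEFAULT]
user=ocid1.user.oc1..example
fingerprint=aa:bb:cc
key_file=~/.oci/oci_api_key.pem
tenancy=ocid1.tenancy.oc1..example
"""
print(missing_oci_keys(sample))  # → ['region']
```

To check your own file, read `~/.oci/config` (after `os.path.expanduser`) and pass its text to `missing_oci_keys`.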

Here is a copy of that Python code with some minor edits: 1) I’ve removed my Compartment ID, and 2) I’ve added some message requests. You can comment/uncomment these as you like or add something new.

import oci

# Setup basic variables

# Auth Config

# TODO: Please update config profile name and use the compartmentId that has policies grant permissions for using Generative AI Service

compartment_id = <add your Compartment ID>

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', CONFIG_PROFILE)

# Service endpoint

endpoint = "https://inference.generativeai.us-chicago-1.oci.oraclecloud.com"

generative_ai_inference_client = oci.generative_ai_inference.GenerativeAiInferenceClient(config=config, service_endpoint=endpoint, retry_strategy=oci.retry.NoneRetryStrategy(), timeout=(10,240))

chat_detail = oci.generative_ai_inference.models.ChatDetails()

chat_request = oci.generative_ai_inference.models.CohereChatRequest()

#chat_request.message = "Tell me what you can do?"

#chat_request.message = "How does GenAI work?"

chat_request.message = "What's the weather like today where I live?"

chat_request.message = "Could you look it up for me?"

chat_request.message = "Will Elon Musk buy OpenAI?"

chat_request.message = "Tell me about Stargate Project and how it will work?"

chat_request.message = "What is the most recent date your model is built on?"

chat_request.max_tokens = 600

chat_request.temperature = 1

chat_request.frequency_penalty = 0

chat_request.top_p = 0.75

chat_request.top_k = 0

chat_request.seed = None

chat_detail.serving_mode = oci.generative_ai_inference.models.OnDemandServingMode(model_id="ocid1.generativeaimodel.oc1.us-chicago-1.amaaaaaask7dceyanrlpnq5ybfu5hnzarg7jomak3q6kyhkzjsl4qj24fyoq")

chat_detail.chat_request = chat_request

chat_detail.compartment_id = compartment_id

chat_response = generative_ai_inference_client.chat(chat_detail)

# Print result

print("**************************Chat Result**************************")

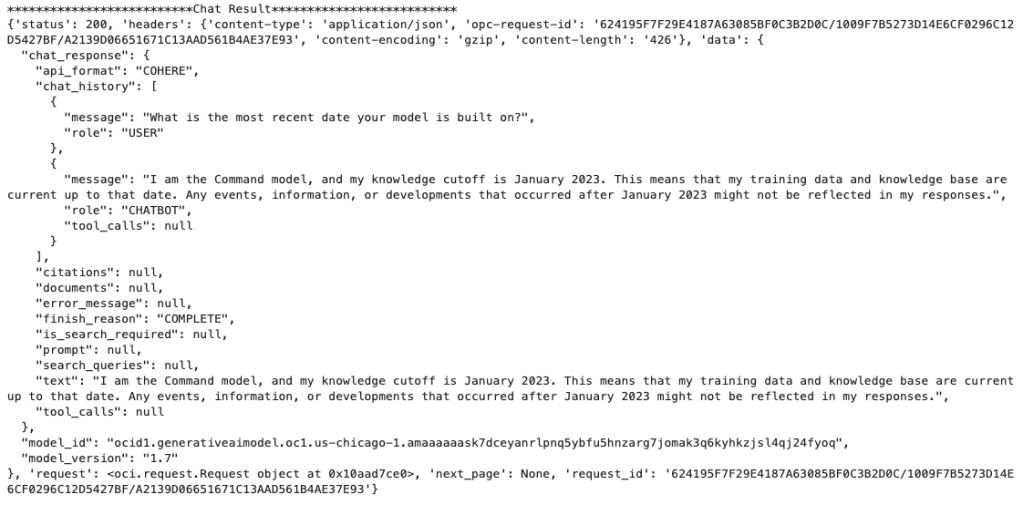

print(vars(chat_response))When I run the above code I get the following output.

NB: If you already have the OCI Python package installed, you might need to update it to the most recent version (for example, with `pip install --upgrade oci`).

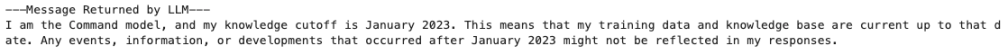

You can see there is a lot generated and returned in the response. We can tidy this up a little with the following code, which displays only the response message.

```python
import json

# Convert the JSON-formatted response data to a dictionary
data = chat_response.data
output = json.loads(str(data))

# Print the output
print("---Message Returned by LLM---")
print(output["chat_response"]["chat_history"][1]["message"])
```
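Indexing `chat_history[1]` assumes the history holds exactly one user message followed by one reply. A slightly more defensive sketch, assuming the dictionary has the shape shown in the output above (entries with `role` and `message` keys, with the model’s turns marked `CHATBOT`), picks the most recent chatbot message instead. The function name and sample dictionary are illustrative, not real output. The response object should also expose the reply directly as `chat_response.data.chat_response.text`, which avoids the JSON round trip.

```python
from typing import Optional


def last_chatbot_message(output: dict) -> Optional[str]:
    """Return the most recent CHATBOT message in the chat history, if there is one."""
    history = output.get("chat_response", {}).get("chat_history") or []
    for entry in reversed(history):
        if entry.get("role") == "CHATBOT":
            return entry.get("message")
    return None


# Illustrative dictionary in the same shape as json.loads(str(chat_response.data))
sample_output = {
    "chat_response": {
        "chat_history": [
            {"role": "USER", "message": "What is the most recent date your model is built on?"},
            {"role": "CHATBOT", "message": "(model reply)"},
        ]
    }
}
print(last_chatbot_message(sample_output))  # → (model reply)
```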

That’s it. Give it a try and see how you can build it into your applications.
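Before building it into an application, it helps to know what the sampling settings in the generated code do. It sets `top_p = 0.75` and `top_k = 0`; for the Cohere models, `top_k = 0` should mean top-k filtering is switched off, leaving `top_p` to narrow the choice. As a rough illustration only (a generic sketch of nucleus sampling, not Cohere’s implementation), the function below keeps the smallest set of most-likely tokens whose probabilities add up to at least `top_p` and renormalises them; the model would then sample only from that set. The token probabilities are made-up numbers.

```python
def nucleus_filter(probs: dict[str, float], top_p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept: dict[str, float] = {}
    total = 0.0
    for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[token] = p
        total += p
        if total >= top_p:
            break
    # Renormalise so the kept probabilities sum to 1 before sampling
    return {token: p / total for token, p in kept.items()}


# Made-up next-token probabilities
probs = {"Hamlet": 0.5, "Macbeth": 0.3, "Othello": 0.15, "Lear": 0.05}
print(nucleus_filter(probs, top_p=0.75))
```

Here only "Hamlet" and "Macbeth" survive, since together they already cover 0.8 of the probability; a lower `top_p` makes the output more predictable, and a higher `temperature` flattens the probabilities before this cut is made.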

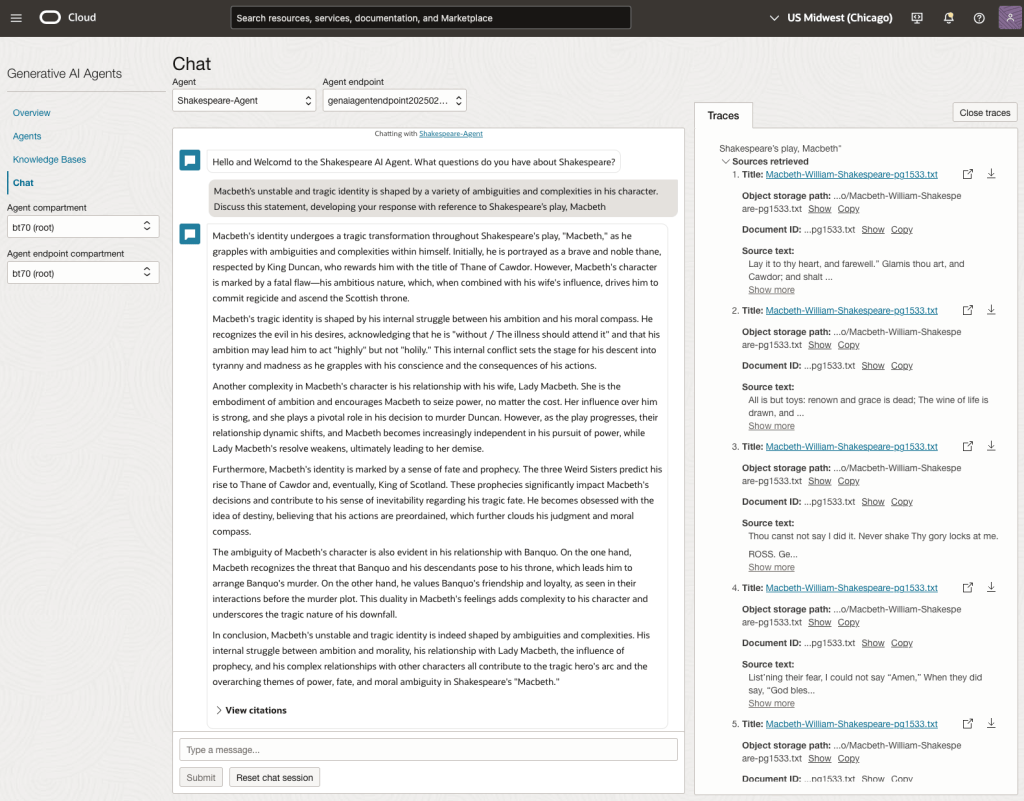

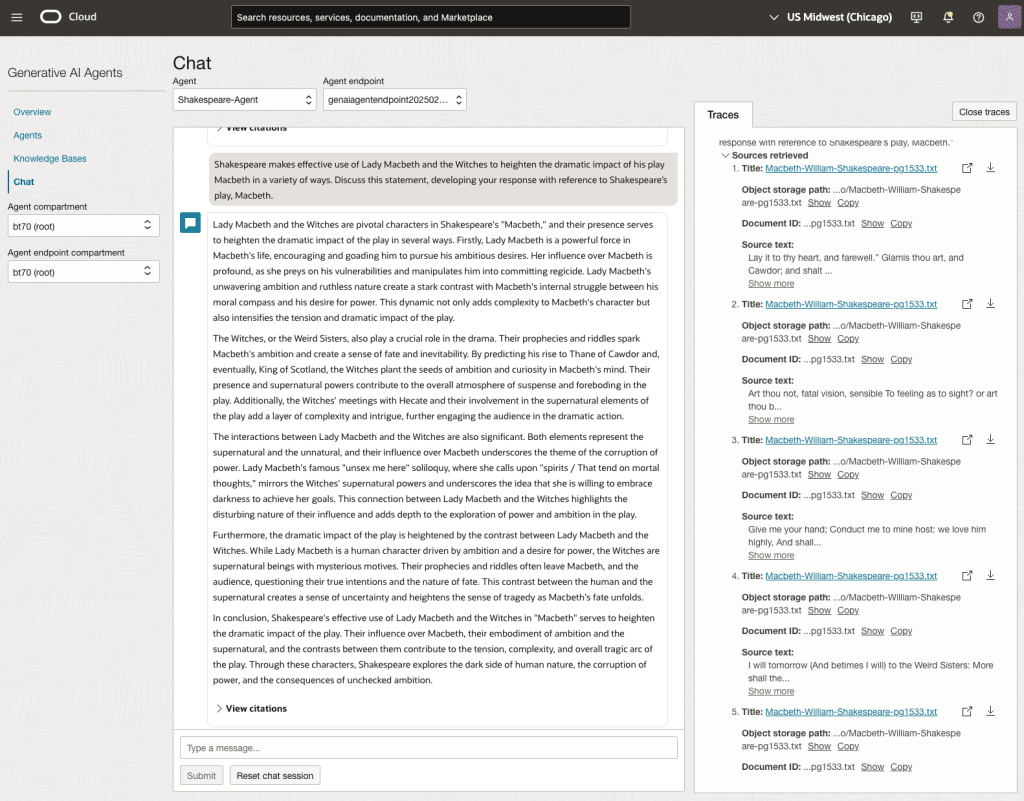

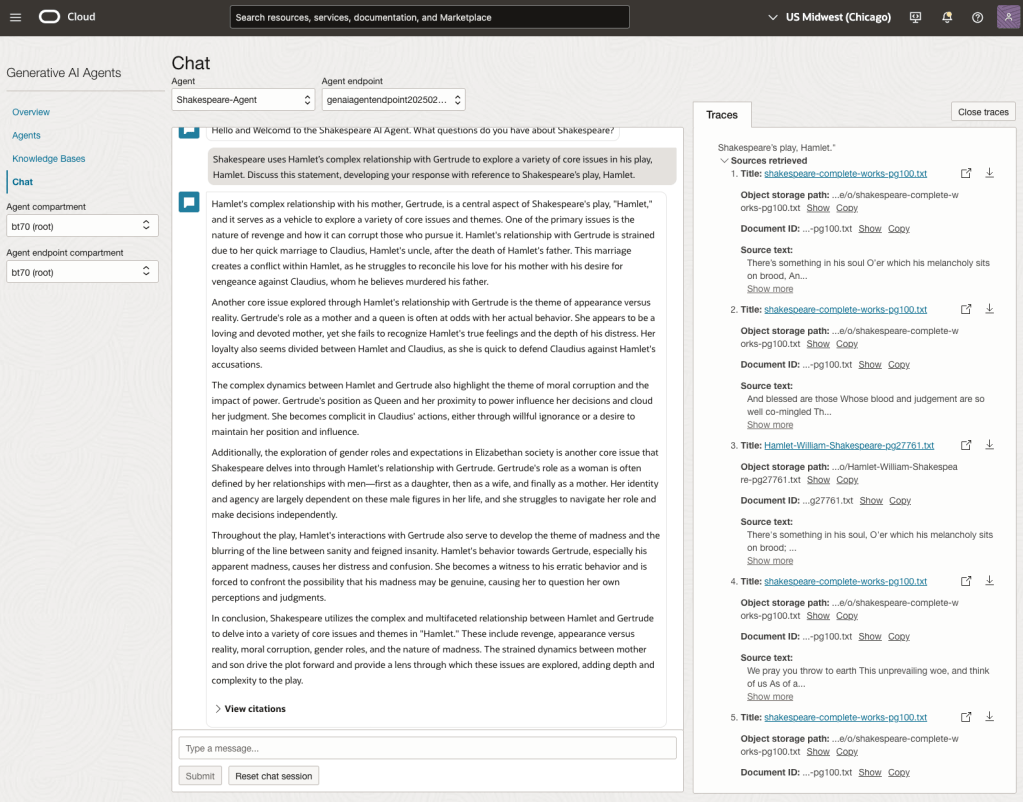

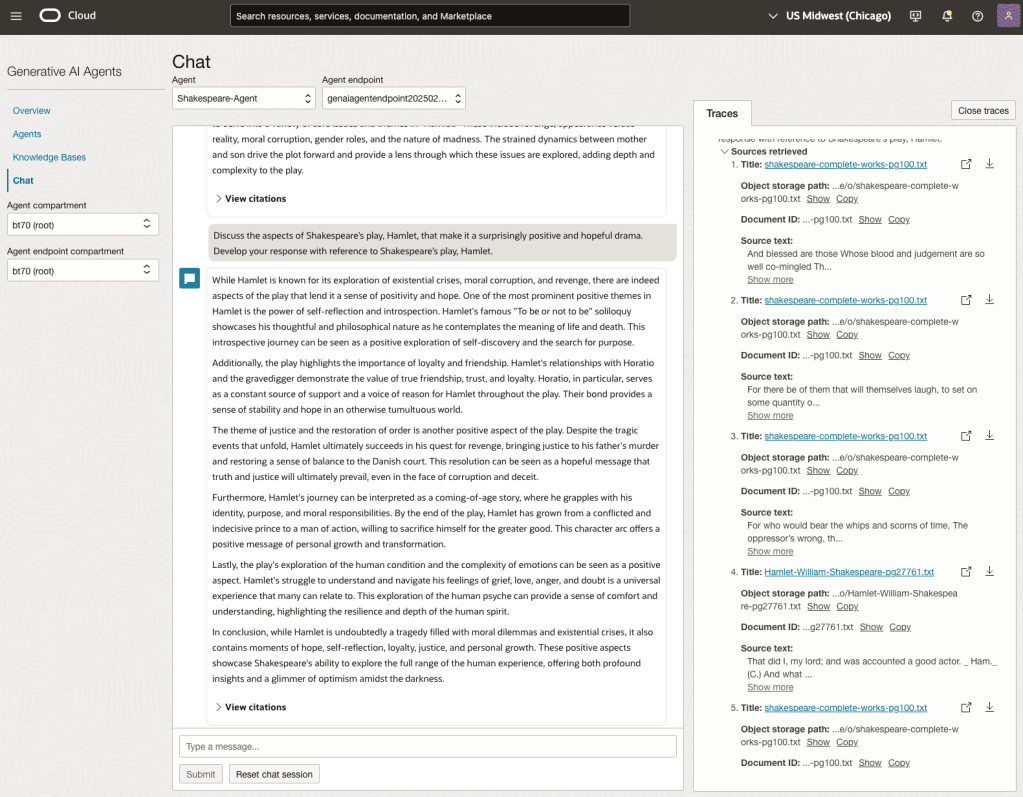

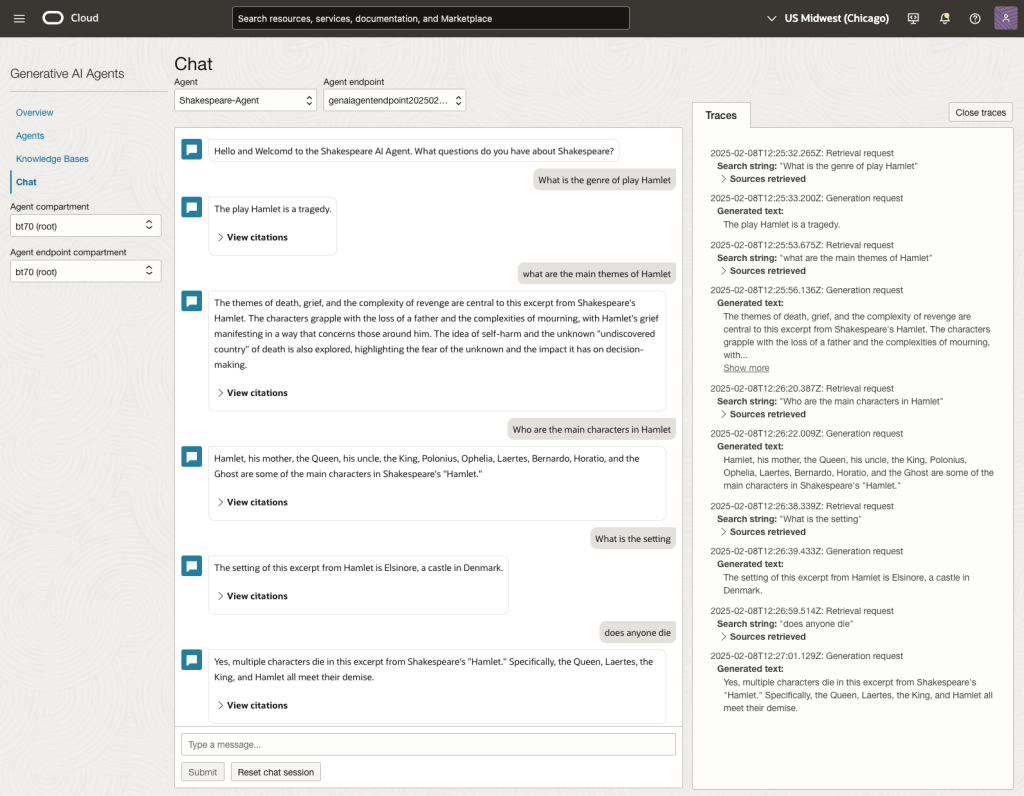

Using a Gen AI Agent to answer Leaving Certificate English papers

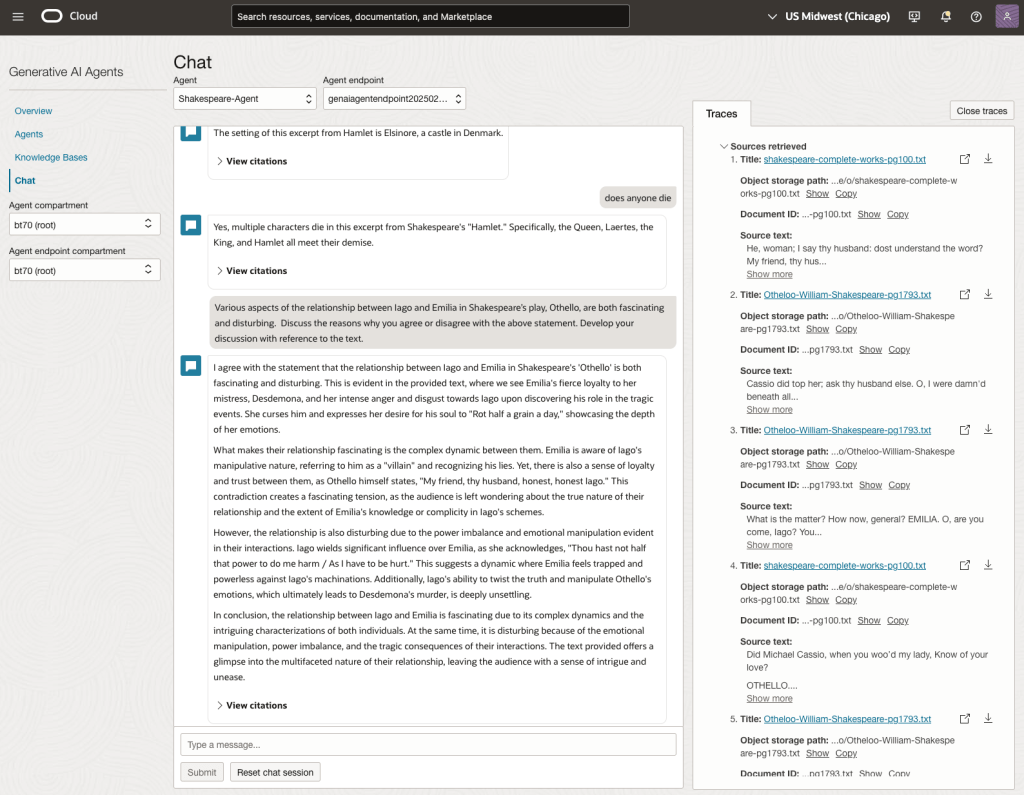

In a previous post, I walked through the steps needed to create a Gen AI Agent on a data set of documents containing the works of Shakespeare. In this post, I’ll look at how this Gen AI Agent can be used to answer questions from the Irish Leaving Certificate Higher Level English examination papers from the past few years.

For this evaluation, I will start with some basic questions before moving on to questions from the Higher Level English examination from 2022, 2023 and 2024. I’ve pasted the output generated below from chatting with the AI Agent.

The main texts we will examine will be Othello, Macbeth and Hamlet. Let’s start with some basic questions about Hamlet.

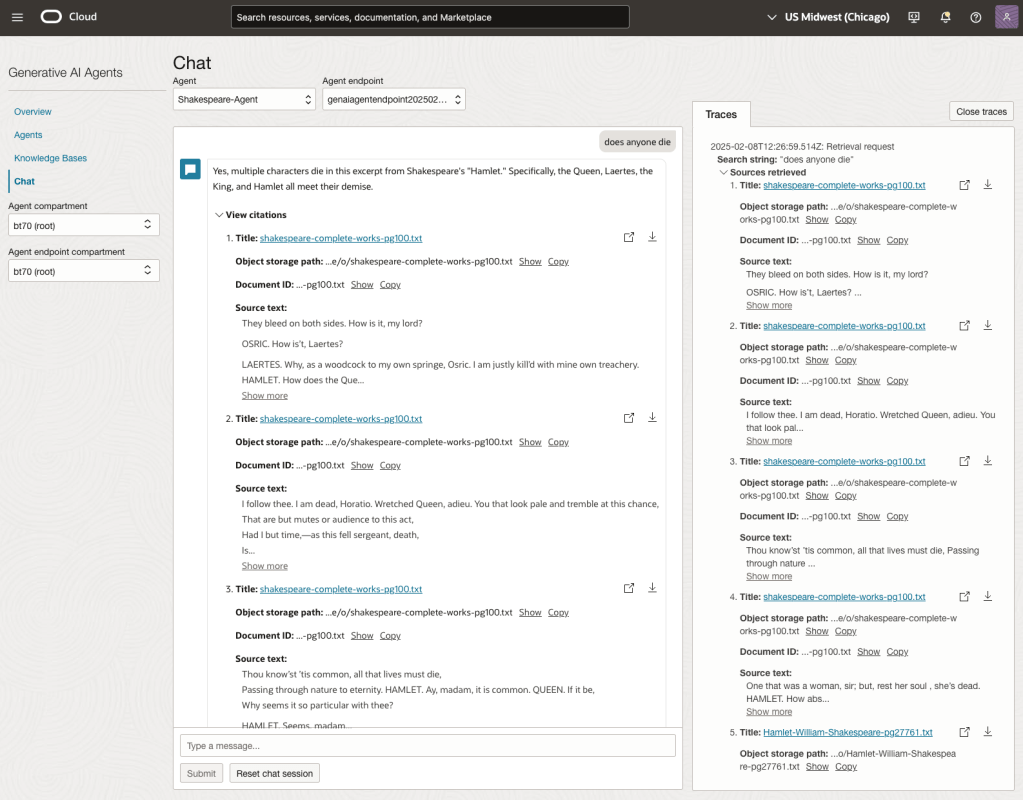

We can look at the sources used by the AI Agent to generate its answer by clicking on ‘View citations’ or ‘Sources retrieved’ in the right-hand side panel.

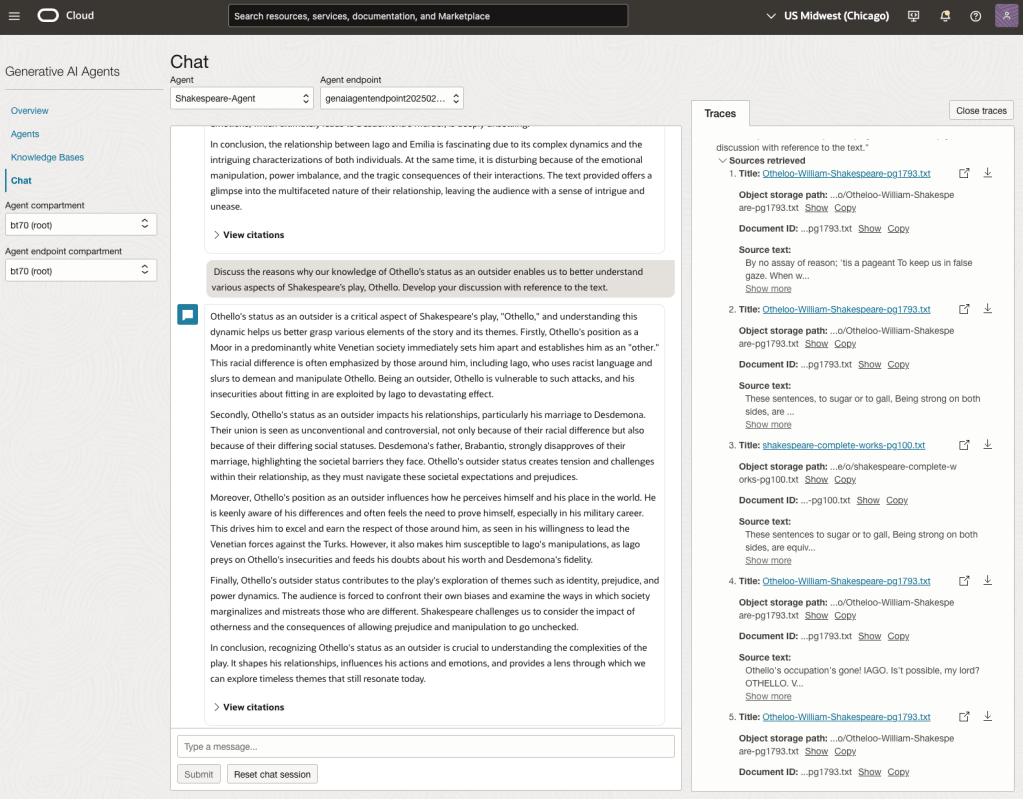

Let’s have a look at the 2022 English examination question on Othello. Students typically have the option of answering one out of two questions.

In 2023, the Shakespeare text was Macbeth.

In 2024, the Shakespeare text was Hamlet.

We can see from the above questions that the AI Agent was able to generate possible answers. As a learning and study resource, it can be difficult to determine the correctness of these answers. Currently, there does seem to be evidence that students typically believe what the AI generates. But the real question is, should they? The AI Agent can give a believable answer for students to memorise, but how good are the answers really? How many marks would they get for these answers? What kind of details are missing from these answers?

To help me answer these questions, I enlisted the help of some former students who took these English examinations, along with two English teachers who teach higher-level English classes. The students all achieved a H1 grade for English. This is the highest grade possible, where a H1 means they achieved between 90-100%. The feedback from the students and teachers was largely positive. One teacher remarked that the answers to some of the questions were surprisingly good. When asked what grade or percentage range these answers would achieve, the students and teachers were again largely in agreement, with a range of 60-75%. The students tended to give slightly higher marks than the teachers. They were then asked what was missing from these answers, that is, what was needed to get more marks. Again, the responses from the students and teachers were similar: details of higher-level reasoning, understanding of interpersonal themes, irony, imagery, symbolism, etc. were missing.

How to Create an Oracle Gen AI Agent

In this post, I’ll walk you through the steps needed to create a Gen AI Agent on Oracle Cloud. We have seen lots of Gen AI Agent solutions offered by many different providers; this post focuses on just what is available on Oracle Cloud. You can create a Gen AI Agent manually. However, testing and fine-tuning based on various chunking strategies can take some time. With the automated options available on Oracle Cloud, you don’t have to worry about chunking, as it handles all the steps automatically for you. This means you need to be careful when using it, so allocate some time for testing to ensure it meets your requirements. The steps below point out some checkboxes that you need to check to ensure you generate a more complete knowledge base and outcome.
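Chunking means splitting each document into smaller, usually overlapping, pieces before they are embedded and indexed for search. OCI does this for you and I’m not describing its actual strategy here; the sketch below only illustrates the idea with a simple fixed-size word window, where `chunk_words`, `chunk_size` and `overlap` are hypothetical names for the kind of parameters you would otherwise tune while testing.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into chunks of chunk_size words, each sharing overlap words with the previous one."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


line = "To be or not to be that is the question"
for chunk in chunk_words(line, chunk_size=4, overlap=1):
    print(chunk)
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighbouring chunk more often, at the cost of indexing some words twice.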

For my example scenario, I’m going to build a Gen AI Agent for some of the works by Shakespeare. I got the text of several plays from the Gutenberg Project website. The process for creating the Gen AI Agent is:

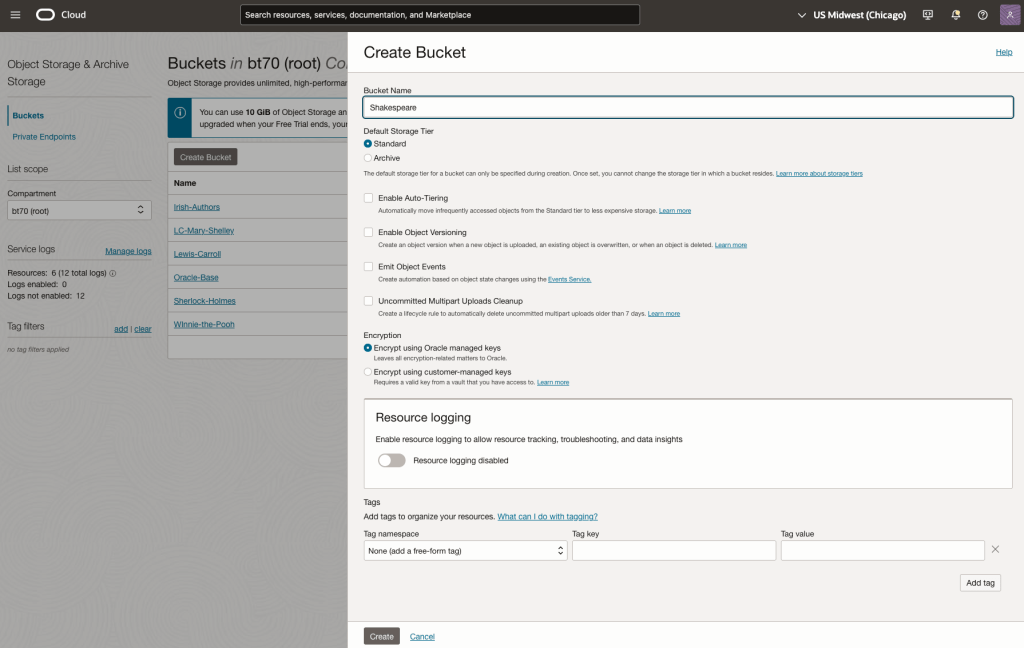

Step-1 Load Files to a Bucket on OCI

Create a bucket called Shakespeare.

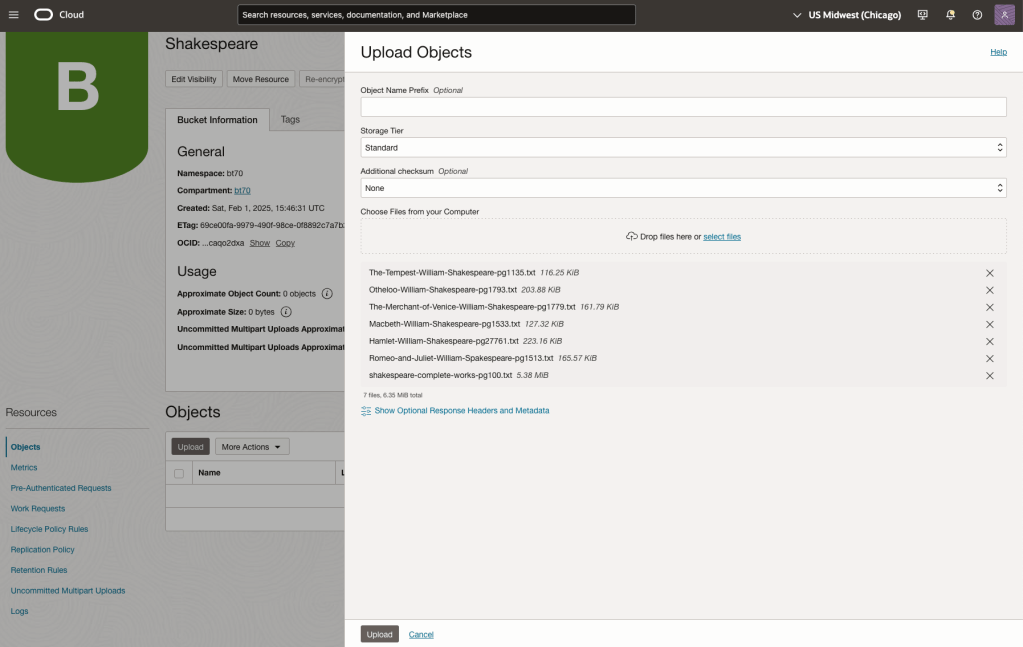

Load the files from your computer into the Bucket. These files were obtained from the Gutenberg Project site.

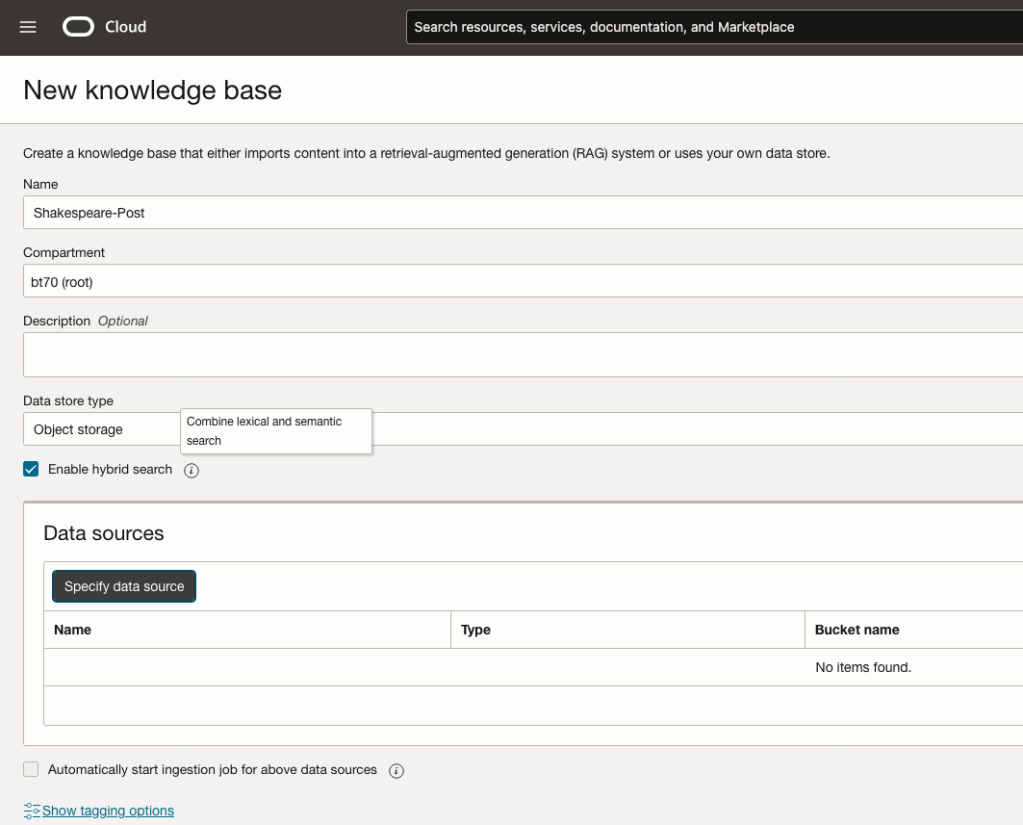
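If you have more than a handful of files, uploading them from a script can be quicker than using the console. A minimal sketch, assuming `client` is an `oci.object_storage.ObjectStorageClient(config)` and `namespace` comes from `client.get_namespace().data`; the function `upload_folder`, the folder name and the `*.txt` filter are hypothetical. It only calls the SDK’s `put_object(namespace_name, bucket_name, object_name, put_object_body)`, so it can be tried with any object that has that method.

```python
from pathlib import Path


def upload_folder(client, namespace: str, bucket: str, folder: str, pattern: str = "*.txt") -> list[str]:
    """Upload every file in folder matching pattern to an Object Storage bucket."""
    uploaded = []
    for path in sorted(Path(folder).glob(pattern)):
        if not path.is_file():
            continue
        with path.open("rb") as body:
            # ObjectStorageClient.put_object(namespace_name, bucket_name, object_name, put_object_body)
            client.put_object(namespace, bucket, path.name, body)
        uploaded.append(path.name)
    return uploaded


# Example usage (assumes an OCI config file and a bucket called Shakespeare):
# import oci
# config = oci.config.from_file("~/.oci/config", "DEFAULT")
# client = oci.object_storage.ObjectStorageClient(config)
# namespace = client.get_namespace().data
# print(upload_folder(client, namespace, "Shakespeare", "gutenberg_texts"))
```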

Step-2 Define a Data Source (documents you want to use) & Create a Knowledge Base

Click on Create Knowledge Base and give it a name ‘Shakespeare’.

Check the ‘Enable Hybrid Search’ checkbox. This will enable both lexical and semantic search. [this is important]

Click on ‘Specify Data Source’

Select the Bucket from the drop-down list (Shakespeare bucket).

Check the ‘Enable multi-modal parsing’ checkbox.

Select the files to use or check the ‘Select all in bucket’ checkbox.

Click Create.

The Knowledge Base will be created. The files in the bucket will be parsed and structured for search by the AI Agent. This step can take a few minutes, as it needs to process all the files; how long depends on the number of files, their format and the size of the contents in each file.

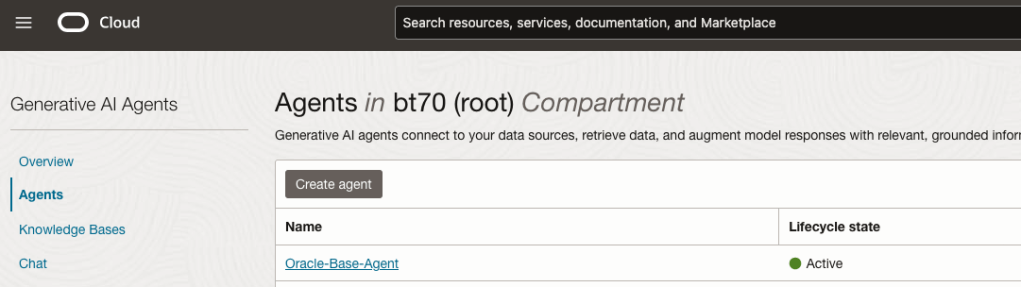

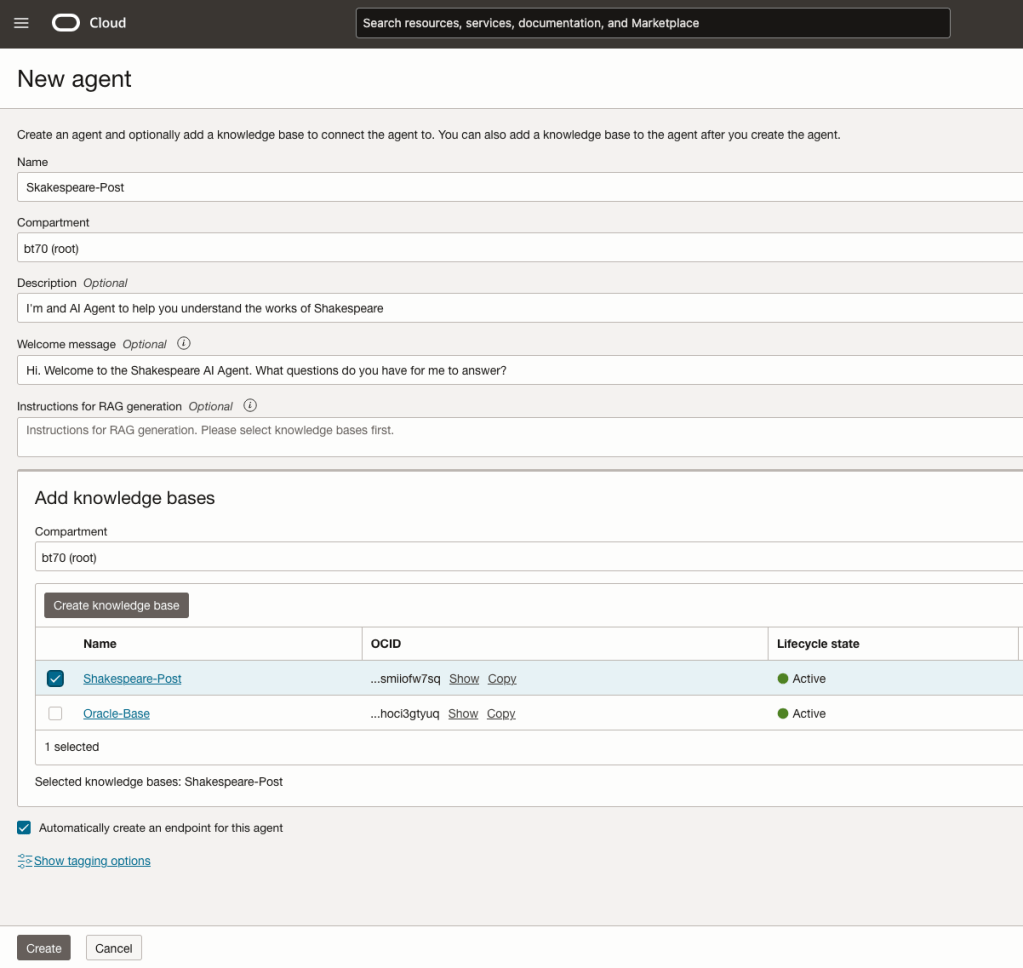
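Hybrid search combines a lexical (keyword) ranking with a semantic (embedding) ranking. How OCI merges the two isn’t documented in this post, so as a generic illustration only, the sketch below uses reciprocal rank fusion, one common way of merging ranked lists, with the conventional constant k = 60. The function name and document names are made up.

```python
def reciprocal_rank_fusion(*rankings: list[str], k: int = 60) -> list[str]:
    """Merge ranked lists of document IDs; items ranked highly in several lists rise to the top."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


lexical = ["othello_act3", "hamlet_act1", "macbeth_act5"]   # e.g. keyword matches for "jealousy"
semantic = ["othello_act3", "othello_act5", "hamlet_act1"]  # e.g. nearest neighbours by embedding
print(reciprocal_rank_fusion(lexical, semantic))
# → ['othello_act3', 'hamlet_act1', 'othello_act5', 'macbeth_act5']
```

A passage that only matches on meaning (othello_act5) can still be retrieved, which is the point of turning hybrid search on.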

Step-3 Create Agent

Go back to the main Gen AI menu and select Agent and then Create Agent.

You can enter the following details:

- Name of the Agent

- Some descriptive information

- A Welcome message for people using the Agent

- Select the Knowledge Base from the list.

The checkbox for creating Endpoints should be checked.

Click Create.

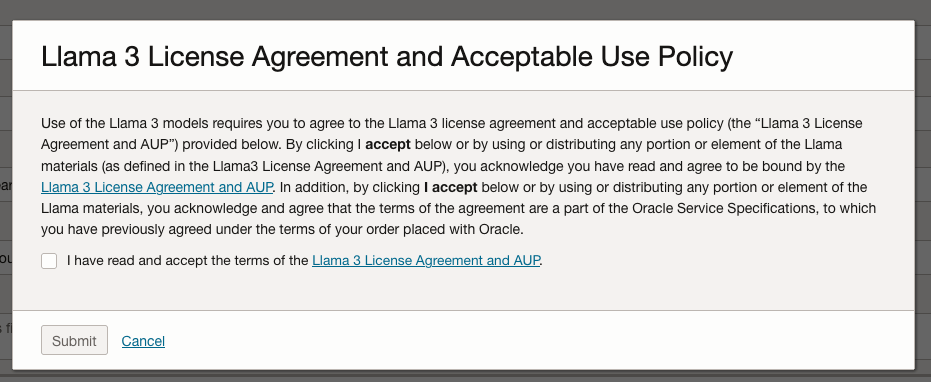

A pop-up window will appear asking you to agree to the Llama 3 License. Check this checkbox and click Submit.

After the agent has been created, check the status of the endpoints. These generally take a little longer to create, and you need these before you can test the Agent using the Chatbot.

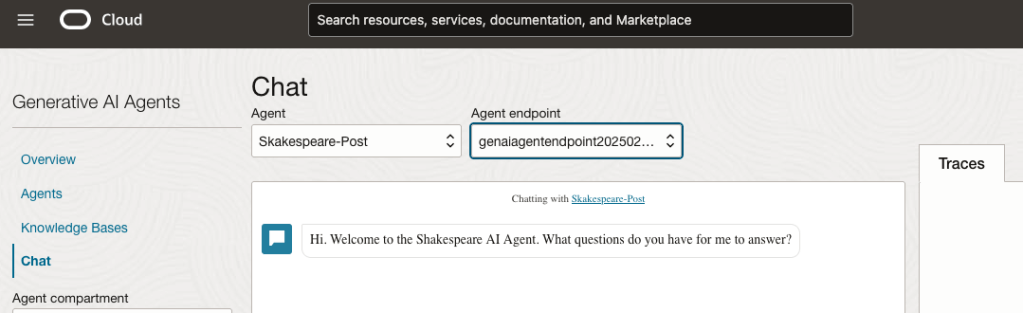

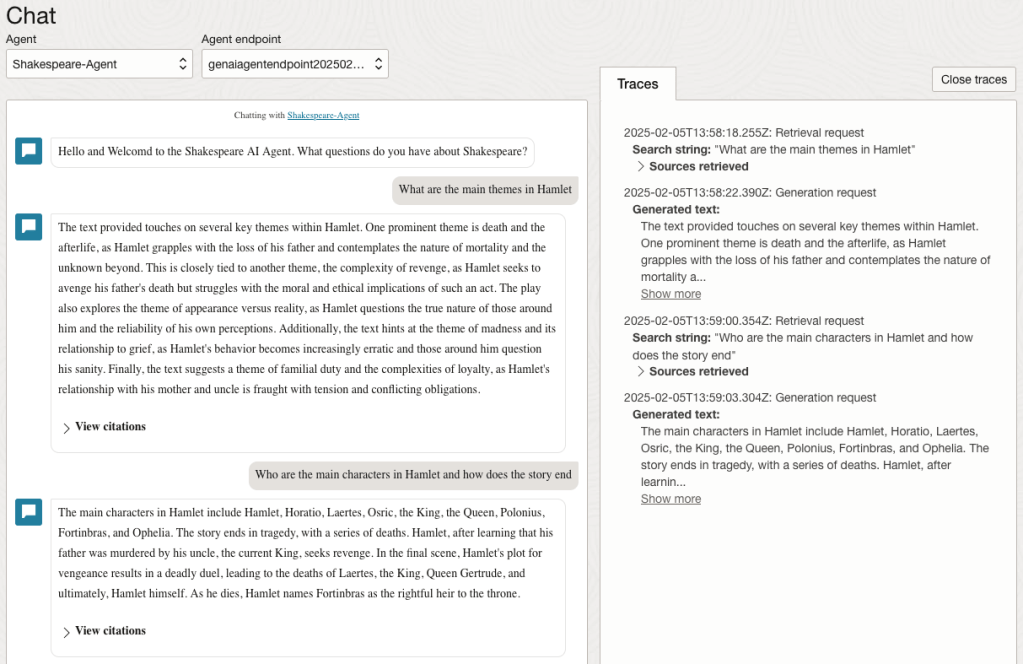
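Rather than refreshing the console, you could poll the endpoint’s lifecycle state from a script. The sketch below is a generic polling loop under stated assumptions: `get_state` is a hypothetical callable you would supply (for example, one that fetches the agent endpoint with the OCI SDK and returns its `lifecycle_state`), and it assumes the endpoint reports `ACTIVE` when ready and `FAILED` on error. The OCI Python SDK also provides `oci.wait_until` for this kind of wait.

```python
import time
from typing import Callable


def wait_for_state(get_state: Callable[[], str], target: str = "ACTIVE",
                   failure_states: tuple[str, ...] = ("FAILED",),
                   interval_seconds: float = 30, timeout_seconds: float = 1800,
                   sleep: Callable[[float], None] = time.sleep) -> str:
    """Poll get_state() until it returns target; raise on a failure state or timeout."""
    waited = 0.0
    while True:
        state = get_state()
        if state == target:
            return state
        if state in failure_states:
            raise RuntimeError(f"endpoint entered {state}")
        if waited >= timeout_seconds:
            raise TimeoutError(f"still {state} after {waited:.0f} seconds")
        sleep(interval_seconds)
        waited += interval_seconds


# Hypothetical example with canned states instead of a real endpoint:
states = iter(["CREATING", "CREATING", "ACTIVE"])
print(wait_for_state(lambda: next(states), interval_seconds=0))
```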

Step-4 Test using Chatbot

After verifying the endpoints have been created, you can open a Chatbot by clicking on ‘Chat’ from the menu on the left-hand side of the screen.

Select the name of the ‘Agent’ from the drop-down list e.g. Shakespeare-Post.

Select an end-point for the Agent.

After these have been selected you will see the ‘Welcome’ message. This was defined when creating the Agent.

Here are a couple of examples of querying the works by Shakespeare.

In addition to giving a response to the questions, the Chatbot also lists the sections of the underlying documents and passages from those documents used to form the response/answer.

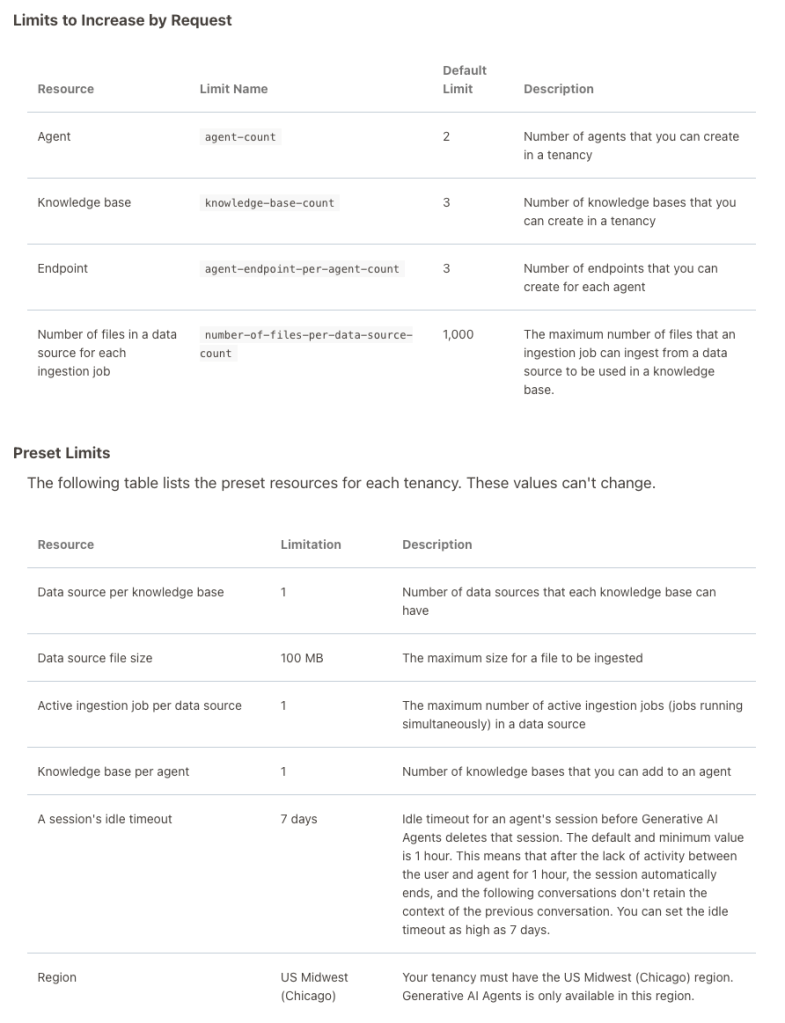

When creating Gen AI Agents, you need to be careful of two things. The first is the Cloud Region. Gen AI Agents are only available in certain Cloud Regions. If they aren’t available in your Region, you’ll need to request access to one of those or set up a new OCI account based in one of those regions. The second thing is the Resource Limits. At the time of writing this post, the following was allowed. Check out the documentation for more details. You might need to request that these limits be increased.

I’ll have another post showing how you can run the Chatbot on your computer or VM as a webpage.

EU AI Act: Key Dates and Impact on AI Developers

The official text of the EU AI Act has been published in the EU Journal. This is another landmark point for the EU AI Act, as these regulations are set to enter into force on 1st August 2024. If you haven’t started your preparations for this, you really need to start now. See the timeline for the different stages of the EU AI Act below.

The EU AI Act is a landmark piece of legislation, and similar legislation is being drafted/enacted in various geographic regions around the world. The EU AI Act is considered the most extensive legal framework for AI developers, deployers, importers, etc., and aims to ensure AI systems introduced or currently being used in the EU internal market (even if they are developed and located outside of the EU) are secure, compliant with existing and new laws on fundamental rights, and aligned with EU principles.

The key dates are:

- 2 February 2025: Prohibitions on Unacceptable Risk AI

- 2 August 2025: Obligations come into effect for providers of general purpose AI models. Appointment of member state competent authorities. Annual Commission review of and possible legislative amendments to the list of prohibited AI.

- 2 February 2026: Commission implements act on post market monitoring

- 2 August 2026: Obligations go into effect for high-risk AI systems specifically listed in Annex III, including systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review, and possible amendment of, the list of high-risk AI systems.

- 2 August 2027: Obligations go into effect for high-risk AI systems not prescribed in Annex III but intended to be used as a safety component of a product. Obligations go into effect for high-risk AI systems in which the AI itself is a product and the product is required to undergo a third-party conformity assessment under existing specific EU laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

- By the end of 2030: Obligations go into effect for certain AI systems that are components of the large-scale information technology systems established by EU law in the areas of freedom, security and justice, such as the Schengen Information System.

Here is the link to the official text in the EU Journal publication.

AI Liability Act

Over the past few weeks we have seen a number of new Artificial Intelligence (AI) Acts or Laws either being proposed or at an advanced stage of enactment. One of these is the EU AI Liability Act, which is intended to be enacted alongside, and work hand-in-hand with, the EU AI Act.

These two EU AI acts have different perspectives. The EU AI Act focuses on the technical perspective and on those who develop AI solutions. On the other side of things is the EU AI Liability Act, whose perspective is that of the end-user/consumer.

The aim of the EU AI Liability Act is to create a framework for trust in AI technology and, when a person has been harmed by the use of AI, to provide a structure for claiming compensation. Just like other EU laws that protect consumers from defective or harmful products, the AI Liability Act looks to do something similar for when a person is harmed in some way by the use or application of AI.

Most of the examples given of how AI might harm a person include the use of robotics, drones, and AI in the recruitment process, where it automatically selects a candidate based on the AI algorithms. Other examples include data loss from or caused by tech products, smart-home systems, cyber security, and products where people are selected or excluded based on algorithms.

Harm can be difficult to define. Although some attempt has been made to define it in the Act, additional work is needed by those refining the Act to clarify this, and to allow the definition to evolve after enactment so that additional scenarios can be included without updating the Act, which can be a lengthy process. A similar task is being performed on the list of high-risk AI in the EU AI Act, where they are proposing to maintain a webpage listing such systems.

Vice-president for values and transparency, Věra Jourová, said that for AI tech to thrive in the EU, it is important for people to trust digital innovation. She added that the new proposals would give customers “tools for remedies in case of damage caused by AI so that they have the same level of protection as with traditional technologies”.

Didier Reynders, the EU’s justice commissioner, says, “The new rules apply when a product that functions thanks to AI technology causes damage and that this damage is the result of an error made by manufacturers, developers or users of this technology.”

The EU defines “an error” in this case to include not just mistakes in how the A.I. is crafted, trained, deployed, or functions, but also if the “error” is the company failing to comply with a lot of the process and governance requirements stipulated in the bloc’s new A.I. Act. The new liability rules say that if an organization has not complied with their “duty of care” under the new A.I. Act—such as failing to conduct appropriate risk assessments, testing, and monitoring—and a liability claim later arises, there will be a presumption that the A.I. was at fault. This creates an additional way of forcing compliance with the EU AI Act.

The EU Liability Act says that a court can now order a company using a high-risk A.I. system to turn over evidence of how the software works. A balancing test will be applied to ensure that trade secrets and other confidential information is not needlessly disclosed. The EU warns that if a company or organization fails to comply with a court-ordered disclosure, the courts will be free to presume the entity using the A.I. software is liable.

The EU Liability Act will go through some changes and refinement, with the aim of it being enacted at the same time as the EU AI Act. How long this process will take is a little up in the air, considering the EU AI Act should have been adopted by now and we could be in the 2-year process for enactment. But the EU AI Act is still working its way through the different groups in the EU. There have been some indications these might conclude in 2023, but let’s wait and see. If the EU Liability Act is only starting the process now, there could be some additional delays if the EU wants both Acts to be effective at the same time.

NATO AI Strategy

Over the past 18 months there has been a widespread push by many countries and geographic regions to examine how the creation and use of Artificial Intelligence (AI) can be regulated. I’ve written many blog posts about these. But it isn’t just governments or political alliances that are doing this; other types of organisations are also doing so.

NATO, the political and (mainly) military alliance, has also joined the club. They have released a summary version of their AI Strategy. It might seem a little strange for this type of organisation to do something like this. But if you look a little closer, NATO also says they work together in other areas such as Standardisation Agreements, Crisis Management, Disarmament, Energy Security, Climate/Environment Change, Gender and Human Security, and Science and Technology.

In October/November 2021, NATO formally adopted their Artificial Intelligence (AI) Strategy (for defence). Their AI Strategy outlines how AI can be applied to defence and security in a protected and ethical way (interesting wording). Their aim is to position NATO as a leader of AI adoption, and it provides a common policy basis to support the adoption of AI systems in order to achieve the Alliance’s three core tasks of Collective Defence, Crisis Management and Cooperative Security. An important element of the AI Strategy is to ensure interoperability and standardisation. This is a little bit more interesting and perhaps has a lesser focus on ethical use.

NATO’s AI Strategy contains the following principles of Responsible use of AI (in defence):

- Lawfulness: AI applications will be developed and used in accordance with national and international law, including international humanitarian law and human rights law, as applicable.

- Responsibility and Accountability: AI applications will be developed and used with appropriate levels of judgment and care; clear human responsibility shall apply in order to ensure accountability.

- Explainability and Traceability: AI applications will be appropriately understandable and transparent, including through the use of review methodologies, sources, and procedures. This includes verification, assessment and validation mechanisms at either a NATO and/or national level.

- Reliability: AI applications will have explicit, well-defined use cases. The safety, security, and robustness of such capabilities will be subject to testing and assurance within those use cases across their entire life cycle, including through established NATO and/or national certification procedures.

- Governability: AI applications will be developed and used according to their intended functions and will allow for: appropriate human-machine interaction; the ability to detect and avoid unintended consequences; and the ability to take steps, such as disengagement or deactivation of systems, when such systems demonstrate unintended behaviour.

- Bias Mitigation: Proactive steps will be taken to minimise any unintended bias in the development and use of AI applications and in data sets.

By acting collectively members of NATO will ensure a continued focus on interoperability and the development of common standards.

Some points of interest:

- Bias Mitigation efforts will be adopted with the aim of minimising discrimination against traits such as gender, ethnicity or personal attributes. However, the strategy does not say how bias will be tackled – which requires structural changes which go well beyond the use of appropriate training data.

- The strategy also recognises that in due course AI technologies are likely to become widely available, and may be put to malicious uses by both state and non-state actors. NATO’s strategy states that the alliance will aim to identify and safeguard against the threats from malicious use of AI, although again no detail is given on how this will be done.

- Running through the strategy is the idea of interoperability – the desire for different systems to be able to work with each other across NATO’s different forces and nations without any restrictions.

- What about Autonomous weapon systems? Some members do not support a ban on this technology.

- Has similar wording to the principles adopted by the US Department of Defense for the ethical use of AI.

- Wants to make defence and security more attractive to private-sector and academic AI developers/researchers.

- NATO principles have no coherent means of implementation or enforcement.

Ireland AI Strategy (2021)

Over the past year or more there has been a significant increase in publications, guidelines, regulations/laws and various other initiatives relating to Artificial Intelligence (AI), which has been attracting a lot of attention. Most of this attention has been focused on how to put controls on how AI is used across a wide range of use cases. We have heard and read lots and lots of stories of how AI has been used in questionable and unethical scenarios. These have, to a certain extent, given the use of AI a bit of a bad label. While some of this is justified and some is not, it does allow us to question the ethical use of these technologies. But not all AI, and the underpinning technologies, are bad. Most have been developed for good purposes, and as these technologies mature they sometimes get used in scenarios that are less good.

We constantly need to develop new technologies and deploy these in real use scenarios. Ireland has a long history as a leader in the IT industry, with many of the top 100+ IT companies in the world having research and development operations in Ireland, as well as many service suppliers. The Irish government recently released the National AI Strategy (2021).

“The National AI Strategy will serve as a roadmap to an ethical, trustworthy and human-centric design, development, deployment and governance of AI to ensure Ireland can unleash the potential that AI can provide”. “Underpinning our Strategy are three core principles to best embrace the opportunities of AI – adopting a human-centric approach to the application of AI; staying open and adaptable to innovations; and ensuring good governance to build trust and confidence for innovation to flourish, because ultimately if AI is to be truly inclusive and have a positive impact on all of us, we need to be clear on its role in our society and ensure that trust is the ultimate marker of success.” Robert Troy, Minister of State for Trade Promotion, Digital and Company Regulation.

Eight strands are identified, and each sets out how Ireland can be an international leader in using AI to benefit the economy and society.

- Building public trust in AI
  - Strand 1: AI and society
  - Strand 2: A governance ecosystem that promotes trustworthy AI
- Leveraging AI for economic and societal benefit
  - Strand 3: Driving adoption of AI in Irish enterprise
  - Strand 4: AI serving the public
- Enablers for AI
  - Strand 5: A strong AI innovation ecosystem
  - Strand 6: AI education, skills and talent
  - Strand 7: A supportive and secure infrastructure for AI
  - Strand 8: Implementing the Strategy

Each strand sets out clear objectives and the strategic actions needed to achieve them at national, EU and global level.

Check out the full document here.

Australia New AI Regulations Framework

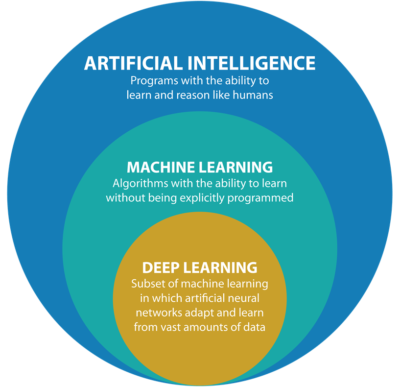

Over the past few weeks and months we have seen more and more countries addressing the potential issues and challenges with Artificial Intelligence (and its components of Statistical Analysis, Machine Learning, Deep Learning, etc.). Each country has either adopted into law, or proposed, controls on how and where these new technologies can be used. Many of these legal frameworks have implications beyond their geographic boundaries. This makes working with such technology an ever-increasing and very difficult challenge.

In this post, I’ll have a look at the new AI Regulations Framework recently published in Australia.

[I’ve written posts on what other countries have done. Make sure to check those out.]

The Australian AI Regulations Framework, available from tech.humanrights.gov.au, is a 240-page report making 38 recommendations. This framework does not present any new laws, but provides a set of recommendations for the government to address and enact new legislation.

It should be noted that a large part of this framework is focused on Accessible Technology, and it is great to see such recommendations. Apart from the section on Accessibility, the report contains two main sections: one addressing the use of Artificial Intelligence (AI), and one on how to support the implementation and regulation of any new laws through the appointment of an AI Safety Commissioner.

Focusing on the section on the use of Artificial Intelligence, the following is a summary of the 20 recommendations:

Chapter 5 – Legal Accountability for Government use of AI

Introduce legislation to require that a human rights impact assessment (HRIA) be undertaken before any department or agency uses an AI-informed decision-making system to make administrative decisions. When an AI-informed decision is made, measures are needed to improve transparency, including notification of the use of AI, strengthening the right to reasons or an explanation for AI-informed administrative decisions, and independent review of all AI-informed administrative decisions.

Chapter 6 – Legal Accountability for Private use of AI

In a similar manner to governmental use of AI, human rights and accountability are also important when corporations and other non-government entities use AI to make decisions. Corporations and other non-government bodies are encouraged to undertake HRIAs before using AI-informed decision-making systems, and to notify individuals about AI-informed decisions affecting them.

Chapter 7 – Encouraging Better AI Informed Decision Making

Complement self-regulation with legal regulation to create better AI-informed decision-making systems with standards and certification for the use of AI in decision making, creating ‘regulatory sandboxes’ that allow for experimentation and innovation, and rules for government procurement of decision-making tools and systems.

Chapter 8 – AI, Equality and Non-Discrimination (Bias)

Bias occurs when AI decision making produces outputs that result in unfairness or discrimination. Examples of AI bias have arisen in the criminal justice system, advertising, recruitment, healthcare, policing and elsewhere. The recommendation is to provide guidance for government and non-government bodies on complying with anti-discrimination law in the context of AI-informed decision making.

Chapter 9 – Biometric Surveillance, Facial Recognition and Privacy

There is a lot of concern around the use of biometric technology, especially Facial Recognition. The recommendations include law reform to provide better human rights and privacy protection regarding the development and use of these technologies through regulation of facial and biometric technology (Recommendations 19, 21), and a moratorium on the use of biometric technologies in high-risk decision making until such protections are in place (Recommendation 20).

In addition to the recommendations on the use of AI technologies, the framework also recommends the establishment of an AI Safety Commissioner to support ongoing efforts in building capacity and implementing regulations, to monitor and investigate the use of AI, and to support the government and private sector in complying with laws and ethical requirements in the use of AI.

Regulating AI around the World

Continuing my series of blog posts on various ML and AI regulations and laws, this post will look at what some other countries are doing to regulate ML and AI, with a particular focus on facial recognition and more advanced applications of ML. Some of the examples listed below are work-in-progress, while others, such as the EU AI Regulations, are at a more advanced stage, with regulations and laws being introduced.

[Note: What is listed below is in addition to various data protection regulations each country or region has implemented in recent years, for example EU GDPR and similar]

Things are moving fast in this area, with more countries introducing regulations all the time. The following list is by no means exhaustive, but it gives you a feel for what is happening around the world and what will be coming to your country very soon. The EU and (parts of) the USA are leading in these areas, and it is important to know that these regulations and laws will impact most AI/ML applications and work around the world. If you are processing data about an individual in these geographic regions, then these laws affect you and what you can do. It doesn’t matter where you live.

New Zealand

New Zealand, along with the World Economic Forum (WEF), is developing a governance framework for AI regulation. It focuses on three areas:

- Inclusive national conversation on the use of AI

- Enhancing the understanding of AI and its applications to inform policy making

- Mitigation of risks associated with AI applications

Singapore

The Personal Data Protection Commission has released a framework called ‘Model AI Governance Framework’, which provides a model for addressing ethical and governance issues when deploying AI applications. It supports explainable AI, allowing for clear and transparent communication on how AI applications work. The idea is to build understanding of, and trust in, these technological solutions. It consists of four principles:

- Internal Governance Structures and Measures

- Determining the Level of Human Involvement in AI-augmented Decision Making

- Operations Management, minimizing bias, explainability and robustness

- Stakeholder Interaction and Communication.

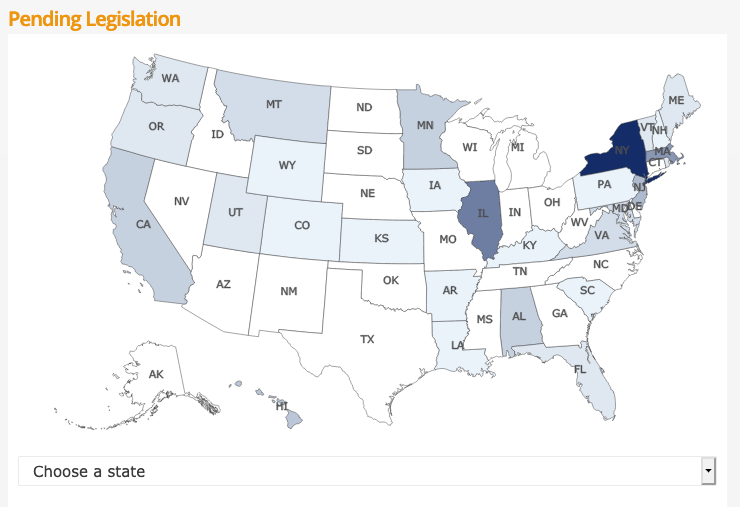

USA

Progress within the USA has been divided between local and state-level initiatives. In California, for example, different regions have implemented their own laws, while at state level there have been attempts at laws. California is not alone, with almost half of the states introducing laws restricting the use of facial recognition and protecting personal data. In addition to what is happening at state level, some orders and legislation have been introduced at federal government level.

- Executive Order on Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government

- This provides guidelines to help Federal Agencies with AI adoption and to foster public trust in the technology. It directs agencies to ensure the design, development, acquisition and use of AI is done in a manner that protects privacy, civil rights, and civil liberties. It includes the following actions:

- Principles for the Use of AI in Government

- Common Policy for Implementing Principles

- Catalogue of Agency Use Cases of AI

- Enhanced AI Implementation Expertise

- Federal Government: Facial Recognition and Biometric Technology Moratorium Act of 2020. A bill to limit the use of biometric surveillance systems, such as facial recognition systems, by federal and state government entities.

USA – Washington State

Many of the States in the USA have enacted laws on Facial Recognition and the use of AI. There are too many to list here, but go to this website to explore what each State has done. Taking Washington State as an example, it has enacted a law prohibiting the use of facial recognition technology for ongoing surveillance and limiting its use to acquiring evidence of serious criminal offences following authorization of a search warrant.

Canada

The Office of the Privacy Commissioner of Canada (OPC) has proposed a Regulatory Framework for AI, calling for legislation that supports the benefits of AI while upholding the privacy of individuals. Recommendations include:

- allow personal information to be used for new purposes towards responsible AI innovation and for societal benefits

- authorize these uses within a rights-based framework that would entrench privacy as a human right and a necessary element for the exercise of other fundamental rights

- create a right to meaningful explanation for automated decisions and a right to contest those decisions to ensure they are made fairly and accurately

- strengthen accountability by requiring a demonstration of privacy compliance upon request by the regulator

- empower the OPC to issue binding orders and proportional financial penalties to incentivize compliance with the law

- require organizations to design AI systems from their conception in a way that protects privacy and human rights

The above list is just a sample of what is happening around the world, and we are sure to see lots more of this over the next few years. There are lots of pros and cons to these regulations and laws. One of the biggest challenges faced by people working with AI and ML technologies is knowing what is and isn’t possible or allowed, as most solutions and applications will be working across many geographic regions.

Truth, Fairness & Equality in AI – US Federal Trade Commission

Over the past few months we have seen a growing level of communication, guidelines, regulations and legislation for the use of Machine Learning (ML) and Artificial Intelligence (AI). Here, Artificial Intelligence is a superset covering all machine- or computer-generated or applied intelligence, that is, any logic that makes a decision or calculation.

Although the EU has been leading the charge in this area, other countries have been following suit with similar guidelines and legislation.

There have been several examples of this in the USA over the past couple of years. Some of this has been prompted by the debates and issues around the use of facial recognition. Some States in the USA have introduced laws to control what can and cannot be done, but, at the time of writing, there is no federal law governing the whole of the USA.

In April 2021, the US Federal Trade Commission published an article titled ‘Aiming for truth, fairness, and equity in your company’s use of AI’.

They provide guidelines on how to build AI applications while avoiding potential issues such as bias and unfair outcomes, and at the same time incorporating transparency. In addition to the recommendations in the report, they point to three laws (which have been around for some time) which are important for developers of AI applications. These include:

- Section 5 of the FTC Act: The FTC Act prohibits unfair or deceptive practices. That would include the sale or use of – for example – racially biased algorithms.

- Fair Credit Reporting Act: The FCRA comes into play in certain circumstances where an algorithm is used to deny people employment, housing, credit, insurance, or other benefits.

- Equal Credit Opportunity Act: The ECOA makes it illegal for a company to use a biased algorithm that results in credit discrimination on the basis of race, color, religion, national origin, sex, marital status, age, or because a person receives public assistance.

The guidelines aim to help companies use AI truthfully, fairly and equitably, and they cover both the technical and non-technical sides of AI applications. The guidelines include:

- Start with the right foundation: Get your data set right. What is missing? Is it balanced? Look at how to improve the data set and address any shortcomings, as these may limit how you can use the model.

- Watch out for discriminatory outcomes: Are the outcomes biased? If the model works for your data set and scenario, will it work in others, e.g. applying the model in a different hospital? Regular and detailed testing is needed to ensure no discrimination gets included.

- Embrace transparency and independence: Think about how to incorporate transparency from the beginning of the AI project. Use international best practice and standards, have independent audits and publish the results, and open the data and source code to outside inspection.

- Don’t exaggerate what your algorithm can do or whether it can deliver fair or unbiased results: That kind of says it all really. Under the FTC Act, your statements to business customers and consumers must be truthful, non-deceptive and backed up by evidence. In the rush to introduce new technologies and products there can be a tendency to exaggerate what they can do. Don’t do this.

- Tell the truth about how you use data: Be careful about what data you use and how you got it. For example, the FTC alleged that Facebook misled consumers by telling them they could opt in to its facial recognition algorithm, when in fact it was using their photos by default. This is a misrepresentation of what the customer/consumer was told.

- Do more good than harm: A practice is unfair if it causes more harm than good, for example making decisions based on race, color, religion, sex, etc. If your model causes, or is likely to cause, substantial injury to consumers that is not reasonably avoidable by consumers and not outweighed by countervailing benefits to consumers or to competition, the model is unfair.

- Hold yourself accountable: If you use AI, in any form, you will be held accountable for the algorithm’s performance.

Some of these guidelines build upon those from April 2020 on Using Artificial Intelligence and Algorithms, which focus on fair use of data, transparency of data usage, algorithms and models, the ability to clearly explain how a decision was made, and ensuring all decisions made are fair and unbiased.

Working with AI products and applications can be challenging in many different ways. Most of the focus, information and examples are about building them. But that can be the easy part. With the growing number of legal requirements from different regions around the world, the task of managing AI products and applications is becoming more and more complicated.

The EU AI Regulations support the role of a person to oversee these different aspects, and this is a role we will see job adverts for very soon, no matter what country or region you live in. The people in these roles will help steer and support companies through this difficult and evolving area, to ensure compliance with local as well as global legal requirements.
