OCI Text to Speech example

In this post, I’ll walk through the steps to get a very simple example of Text-to-Speech working. This example builds upon my previous posts on OCI Language, OCI Speech and others, so make sure you check out those posts.

The first thing you need to be aware of, and to check before you proceed, is whether Text-to-Speech is available in your region. At the time of writing, this feature was only available in Phoenix, which is one of the cloud regions I have access to. There are plans to roll it out to other regions, but I’m not aware of the timeline for this. Although you might see Speech listed on your AI menu in OCI, that does not guarantee the Text-to-Speech feature is available. What it does mean is that the speech transcription (speech-to-text) feature is available.

So if Text-to-Speech is available in your region, the following will get you up and running.

The first thing you need to do is read in the Config file from the OS.

#initial setup, read Config file, create OCI Client

import oci

from oci.config import from_file

##########

from oci_ai_speech_realtime import RealtimeSpeechClient, RealtimeSpeechClientListener

from oci.ai_speech.models import RealtimeParameters

##########

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', profile_name=CONFIG_PROFILE)

print(config)

### Update region to point to Phoenix (before the client is created, so the client uses it)

config.update({'region':'us-phoenix-1'})

### Placeholder: replace with the OCID of your own compartment

COMPARTMENT_ID = "<your-compartment-ocid>"

###

ai_speech_client = oci.ai_speech.AIServiceSpeechClient(config)

A simple little test to see if the Text-to-Speech feature is enabled for your region is to display the list of available voices.

list_voices_response = ai_speech_client.list_voices(

compartment_id=COMPARTMENT_ID,

display_name="Text-to-Speech")

# opc_request_id="1GD0CV5QIIS1RFPFIOLF<unique_ID>")

# Get the data from response

print(list_voices_response.data)

This produces a long JSON object with many characteristics of the available voices. A simpler listing gives the name, gender and language of each voice.

for voice in list_voices_response.data.items:

    print(voice.display_name + ' [' + voice.gender + ']\t' + voice.language_description)

------

Brian [MALE] English (United States)

Annabelle [FEMALE] English (United States)

Bob [MALE] English (United States)

Stacy [FEMALE] English (United States)

Phil [MALE] English (United States)

Cindy [FEMALE] English (United States)

Brad [MALE] English (United States)

Richard [MALE] English (United States)

Now let’s set up a Text-to-Speech example using the simple text, “Hello. My name is Brendan and this is an example of using Oracle OCI Speech service”. First, let’s define a function to save the audio to a file.

def save_audi_response(data, filename='tts_example.mp3'):

    # data is the streamed response body; the default filename is only an example

    with open(filename, 'wb') as f:

        for b in data.iter_content():

            f.write(b)

We can now establish a connection, define the text, call the OCI Speech function to create the audio, and then save the audio file.

import IPython.display as ipd

# Initialize service client with default config file

ai_speech_client = oci.ai_speech.AIServiceSpeechClient(config)

TEXT_DEMO = "Hello. My name is Brendan and this is an example of using Oracle OCI Speech service"

#speech_response = ai_speech_client.synthesize_speech(compartment_id=COMPARTMENT_ID)

speech_response = ai_speech_client.synthesize_speech(

synthesize_speech_details=oci.ai_speech.models.SynthesizeSpeechDetails(

text=TEXT_DEMO,

is_stream_enabled=True,

compartment_id=COMPARTMENT_ID,

configuration=oci.ai_speech.models.TtsOracleConfiguration(

model_family="ORACLE",

model_details=oci.ai_speech.models.TtsOracleTts2NaturalModelDetails(

model_name="TTS_2_NATURAL",

voice_id="Annabelle"),

speech_settings=oci.ai_speech.models.TtsOracleSpeechSettings(

text_type="SSML",

sample_rate_in_hz=18288,

output_format="MP3",

speech_mark_types=["WORD"])),

audio_config=oci.ai_speech.models.TtsBaseAudioConfig(config_type="BASE_AUDIO_CONFIG") #, save_path='I'm not sure what this should be')

) )

# Get the data from response

#print(speech_response.data)

save_audi_response(speech_response.data)

Unlock Text Analytics with Oracle OCI Python – Part 2

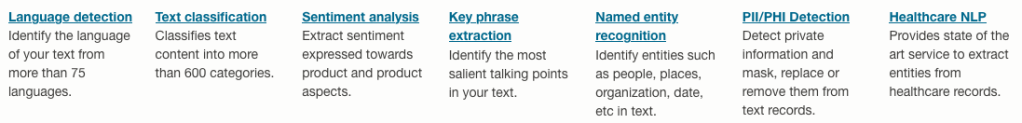

This is my second post on using the Oracle OCI Language service to perform Text Analytics. The features available include Language Detection, Text Classification, Sentiment Analysis, Key Phrase Extraction, Named Entity Recognition, Private Data detection and masking, and Healthcare NLP.

In my previous post (Part 1), I covered examples on Language Detection, Text Classification and Sentiment Analysis.

In this post (Part 2), I’ll cover:

- Key Phrase

- Named Entity Recognition

- Detect private information and masking

Make sure you check out Part 1 for details on setting up the client and establishing a connection. These details are omitted in the examples below.

Key Phrase Extraction

Key Phrase Extraction aims to identify the key words and/or phrases in the text. The keywords/phrases are selected based on the main topics in the text, and each comes with a confidence score. The text is parsed to extract the words/phrases that are important in the text. This can help with identifying the key aspects of a document without having to read it. Care is needed, as these words/phrases do not represent the meaning implied in the text.

Using some of the same texts used in Part-1, let’s see what gets generated for the text about a Hotel experience.

t_doc = oci.ai_language.models.TextDocument(

key="Demo",

text="This hotel is a bad place, I would strongly advise against going there. There was one helpful member of staff",

language_code="en")

key_phrase = ai_language_client.batch_detect_language_key_phrases((oci.ai_language.models.BatchDetectLanguageKeyPhrasesDetails(documents=[t_doc])))

print(key_phrase.data)

print('==========')

for doc in key_phrase.data.documents:

    for phrase in doc.key_phrases:

        print("phrase: ", phrase.text + ' [' + str(phrase.score) + ']')

{

"documents": [

{

"key": "Demo",

"key_phrases": [

{

"score": 0.9998106383818767,

"text": "bad place"

},

{

"score": 0.9998106383818767,

"text": "one helpful member"

},

{

"score": 0.9944029848214838,

"text": "staff"

},

{

"score": 0.9849306609397931,

"text": "hotel"

}

],

"language_code": "en"

}

],

"errors": []

}

==========

phrase: bad place [0.9998106383818767]

phrase: one helpful member [0.9998106383818767]

phrase: staff [0.9944029848214838]

phrase: hotel [0.9849306609397931]

The output from the Key Phrase Extraction is presented in two formats above. The first is the JSON object returned from the function, containing the phrases and their confidence scores. The second (below the ==========) is a formatted version of the same JSON object, parsed to extract and present the data in a more compact manner.
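Since each key phrase comes back with a confidence score, one simple way to use the result is to keep only the phrases above a chosen threshold. The following is a minimal sketch that works on a plain dictionary shaped like the JSON shown above. The function name top_key_phrases and the 0.99 threshold are my own assumptions for illustration; they are not part of the OCI SDK.

```python
# Response shaped like the JSON above (trimmed to the fields used here)
sample_response = {
    "documents": [
        {"key": "Demo",
         "key_phrases": [
             {"score": 0.9998106383818767, "text": "bad place"},
             {"score": 0.9998106383818767, "text": "one helpful member"},
             {"score": 0.9944029848214838, "text": "staff"},
             {"score": 0.9849306609397931, "text": "hotel"}]}
    ]
}

def top_key_phrases(response, min_score=0.99):
    """Return (document key, phrase, score) tuples with score >= min_score."""
    results = []
    for doc in response["documents"]:
        for phrase in doc["key_phrases"]:
            if phrase["score"] >= min_score:
                results.append((doc["key"], phrase["text"], phrase["score"]))
    return results

# Hypothetical threshold of 0.99: "hotel" falls just below it
for key, text, score in top_key_phrases(sample_response):
    print(key, text, round(score, 4))
```

With the object returned by the SDK you would use attribute access (as in the loop above) rather than dictionary keys.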

The next piece of text to be examined is taken from an article on the F1 website about a change of drivers.

text_f1 = "Red Bull decided to take swift action after Liam Lawsons difficult start to the 2025 campaign, demoting him to Racing Bulls and promoting Yuki Tsunoda to the senior team alongside reigning world champion Max Verstappen. F1 Correspondent Lawrence Barretto explains why… Sergio Perez had endured a painful campaign that saw him finish a distant eighth in the Drivers Championship for Red Bull last season – while team mate Verstappen won a fourth successive title – and after sticking by him all season, the team opted to end his deal early after Abu Dhabi finale."

t_doc = oci.ai_language.models.TextDocument(

key="Demo",

text=text_f1,

language_code="en")

key_phrase = ai_language_client.batch_detect_language_key_phrases(oci.ai_language.models.BatchDetectLanguageKeyPhrasesDetails(documents=[t_doc]))

print(key_phrase.data)

print('==========')

for doc in key_phrase.data.documents:

    for phrase in doc.key_phrases:

        print("phrase: ", phrase.text + ' [' + str(phrase.score) + ']')

I won’t include all the output; the following shows the key phrases in the compact format.

phrase: red bull [0.9991468440416812]

phrase: swift action [0.9991468440416812]

phrase: liam lawsons difficult start [0.9991468440416812]

phrase: 2025 campaign [0.9991468440416812]

phrase: racing bulls [0.9991468440416812]

phrase: promoting yuki tsunoda [0.9991468440416812]

phrase: senior team [0.9991468440416812]

phrase: sergio perez [0.9991468440416812]

phrase: painful campaign [0.9991468440416812]

phrase: drivers championship [0.9991468440416812]

phrase: red bull last season [0.9991468440416812]

phrase: team mate verstappen [0.9991468440416812]

phrase: fourth successive title [0.9991468440416812]

phrase: all season [0.9991468440416812]

phrase: abu dhabi finale [0.9991468440416812]

phrase: team [0.9420016064526977]

While some aspects of this are interesting, care is needed not to rely on it too heavily. It really depends on the use case.

Named Entity Recognition

Named Entity Recognition is a natural language process for finding particular types of entities listed as words or phrases in the text. The named entities are a defined list of items. For OCI Language there is a list available here. Some named entities have sub-entities. The JSON object returned from the function has a format like the following.

{

"documents": [

{

"entities": [

{

"length": 5,

"offset": 5,

"score": 0.969588577747345,

"sub_type": "FACILITY",

"text": "hotel",

"type": "LOCATION"

},

{

"length": 27,

"offset": 82,

"score": 0.897526216506958,

"sub_type": null,

"text": "one helpful member of staff",

"type": "QUANTITY"

}

],

"key": "Demo",

"language_code": "en"

}

],

"errors": []

}

For each named entity discovered, the returned object will contain the text identified, the Entity Type, the Entity Subtype, the confidence score, the offset and the length.
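The offset and length can be used to locate each entity in the original text. Here is a small sketch using the two entities from the JSON above, held in plain dictionaries. The helper name entity_span is hypothetical, and I’m assuming the offsets are character positions, which holds for this plain English example.

```python
text = ("This hotel is a bad place, I would strongly advise against going there. "
        "There was one helpful member of staff")

# Entities copied from the JSON above (only the fields needed here)
entities = [
    {"offset": 5, "length": 5, "type": "LOCATION", "text": "hotel"},
    {"offset": 82, "length": 27, "type": "QUANTITY", "text": "one helpful member of staff"},
]

def entity_span(source_text, entity):
    """Recover the matched text using the entity's offset and length."""
    start = entity["offset"]
    return source_text[start:start + entity["length"]]

for entity in entities:
    print(entity["type"], "->", entity_span(text, entity))
```

This is handy when you want to highlight or replace the entity in the source text rather than just list it.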

Using the text samples used previously, let’s see what gets produced. The first example is for the hotel review.

t_doc = oci.ai_language.models.TextDocument(

key="Demo",

text="This hotel is a bad place, I would strongly advise against going there. There was one helpful member of staff",

language_code="en")

named_entities = ai_language_client.batch_detect_language_entities(

batch_detect_language_entities_details=oci.ai_language.models.BatchDetectLanguageEntitiesDetails(documents=[t_doc]))

for doc in named_entities.data.documents:

    for entity in doc.entities:

        print("Text: ", entity.text, ' [' + entity.type + ']'

              + '[' + str(entity.sub_type) + ']' + '{offset:'

              + str(entity.offset) + '}')

Text: hotel [LOCATION][FACILITY]{offset:5}

Text: one helpful member of staff [QUANTITY][None]{offset:82}

The last two lines above are the formatted output of the JSON object. It contains two named entities. The first one is for the text “hotel”, and it has an Entity Type of Location and a Sub Entity Type of Facility. The second named entity is for a longer string, and it has an Entity Type of Quantity but no Sub Entity Type.

Now let’s see what it creates for the F1 text (the text has been given above and the code is essentially the same as above).

Text: Red Bull [ORGANIZATION][None]{offset:0}

Text: swift [ORGANIZATION][None]{offset:25}

Text: Liam Lawsons [PERSON][None]{offset:44}

Text: 2025 [DATETIME][DATE]{offset:80}

Text: Yuki Tsunoda [PERSON][None]{offset:138}

Text: senior [QUANTITY][AGE]{offset:158}

Text: Max Verstappen [PERSON][None]{offset:204}

Text: F1 [ORGANIZATION][None]{offset:220}

Text: Lawrence Barretto [PERSON][None]{offset:237}

Text: Sergio Perez [PERSON][None]{offset:269}

Text: campaign [EVENT][None]{offset:304}

Text: eighth in the [QUANTITY][None]{offset:343}

Text: Drivers Championship [EVENT][None]{offset:357}

Text: Red Bull [ORGANIZATION][None]{offset:382}

Text: Verstappen [PERSON][None]{offset:421}

Text: fourth successive title [QUANTITY][None]{offset:438}

Text: Abu Dhabi [LOCATION][GPE]{offset:545}

Detect Private Information and Masking

The ability to perform data masking has been available in SQL for a long time. There are lots of scenarios where masking is needed but you are not using a Database, or not using one at that particular time.

With Detect Private Information, or Personally Identifiable Information (PII), the OCI AI function searches for data that is personal and gives you options on how to present this back to the users. Examples of the types of data, or Entity Types, it will detect include Person, Address, Age, SSN, Passport, Phone Numbers, Bank Accounts, IP Address, Cookie details, Private and Public keys, various OCI related information, etc. The list goes on. Check out the documentation for more details on these. Unfortunately the documentation for how the Python API works is very limited.

The examples below illustrate some of the basic options. But there is lots more you can do with this feature, like defining your own rules.

For these examples, I’m going to use the following text which I’ve assigned to a variable called text_demo.

Hi Martin. Thanks for taking my call on 1/04/2025. Here are the details you requested. My Bank Account Number is 1234-5678-9876-5432 and my Bank Branch is Main Street, Dublin. My Date of Birth is 29/02/1993 and I’ve been living at my current address for 15 years. Can you also update my email address to brendan.tierney@email.com. If toy have any problems with this you can contact me on +353-1-493-1111. Thanks for your help. Brendan.

m_mode = {"ALL":{"mode":'MASK'}}

t_doc = oci.ai_language.models.TextDocument(key="Demo", text=text_demo,language_code="en")

pii_entities = ai_language_client.batch_detect_language_pii_entities(oci.ai_language.models.BatchDetectLanguagePiiEntitiesDetails(documents=[t_doc], masking=m_mode))

print(text_demo)

print('--------------------------------------------------------------------------------')

print(pii_entities.data.documents[0].masked_text)

print('--------------------------------------------------------------------------------')

for doc in pii_entities.data.documents:

    for entity in doc.entities:

        print("phrase: ", entity.text + ' [' + str(entity.type) + ']')

Hi Martin. Thanks for taking my call on 1/04/2025. Here are the details you requested. My Bank Account Number is 1234-5678-9876-5432 and my Bank Branch is Main Street, Dublin. My Date of Birth is 29/02/1993 and I've been living at my current address for 15 years. Can you also update my email address to brendan.tierney@email.com. If toy have any problems with this you can contact me on +353-1-493-1111. Thanks for your help. Brendan.

--------------------------------------------------------------------------------

Hi ******. Thanks for taking my call on *********. Here are the details you requested. My Bank Account Number is ******************* and my Bank Branch is Main Street, Dublin. My Date of Birth is ********** and I've been living at my current address for ********. Can you also update my email address to *************************. If toy have any problems with this you can contact me on ***************. Thanks for your help. *******.

--------------------------------------------------------------------------------

phrase: Martin [PERSON]

phrase: 1/04/2025 [DATE_TIME]

phrase: 1234-5678-9876-5432 [CREDIT_DEBIT_NUMBER]

phrase: 29/02/1993 [DATE_TIME]

phrase: 15 years [DATE_TIME]

phrase: brendan.tierney@email.com [EMAIL]

phrase: +353-1-493-1111 [TELEPHONE_NUMBER]

phrase: Brendan [PERSON]

The above is the basic level of masking.

A second option is to use the REMOVE mask. For this, change the mask format to the following.

m_mode = {"ALL":{'mode':'REMOVE'}}

For this option, the identified information is removed from the text.

Hi . Thanks for taking my call on . Here are the details you requested. My Bank Account Number is and my Bank Branch is Main Street, Dublin. My Date of Birth is and I've been living at my current address for . Can you also update my email address to . If toy have any problems with this you can contact me on . Thanks for your help. .

--------------------------------------------------------------------------------

phrase: Martin [PERSON]

phrase: 1/04/2025 [DATE_TIME]

phrase: 1234-5678-9876-5432 [CREDIT_DEBIT_NUMBER]

phrase: 29/02/1993 [DATE_TIME]

phrase: 15 years [DATE_TIME]

phrase: brendan.tierney@email.com [EMAIL]

phrase: +353-1-493-1111 [TELEPHONE_NUMBER]

phrase: Brendan [PERSON]

For the REPLACE option we have:

m_mode = {"ALL":{'mode':'REPLACE'}}

This gives us the following, where we can see the key information is removed and replaced with the name of the Entity Type.

Hi <PERSON>. Thanks for taking my call on <DATE_TIME>. Here are the details you requested. My Bank Account Number is <CREDIT_DEBIT_NUMBER> and my Bank Branch is Main Street, Dublin. My Date of Birth is <DATE_TIME> and I've been living at my current address for <DATE_TIME>. Can you also update my email address to <EMAIL>. If toy have any problems with this you can contact me on <TELEPHONE_NUMBER>. Thanks for your help. <PERSON>.

--------------------------------------------------------------------------------

phrase: Martin [PERSON]

phrase: 1/04/2025 [DATE_TIME]

phrase: 1234-5678-9876-5432 [CREDIT_DEBIT_NUMBER]

phrase: 29/02/1993 [DATE_TIME]

phrase: 15 years [DATE_TIME]

phrase: brendan.tierney@email.com [EMAIL]

phrase: +353-1-493-1111 [TELEPHONE_NUMBER]

phrase: Brendan [PERSON]

We can also change the character used for the masking. In this example we change the masking character to the + symbol.

m_mode = {"ALL":{'mode':'MASK','maskingCharacter':'+'}}

Hi ++++++. Thanks for taking my call on +++++++++. Here are the details you requested. My Bank Account Number is +++++++++++++++++++ and my Bank Branch is Main Street, Dublin. My Date of Birth is ++++++++++ and I've been living at my current address for ++++++++. Can you also update my email address to +++++++++++++++++++++++++. If toy have any problems with this you can contact me on +++++++++++++++. Thanks for your help. +++++++.

I mentioned at the start of this section that there are lots of options available to you, including defining your own rules, using regular expressions, etc. Let me know if you’re interested in exploring some of these and I can share a few more examples.
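As a recap of the three modes used in this section, here is a small local illustration of what MASK, REMOVE and REPLACE do to a piece of text. This is my own imitation of the effect, written in plain Python; it is not how the OCI service implements masking, and the function name apply_masking and the sample text are assumptions for the example.

```python
def apply_masking(text, entities, mode="MASK", masking_character="*"):
    """Mimic locally the effect of the MASK, REMOVE and REPLACE modes.

    entities: list of dicts with 'offset', 'length' and 'type' keys.
    """
    result = text
    # Work from the end of the text so earlier offsets stay valid
    for entity in sorted(entities, key=lambda e: e["offset"], reverse=True):
        start = entity["offset"]
        end = start + entity["length"]
        if mode == "MASK":
            replacement = masking_character * entity["length"]
        elif mode == "REMOVE":
            replacement = ""
        elif mode == "REPLACE":
            replacement = "<" + entity["type"] + ">"
        else:
            raise ValueError("Unknown mode: " + mode)
        result = result[:start] + replacement + result[end:]
    return result

# Hypothetical sample text; offsets are computed locally with str.find
sample = "Hi Martin. You can contact me on +353-1-493-1111."
sample_entities = [
    {"offset": sample.find("Martin"), "length": len("Martin"), "type": "PERSON"},
    {"offset": sample.find("+353-1-493-1111"), "length": len("+353-1-493-1111"),
     "type": "TELEPHONE_NUMBER"},
]

for mode in ("MASK", "REMOVE", "REPLACE"):
    print(mode, "->", apply_masking(sample, sample_entities, mode))
```

Seeing the three results side by side can help when deciding which mode suits a given scenario, for example REPLACE when readers still need to know what kind of information was there.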

Unlock Text Analytics with Oracle OCI Python – Part 1

Oracle OCI has a number of features that allow you to perform Text Analytics, such as Language Detection, Text Classification, Sentiment Analysis, Key Phrase Extraction, Named Entity Recognition, Private Data detection and masking, and Healthcare NLP.

While some of these have particular (and in some instances limited) use cases, the following examples will illustrate some of the main features using the OCI Python library. Why am I using Python to illustrate these? This is because most developers are using Python to build applications.

In this post, the Python examples below will cover the following:

- Language Detection

- Text Classification

- Sentiment Analysis

In my next post on this topic, I’ll cover:

- Key Phrase

- Named Entity Recognition

- Detect private information and masking

Before you can use any of the OCI AI Services, you need to set up a config file on your computer. This will contain the details necessary to establish a secure connection to your OCI tenancy. Check out this blog post about setting this up.

The following Python examples illustrate what is possible for each feature. In the first example, I include what is needed for the config file. This is not repeated in the examples that follow, but it is still needed.

Language Detection

Let’s begin with a simple example where we provide a simple piece of text and ask the OCI Language Service, using OCI Python, to detect the primary language of the text and display some basic information about this prediction.

import oci

from oci.config import from_file

#Read in config file - this is needed for connecting to the OCI AI Services

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', profile_name=CONFIG_PROFILE)

###

ai_language_client = oci.ai_language.AIServiceLanguageClient(config)

# French :

text_fr = "Bonjour et bienvenue dans l'analyse de texte à l'aide de ce service cloud"

response = ai_language_client.detect_dominant_language(

oci.ai_language.models.DetectDominantLanguageDetails(

text=text_fr

)

)

print(response.data.languages[0].name)

----------

French

In this example, I’ve a simple piece of French (for any native French speakers, I do apologise). We can see the language was identified as French. Let’s have a closer look at what is returned by the OCI function.

print(response.data)

----------

{

"languages": [

{

"code": "fr",

"name": "French",

"score": 1.0

}

]

}

We can see from the above that the object contains the language code, the full name of the language and a score to indicate how confident the function is in the prediction. When the text contains two or more languages, the function will return the primary language used.
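If you plan to act on the detected language in an application, it can be worth checking the score before trusting the result. The following is a minimal sketch that uses a plain dictionary shaped like the JSON above. The function name dominant_language and the 0.8 threshold are my own assumptions, not something defined by OCI.

```python
# Response shaped like the JSON above
sample_response = {"languages": [{"code": "fr", "name": "French", "score": 1.0}]}

def dominant_language(response, min_score=0.8):
    """Return (code, name) of the best-scoring language, or None if below min_score."""
    languages = response.get("languages", [])
    if not languages:
        return None
    best = max(languages, key=lambda lang: lang["score"])
    if best["score"] < min_score:
        return None
    return best["code"], best["name"]

print(dominant_language(sample_response))
```

Returning None for a low score lets the calling code decide what to do, for example fall back to a default language.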

Note: OCI Language can detect at least 113 different languages. Check out the full list here.

Let’s give it a try with a few other languages, including Irish, which is localised to certain parts of Ireland. Using the same code as above, I’ve included the same statement (Google) translated into other languages. The code loops through each text statement and detects the language.

import oci

from oci.config import from_file

###

CONFIG_PROFILE = "DEFAULT"

config = oci.config.from_file('~/.oci/config', profile_name=CONFIG_PROFILE)

###

ai_language_client = oci.ai_language.AIServiceLanguageClient(config)

# French :

text_fr = "Bonjour et bienvenue dans l'analyse de texte à l'aide de ce service cloud"

# German:

text_ger = "Guten Tag und willkommen zur Textanalyse mit diesem Cloud-Dienst"

# Danish

text_dan = "Goddag, og velkommen til at analysere tekst ved hjælp af denne skytjeneste"

# Italian

text_it = "Buongiorno e benvenuti all'analisi del testo tramite questo servizio cloud"

# English:

text_eng = "Good day, and welcome to analysing text using this cloud service"

# Irish

text_irl = "Lá maith, agus fáilte romhat chuig anailís a dhéanamh ar théacs ag baint úsáide as an tseirbhís scamall seo"

for text in [text_eng, text_ger, text_dan, text_it, text_irl]:

    response = ai_language_client.detect_dominant_language(

        oci.ai_language.models.DetectDominantLanguageDetails(

            text=text

        )

    )

    print('[' + response.data.languages[0].name + ' ('+ str(response.data.languages[0].score) +')' + '] '+ text)

----------

[English (1.0)] Good day, and welcome to analysing text using this cloud service

[German (1.0)] Guten Tag und willkommen zur Textanalyse mit diesem Cloud-Dienst

[Danish (1.0)] Goddag, og velkommen til at analysere tekst ved hjælp af denne skytjeneste

[Italian (1.0)] Buongiorno e benvenuti all'analisi del testo tramite questo servizio cloud

[Irish (1.0)] Lá maith, agus fáilte romhat chuig anailís a dhéanamh ar théacs ag baint úsáide as an tseirbhís scamall seo

When you run this code yourself, you’ll notice how quick the response time is for each.

Text Classification

Now that we can perform some simple language detection, we can move on to some more insightful functions. The first of these is Text Classification. Text Classification analyses the text to identify the categories it covers, along with a confidence score for each. Let’s have a look at an example using the English version of the text used above. This time, we need to perform two steps. The first is to set up and prepare the document to be sent. The second step is to perform the classification.

### Text Classification

text_document = oci.ai_language.models.TextDocument(key="Demo", text=text_eng, language_code="en")

text_class_resp = ai_language_client.batch_detect_language_text_classification(

batch_detect_language_text_classification_details=oci.ai_language.models.BatchDetectLanguageTextClassificationDetails(

documents=[text_document]

)

)

print(text_class_resp.data)

----------

{

"documents": [

{

"key": "Demo",

"language_code": "en",

"text_classification": [

{

"label": "Internet and Communications/Web Services",

"score": 1.0

}

]

}

],

"errors": []

}

We can see it has correctly identified that the text is about “Internet and Communications/Web Services”. For a second example, let’s use some text about F1. The following is taken from an article on the F1 app and refers to the recent driver issues, and we’ll use the first two paragraphs.

{

"documents": [

{

"key": "Demo",

"language_code": "en",

"text_classification": [

{

"label": "Sports and Games/Motor Sports",

"score": 1.0

}

]

}

],

"errors": []

}

We can format this response object as follows.

print(text_class_resp.data.documents[0].text_classification[0].label

+ ' [' + str(text_class_resp.data.documents[0].text_classification[0].score) + ']')

----------

Sports and Games/Motor Sports [1.0]

It is possible for multiple classifications to be returned. To handle this we need to use a couple of loops.

for doc in text_class_resp.data.documents:

    for classification in doc.text_classification:

        print("Label: ", classification.label)

        print("Score: ", classification.score)

----------

Label: Sports and Games/Motor Sports

Score: 1.0

Yet again, it correctly identified the type of topic area for the text. At this point, you are probably starting to get ideas about how this can be used and in what kinds of scenarios. This list will probably get longer over time.

Sentiment Analysis

For Sentiment Analysis we are looking to gauge the mood or tone of a text. For example, we might be looking to identify opinions, appraisals, emotions, or attitudes towards a topic, person or entity. The function returns an object containing positive, neutral, mixed and negative sentiments, each with a confidence score. This feature currently supports English and Spanish.

The Sentiment Analysis function provides two ways of analysing the text:

- At a Sentence level

- At an Aspect level, which looks at certain Aspects of the text. This identifies parts/words/phrases and determines the sentiment for each

Let’s start with the Sentence level Sentiment Analysis with a piece of text containing two sentences with both negative and positive sentiments.

#Sentiment analysis

text = "This hotel was in poor condition and I'd recommend not staying here. There was one helpful member of staff"

text_document = oci.ai_language.models.TextDocument(key="Demo", text=text, language_code="en")

text_doc=oci.ai_language.models.BatchDetectLanguageSentimentsDetails(documents=[text_document])

text_sentiment_resp = ai_language_client.batch_detect_language_sentiments(text_doc, level=["SENTENCE"])

print(text_sentiment_resp.data)

The response object gives us:

{

"documents": [

{

"aspects": [],

"document_scores": {

"Mixed": 0.3458947,

"Negative": 0.41229093,

"Neutral": 0.0061426135,

"Positive": 0.23567174

},

"document_sentiment": "Negative",

"key": "Demo",

"language_code": "en",

"sentences": [

{

"length": 68,

"offset": 0,

"scores": {

"Mixed": 0.17541811,

"Negative": 0.82458186,

"Neutral": 0.0,

"Positive": 0.0

},

"sentiment": "Negative",

"text": "This hotel was in poor condition and I'd recommend not staying here."

},

{

"length": 37,

"offset": 69,

"scores": {

"Mixed": 0.5163713,

"Negative": 0.0,

"Neutral": 0.012285227,

"Positive": 0.4713435

},

"sentiment": "Mixed",

"text": "There was one helpful member of staff"

}

]

}

],

"errors": []

}

There are two parts to this object. The first part gives us the overall Sentiment for the text, along with the confidence scores for all possible sentiments. The second part of the object breaks the text into individual sentences and gives the Sentiment and confidence scores for each sentence. Overall, the text is “Negative” with a confidence score of 0.41229093. When we look at the sentences, we can see the first sentence is “Negative” and the second sentence is “Mixed”.
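In this response, the sentiment label reported at each level is the one with the highest confidence score. The short sketch below shows that relationship using the scores copied from the JSON above. The function name top_sentiment is hypothetical, and I have only checked this against the example here, so treat it as an observation rather than a documented rule of the service.

```python
# Scores copied from the response above
document_scores = {"Mixed": 0.3458947, "Negative": 0.41229093,
                   "Neutral": 0.0061426135, "Positive": 0.23567174}
sentence_scores = [
    {"Mixed": 0.17541811, "Negative": 0.82458186, "Neutral": 0.0, "Positive": 0.0},
    {"Mixed": 0.5163713, "Negative": 0.0, "Neutral": 0.012285227, "Positive": 0.4713435},
]

def top_sentiment(scores):
    """Return the (label, score) pair with the highest confidence score."""
    label = max(scores, key=scores.get)
    return label, scores[label]

print("Document:", top_sentiment(document_scores))
for number, scores in enumerate(sentence_scores, start=1):
    print("Sentence", number, top_sentiment(scores))
```

Notice how close the Mixed and Positive scores are for the second sentence; looking at the full set of scores gives a better picture than the label alone.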

When we switch to using Aspect we can see the difference in the response.

text_sentiment_resp = ai_language_client.batch_detect_language_sentiments(text_doc, level=["ASPECT"])

print(text_sentiment_resp.data)

The response object gives us:

{

"documents": [

{

"aspects": [

{

"length": 5,

"offset": 5,

"scores": {

"Mixed": 0.17299445074935532,

"Negative": 0.8268503302365734,

"Neutral": 0.0,

"Positive": 0.0001552190140712097

},

"sentiment": "Negative",

"text": "hotel"

},

{

"length": 9,

"offset": 23,

"scores": {

"Mixed": 0.0020200687053503,

"Negative": 0.9971282906307877,

"Neutral": 0.0,

"Positive": 0.0008516406638620019

},

"sentiment": "Negative",

"text": "condition"

},

{

"length": 6,

"offset": 91,

"scores": {

"Mixed": 0.0,

"Negative": 0.002300517913679934,

"Neutral": 0.023815747524769032,

"Positive": 0.973883734561551

},

"sentiment": "Positive",

"text": "member"

},

{

"length": 5,

"offset": 101,

"scores": {

"Mixed": 0.10319573538533408,

"Negative": 0.2070680870320537,

"Neutral": 0.0,

"Positive": 0.6897361775826122

},

"sentiment": "Positive",

"text": "staff"

}

],

"document_scores": {},

"document_sentiment": "",

"key": "Demo",

"language_code": "en",

"sentences": []

}

],

"errors": []

}

The different aspects are extracted, and the sentiment for each within the text is determined. What you need to look out for are the labels “text” and “sentiment”.
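One way to summarise an aspect-level response is to group the aspect text by its sentiment. Here is a minimal sketch using the “text” and “sentiment” values from the JSON above, held in plain dictionaries; the function name group_aspects_by_sentiment is my own and is not part of the OCI SDK.

```python
from collections import defaultdict

# Aspects copied from the response above (only the two labels of interest)
aspects = [
    {"text": "hotel", "sentiment": "Negative"},
    {"text": "condition", "sentiment": "Negative"},
    {"text": "member", "sentiment": "Positive"},
    {"text": "staff", "sentiment": "Positive"},
]

def group_aspects_by_sentiment(aspect_list):
    """Build a mapping of sentiment label to the list of aspect texts."""
    grouped = defaultdict(list)
    for aspect in aspect_list:
        grouped[aspect["sentiment"]].append(aspect["text"])
    return dict(grouped)

print(group_aspects_by_sentiment(aspects))
```

For the hotel review this separates what the reviewer disliked (the hotel and its condition) from what they liked (the member of staff).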

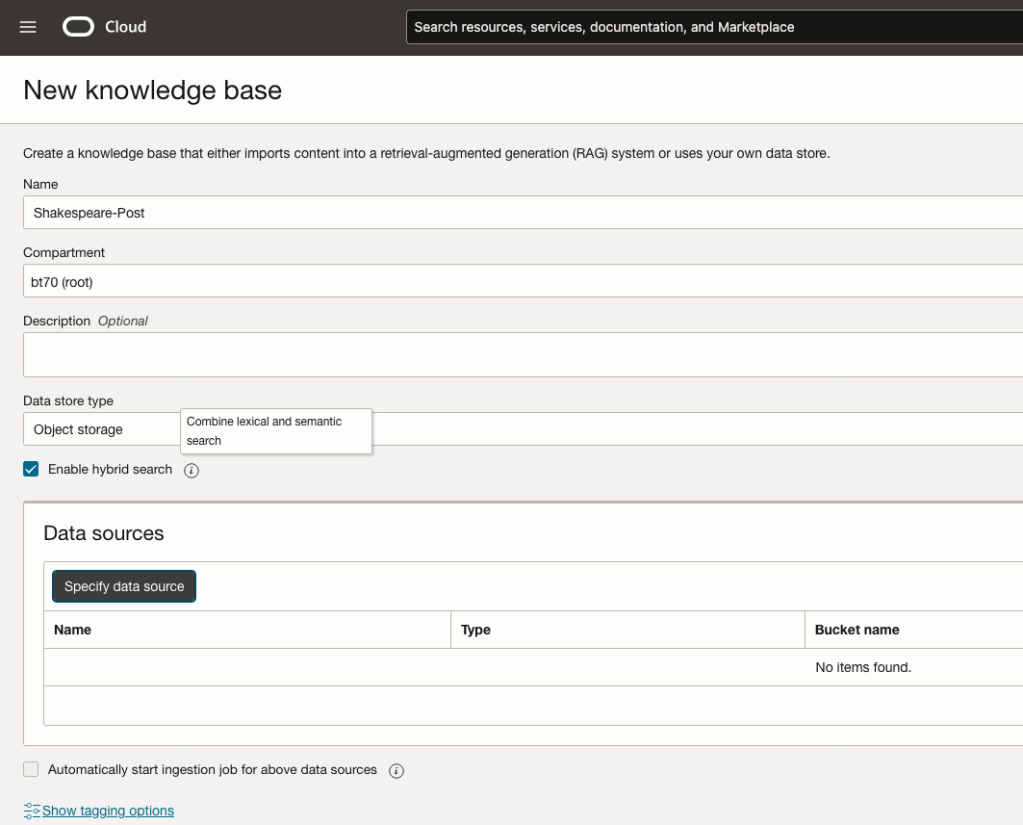

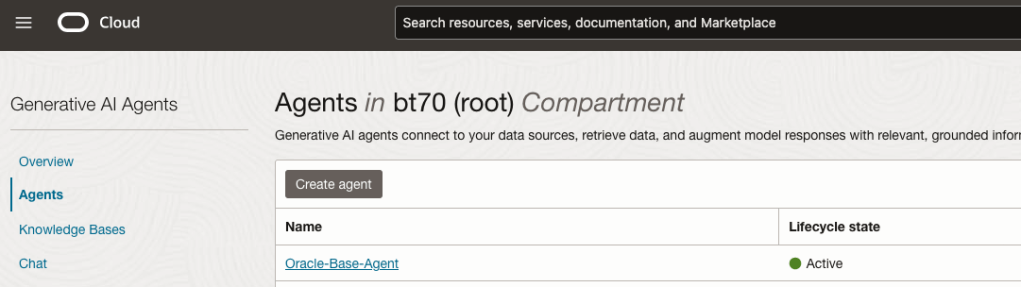

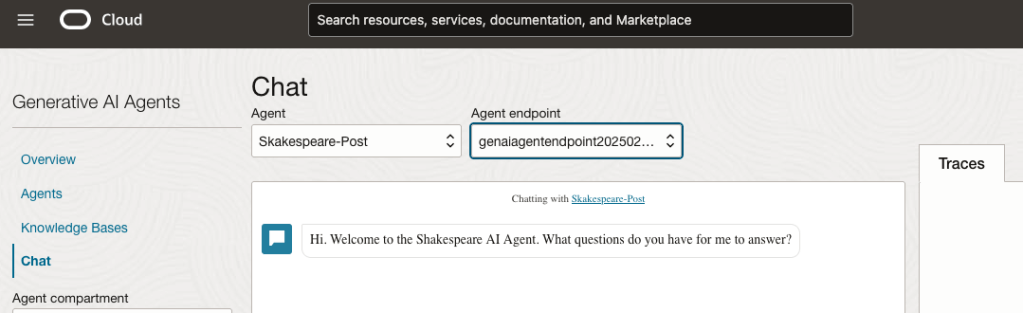

How to Create an Oracle Gen AI Agent

In this post, I’ll walk you through the steps needed to create a Gen AI Agent on Oracle Cloud. We have seen lots of solutions offered by many different providers for Gen AI Agents. This post focuses on just what is available on Oracle Cloud. You can create a Gen AI Agent manually. However, testing and fine-tuning based on various chunking strategies can take some time. With the automated options available on Oracle Cloud, you don’t have to worry about chunking. It handles all the steps automatically for you. This means you need to be careful when using it. Allocate some time for testing to ensure it meets your requirements. The steps below point out some checkboxes. You need to check them to ensure you generate a more complete knowledge base and outcome.

For my example scenario, I’m going to build a Gen AI Agent for some of the works by Shakespeare. I got the text of several plays from the Gutenberg Project website. The process for creating the Gen AI Agent is:

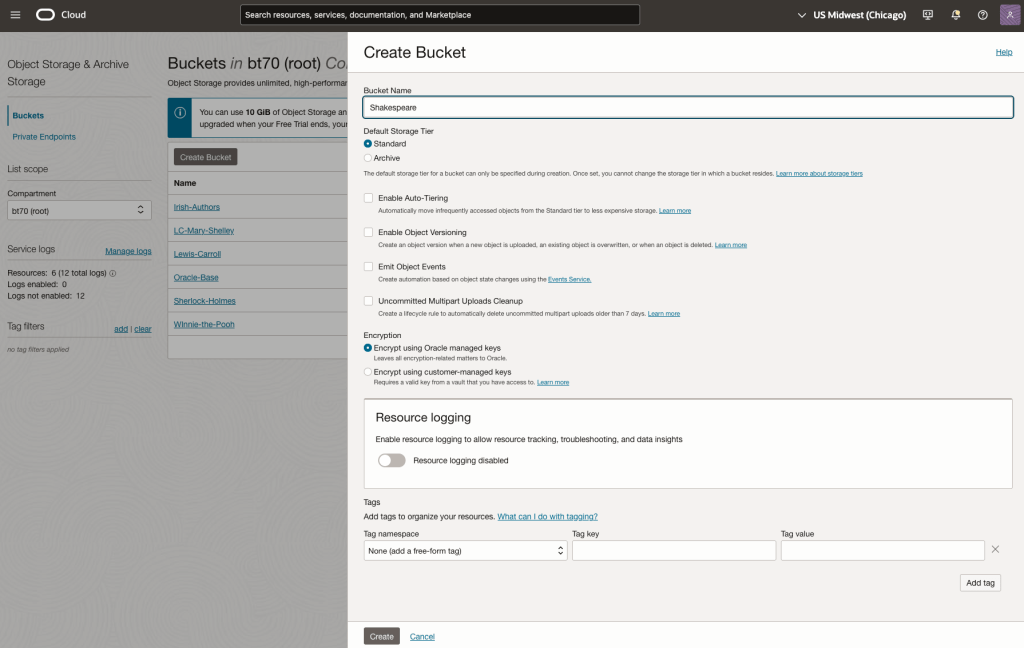

Step-1 Load Files to a Bucket on OCI

Create a bucket called Shakespeare.

Load the files from your computer into the Bucket. These files were obtained from the Gutenberg Project site.

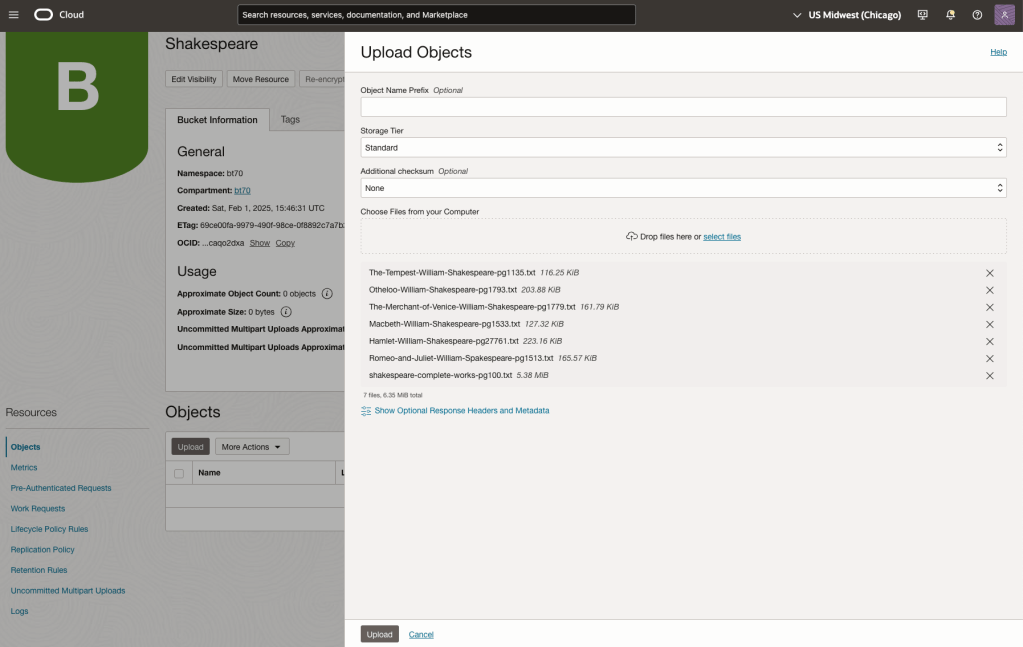
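If you have a lot of files, the upload in Step-1 can also be scripted instead of using the console. The sketch below is one possible way to do it. The function upload_folder is my own helper, the folder name in the usage comment is hypothetical, and I’m assuming the downloaded plays were saved as .txt files. The only OCI call used is put_object on the Object Storage client.

```python
import os

def upload_folder(object_storage_client, namespace, bucket_name, folder):
    """Upload every .txt file in a local folder to an OCI bucket.

    object_storage_client is expected to behave like
    oci.object_storage.ObjectStorageClient (only put_object is used).
    """
    uploaded = []
    for file_name in sorted(os.listdir(folder)):
        if not file_name.endswith(".txt"):
            continue  # assumption: only the plain-text plays are wanted
        with open(os.path.join(folder, file_name), "rb") as f:
            object_storage_client.put_object(namespace, bucket_name, file_name, f)
        uploaded.append(file_name)
    return uploaded

# Possible usage with the OCI Python SDK (assumes ~/.oci/config is set up and
# that the files were saved in a local folder called 'shakespeare_texts'):
# import oci
# config = oci.config.from_file('~/.oci/config', profile_name="DEFAULT")
# client = oci.object_storage.ObjectStorageClient(config)
# namespace = client.get_namespace().data
# print(upload_folder(client, namespace, "Shakespeare", "shakespeare_texts"))
```

Passing the client in as a parameter keeps the helper easy to test without connecting to OCI.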

Step-2 Define a Data Source (documents you want to use) & Create a Knowledge Base

Click on Create Knowledge Base and give it a name ‘Shakespeare’.

Check the ‘Enable Hybrid Search’ checkbox. This will enable both lexical and semantic search. [this is Important]

Click on ‘Specify Data Source’

Select the Bucket from the drop-down list (Shakespeare bucket).

Check the ‘Enable multi-modal parsing’ checkbox.

Select the files to use or check the ‘Select all in bucket’ checkbox.

Click Create.

The Knowledge Base will be created. The files in the bucket will be parsed, and structured for search by the AI Agent. This step can take a few minutes as it needs to process all the files. This depends on the number of files to process, their format and the size of the contents in each file.

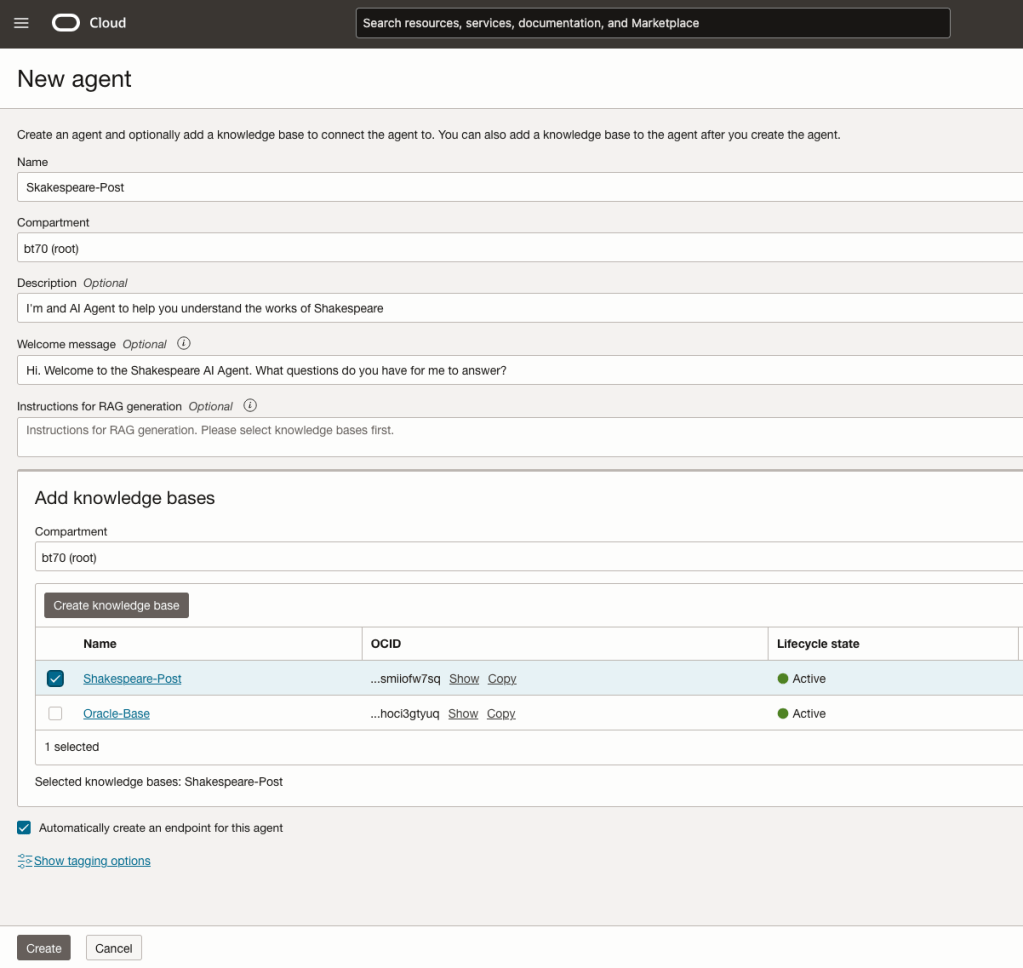

Step-3 Create Agent

Go back to the main Gen AI menu and select Agent and then Create Agent.

You can enter the following details:

- Name of the Agent

- Some descriptive information

- A Welcome message for people using the Agent

- Select the Knowledge Base from the list.

The checkbox for creating Endpoints should be checked.

Click Create.

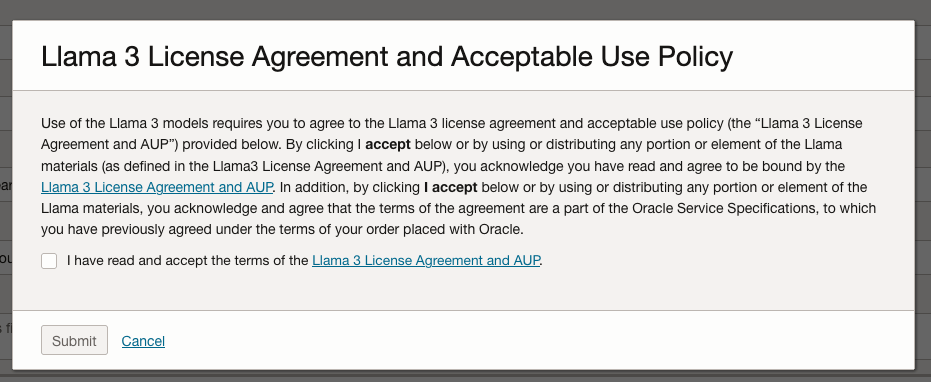

A pop-up window will appear asking you to agree to the Llama 3 License. Check this checkbox and click Submit.

After the agent has been created, check the status of the endpoints. These generally take a little longer to create, and you need these before you can test the Agent using the Chatbot.

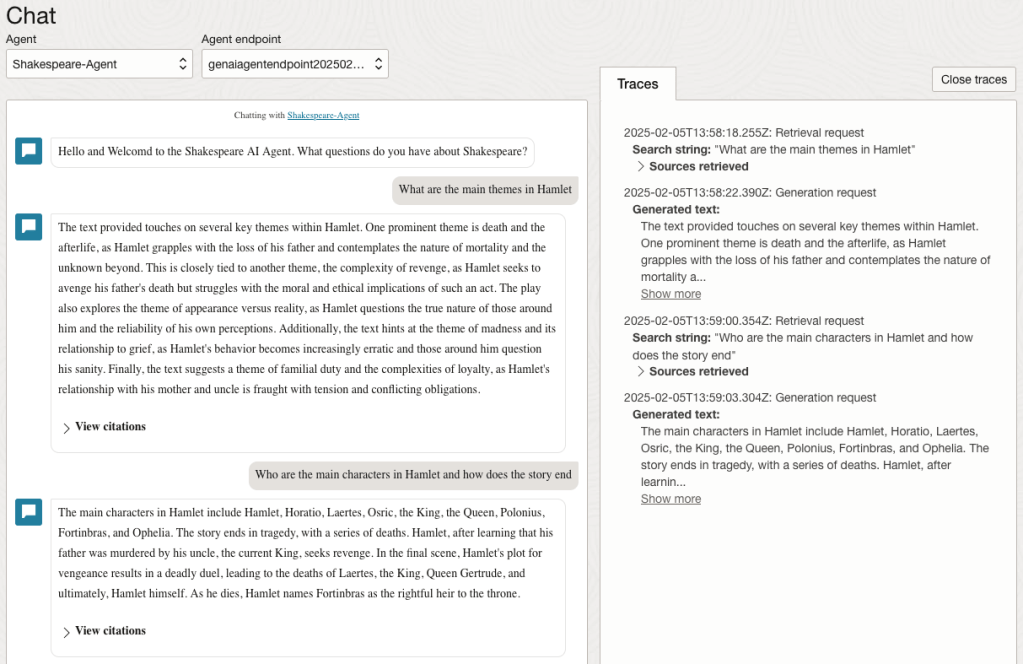

Step-4 Test using Chatbot

After verifying the endpoints have been created, you can open a Chatbot by clicking on ‘Chat’ from the menu on the left-hand side of the screen.

Select the name of the ‘Agent’ from the drop-down list e.g. Shakespeare-Post.

Select an endpoint for the Agent.

After these have been selected you will see the ‘Welcome’ message. This was defined when creating the Agent.

Here are a couple of examples of querying the works by Shakespeare.

In addition to giving a response to the questions, the Chatbot also lists the sections of the underlying documents and passages from those documents used to form the response/answer.

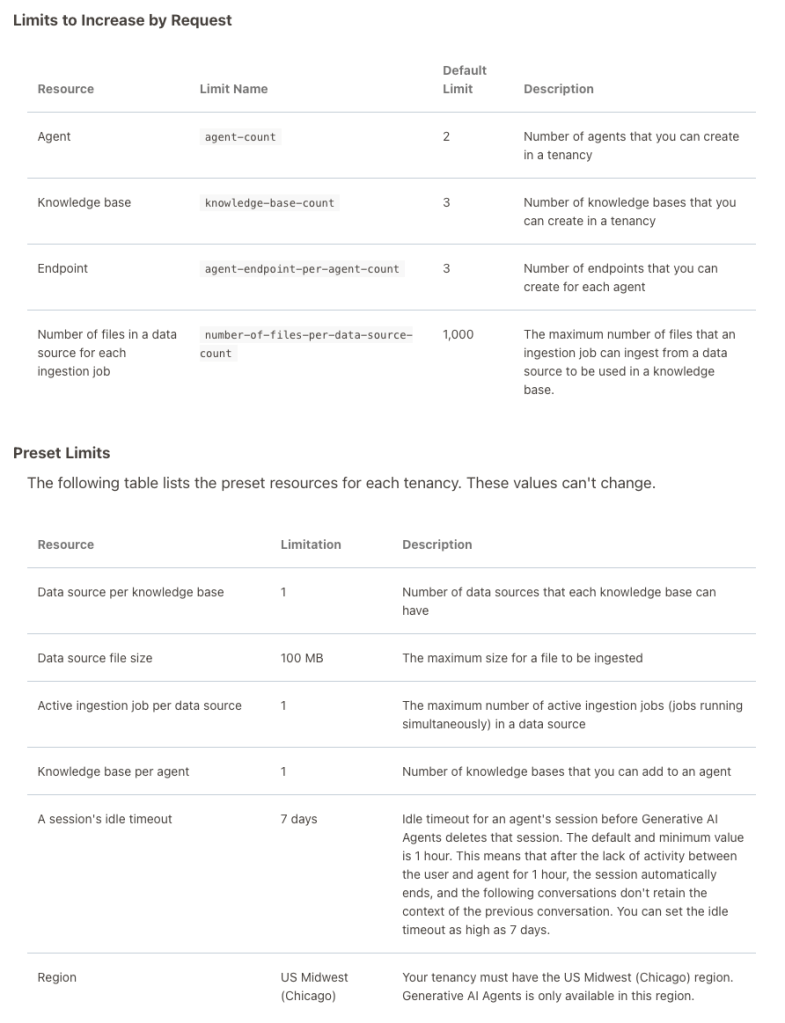

When creating Gen AI Agents, you need to be careful of two things. The first is the Cloud Region. Gen AI Agents are only available in certain Cloud Regions. If they aren’t available in your Region, you’ll need to request access to one of those regions or set up a new OCI account based in one of them. The second thing is the Resource Limits. At the time of writing this post, the following was allowed. Check out the documentation for more details. You might need to request that these limits be increased.

I’ll have another post showing how you can run the Chatbot on your computer or VM as a webpage.

Select AI – OpenAI changes

A few weeks ago I wrote a few blog posts about using SelectAI. These illustrated integrating and using Cohere and OpenAI with SQL commands in your Oracle Cloud Database. See these links below.

- SelectAI – the beginning of a journey

- SelectAI – Doing something useful

- SelectAI – Can metadata help

- SelectAI – the APEX version

The constantly changing world of APIs has impacted the steps I outlined in those posts, particularly if you are using the OpenAI APIs. Two things have changed since writing those posts a few weeks ago. The first is with creating the OpenAI API keys. When creating a new key you need to define a project. For now, just select ‘Default Project’. This is a minor change, but it has caused some confusion for those following my steps in this blog post. I’ve updated that post to reflect the current setup for defining a new key in OpenAI. Oh, and remember to put a few dollars into your OpenAI account for your key to work. I put an initial $10 into my account and a few minutes later the API key was working for me from my Oracle (OCI) Database.

The second change is related to how the OpenAI API is called from Oracle (OCI) Databases. The API is now expecting a model name. From talking to the Oracle PMs, they will be implementing a fix in their Cloud Databases where the default model will be ‘gpt-3.5-turbo’, but in the meantime, you have to explicitly define the model when creating your OpenAI profile.

BEGIN

--DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPEN_AI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"object_list": [{"owner": "SH", "name": "customers"},

{"owner": "SH", "name": "sales"},

{"owner": "SH", "name": "products"},

{"owner": "SH", "name": "countries"},

{"owner": "SH", "name": "channels"},

{"owner": "SH", "name": "promotions"},

{"owner": "SH", "name": "times"}],

"model":"gpt-3.5-turbo"

}');

END;

Other model names you could use include gpt-4 or gpt-4o.
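The attributes string is plain JSON, so it is easy to get a quote or comma wrong when editing it by hand to add the model. As a minimal sketch, assuming you prefer to generate the text in Python and paste it into your SQL tool, the following builds the attributes and the surrounding PL/SQL block. The helper names are hypothetical and the defaults (the SH owner) simply mirror the example above.

```python
import json


def build_profile_attributes(provider, credential_name, tables, model, owner="SH"):
    """Return the JSON string passed as the `attributes` argument of create_profile."""
    if not model:
        raise ValueError("a model name is required, e.g. 'gpt-3.5-turbo'")
    attributes = {
        "provider": provider,
        "credential_name": credential_name,
        "object_list": [{"owner": owner, "name": name} for name in tables],
        "model": model,
    }
    return json.dumps(attributes, indent=2)


def build_create_profile_block(profile_name, attributes_json):
    """Wrap the attributes in an anonymous PL/SQL block that can be pasted into a SQL tool."""
    return (
        "BEGIN\n"
        "  DBMS_CLOUD_AI.create_profile(\n"
        f"    profile_name => '{profile_name}',\n"
        f"    attributes   => '{attributes_json}');\n"
        "END;"
    )


if __name__ == "__main__":
    attrs = build_profile_attributes("openai", "OPENAI_CRED", ["customers", "sales"], "gpt-4o")
    print(build_create_profile_block("OPEN_AI", attrs))
```

Note the sketch does not escape single quotes, so it assumes none of the names contain one.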

SelectAI – the APEX version

I’ve written a few blog posts about the new Select AI feature on the Oracle Database. In this post, I’ll explore how to use this within APEX, because you have to do things in a different way.

The previous posts on Select AI are:

- SelectAI – the beginning of a journey

- SelectAI – Doing something useful

- SelectAI – Can metadata help

- SelectAI – the APEX version

We have seen in my previous posts how the PL/SQL package called DBMS_CLOUD_AI was used to create a profile. This profile provided details of what provider to use (Cohere or OpenAI in my examples), and what metadata (schemas, tables, etc.) to send to the LLM. When you look at the DBMS_CLOUD_AI PL/SQL package it only contains seven functions (at the time of writing this post). Most of these functions are for managing the profile, such as creating, deleting, enabling, disabling and setting the profile attributes. But there is one other important function called GENERATE. This function can be used to send your request to the LLM.

Why is the DBMS_CLOUD_AI.GENERATE function needed? In my previous posts we used Select AI from common SQL tools such as SQL Developer, SQLcl and the SQL Developer extension for VSCode. When using these tools we need to enable the SQL session to use Select AI by setting the profile. When using APEX or creating your own PL/SQL functions, etc., you’ll still need to set the profile, using

EXEC DBMS_CLOUD_AI.set_profile('OPEN_AI');

We can now use the DBMS_CLOUD_AI.GENERATE function to run our equivalent Select AI queries. We can use this to run most of the options for Select AI including showsql, narrate and chat. It’s important to note here that runsql is not supported. This was the default action when using Select AI. Instead, you obtain the necessary SQL using showsql, and you can then execute the returned SQL yourself in your PL/SQL code.

Here are a few examples from my previous posts:

SELECT DBMS_CLOUD_AI.GENERATE(prompt => 'what customer is the largest by sales',

profile_name => 'OPEN_AI',

action => 'showsql')

FROM dual;

SELECT DBMS_CLOUD_AI.GENERATE(prompt => 'how many customers in San Francisco are married',

profile_name => 'OPEN_AI',

action => 'narrate')

FROM dual;

SELECT DBMS_CLOUD_AI.GENERATE(prompt => 'who is the president of ireland',

profile_name => 'OPEN_AI',

action => 'chat')

FROM dual;

If using Oracle 23c or higher, you no longer need to include the FROM DUAL clause.
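The same two-step pattern (get the SQL with showsql, then run it yourself) can also be used from a client program. Here is a minimal sketch in Python, assuming a DB-API style cursor such as the one provided by the python-oracledb driver. The function names are hypothetical, and the check that the text starts with SELECT or WITH is a precaution I’ve added, not something Select AI requires.

```python
def generate_sql(cursor, prompt, profile_name):
    """Ask Select AI for the SQL only (action 'showsql') and return it as a string."""
    cursor.execute(
        "SELECT DBMS_CLOUD_AI.GENERATE(prompt => :prompt, "
        "profile_name => :profile_name, action => 'showsql') FROM dual",
        {"prompt": prompt, "profile_name": profile_name},
    )
    row = cursor.fetchone()
    value = row[0] if row else ""
    # Some drivers return a LOB object rather than a str; read it if so.
    sql_text = value.read() if hasattr(value, "read") else str(value or "")
    # A trailing semicolon is not accepted when executing SQL through a driver.
    return sql_text.strip().rstrip(";").strip()


def run_generated_sql(cursor, prompt, profile_name):
    """Generate the SQL with showsql, then execute it and return all rows."""
    sql_text = generate_sql(cursor, prompt, profile_name)
    if not sql_text.upper().startswith(("SELECT", "WITH")):
        raise ValueError(f"Refusing to run non-query text: {sql_text[:60]!r}")
    cursor.execute(sql_text)
    return cursor.fetchall()
```

Since the SQL comes back from an LLM, it is worth logging or reviewing it before running it against anything important.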

SelectAI – Can metadata help

Continuing with the exploration of Select AI, in this post I’ll look at how metadata can help. In my previous posts on Select AI, I’ve walked through examples of exploring the data in the SH schema and how you can use some of the conversational features. These really give a lot of potential for developing some useful features in your apps.

Many of you might have encountered schemas where either the table names and/or column names didn’t make sense. Maybe their names looked like some weird code or something, and you had to look up a document, often referred to as a data dictionary, to decode the actual meaning. In some instances, these schemas cannot be touched and in others, minor changes are allowed. In these latter cases, we can look at adding some metadata to the tables to give meaning to these esoteric names.

For the following example, I’ve taken the simple EMP-DEPT tables and renamed the table and column names to something very generic. You’ll see I’ve added comments to explain the Tables and for each of the Columns. These comments should correspond to the original EMP-DEPT tables.

CREATE TABLE TABLE1(

c1 NUMBER(2) not null primary key,

c2 VARCHAR2(50) not null,

c3 VARCHAR2(50) not null);

COMMENT ON TABLE table1 IS 'Department table. Contains details of each Department including Department Number, Department Name and Location for the Department';

COMMENT ON COLUMN table1.c1 IS 'Department Number. Primary Key. Unique. Used to join to other tables';

COMMENT ON COLUMN table1.c2 IS 'Department Name. Name of department. Description of function';

COMMENT ON COLUMN table1.c3 IS 'Department Location. City where the department is located';

-- create the EMP table as TABLE2

CREATE TABLE TABLE2(

c1 NUMBER(4) not null primary key,

c2 VARCHAR2(50) not null,

c3 VARCHAR2(50) not null,

c4 NUMBER(4),

c5 DATE,

c6 NUMBER(10,2),

c7 NUMBER(10,2),

c8 NUMBER(2) not null);

COMMENT ON TABLE table2 IS 'Employee table. Contains details of each Employee. Employees';

COMMENT ON COLUMN table2.c1 IS 'Employee Number. Primary Key. Unique. How each employee is identified';

COMMENT ON COLUMN table2.c2 IS 'Employee Name. Name of each Employee';

COMMENT ON COLUMN table2.c3 IS 'Employee Job Title. Job Role. Current Position';

COMMENT ON COLUMN table2.c4 IS 'Manager for Employee. Manager Responsible. Who the Employee reports to';

COMMENT ON COLUMN table2.c5 IS 'Hire Date. Date the employee started in role. Commencement Date';

COMMENT ON COLUMN table2.c6 IS 'Salary. How much the employee is paid each month. Dollars';

COMMENT ON COLUMN table2.c7 IS 'Commission. How much the employee can earn each month in commission. This is extra on top of salary';

COMMENT ON COLUMN table2.c8 IS 'Department Number. Foreign Key. Join to Department Table';

insert into table1 values (10,'Accounting','New York');

insert into table1 values (20,'Research','Dallas');

insert into table1 values (30,'Sales','Chicago');

insert into table1 values (40,'Operations','Boston');

alter session set nls_date_format = 'YY/MM/DD';

insert into table2 values (7369,'SMITH','CLERK',7902,'93/6/13',800,0.00,20);

insert into table2 values (7499,'ALLEN','SALESMAN',7698,'98/8/15',1600,300,30);

insert into table2 values (7521,'WARD','SALESMAN',7698,'96/3/26',1250,500,30);

insert into table2 values (7566,'JONES','MANAGER',7839,'95/10/31',2975,null,20);

insert into table2 values (7698,'BLAKE','MANAGER',7839,'92/6/11',2850,null,30);

insert into table2 values (7782,'CLARK','MANAGER',7839,'93/5/14',2450,null,10);

insert into table2 values (7788,'SCOTT','ANALYST',7566,'96/3/5',3000,null,20);

insert into table2 values (7839,'KING','PRESIDENT',null,'90/6/9',5000,0,10);

insert into table2 values (7844,'TURNER','SALESMAN',7698,'95/6/4',1500,0,30);

insert into table2 values (7876,'ADAMS','CLERK',7788,'99/6/4',1100,null,20);

insert into table2 values (7900,'JAMES','CLERK',7698,'00/6/23',950,null,30);

insert into table2 values (7934,'MILLER','CLERK',7782,'00/1/21',1300,null,10);

insert into table2 values (7902,'FORD','ANALYST',7566,'97/12/5',3000,null,20);

insert into table2 values (7654,'MARTIN','SALESMAN',7698,'98/12/5',1250,1400,30);

Can Select AI be used to query this data? The simple answer is ‘ish’. Yes, Select AI can query this data but some care is needed on how you phrase the questions, and some care is needed to refine the metadata descriptions given in the table and column Comments.
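Refining the Comments tends to be an iterative process, and it is easy to attach a comment to the wrong column when copying and pasting. One option, sketched below, is to keep the descriptions in a small Python dictionary and generate the COMMENT ON statements from it. The function name is hypothetical and the example descriptions are shortened versions of the ones used above.

```python
def comment_statements(table, table_comment, column_comments):
    """Build COMMENT ON statements for one table from a plain dictionary."""
    def quote(text):
        # Double any single quotes so the text is a valid SQL string literal.
        return "'" + text.replace("'", "''") + "'"

    statements = [f"COMMENT ON TABLE {table} IS {quote(table_comment)};"]
    for column, comment in column_comments.items():
        statements.append(f"COMMENT ON COLUMN {table}.{column} IS {quote(comment)};")
    return statements


if __name__ == "__main__":
    department_columns = {
        "c1": "Department Number. Primary Key. Unique",
        "c2": "Department Name. Name of department",
        "c3": "Department Location. City where the department is located",
    }
    for statement in comment_statements("table1", "Department table", department_columns):
        print(statement)
```

Because each column appears only once as a dictionary key, a comment cannot silently overwrite the one for another column.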

To ensure these metadata Comments are exposed to the LLMs, we need to include the following line in our Profile:

"comments":"true",

Using the same Profile setup I used for OpenAI, we need to include the tables and the (above) comments:true setting. See below.

BEGIN

DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPEN_AI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"comments":"true",

"object_list": [{"owner": "BRENDAN", "name": "TABLE1"},

{"owner": "BRENDAN", "name": "TABLE2"}],

"model":"gpt-3.5-turbo"

}');

END;

After we set the profile for our session, we can now write some statements to explore the data.

Warning: if you don’t include "comments":"true", you’ll get no results being returned.

Here are a few of the statements I wrote.

select ai what departments do we have;

select AI showsql what departments do we have;

select ai count departments;

select AI showsql count department;

select ai how many employees;

select ai how many employees work in department 30;

select ai count unique job titles;

select ai list cities where departments are located;

select ai how many employees work in New York;

select ai how many people work in each city;

select ai where are the departments located;

select ai what is the average salary for each department;

Check out the other posts about Select AI.

SelectAI – Doing something useful

In a previous post, I introduced Select AI and gave examples of how to do some simple things. These included asking it, using natural language questions, to query some data in the Database. That post used both Cohere and OpenAI to process the requests. There were mixed results and some gave a different, somewhat disappointing, outcome. But with OpenAI the overall outcome was a bit more positive. To build upon the previous post, this post will explore some of the additional features of Select AI, which can give more options for incorporating Select AI into your applications/solutions.

Select AI has five parameters: ‘runsql’, ‘showsql’, ‘narrate’, ‘chat’ and ‘explainsql’. In the previous post, the examples focused on using the first parameter, although those examples didn’t include the parameter name ‘runsql’, as it is the default parameter and can be excluded from the Select AI statement. Although there were mixed results from using this default parameter, it is the other parameters that make things a little bit more interesting and give you opportunities to include Select AI in your applications. In particular, the ‘narrate’ and ‘explainsql’ parameters and, to a lesser extent, the ‘chat’ parameter. Although for the ‘chat’ parameter there are perhaps slightly easier and more efficient ways of doing this.

Let’s start by looking at the ‘chat’ parameter. This allows you to ‘chat’ with the LLM just like you would with ChatGPT and other similar tools. A useful setting in CREATE_PROFILE is to set conversation to TRUE, as that can give more useful results as the conversation develops.

BEGIN

DBMS_CLOUD_AI.drop_profile(profile_name => 'OPEN_AI');

DBMS_CLOUD_AI.create_profile(

profile_name => 'OPEN_AI',

attributes => '{"provider": "openai",

"credential_name": "OPENAI_CRED",

"object_list": [{"owner": "SH", "name": "customers"},

{"owner": "SH", "name": "sales"},

{"owner": "SH", "name": "products"},

{"owner": "SH", "name": "countries"},

{"owner": "SH", "name": "channels"},

{"owner": "SH", "name": "promotions"},

{"owner": "SH", "name": "times"}],

"conversation": "true", "model":"gpt-3.5-turbo" }');

END;

Here are a few statements I’ve used.

select AI chat who is the president of ireland;

select AI chat what role does NAMA have in ireland;

select AI chat what are the annual revenues of Oracle;

select AI chat who is the largest cloud computing provider;

select AI chat can you rank the cloud providers by income over the last 5 years;

select AI chat what are the benefits of using Oracle Cloud;

As you’d expect the results can be ‘kind of correct’, with varying levels of information given. I’ve tried these using Cohere and OpenAI, and their responses illustrate the need for careful testing and evaluation of the various LLMs to see which one suits your needs.

In my previous post, I gave some examples of using Select AI to query data in the Database based on a natural language request. Select AI takes that request and sends it, along with details of the objects listed in the create_profile, to the LLM. The LLM then sends back the SQL statement, which is then executed in the Database and the results are displayed. But what if you want to see the SQL generated by the LLM? To see the SQL you can use the ‘showsql’ parameter. Here are a couple of examples:

SQL> select AI showsql how many customers in San Francisco are married;

RESPONSE

_____________________________________________________________________________

SELECT COUNT(*) AS total_married_customers

FROM SH.CUSTOMERS c

WHERE c.CUST_CITY = 'San Francisco'

AND c.CUST_MARITAL_STATUS = 'Married'

SQL> select AI what customer is the largest by sales;

CUST_ID CUST_FIRST_NAME CUST_LAST_NAME TOTAL_SALES

__________ __________________ _________________ ______________

11407 Dora Rice 103412.66

SQL> select AI showsql what customer is the largest by sales;

RESPONSE

_____________________________________________________________________________

SELECT C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME, SUM(S.AMOUNT_SOLD) AS TOTAL_SALES

FROM SH.CUSTOMERS C

JOIN SH.SALES S ON C.CUST_ID = S.CUST_ID

GROUP BY C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME

ORDER BY TOTAL_SALES DESC

FETCH FIRST 1 ROW ONLY

The examples above that illustrate ‘showsql’ are kind of interesting. Careful consideration of how and where to use this is needed.

Where things get a little bit more interesting is with the ‘narrate’ parameter, which attempts to narrate or explain the output from the query. There are many use cases where this can be used to supplement existing dashboards, etc. The following are examples of using ‘narrate’ for the same two queries used above.

SQL> select AI narrate how many customers in San Francisco are married;

RESPONSE

________________________________________________________________

The total number of married customers in San Francisco is 18.

SQL> select AI narrate what customer is the largest by sales;

RESPONSE

_____________________________________________________________________________

To find the customer with the largest sales, you can use the following SQL query:

```sql

SELECT c.CUST_FIRST_NAME || ' ' || c.CUST_LAST_NAME AS CUSTOMER_NAME, SUM(s.AMOUNT_SOLD) AS TOTAL_SALES

FROM "SH"."CUSTOMERS" c

JOIN "SH"."SALES" s ON c.CUST_ID = s.CUST_ID

GROUP BY c.CUST_FIRST_NAME, c.CUST_LAST_NAME

ORDER BY TOTAL_SALES DESC

FETCH FIRST 1 ROW ONLY;

```

This query joins the "CUSTOMERS" and "SALES" tables on the customer ID and calculates the total sales for each customer. It then sorts the results in descending order of total sales and fetches only the first row, which represents the customer with the largest sales.

The result will be in the following format:

| CUSTOMER_NAME | TOTAL_SALES |

|---------------|-------------|

| Tess Drumm | 161882.79 |

In this example, the customer with the largest sales is "Tess Drumm" with a total sales amount of 161,882.79.

Looking at the outputs from using the ‘narrate‘ parameter, we can see the level of detail given and see the potential of including this kind of information in our applications, dashboards, etc. But to use this output some additional filtering and subsetting will need to be performed.
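As an example of the kind of filtering that might be needed, here is a minimal sketch that separates any fenced SQL from the descriptive text in a ‘narrate’ response and drops the markdown table rows. It assumes the response looks like the second example above, which is not guaranteed as LLM output varies. The function name is hypothetical.

```python
import re

FENCE = "`" * 3  # three backticks, the marker wrapped around the SQL in the response

SQL_BLOCK = re.compile(
    re.escape(FENCE) + r"(?:sql)?\s*(.*?)\s*" + re.escape(FENCE),
    re.DOTALL | re.IGNORECASE,
)


def split_narration(response):
    """Return (sql_statements, prose) extracted from a narrate response."""
    sql_statements = [match.strip() for match in SQL_BLOCK.findall(response)]
    prose = SQL_BLOCK.sub("", response)
    # Drop markdown table rows and blank lines, then join what is left.
    lines = [line.strip() for line in prose.splitlines()]
    kept = [line for line in lines if line and not line.startswith("|")]
    return sql_statements, " ".join(kept)
```

The prose part could then be shown on a dashboard, while the SQL part is kept for logging or review.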

The final parameter is called ‘explainsql’. While it may not be as useful as the ‘narrate’ parameter, it does have some potential uses. The ‘explainsql’ parameter attempts to explain, in natural language, what the SQL query generated from a natural language statement is attempting to do. Again using the same two examples, we get the following.

SQL> select AI explainsql what customer is the largest by sales;

RESPONSE

_____________________________________________________________________________

SELECT C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME, SUM(S.AMOUNT_SOLD) AS TOTAL_SALES

FROM SH.CUSTOMERS C

JOIN SH.SALES S ON C.CUST_ID = S.CUST_ID

GROUP BY C.CUST_ID, C.CUST_FIRST_NAME, C.CUST_LAST_NAME

ORDER BY TOTAL_SALES DESC

FETCH FIRST 1 ROW ONLY;

Explanation:

- The query selects the customer ID, first name, last name, and the sum of the amount sold from the CUSTOMERS and SALES tables.

- The CUSTOMERS and SALES tables are joined on the CUST_ID column.

- The results are grouped by customer ID, first name, and last name.

- The results are then ordered in descending order by the total sales.

- The FETCH FIRST 1 ROW ONLY clause is used to retrieve only the top row with the highest total sales.

SQL> select AI explainsql how many customers in San Francisco are married;

RESPONSE

_____________________________________________________________________________

SELECT COUNT(*) AS total_married_customers

FROM SH.CUSTOMERS c

WHERE c.CUST_CITY = 'San Francisco'

AND c.CUST_MARITAL_STATUS = 'Married';

This query selects the count of customers who are married and live in San Francisco. The table alias "c" is used for the CUSTOMERS table. The condition "c.CUST_CITY = 'San Francisco'" filters the customers who live in San Francisco, and the condition "c.CUST_MARITAL_STATUS = 'Married'" filters the customers who are married. The result is the total number of married customers in San Francisco.

Check out the other posts about Select AI.

EU AI Act has been passed by EU parliament

It feels like we’ve been hearing about and talking about the EU AI Act for a very long time now. But on Wednesday 13th March 2024, the EU Parliament finally voted to approve the Act. While this is a major milestone, we haven’t crossed the finish line. There are a few steps to complete, although these are minor steps and are part of the process.

The remaining timeline is:

- The EU AI Act will undergo final linguistic approval by lawyer-linguists in April. This is considered a formality step.

- It will then be published in the Official EU Journal

- 21 days after being published it will come into effect (probably in May)

- The Prohibited Systems provisions will come into force six months later (probably by end of 2024)

- All other provisions in the Act will come into force over the next 2-3 years

If you haven’t already started looking at and evaluating the various elements of AI deployed in your organisation, now is the time to start. It’s time to prepare and explore what changes, if any, you need to make. If you don’t, the penalties for non-compliance are hefty, with fines of up to €35 million or 7% of global turnover.

The first thing you need to address is the Prohibited AI Systems. The EU AI Act outlines the following, which will need to be addressed before the end of 2024:

- Manipulative and Deceptive Practices: systems that use subliminal techniques to materially distort a person’s decision-making capacity, leading to significant harm. This includes systems that manipulate behaviour or decisions in a way that the individual would not have otherwise made.

- Exploitation of Vulnerabilities: systems that target individuals or groups based on age, disability, or socio-economic status to distort behaviour in harmful ways.

- Biometric Categorisation: systems that categorise individuals based on biometric data to infer sensitive information like race, political opinions, or sexual orientation. This prohibition does not cover any labelling or filtering of lawfully acquired biometric datasets, such as images. There are also exceptions for law enforcement.

- Social Scoring: systems designed to evaluate individuals or groups over time based on their social behaviour or predicted personal characteristics, leading to detrimental treatment.

- Real-time Biometric Identification: The use of real-time remote biometric identification systems in publicly accessible spaces for law enforcement is heavily restricted, with allowances only under narrowly defined circumstances that require judicial or independent administrative approval.

- Risk Assessment in Criminal Offences: systems that assess the risk of individuals committing criminal offences based solely on profiling, except when supporting human assessment already based on factual evidence.

- Facial Recognition Databases: systems that create or expand facial recognition databases through untargeted scraping of images are prohibited.

- Emotion Inference in Workplaces and Educational Institutions: The use of AI to infer emotions in sensitive environments like workplaces and schools is banned, barring exceptions for medical or safety reasons.

In addition to the timeline given above we also have:

- 12 months after entry into force: Obligations on providers of general purpose AI models go into effect. Appointment of member state competent authorities. Annual Commission review of and possible amendments to the list of prohibited AI.

- after 18 months: Commission implementing act on post-market monitoring

- after 24 months: Obligations on high-risk AI systems specifically listed in Annex III, which includes AI systems in biometrics, critical infrastructure, education, employment, access to essential public services, law enforcement, immigration and administration of justice. Member states to have implemented rules on penalties, including administrative fines. Member state authorities to have established at least one operational AI regulatory sandbox. Commission review and possible amendment of the list of high-risk AI systems.

- after 36 months: Obligations for high-risk AI systems that are not prescribed in Annex III but are intended to be used as a safety component of a product, or the AI is itself a product, and the product is required to undergo a third-party conformity assessment under existing specific laws, for example, toys, radio equipment, in vitro diagnostic medical devices, civil aviation security and agricultural vehicles.

The EU has provided an official compliance check that helps identify which parts of the EU AI Act apply in a given use case.

Using Python for OCI Vision – Part 1

I’ve written a few blog posts over the past few weeks/months on how to use OCI Vision, which is part of the Oracle OCI AI Services. My blog posts have shown how to get started with OCI Vision, right up to creating your own custom models using this service.

In this post, the first in a series of blog posts, I’ll give you some examples of how to use these custom AI Vision models using Python. Being able to do this opens the models you create to a larger audience of developers, who can now easily integrate these custom models into their applications.

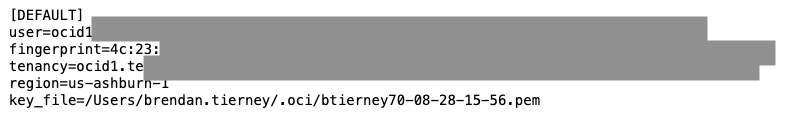

In a previous post, I covered how to set up and configure your OCI connection. Have a look at that post as you will need to have completed the steps in it before you can follow the steps below.

To inspect the config file we can spool the contents of that file

!cat ~/.oci/config

I’ve hidden some of the details as these are related to my Oracle Cloud account.

This allows us to quickly inspect that we have everything set up correctly.
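If you want to share or screenshot the output without hiding the details by hand, one option is to parse the file and mask the sensitive values before printing. The following is a minimal sketch using only the Python standard library. It assumes the config file uses the usual INI layout with a [DEFAULT] profile, and the function name and masking rule are my own choices for illustration.

```python
import configparser


def masked_oci_config(config_text, visible_keys=("region",)):
    """Parse OCI config text and mask every value except the keys listed as visible."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)

    def mask(key, value):
        if key in visible_keys:
            return value
        # Keep a short prefix so the kind of value is still recognisable.
        return value[:10] + "..." if len(value) > 10 else "***"

    # DEFAULT is handled separately because configparser does not list it as a section.
    profiles = {"DEFAULT": dict(parser.defaults())}
    for section in parser.sections():
        profiles[section] = dict(parser[section])
    return {
        name: {key: mask(key, value) for key, value in values.items()}
        for name, values in profiles.items()
    }
```

To run it against your own file, you could pass in `Path("~/.oci/config").expanduser().read_text()` (with `from pathlib import Path`) and print the returned dictionary.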

The next step is to load this config file, and its settings, into our Python environment.

import oci

config = oci.config.from_file()

config

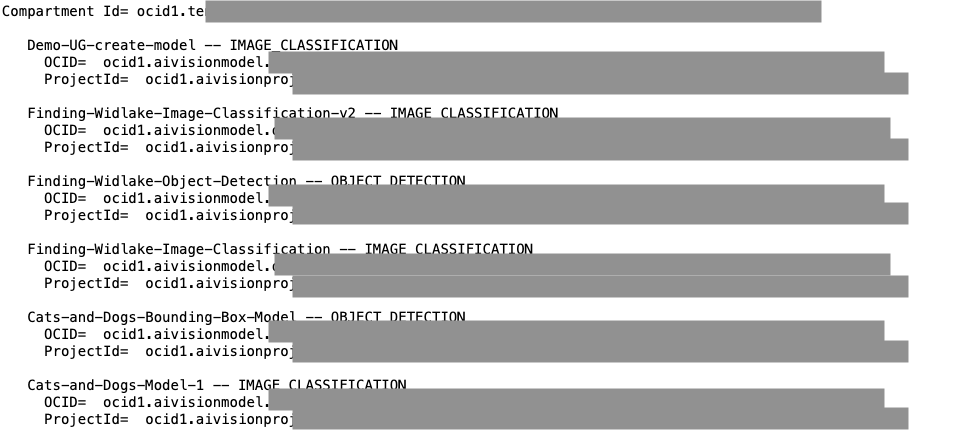

We can now list all the projects I’ve created in my compartment for OCI Vision services.

#My Compartment ID

COMPARTMENT_ID = "<compartment-id>"

#Create the OCI Vision client using the config loaded above

ai_service_vision_client = oci.ai_vision.AIServiceVisionClient(config)

#List all the AI Vision Projects available in My compartment

response = ai_service_vision_client.list_projects(compartment_id=COMPARTMENT_ID)

#response.data

for item in response.data.items:

    print('- ', item.display_name)

    print(' ProjectId= ', item.id)

    print('')

Which lists the following OCI Vision projects.

We can also list out all the Models in my various projects. When listing these I print out the OCID of each, as this is needed when we want to use one of these models to process an image. I’ve redacted these as there is a minor cost associated with each time these are called.

#List all the AI Vision Models available in My compartment

list_models = ai_service_vision_client.list_models(

# this is the compartment containing the model

compartment_id=COMPARTMENT_ID

)

print("Compartment Id=", COMPARTMENT_ID)

print("")

for item in list_models.data.items:

    print(' ', item.display_name, '--', item.model_type)

    print(' OCID= ',item.id)

    print(' ProjectId= ', item.project_id)

    print('')

I’ll have other posts in this series on using the pre-built and custom model to label different image files on my desktop.
