Analytics

Using NotebookLM to help with understanding Oracle Analytics Cloud or any other product

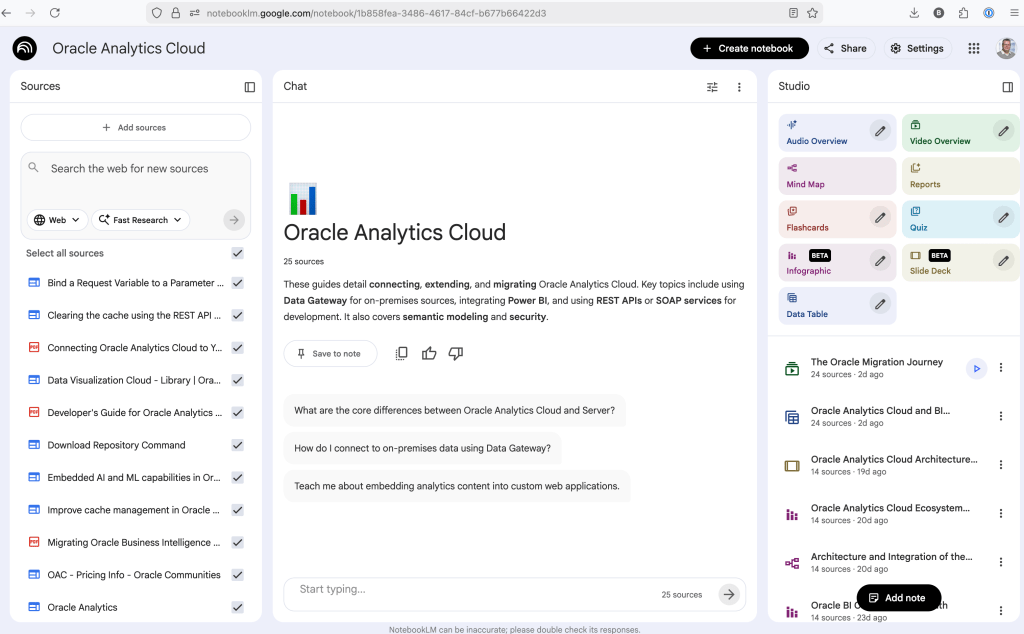

Over the past few months, we’ve seen a plethora of new LLM-related products and agents being released. One of these is NotebookLM from Google. The official description says “NotebookLM is an AI-powered research and note-taking tool from Google Labs that allows users to ground a large language model (like Gemini) in their own documents, such as PDFs, Google Docs, website URLs, or audio, acting as a personal, intelligent research assistant. It facilitates summarizing, analyzing, and querying information within these specific sources to create study guides, outlines, and, notably, “Audio Overviews” (podcast-style summaries)”.

Let’s have a look at using NotebookLM to help with answering questions and how it can help with understanding Oracle Analytics Cloud (OAC).

Yes, you’ll need a Google account, and yes, you need to be OK with uploading your documents to NotebookLM. Make sure you are not breaking any laws (IP, GDPR, etc.). It’s really easy to create your first notebook. Simply click on ‘Create new notebook’.

When the notebook opens, you can add your documents and webpages to it. These can be PDFs, audio files, text files, etc. Currently, there seems to be a limit of 50 documents and webpages that can be added.

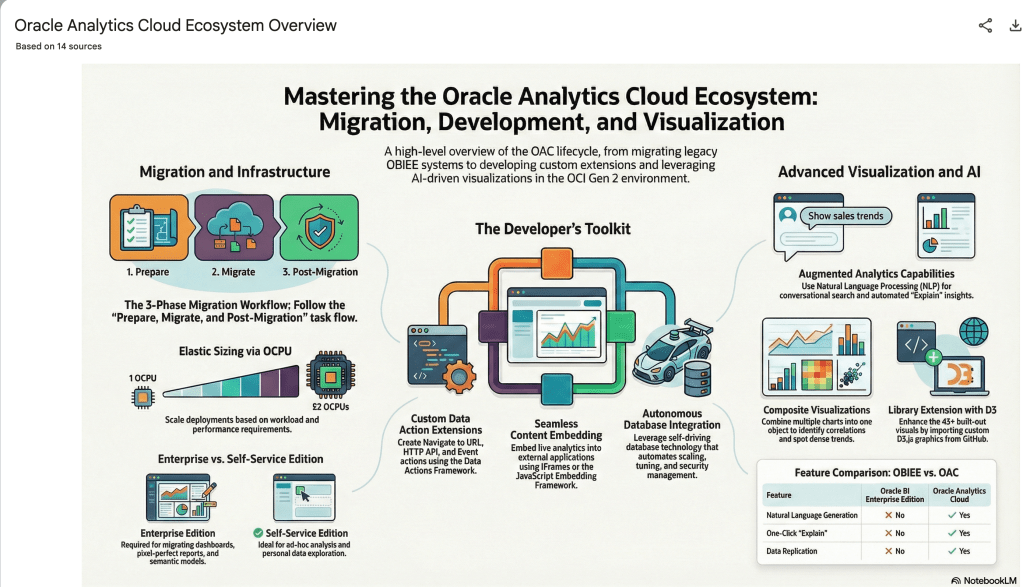

The main part of NotebookLM is a chatbot where you can ask questions, and NotebookLM will search through the documents and webpages to formulate an answer. In addition to this, there are features that allow you to generate an Audio Overview, Video Overview, Mind Map, Reports, Flashcards, Quiz, Infographic, Slide Deck and Data Table.

Before we look at some of these and what they have created for Oracle Analytics Cloud, there is a small warning. Some of these can take a long time to complete, that is, if they complete. I’ve had to run some of these features multiple times to get them to create. I’ve run all of the features, and the output from these can be seen on the right-hand side of the above image.

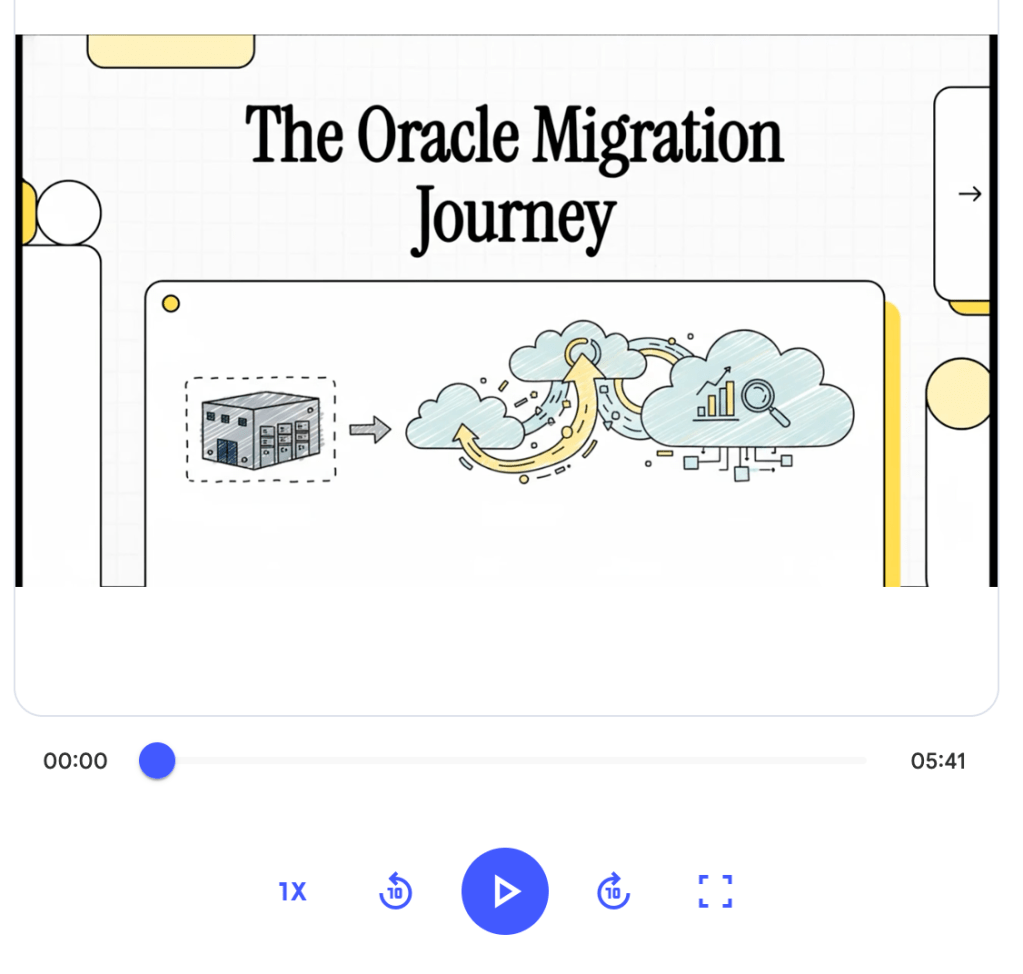

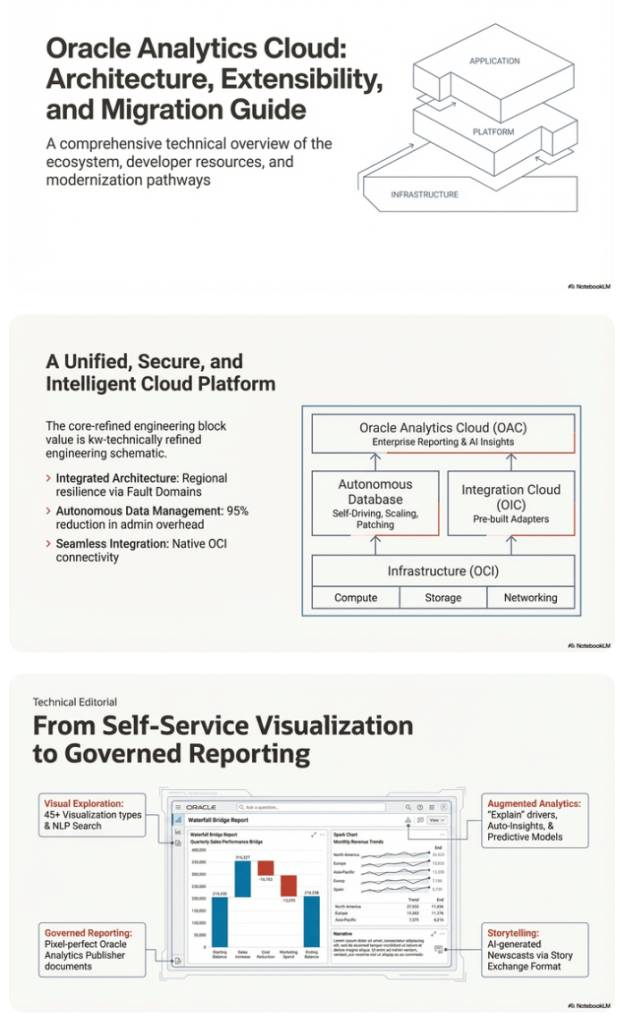

It created a 15-slide presentation on Oracle Analytics Cloud and its various features, and a five minute video on migrating OAC.

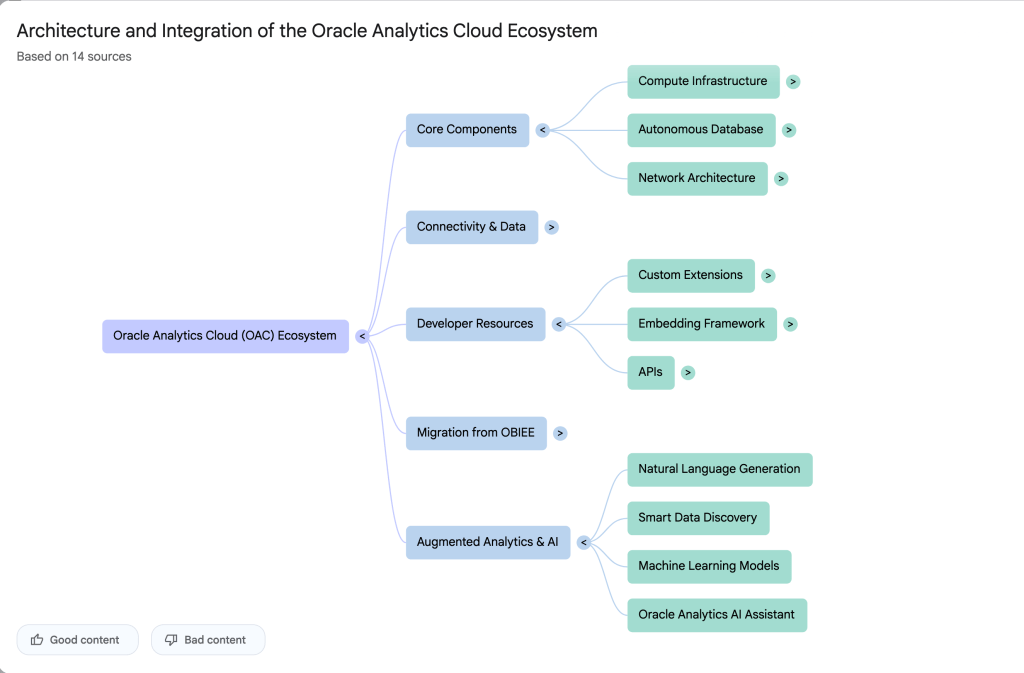

It also created a Mind-map, and an Infographic.

2024 Leaving Certificate Results – Inline

The 2024 Leaving Certificate results are out. Up, down and across the country there have been tears of joy and some muted celebrations. But there have been some disappointments too. Although the government has been talking about how the marks have been increased, with post mark adjustments, this doesn’t help students come to terms with their results.

In previous years, I’ve looked at the profile of marks across some (not all) of the Leaving Certificate subjects (the tools I used included an Oracle Database and Oracle Analytics Cloud, along with other tools). Check out these previous posts:

- Leaving Certificate 2023 – Inline or more adjustments

- CAO Points 2023

- Leaving Certificate 2022 – Inflation, deflation or in-line

- CAO Points 2022

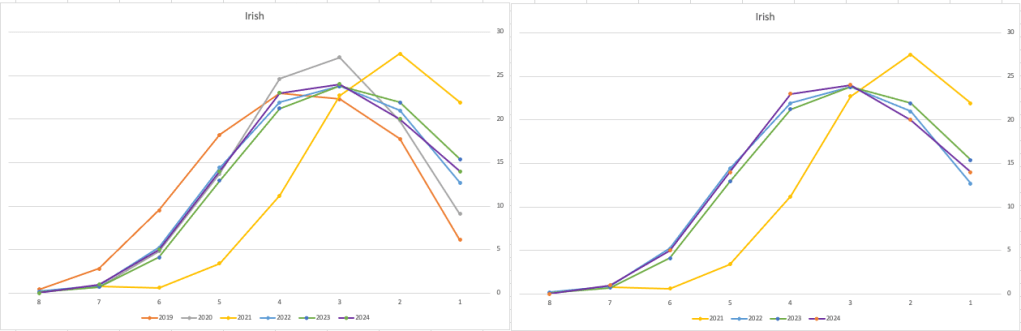

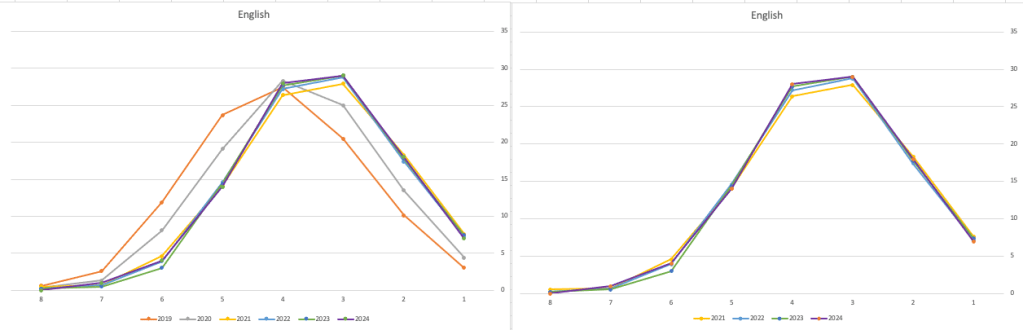

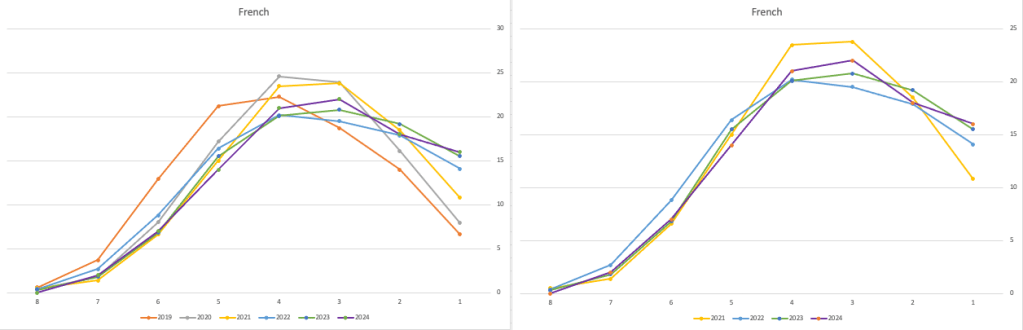

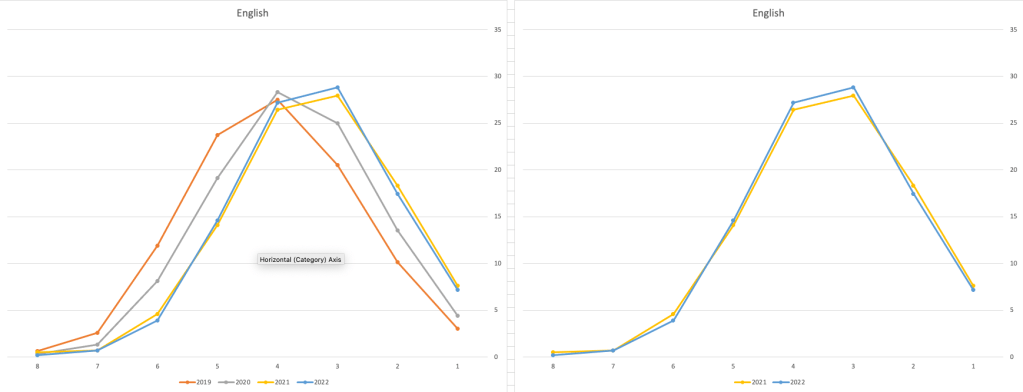

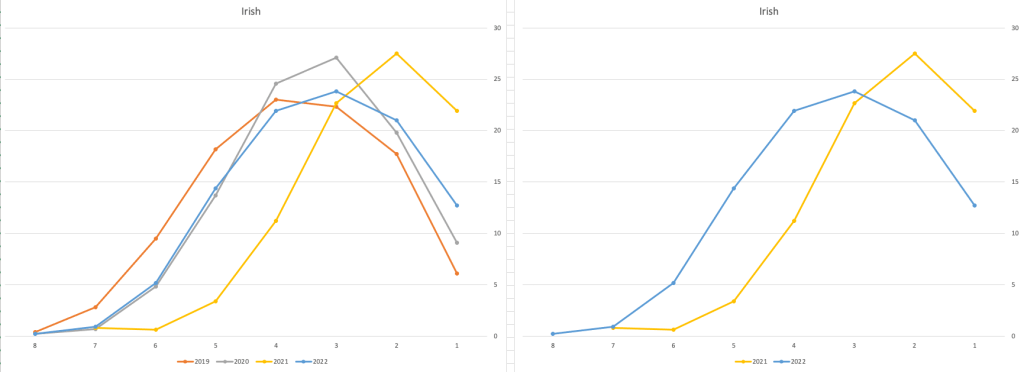

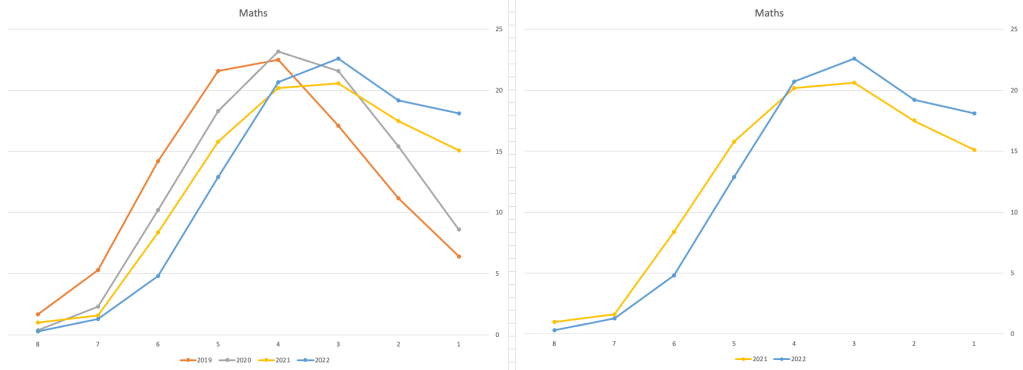

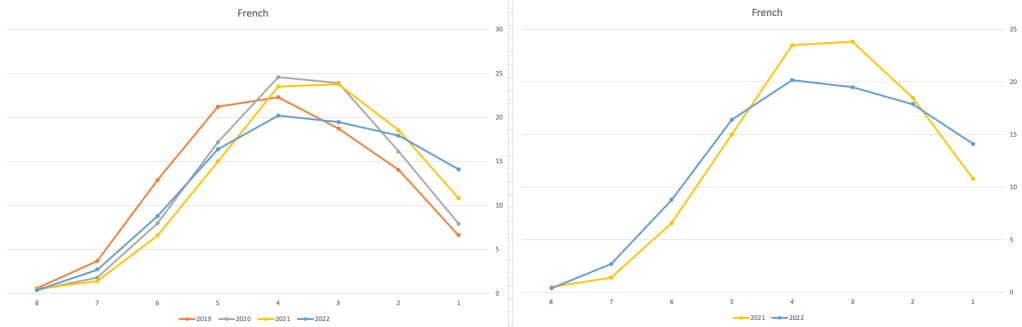

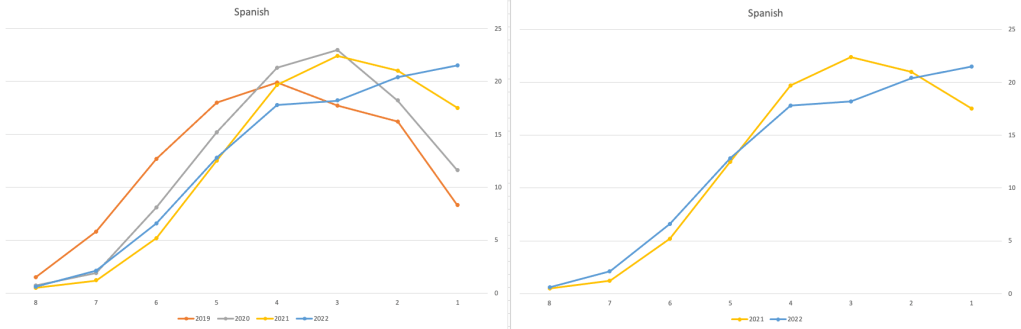

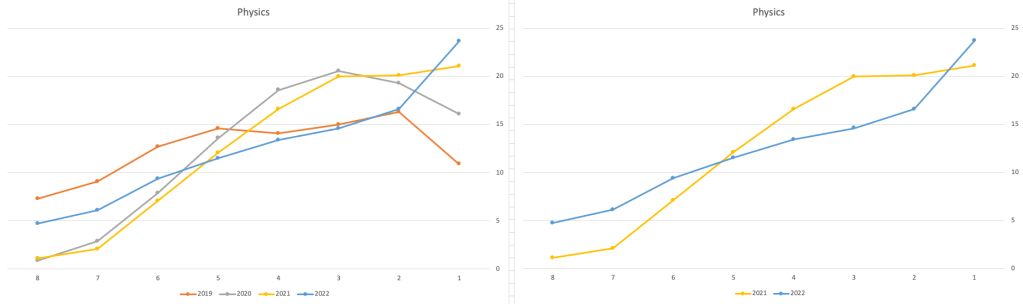

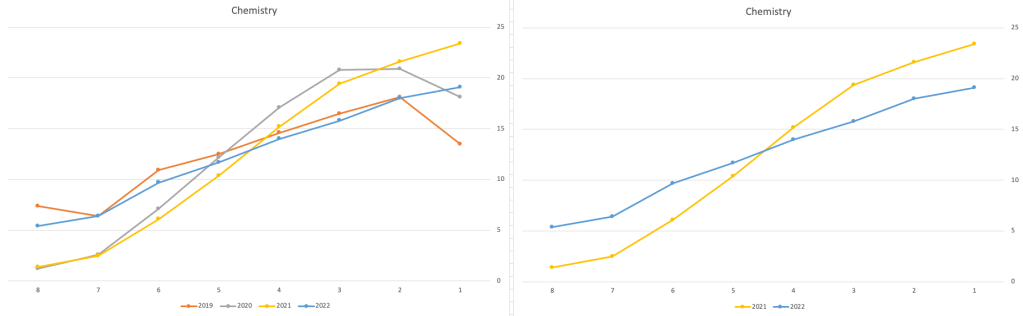

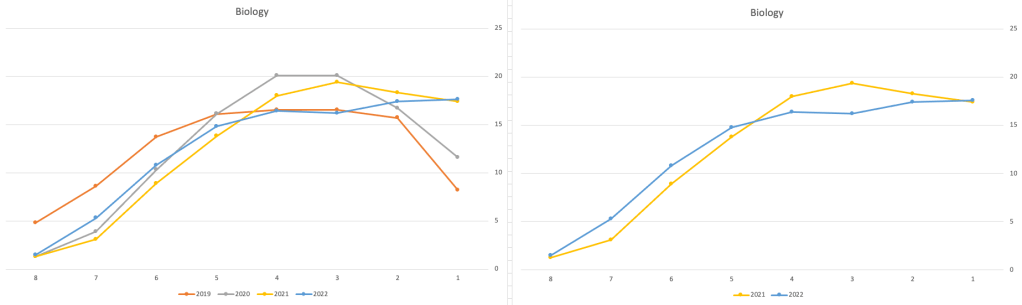

From analysing the results for 2024, both numerically and graphically, we can see the results this year are broadly in line with last year. That news might bring some joy to some students but will be slightly disappointing for others. You can see this for yourself from the graphics below. However, in some subjects, there appear to be some minor (yes, very minor) changes in the profiles, with a slight shift to the left, indicating a slight decrease in the higher grades and a corresponding increase in lower grades. But across many subjects, we have seen a slight increase in those achieving a H1 grade. The impact of these slight changes will be seen in the CAO points needed for courses in the 550+ range. But for the courses below 500 points, we might not see much of a change in CAO points, although there might be some minor fluctuations based on demand for certain courses, which is typical most years.

I’ll have another post looking at the CAO points after they are released, so look out for that post.

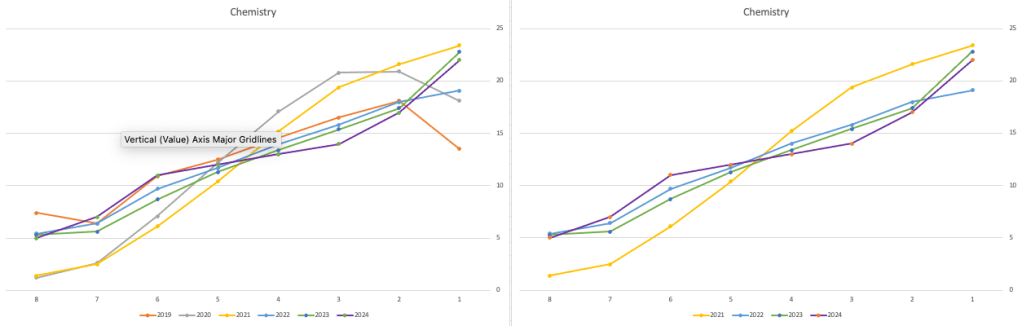

The charts below give the profile of marks for each year between 2019 (pre-Covid) and 2024. The chart on the left includes all years, and the chart on the right is for the last four years. This allows us to see if there have been any adjustments in the profiles over those years. For most subjects, we can see a marked reduction in marks since the exceptionally high marks of 2021. While some subjects are almost back to matching the 2019 profile (science subjects, Irish), for others the step back needed is small. Based on the messaging from the government, the stepping back will commence in 2025.

Back in April, an Irish Times article discussed the changes coming from 2025, where there will be a gradual return to “normal” (pre-Covid) profile of marks. Looking at the profile of marks over the past couple of years we can clearly see there has been some stepping back in the profile of marks. Some subjects are back to pre-Covid 2019 profiles. In some subjects, this is more evident than in others. They’ve used the phrase “on aggregate” to hide the stepping back in some subjects and less so in others.

CAO Points 2023 – Slight Deflation

There has been lots of talk and many news articles written about Grade Inflation over the past few years (the Covid years), and this year was no different. Most of the discussion this year began a couple of days before the Leaving Cert results were released last week and continued right up to the CAO publishing the points needed for each course. Yes, something needs to be done about the grading profiles to revert back to pre-Covid levels. There are many reasons why this is necessary. Perhaps the most important of these is to bring back some stability to the Leaving Cert results and the corresponding CAO Points for entry to University courses. Last year, we saw there was some minor stepping back or deflating of results. But this didn’t have much of an impact on points needed for University courses. But in 2023 we have seen a slight step back in the points needed. I mentioned this possibility in my post on the Leaving Cert profile of marks. I also mentioned the subject with the biggest step back in marks/grades was Maths, and it looks like this has had an impact on the CAO points needed.

In 2023, we have seen a drop in points for 60% of University courses. In most years (pre and post-Covid) there would always be some fluctuation of points but the fluctuations would be minor. In 2023, some courses have changed by 20+ points.
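As a rough illustration of how a figure like the 60% above can be worked out, here is a minimal pandas sketch. The course codes and points values are made up for illustration and are not the real CAO data, and the column names `points_2022` and `points_2023` are assumptions.

```python
import pandas as pd

# Hypothetical sample: entry points per course for two years (not real CAO data)
courses = pd.DataFrame({
    "course": ["C001", "C002", "C003", "C004", "C005"],
    "points_2022": [555, 498, 420, 366, 601],
    "points_2023": [543, 476, 425, 366, 590],
})

# Year-on-year change in points for each course
courses["change"] = courses["points_2023"] - courses["points_2022"]

# Share of courses whose points dropped, and the courses that moved by 20+ points
pct_dropped = (courses["change"] < 0).mean() * 100
big_moves = courses[courses["change"].abs() >= 20]

print(f"{pct_dropped:.0f}% of courses dropped in points")
print(big_moves[["course", "change"]])
```

With the real data loaded into the same shape, the same two expressions give the percentage of courses that dropped and the list of courses with the larger swings.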

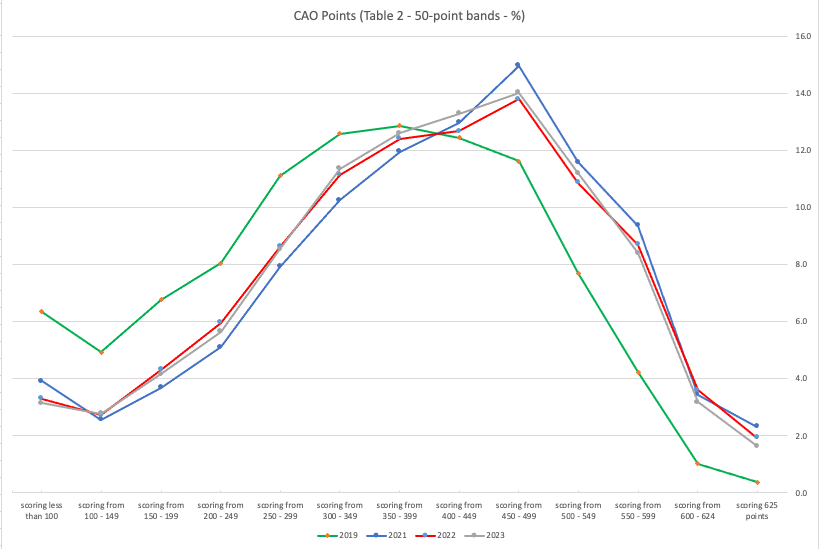

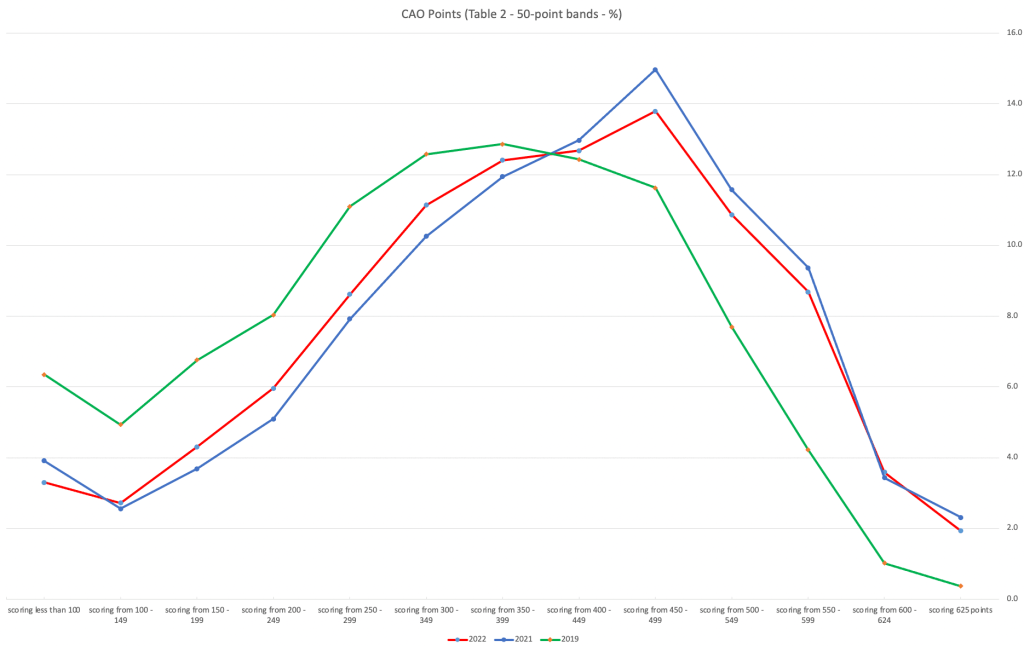

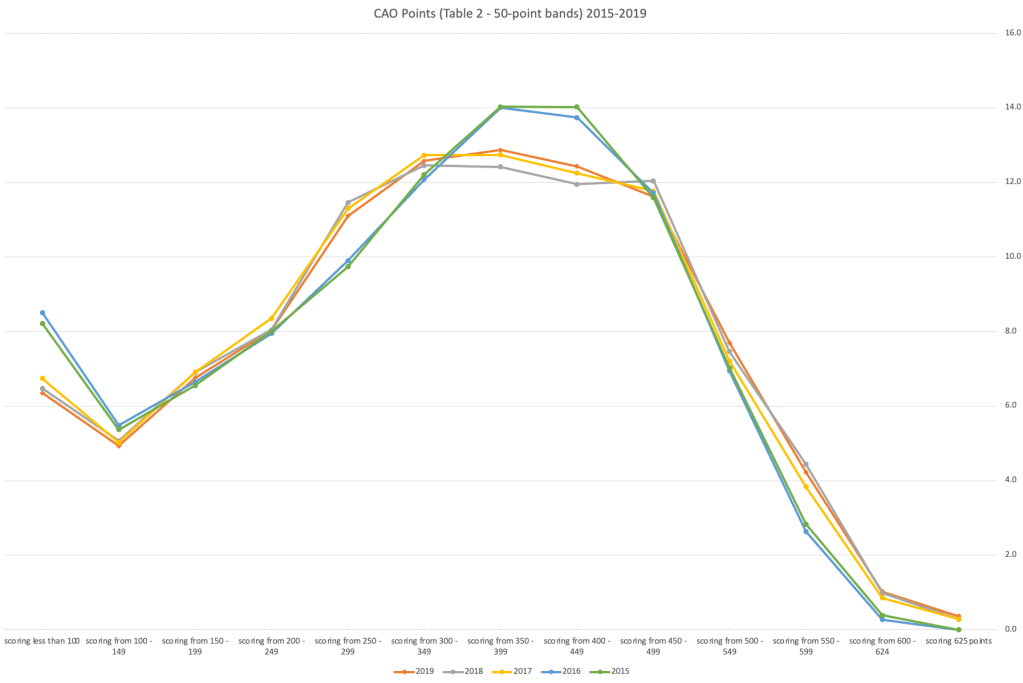

The following table and chart illustrate the profile of CAO points and the percentage of students who achieved this in ranges of 50 points.

From an initial look at the data and the chart, it appears the 2023 CAO points profile is very similar to that of 2022. But when you look a little closer a few things stand out. At the upper end of CAO points we see a small reduction in the percentage of students. This is reflected when you look at the University courses in this range. The points for these have reduced slightly in 2023 and we have fewer courses using random selection. If you now look at the 300-500 range, we see a slight increase in the percentage of students attaining these marks. But this doesn’t seem to be reflected in an increase in the points needed to gain entry to a course in that range. This could be due to additional places that Universities have made available across the board, although there are some courses where there is an increase.

In 2023, we have seen a change in the geographic spread of interest in University courses, with more demand/interest in Universities outside of the Dublin region. The lack of accommodation in Dublin and its cost is a major issue, and students have been looking elsewhere to study and to locations they can easily commute to. Although demand for Trinity and UCD remains strong, there was a drop in the number for TU Dublin. There are many reported factors for this, which include the accommodation issue and, for those who might have considered commuting, the positioning of the Grangegorman campus in Dublin, which does not make this easy, unlike Trinity, UCD and DCU.

I’ve the Leaving Cert grades by subject and CAO Points datasets in a Database (Oracle). This allows me to easily analyse the data annually and to compare them to previous years, using a variety of tools.

Machine Learning App Migration to Oracle Cloud VM

Over the past few years, I’ve been developing a Stock Market prediction algorithm and made some critical refinements to it earlier this year. As with all analytics, data science, machine learning and AI projects, testing is vital to ensure its performance, accuracy and sustainability. Taking such a project out of a lab environment and putting it into a production setting introduces all sorts of different challenges. Some of these challenges include being able to self-manage its own process, logging, traceability, error and event management, etc. Automation is key, and implementing all of these extra requirements takes way more code and time than developing the actual algorithm. Typically, the machine learning and algorithm code accounts for less than five percent of the actual code, and in some cases, it can be less than one percent!

I’ve come to the stage of deploying my App to a production-type environment, as I’ve been running it from my laptop and then a desktop for over a year now. It’s now 100% self-managing so it’s time to deploy. The environment I’ve chosen is using one of the Virtual Machines (VM) available on the Oracle Free Tier. This means it won’t cost me a cent (dollar or more) to run my App 24×7.

My App has three different components which use a core underlying machine learning predictions engine. Each is focused on a different set of stock markets. These markets operate in the different time zones of the US markets, European markets and Asian markets. Each will run on a slightly different schedule than the rest.

The steps outlined below take you through what I had to do to get my App up and running on the VM (Oracle Free Tier). It took about 20 minutes to complete everything.

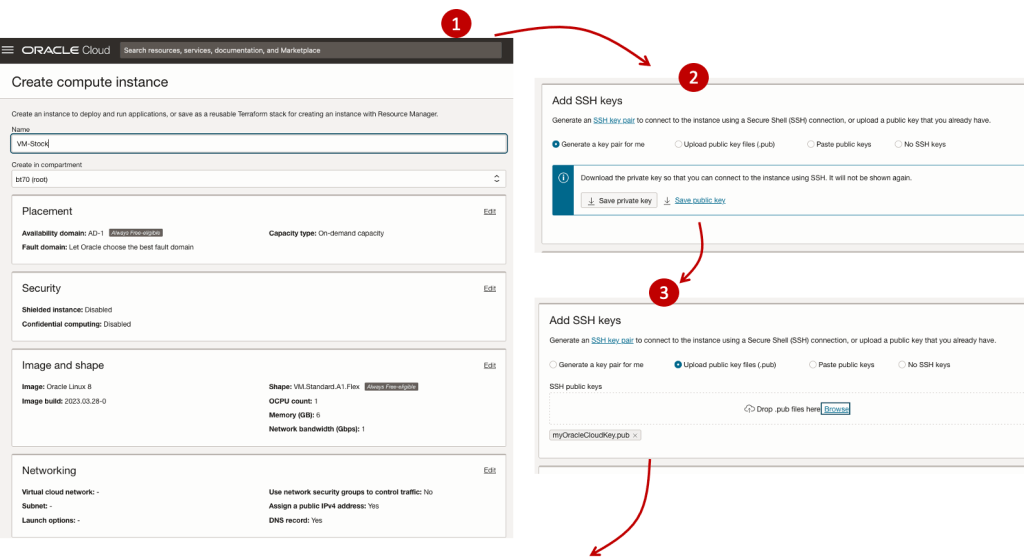

The first thing you need to do is create an SSH key file. There are a number of ways of doing this and the following is an example.

ssh-keygen -t rsa -N "" -b 2048 -C "myOracleCloudkey" -f myOracleCloudkey

This key file will be used during the creation of the VM and for logging into the VM.

Log into your Oracle Cloud account and, under Compute, you’ll find Instances and the option to create an instance, i.e. create a virtual machine.

Complete the Create Instance form and upload the public SSH key file you created earlier (ssh-keygen writes it alongside the private key with a .pub extension). Then click the Create button. This assumes you have networking already created.

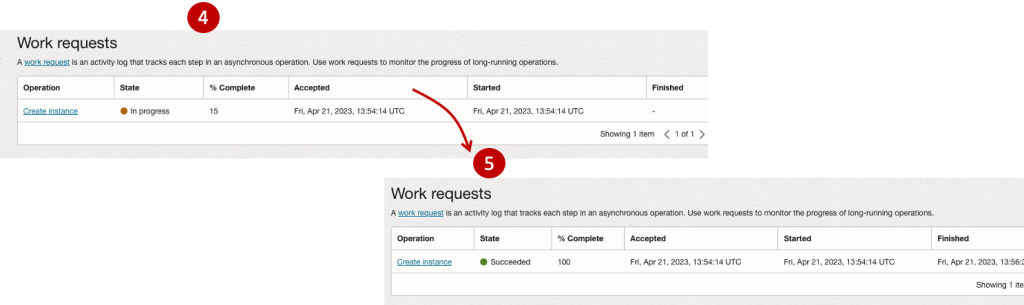

It will take a minute or two for the VM to be created and you can monitor the progress.

After it has been created you need to click on the start button to start the VM.

After it has started you can now log into the VM from a terminal window, using the public IP address

ssh -i myOracleCloudkey opc@xxx.xxx.xxx.xxx

After you’ve logged into the VM it’s a good idea to run an update.

[opc@vm-stocks ~]$ sudo yum -y update

Last metadata expiration check: 0:13:53 ago on Fri 21 Apr 2023 14:39:59 GMT.

Dependencies resolved.

========================================================================================================================

Package Arch Version Repository Size

========================================================================================================================

Installing:

kernel-uek aarch64 5.15.0-100.96.32.el8uek ol8_UEKR7 1.4 M

kernel-uek-core aarch64 5.15.0-100.96.32.el8uek ol8_UEKR7 47 M

kernel-uek-devel aarch64 5.15.0-100.96.32.el8uek ol8_UEKR7 19 M

kernel-uek-modules aarch64 5.15.0-100.96.32.el8uek ol8_UEKR7 59 M

Upgrading:

NetworkManager aarch64 1:1.40.0-6.0.1.el8_7 ol8_baseos_latest 2.1 M

NetworkManager-config-server noarch 1:1.40.0-6.0.1.el8_7 ol8_baseos_latest 141 k

NetworkManager-libnm aarch64 1:1.40.0-6.0.1.el8_7 ol8_baseos_latest 1.9 M

NetworkManager-team aarch64 1:1.40.0-6.0.1.el8_7 ol8_baseos_latest 156 k

NetworkManager-tui aarch64 1:1.40.0-6.0.1.el8_7 ol8_baseos_latest 339 k

...

...

The VM is now ready for setting up and installing my App. The first step is to install Python, as all my code is written in Python.

[opc@vm-stocks ~]$ sudo yum install -y python39

Last metadata expiration check: 0:20:35 ago on Fri 21 Apr 2023 14:39:59 GMT.

Dependencies resolved.

========================================================================================================================

Package Architecture Version Repository Size

========================================================================================================================

Installing:

python39 aarch64 3.9.13-2.module+el8.7.0+20879+a85b87b0 ol8_appstream 33 k

Installing dependencies:

python39-libs aarch64 3.9.13-2.module+el8.7.0+20879+a85b87b0 ol8_appstream 8.1 M

python39-pip-wheel noarch 20.2.4-7.module+el8.6.0+20625+ee813db2 ol8_appstream 1.1 M

python39-setuptools-wheel noarch 50.3.2-4.module+el8.5.0+20364+c7fe1181 ol8_appstream 497 k

Installing weak dependencies:

python39-pip noarch 20.2.4-7.module+el8.6.0+20625+ee813db2 ol8_appstream 1.9 M

python39-setuptools noarch 50.3.2-4.module+el8.5.0+20364+c7fe1181 ol8_appstream 871 k

Enabling module streams:

python39 3.9

Transaction Summary

========================================================================================================================

Install 6 Packages

Total download size: 12 M

Installed size: 47 M

Downloading Packages:

(1/6): python39-pip-20.2.4-7.module+el8.6.0+20625+ee813db2.noarch.rpm 23 MB/s | 1.9 MB 00:00

(2/6): python39-pip-wheel-20.2.4-7.module+el8.6.0+20625+ee813db2.noarch.rpm 5.5 MB/s | 1.1 MB 00:00

...

...

Next copy the code to the VM, set up the environment variables and create any necessary directories required for logging. The final part of this is to download the connection Wallet for the Database. I’m using the Python library oracledb, as this requires no additional setup.
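To make the wallet step a little more concrete, here is a minimal sketch of how the connection settings might be gathered from environment variables and passed to oracledb in its default Thin mode. The environment variable names (`APP_DB_USER` and so on) and the helper functions are assumptions for illustration, not the names used in my App.

```python
import os

def wallet_connect_args(env=os.environ):
    """Collect connection settings from environment variables (names are assumptions)."""
    wallet_dir = env["APP_WALLET_DIR"]          # folder holding the unzipped wallet
    return {
        "user": env["APP_DB_USER"],
        "password": env["APP_DB_PASSWORD"],
        "dsn": env["APP_DB_DSN"],               # a service alias from tnsnames.ora
        "config_dir": wallet_dir,               # where tnsnames.ora lives
        "wallet_location": wallet_dir,          # where ewallet.pem lives
        "wallet_password": env["APP_WALLET_PASSWORD"],
    }

def connect():
    # Imported here so the settings helper can be used without the driver installed
    import oracledb
    return oracledb.connect(**wallet_connect_args())
```

Keeping the credentials in environment variables means the same code can run unchanged on a laptop and on the VM, with only the environment differing.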

Then install all the necessary Python libraries used in the code, for example, pandas, matplotlib, tabulate, seaborn, telegram, etc (this is just a subset of what I needed). For example here is the command to install pandas.

pip3.9 install pandas

After all of that, it’s time to test the setup to make sure everything runs correctly.

The final step is to schedule the App/Code to run. Before setting the schedule, do a quick test to see what time zone the VM is running in. Run the date command and you can see what it is. In my case, the VM is running on GMT, which was one hour off my local time. Allowing for this adjustment and for daylight saving time, the schedule can be set around the market opening times. The following example illustrates setting up crontab to run the App, Monday-Friday, between 13:00-22:00 and at 5-minute intervals. Open crontab and edit the schedule and command. The following is an example.

> crontab -e

*/5 13-22 * * 1-5 python3.9 /home/opc/Stocks.py >Stocks.txt

For some stock market trading apps, you might want it to run more frequently (than every 5 minutes) or less frequently depending on your strategy.
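Working out which hours to put in the schedule is easy to get wrong around daylight saving changes. The following is a small sketch, using only the Python standard library, of converting a local market time to the UTC hour a GMT-based VM would see. The function name `local_to_utc_hour` is made up for this example, and the 09:30 New York opening time is used purely as an illustration.

```python
from datetime import datetime, time
from zoneinfo import ZoneInfo

def local_to_utc_hour(day, local_time, market_tz):
    """Return the UTC hour matching a local market time on a given date."""
    local_dt = datetime.combine(day, local_time, tzinfo=ZoneInfo(market_tz))
    return local_dt.astimezone(ZoneInfo("UTC")).hour

# US markets open at 09:30 New York time; the UTC hour depends on daylight saving
summer = datetime(2023, 7, 3).date()
winter = datetime(2023, 1, 3).date()
print(local_to_utc_hour(summer, time(9, 30), "America/New_York"))
print(local_to_utc_hour(winter, time(9, 30), "America/New_York"))
```

The two dates give different UTC hours, which is why the hour range in the crontab entry may need revisiting when the clocks change.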

After scheduling the components for each of the Geographic Stock Market areas, the instant messaging of trades started to appear within a couple of minutes. After a little monitoring and validation checking, it was clear everything was running as expected. It was time to sit back and relax and see how this adventure unfolds.

For anyone interested, the App does automated trading with different brokers across the markets, while logging all events and trades to an Oracle Autonomous Database (Free Tier = no cost), and sends instant messages to me notifying me of the automated trades. All I have to do is Nothing, yes Nothing, only to monitor the trade notifications. I mentioned earlier the importance of testing, and with back-testing of the recent changes/improvements (as of the date of post), the App has given a minimum of 84% annual return each year for the past 15 years. Most years the return has been a lot more!

Automated Data Visualizations in Python

Creating data visualizations in Python can be a challenge. For some it can be easy, but most people (particularly those new to the language) always have to search for the commands in the documentation or with a search engine. Over the past few years we have seen more and more libraries becoming available to assist with many of the routine and tedious steps in most data science and machine learning projects. I’ve written previously about some data profiling libraries in Python. These are good up to a point, but additional work/code is needed to explore the data to suit your needs. One of these Python libraries, designed to make your initial work on a new data set easier, is called AutoViz. It’s good to see there is continued development work on this library, which can be a real help for creating initial sets of charts for all the variables in your data set. It also has some additional features which make it very useful and cut down on some of the additional code you might need to write.

The outputs from AutoViz are very extensive, and are just too long to show in this post. The images below will give you an indication of what is typically generated. I’d encourage you to install the library and run it on one of your data sets to see the full extent of what it can do. For this post, I’ll concentrate on some of the commands/parameters/settings to get the most out of AutoViz.

Firstly there is the install via pip command or install using Anaconda.

pip3 install autoviz

For comparison purposes I’m going to use the same data set as I’ve used in the data profiling post (see above). Here’s a quick snippet from that post to load the data and perform the data profiling (see post for output).

import pandas as pd

import pandas_profiling as pp

#load the data into a pandas dataframe

df = pd.read_csv("/Users/brendan.tierney/Downloads/Video_Games_Sales_as_at_22_Dec_2016.csv")

We can now skip to using AutoViz. It supports the loading and analyzing of data sets directly from a file or from a pandas dataframe. In the following example I’m going to use the dataframe (df) created above.

from autoviz import AutoViz_Class

AV = AutoViz_Class()

df2 = AV.AutoViz(filename="", dfte=df) #for a file, fill in the filename and remove dfte parameter

This will analyze the data and create lots and lots of charts for you. Some are shown in the following image. One of the helpful features is the ‘data cleaning improvements’ section, where it has performed a data quality assessment and makes some suggestions to improve the data, maybe as part of the data preparation/cleaning step.

There is also an option for creating different types of output, with perhaps the Bokeh charts being particularly useful.

- chart_format='bokeh': interactive Bokeh dashboards are plotted in Jupyter Notebooks.

- chart_format='server': dashboards will pop up for each kind of chart on your web browser.

- chart_format='html': interactive Bokeh charts will be silently saved as Dynamic HTML files under the AutoViz_Plots directory.

df2 = AV.AutoViz(filename="", dfte=df, chart_format='bokeh')

The next example allows for the report and charts to be focused on a particular dependent or target variable, particularly in scenarios where classification will be used.

df2 = AV.AutoViz(filename="", dfte=df, depVar="Platform")

A little warning when using this library. It can take a little bit of time for it to run and create all the different charts. On the flip side, it saves you from having to write lots and lots of code!

Migrating SAS files to CSV and database

Many organizations have been using SAS Software to analyse their data for many decades. As with most technologies, organisations will move to alternative technologies at some point. Recently I’ve experienced this kind of move. In doing so, any data stored in a format for the older technology needed to be moved or migrated to the newer technology. SAS Software can process data in a variety of formats, one of which is its own internal format. Thankfully Pandas in Python has a function to read such SAS files into a pandas dataframe, from which they can be saved into some alternative format such as CSV. The following code illustrates this conversion, where it takes all the SAS files in a particular directory, converts them to CSV and saves the new files in a destination directory. It also copies any existing CSV files to the new destination, while ignoring any other files. The following code is helpful for batch processing of files.

import os
import shutil
import pandas as pd

#define the Source and Destination directories
source_dir='/Users/brendan.tierney/Dropbox/4-Datasets/SAS_Data_Sets'
dest_dir=source_dir+'/csv'

#What file extensions to read and convert
file_ext='.sas7bdat'

#Create output directory if it does not already exist
if not os.path.exists(dest_dir):
    os.mkdir(dest_dir)
else: #If directory exists, delete all files in it
    for filename in os.listdir(dest_dir):
        os.remove(os.path.join(dest_dir, filename))

#Process each file
for filename in os.listdir(source_dir):
    #Process the SAS file
    if filename.endswith(file_ext):
        print('.processing file :',filename)
        print('...converting file to csv')
        df=pd.read_sas(os.path.join(source_dir, filename))
        #Save using a .csv extension instead of the SAS extension
        csv_name=os.path.splitext(filename)[0]+'.csv'
        df.to_csv(os.path.join(dest_dir, csv_name))
        print('.....finished creating CSV file')
    elif filename.endswith('.csv'):
        #Copy any CSV files to the Destination Directory
        print('.copying CSV file')
        shutil.copy(os.path.join(source_dir, filename), os.path.join(dest_dir, filename))
        print('.....finished copying CSV file')
    else:
        #Ignore any other type of files (this includes the csv sub-directory)
        print('.ignoring file :',filename)

print('--Finished--')

That’s it. All the files have now been converted and located in the destination directory.
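One thing to watch for with this kind of conversion is that character columns read by pandas read_sas can come back as bytes rather than strings when no encoding is given, which then show up in the CSV as values like b'Alice'. read_sas accepts an encoding parameter for this. As an alternative, the sketch below decodes such columns after loading. The helper name `decode_bytes_columns` and the sample data are made up for illustration, and UTF-8 is an assumption about the source files.

```python
import pandas as pd

def decode_bytes_columns(df, encoding="utf-8"):
    """Return a copy of df with bytes values in object columns decoded to str."""
    out = df.copy()
    for col in out.select_dtypes(include="object").columns:
        out[col] = out[col].map(
            lambda v: v.decode(encoding) if isinstance(v, bytes) else v
        )
    return out

# Hypothetical frame mimicking what read_sas can return for character columns
sample = pd.DataFrame({"name": [b"Alice", b"Bob"], "score": [1.5, 2.0]})
clean = decode_bytes_columns(sample)
print(clean)
```

Calling this on the dataframe just before to_csv keeps the text columns readable in the output files.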

For most, the above might be all you need, but sometimes you’ll need to move the data to the newer technology. In most cases the newer technology will easily use the CSV files. But in some instances your final destination might be a database. In my scenario I use the csv2db app developed by Gerald Venzl. You can download the code from GitHub. You can use this to load CSV files into Oracle, MySQL, PostgreSQL, SQL Server and Db2 databases. Here’s an example of the command line to load into an Oracle Database.

csv2db load -f /Users/brendan.tierney/Dropbox/4-Datasets/SAS_Data_Sets/csv -t pva97nk -u analytics -p analytics -d PDB1
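If you would rather stay in Python for a small load, the general idea of reading a CSV and batch-inserting its rows into an existing table can be sketched as follows. This is not how csv2db is implemented. It uses an in-memory SQLite database from the standard library as a stand-in so the example runs anywhere, and the table, columns and data are made up.

```python
import csv
import io
import sqlite3

def load_csv(conn, table, csv_file):
    """Insert all rows of a CSV (with header row) into an existing table."""
    reader = csv.reader(csv_file)
    header = next(reader)
    placeholders = ", ".join("?" for _ in header)
    columns = ", ".join(header)
    # Table and column names are interpolated, so only use trusted input here
    sql = f"INSERT INTO {table} ({columns}) VALUES ({placeholders})"
    rows = list(reader)
    conn.executemany(sql, rows)
    conn.commit()
    return len(rows)

# In-memory SQLite database and made-up data, purely for illustration
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE demo (id INTEGER, name TEXT)")
data = io.StringIO("id,name\n1,alpha\n2,beta\n")
print(load_csv(conn, "demo", data))
```

Other database drivers use different placeholder styles, so the insert statement would need adjusting for them.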

CAO Points 2022 – Grade inflation, deflation or in-line

Last week I wrote a blog post analysing the Leaving Cert results over the past 3-8 years. Part of that post also looked at the claim from the Dept of Education saying the results in 2022 would be “in-line on aggregate” with the results from 2021. The outcome of the analysis was that grade deflation was very evident in many subjects, but when analysed and profiled at a very high level, the results did look similar.

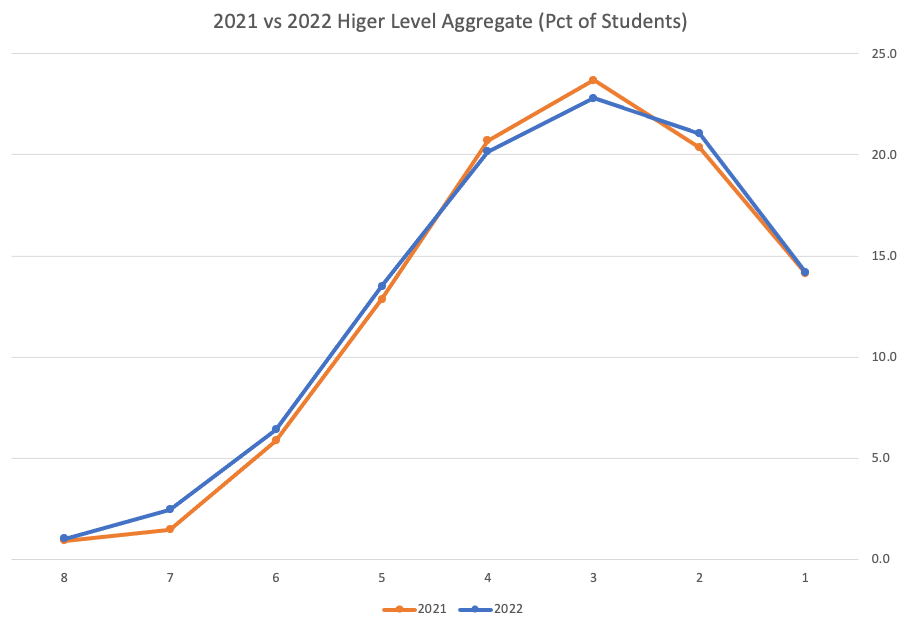

I didn’t go into how that might impact the CAO (Central Applications Office) Points. If there was deflation in some of the core and most popular subjects, then you might conclude there could be some changes in the profile of CAO Points being awarded, and that in turn would cause a small change in the CAO Points needed for a lot of University courses. But not all of them: as we saw last week, there was an increased number of students getting grades in the H4-H7 range. This could mean a small decrease in points for courses in the 520+ range, and a small increase in points needed in the 300-500-ish range.

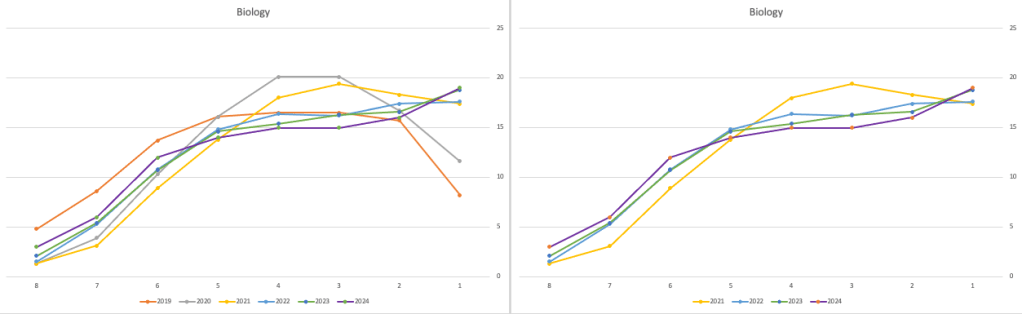

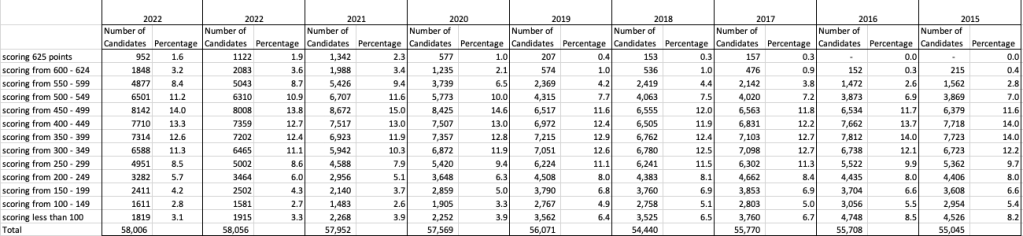

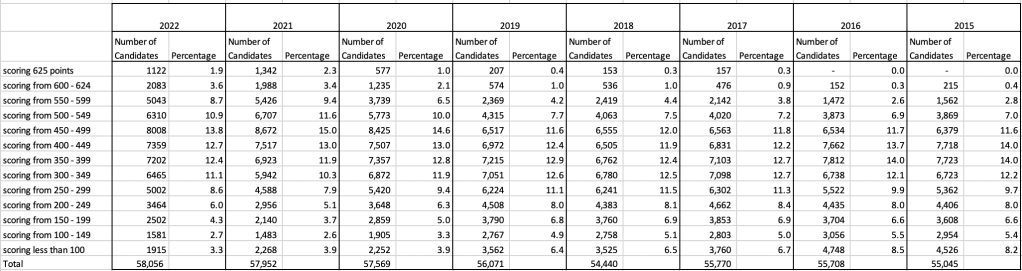

The CAO have published the number of students in each 10 point range. I’ve compared the 2022 data with each year going back to 2015. The following table is a high level summary of the results in 50 point ranges.

An initial look at these numbers and percentages might suggest points are similar to last year and even 2020. But for 2015-2019 the similarity is closer. Again looking back at the previous blog post, we can see the results profiles for 2015-2019 are broadly similar, which does indicate some normalisation might have been happening each year. The following chart illustrates the percentage of students who achieved points in each range.
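Rolling the published 10 point ranges up into 50 point ranges is a simple grouping exercise. Here is a minimal pandas sketch of that step. The student counts are invented for illustration and are not the published CAO figures, and the column names are assumptions.

```python
import pandas as pd

# Hypothetical counts of students per 10-point band (not the published CAO figures)
bands = pd.DataFrame({
    "band_start": [300, 310, 320, 330, 340, 350, 360, 370, 380, 390],
    "students":   [120, 130, 125, 140, 135, 150, 145, 160, 155, 140],
})

# Map each 10-point band to the 50-point range that contains it
bands["range_start"] = (bands["band_start"] // 50) * 50

# Total students per 50-point range and their share of all students
summary = bands.groupby("range_start")["students"].sum().to_frame()
summary["pct"] = summary["students"] / summary["students"].sum() * 100
print(summary)
```

Repeating this per year and placing the percentage columns side by side gives the kind of year-by-year comparison discussed in this post.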

From the above we can see the profile is similar across 2015-2019, although there does seem to be a flattening of the curve between 2015-2016!

Let’s now have a look at 2019 (the last pre-Covid year), 2021 and 2022. This will allow us to compare the “inflated” years to the last “normal” year.

This chart clearly shows a shifting of the profile to the left for the red line which represents 2022. This also supports my blog post last week, and that the Dept of Education has started the process of deflating marks.

Based on this shifting/deflating of marks, we could see the grade/CAO Points profiles reverting back to almost the 2019 profile by 2025. For students sitting the Leaving Cert in 2023, there will be another shift to the left, with another similar shift in 2024. In 2024, the students will be the last group to sit the Leaving Cert who were badly affected during the Covid years. Many of them lost large chunks of school and many didn’t sit the Junior Cert. I’d predict 2025 will be the first time the marks/points profiles match pre-Covid years.

For this analysis I’ve used a variety of tools including Excel, Python and Oracle Analytics.

The Dataset used can be found under Dataset menu, and listed as ‘CAO Points Profiles 2015-2022’. Also, check out the Leaving Certificate 2015-2022 dataset.

Leaving Cert 2022 Results – Inflation, deflation or in-line!

The Leaving Certificate 2022 results are out. Up and down the country there are people who are delighted with their results, while others are disappointed, and lots of other emotions.

The Leaving Certificate is the terminal examination for secondary education in Ireland, with most students being examined in seven subjects, with their best six grades counting towards their “points”, which in turn determines what university course they might get. Check out this link to learn more about the Leaving Certificate.

The Dept of Education has been saying, for several months, that the results this year (2022) will be “in-line on aggregate” with the results from 2021. There have been some concerns about grade inflation in 2021 and the impact it will have on the students in 2022 and future years. At some point the Dept of Education needs to address this grade inflation and look to revert back to the normal profile of grades pre-Covid.

Let’s have a look to see if this is true, and if it still holds when we look a little deeper. Do the aggregate results hide grade deflation in some subjects?

For the analysis presented in this blog post, I’ve just looked at results at Higher Level across all subjects, and for the deeper dive I’ll look at some of the most popular subjects.

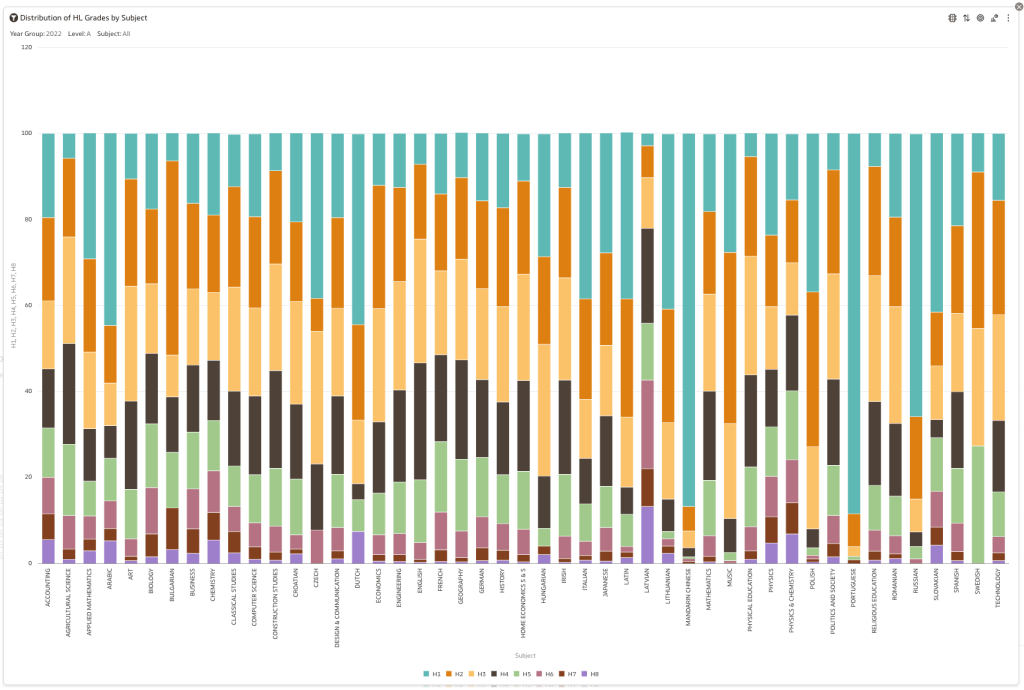

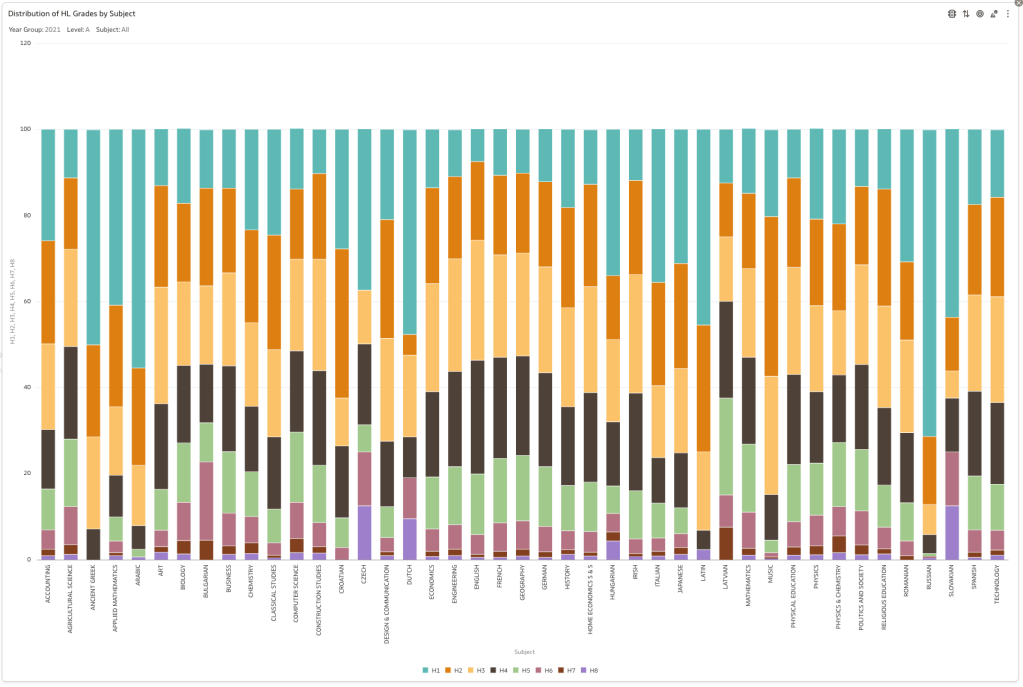

Firstly let’s have a quick look at the distribution of grades by subject for 2022 and 2021.

Remember the Dept of Education said the 2022 results should be in-line with the results of 2021. This required them to apply some adjustments, after marking the exam scripts, to give an updated profile. The following chart shows this comparison between the two years. On initial inspection we can see it is broadly similar. This is good, right? It kind of is and at a high level things look broadly in-line and maybe we can believe the Dept of Education. Looking a little closer we can see a small decrease in the H2-H4 range, and a slight increase in the H5-H8.

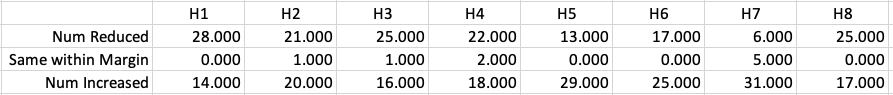

Let’s dive a little deeper. When we compare the grade profile of students in 2021 and 2022, how many subjects increased the number of students at each grade, how many decreased, and how many stayed approximately the same? The table below shows the results and only counts a change if it is greater than 1% (to allow for minor variations between years).

This table is very interesting in that more subjects decreased their H1s, with some variation for the H2-H4s, while for the lower range of H5-H7 we can see there has been an increase in grades. If I increase the margin to 3% we get slightly different results, but only minor changes.

“in-line on aggregate” might be holding true, although there appears to be a slight increase in the numbers getting the lower grades. This might indicate either more of an adjustment for weaker students and/or a bit of a downshifting of grades from the H2-H4 range. But at the higher end, more subjects reduced than increased. The overall (aggregate) numbers are potentially masking movements in grade profiles.
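The counting described here can be sketched in a few lines of pandas. The percentages below are invented for three placeholder subjects and two grades, and treating the 1% margin as one percentage point is an assumption made for this example.

```python
import pandas as pd

# Hypothetical percentage of students at each grade, per subject (not real data)
pct_2021 = pd.DataFrame(
    {"H1": [10.0, 8.0, 12.0], "H2": [20.0, 18.0, 22.0]},
    index=["Subject A", "Subject B", "Subject C"],
)
pct_2022 = pd.DataFrame(
    {"H1": [8.5, 8.4, 13.5], "H2": [20.5, 16.0, 22.0]},
    index=["Subject A", "Subject B", "Subject C"],
)

threshold = 1.0  # percentage points; smaller changes count as "same"
diff = pct_2022 - pct_2021

# For each grade, count the subjects that moved up, down or stayed within the margin
counts = pd.DataFrame({
    "increased": (diff > threshold).sum(),
    "decreased": (diff < -threshold).sum(),
    "same": (diff.abs() <= threshold).sum(),
})
print(counts)
```

Changing `threshold` to 3.0 reruns the same comparison with the wider margin.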

Let’s now have a look at some of the core subjects of English, Irish and Mathematics.

For English, it looks like they fitted to the curve perfectly, keeping grades in-line between the two years. Mathematics is a little different, with a slight increase in grades. But when you look at Irish we can see there was definite grade deflation. For each of these subjects, the chart on the left contains four years of data including 2019, when the last “normal” Leaving Certificate occurred. With Irish the grade profile has been adjusted (deflated) significantly and is closer to the 2019 profile than it is to 2021. There has been lots and lots of discussion nationally about how and when grades will revert to the normal profile. The 2022 profile for Irish seems to show this has started to happen in this subject, which raises the question of whether this is occurring in any other subjects and is hidden/masked by the “in-line on aggregate” figures.

This blog post would become just too long if I was to present the results profile for each of the 42+ subjects.

Let’s have a look at two of the most common foreign languages, French and Spanish.

Again we can see some grade deflation, although not to the same extent as Irish. For both French and Spanish, we have reduced numbers for the H2-H4 range and a slight increase for H5-H7, and a shift to the left in the profile. A slight exception is for those getting a H1 in both subjects. The adjustment in the results profile is more pronounced for French, and could indicate some deflation adjustments.

Next we’ll look at some of the science subjects of Physics, Chemistry and Biology.

These three subjects also indicate some adjustments back towards the pre-Covid profile, with the exception of H1 grades. We can see the 2022 profile almost reflects the 2019 profile (excluding H1s), and for Biology it appears to be at a halfway point between 2019 and 2021 (excluding H1s).

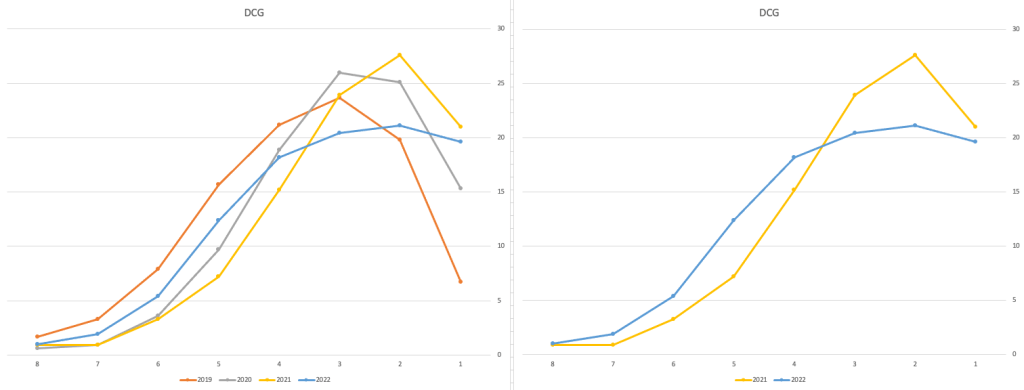

Just one more example of grade deflation, and this is with Design, Communication and Graphics (or DCG).

Yes there is obvious grade deflation and almost back to 2019 profile, with the exception of H1s again.

I’ve mentioned some possible grade deflation in various subjects, but there are also subjects where the profile very closely matches the 2021 profile. We have seen above English is one of those. Others include Technology, Art and Computer Science.

I’ve analyzed many more subjects and a similar shifting of the profile is evident in those. Has the Dept of Education and State Examinations Commission taken steps to start deflating grades from the highs of 2021? I’d say the answer lies in the data, and the data I’ve looked at shows they have started the deflation process. This might take another couple of years to work out of the system before we are back to “normal” pre-Covid profiles. Which raises another interesting question: was the grade profile for subjects, pre-Covid, fitted to the curve? For the core set of subjects and for many of the more popular subjects, the data seems to indicate this. Maybe the “normal” distribution of marks is down to the “normal” distribution of abilities of the student population each year, or have grades been normalised in some way each year, for years, even decades?

For this analysis I’ve used a variety of tools including Excel, Python and Oracle Analytics.

The Dataset used can be found under Dataset menu, and listed as ‘Leaving Certificate 2015-2022’. An additional Dataset, I’ll be adding soon, will be for CAO Points Profiles 2015-2022.
